The program counter, or PC, is a register that holds the address that is presented to the instruction memory. The address is presented to instruction memory at the start of a cycle. Then, during the cycle, the instruction is read out of instruction memory, and at the same time a calculation is done to determine the next PC. The next PC is calculated by incrementing the PC by 4 and then choosing whether to take that value or the result of a branch/jump calculation as the next PC. Note that in classic RISC, all instructions have the same length. (This is one thing that separates RISC from CISC.) In the original RISC designs, the size of an instruction is 4 bytes, so the fetch logic always adds 4 to the instruction address, but does not use PC + 4 for the case of a taken branch, jump, or exception (see delayed branches, below).

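As a rough behavioural sketch (not a hardware description), the next-PC choice described above can be modelled as a two-way selection between PC + 4 and a redirect target. The function name `next_pc`, the `redirect` flag, and the 32-bit wrap of the PC are assumptions for illustration.

```python
MASK32 = 0xFFFFFFFF  # the PC is modelled as a 32-bit register (assumption)


def next_pc(pc: int, redirect: bool, target: int = 0) -> int:
    """Address to present to instruction memory in the next cycle.

    The sequential path always computes pc + 4, because every classic RISC
    instruction is 4 bytes long. A taken branch, jump, or exception
    (signalled here by `redirect`) selects `target` instead.
    """
    sequential = (pc + 4) & MASK32
    return (target & MASK32) if redirect else sequential


if __name__ == "__main__":
    print(hex(next_pc(0x1000, redirect=False)))               # 0x1004
    print(hex(next_pc(0x1000, redirect=True, target=0x2000)))  # 0x2000
```

In hardware the adder for PC + 4 runs every cycle regardless of the outcome. Only the final multiplexer choice depends on the branch/jump result, which is what the conditional expression stands in for.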
In the case of CISC micro-coded instructions, once fetched from the instruction cache, the instruction bits are shifted down the pipeline, where simple combinational logic in each pipeline stage produces control signals for the datapath directly from the instruction bits. In those CISC designs, very little decoding is done in the stage traditionally called the decode stage. A consequence of this lack of decoding is that more instruction bits have to be used to specify what the instruction does, which leaves fewer bits for things like register indices.

Branch Delay Slot: Depending on the design of the delayed branch and the branch conditions, the instruction immediately following the branch instruction may be executed even if the branch is taken. Instead of taking an IPC penalty for some fraction of branches either taken (perhaps 60%) or not taken (perhaps 40%), branch delay slots take an IPC penalty only for those branches into which the compiler could not schedule the branch delay slot. The SPARC, MIPS, and MC88K designers designed a branch delay slot into their ISAs.

A programmer may not want wrapping arithmetic, however. Some architectures (e.g. MIPS) define special addition operations that branch to special locations on overflow, rather than wrapping the result. Software at the target location is responsible for fixing the problem. This special branch is called an exception. Exceptions differ from regular branches in that the target address is not specified by the instruction itself, and the branch decision is dependent on the outcome of the instruction.

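A minimal sketch of the difference between a wrapping add and an add that traps on overflow. Raising a Python exception stands in for the hardware branch to an exception handler. The names `add_wrapping`, `add_trapping`, and `ArithmeticOverflow` are hypothetical, and signed (two's-complement) overflow is assumed, which is the condition MIPS `add` traps on.

```python
MASK32 = 0xFFFFFFFF


class ArithmeticOverflow(Exception):
    """Stands in for the hardware exception taken on overflow."""


def to_signed32(x: int) -> int:
    """Interpret the low 32 bits of x as a two's-complement integer."""
    x &= MASK32
    return x - (1 << 32) if x & 0x80000000 else x


def add_wrapping(a: int, b: int) -> int:
    """Wrapping add: keep only the low 32 bits of the sum."""
    return (a + b) & MASK32


def add_trapping(a: int, b: int) -> int:
    """Signed add that transfers control to a 'handler' (raises) on overflow."""
    total = to_signed32(a) + to_signed32(b)
    if not -(1 << 31) <= total < (1 << 31):
        raise ArithmeticOverflow(
            f"{to_signed32(a)} + {to_signed32(b)} does not fit in 32 bits")
    return total & MASK32


if __name__ == "__main__":
    print(hex(add_wrapping(0x7FFFFFFF, 1)))  # 0x80000000: silently wraps
    try:
        add_trapping(0x7FFFFFFF, 1)
    except ArithmeticOverflow as exc:
        print("exception:", exc)
```

The software fix-up that a real handler would perform is not modelled. The `except` clause only marks where control would land.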
During this stage, both single-cycle and two-cycle instructions write their results into the register file. Note that two different stages are accessing the register file at the same time: the decode stage is reading two source registers while the writeback stage is writing a previous instruction's destination register. On real silicon, this can be a hazard (see below for more on hazards), because one of the source registers being read in decode might be the same as the destination register being written in writeback. When that happens, the same memory cells in the register file are being read and written at the same time, and many implementations of memory cells will not operate correctly under those conditions.

Exceptions are different from branches and jumps, because those other control flow changes are resolved in the decode stage. Exceptions are resolved in the writeback stage. When an exception is detected, the following instructions (earlier in the pipeline) are marked as invalid, and as they flow to the end of the pipe their results are discarded.

The decode stage ended up with quite a lot of hardware: MIPS has the possibility of branching if two registers are equal, so a 32-bit-wide AND tree runs in series after the register file read, making a very long critical path through this stage (which lowers the achievable clock rate). Also, the branch target computation generally required a 16-bit add and a 14-bit incrementer. Resolving the branch in the decode stage made it possible to have just a single-cycle branch mispredict penalty. Since branches were very often taken (and thus mispredicted), it was very important to keep this penalty low.

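The register-equality branch condition can be sketched behaviourally as a per-bit XNOR (1 where the bits match) followed by an AND reduction over all 32 match bits. The reduction is what the AND tree mentioned above computes. The function name and the explicit level-by-level reduction are illustrative assumptions, and real designs may implement the compare differently.

```python
MASK32 = 0xFFFFFFFF


def regs_equal(a: int, b: int) -> bool:
    """Model of a 32-bit equality compare for a branch-if-equal.

    Each bit position is compared with XNOR; the 32 match bits are then
    combined pairwise, one loop iteration per level of a balanced tree of
    2-input AND gates (log2(32) = 5 levels).
    """
    match_bits = ~(a ^ b) & MASK32
    level = [(match_bits >> i) & 1 for i in range(32)]
    while len(level) > 1:
        level = [level[i] & level[i + 1] for i in range(0, len(level), 2)]
    return level[0] == 1
```

The five loop iterations mirror the five gate levels that sit in series after the register file read. That serial depth is why this path lengthens the decode stage.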
There are two strategies to handle the suspend/resume problem. The first is a global stall signal. This signal, when activated, prevents instructions from advancing down the pipeline, generally by gating off the clock to the flip-flops at the start of each stage. The disadvantage of this strategy

If the instruction decoded is a branch or jump, the target address of the branch or jump is computed in parallel with reading the register file. The branch condition is computed in the following cycle (after the register file is read), and if the branch is taken or if the instruction is a jump, the

The most serious drawback to delayed branches is the additional control complexity they entail. If the delay slot instruction takes an exception, the processor has to be restarted on the branch, rather than that next instruction. Exceptions then have essentially two addresses, the exception address

Branch Likely: Always fetch the instruction after the branch from the instruction cache, but only execute it if the branch was taken. The compiler can always fill the branch delay slot on such a branch, and since branches are more often taken than not, such branches have a smaller IPC penalty than

The MIPS machine relied on the compiler to add the NOP instructions in this case, rather than having the circuitry to detect and (more taxingly) stall the first two pipeline stages. Hence the name MIPS: Microprocessor without Interlocked Pipeline Stages. It turned out that the extra NOP instructions added

Decode stage logic compares the registers written by instructions in the execute and access stages of the pipeline to the registers read by the instruction in the decode stage, and causes the multiplexers to select the most recent data. These bypass multiplexers make it possible for the pipeline to

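The comparison logic described above can be sketched as a priority check in which the nearer (younger) producer wins, because it holds the most recent value. All names here are hypothetical. Treating register 0 as hard-wired zero is a MIPS convention assumed for the sketch.

```python
from typing import Optional


def select_operand(src: int, regfile_value: int,
                   ex_dest: Optional[int], ex_value: int,
                   mem_dest: Optional[int], mem_value: int) -> int:
    """Choose the value the bypass multiplexer feeds to the ALU for `src`.

    ex_*  : instruction one stage ahead (its ALU result is latched after EX)
    mem_* : instruction two stages ahead (loaded value or forwarded ALU result)
    A dest of None means that instruction writes no register. Register 0 is
    treated as hard-wired zero and is never bypassed.
    """
    if src != 0 and src == ex_dest:
        return ex_value       # most recent producer: bypass by one stage
    if src != 0 and src == mem_dest:
        return mem_value      # older producer: bypass by two stages
    return regfile_value      # no match: use the register file read port
```

For instance, `select_operand(10, 1, 10, 2, 10, 3)` returns `2`. Two in-flight instructions both target r10, and the one a single stage ahead is younger, so its value must win.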
During this stage, single cycle latency instructions simply have their results forwarded to the next stage. This forwarding ensures that both one and two cycle instructions always write their results in the same stage of the pipeline so that just one write port to the register file can be used, and

At the same time the register file is read, instruction issue logic in this stage determines if the pipeline is ready to execute the instruction in this stage. If not, the issue logic causes both the Instruction Fetch stage and the Decode stage to stall. On a stall cycle, the input flip flops do

Occasionally, either the data or instruction cache does not contain a required datum or instruction. In these cases, the CPU must suspend operation until the cache can be filled with the necessary data, and then must resume execution. The problem of filling the cache with the required data (and

changes to the software visible state in the program order. This in-order commit happens very naturally in the classic RISC pipeline. Most instructions write their results to the register file in the writeback stage, and so those writes automatically happen in program order. Store instructions,

To make it easy (and fast) for the software to fix the problem and restart the program, the CPU must take a precise exception. A precise exception means that all instructions up to the excepting instruction have been executed, and the excepting instruction and everything afterwards have not been

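A toy model of a precise exception follows. The in-flight instructions are listed oldest first. Everything older than the faulting instruction is kept, while the faulting instruction and all younger ones are squashed. Fetch is then redirected to a handler, with the faulting instruction's address saved for restart. The list-based pipeline, the `ExceptionOutcome` record, and the handler address used in the test are assumptions for illustration.

```python
from typing import List, NamedTuple, Tuple


class ExceptionOutcome(NamedTuple):
    committed: List[str]  # older instructions: their results are kept
    squashed: List[str]   # faulting and younger instructions: results discarded
    epc: int              # address of the faulting instruction, for restart
    next_pc: int          # fetch is redirected to the exception handler


def take_precise_exception(in_flight: List[Tuple[int, str]],
                           fault_index: int,
                           handler_pc: int) -> ExceptionOutcome:
    """Resolve an exception precisely.

    `in_flight` holds (pc, mnemonic) pairs, oldest first. Everything before
    `fault_index` completes normally; the faulting instruction and everything
    after it leave no software-visible trace.
    """
    older = in_flight[:fault_index]
    younger = in_flight[fault_index:]
    return ExceptionOutcome(
        committed=[name for _, name in older],
        squashed=[name for _, name in younger],
        epc=in_flight[fault_index][0],
        next_pc=handler_pc,
    )
```

The split point is the only thing that makes the exception precise. If any younger instruction had already changed software-visible state, the handler could not simply restart at `epc`.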
The simplest solution, provided by most architectures, is wrapping arithmetic. Numbers greater than the maximum possible encoded value have their most significant bits chopped off until they fit. In the usual integer number system, 3000000000+3000000000=6000000000. With unsigned 32 bit wrapping

Another strategy to handle suspend/resume is to reuse the exception logic. The machine takes an exception on the offending instruction, and all further instructions are invalidated. When the cache has been filled with the necessary data, the instruction that caused the cache miss restarts. To

Classic RISC pipelines avoided these hazards by replicating hardware. In particular, branch instructions could have used the ALU to compute the target address of the branch. If the ALU were used in the decode stage for that purpose, an ALU instruction followed by a branch would have seen both

Predict Not Taken: Always fetch the instruction after the branch from the instruction cache, but only execute it if the branch is not taken. If the branch is not taken, the pipeline stays full. If the branch is taken, the instruction is flushed (marked as if it were a NOP), and one cycle's

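Predict Not Taken is one of several one-cycle-penalty branch schemes. A back-of-the-envelope comparison assumes each penalised branch costs exactly one cycle:
- Predict Not Taken pays on taken branches.
- Branch Likely pays on not-taken branches.
- A delay slot pays only where the compiler had to insert a NOP.

Everything else that affects IPC is ignored. The function and scheme names are hypothetical, and the 25 unfilled slots in the usage line are a made-up figure.

```python
def lost_cycles(taken: int, not_taken: int, scheme: str, unfilled_slots: int = 0) -> int:
    """Cycles wasted on the instruction fetched right after each branch.

    Assumes every penalised branch costs exactly one cycle, as with branch
    resolution in the decode stage; nothing else affecting IPC is modelled.
    """
    if scheme == "predict_not_taken":
        return taken           # the fall-through instruction is flushed
    if scheme == "branch_likely":
        return not_taken       # the slot instruction runs only if taken
    if scheme == "delay_slot":
        return unfilled_slots  # slots the compiler had to fill with a NOP
    raise ValueError(f"unknown scheme: {scheme}")


if __name__ == "__main__":
    # 100 branches split 60 taken / 40 not taken, the proportions quoted in the
    # text; 25 unfilled delay slots is an illustrative guess.
    for scheme in ("predict_not_taken", "branch_likely", "delay_slot"):
        print(scheme, lost_cycles(60, 40, scheme, unfilled_slots=25))
```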
For multi-cycle operations, the operands were fed to the multi-cycle multiply/divide unit during the execute stage. The rest of the pipeline was free to continue execution while the multiply/divide unit did its work. To avoid complicating the writeback stage and issue logic, multicycle

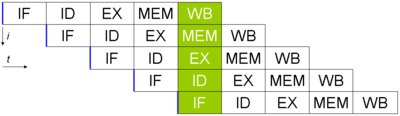

Basic five-stage pipeline in a RISC machine (IF = Instruction Fetch, ID = Instruction Decode, EX = Execute, MEM = Memory access, WB = Register write back). The vertical axis is successive instructions; the horizontal axis is time. So in the green column, the earliest instruction is in the WB stage, and the latest instruction is undergoing instruction fetch.

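The diagram's regularity can be reproduced with one rule. With no stalls, instruction i enters IF in cycle i and advances one stage per cycle, so in cycle c it occupies stage c - i. The sketch below prints such a chart with rows as instructions and columns as cycles. Stalls, bubbles, and the colours of the figure are not modelled.

```python
STAGES = ["IF", "ID", "EX", "MEM", "WB"]


def stage_of(instr: int, cycle: int) -> str:
    """Stage occupied by instruction `instr` (0 = oldest) during `cycle`.

    Returns '' when the instruction has not entered or has already left.
    """
    s = cycle - instr
    return STAGES[s] if 0 <= s < len(STAGES) else ""


def diagram(n_instrs: int, n_cycles: int) -> str:
    """Rows are successive instructions, columns are clock cycles."""
    rows = []
    for i in range(n_instrs):
        rows.append(" ".join(f"{stage_of(i, c):>3}" for c in range(n_cycles)))
    return "\n".join(rows)


if __name__ == "__main__":
    print(diagram(5, 9))
```

In cycle 4 (the fifth column) the five instructions read WB, MEM, EX, ID, IF from top to bottom. That column is the full-pipeline case the caption describes.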
All MIPS, SPARC, and DLX instructions have at most two register inputs. During the decode stage, the indexes of these two registers are identified within the instruction, and the indexes are presented to the register memory, as the address. Thus the two registers named are read from the

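For MIPS specifically, the two source register indices sit at fixed bit positions in the 32-bit instruction word. In the R-type format these are rs in bits 25-21 and rt in bits 20-16, so they can be sent to the register file before the rest of the instruction is fully decoded. The sketch below slices the R-type fields. The `RType` record and `decode_rtype` name are illustrative, and other ISAs (and other MIPS formats) lay their fields out differently.

```python
from typing import NamedTuple


class RType(NamedTuple):
    opcode: int  # bits 31-26
    rs: int      # bits 25-21: first source register index
    rt: int      # bits 20-16: second source register index
    rd: int      # bits 15-11: destination register index
    shamt: int   # bits 10-6: shift amount
    funct: int   # bits 5-0: selects the ALU operation


def decode_rtype(word: int) -> RType:
    """Slice a 32-bit MIPS R-type instruction word into its fields.

    Each register field is 5 bits wide, enough to name one of 32 registers.
    """
    return RType(
        opcode=(word >> 26) & 0x3F,
        rs=(word >> 21) & 0x1F,
        rt=(word >> 16) & 0x1F,
        rd=(word >> 11) & 0x1F,
        shamt=(word >> 6) & 0x1F,
        funct=word & 0x3F,
    )
```

For example, `decode_rtype(0x012A4020)` yields opcode 0, rs 9, rt 10, rd 8, shamt 0, funct 32, which is the encoding of `add $8, $9, $10`.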
The ALU is responsible for performing boolean operations (and, or, not, nand, nor, xor, xnor) and also for performing integer addition and subtraction. Besides the result, the ALU typically provides status bits such as whether or not the result was 0, or if an overflow occurred.

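The ALU's outputs can be sketched as a function returning the result together with the two status bits mentioned above: whether the result was zero, and whether a (signed) overflow occurred. The operation names, the choice of signed overflow, and setting overflow only for add and sub are assumptions of this sketch.

```python
MASK32 = 0xFFFFFFFF
SIGN = 0x80000000


def alu(op: str, a: int, b: int = 0):
    """Return (result, zero, overflow) for one 32-bit ALU operation.

    `overflow` is signed (two's-complement) overflow; it can only be set by
    add and sub. `not` ignores `b`.
    """
    a &= MASK32
    b &= MASK32
    overflow = False
    if op == "add":
        result = (a + b) & MASK32
        # Operands have the same sign but the result's sign differs.
        overflow = bool(~(a ^ b) & (a ^ result) & SIGN)
    elif op == "sub":
        result = (a - b) & MASK32
        # Operands differ in sign and the result's sign differs from a's.
        overflow = bool((a ^ b) & (a ^ result) & SIGN)
    elif op == "and":
        result = a & b
    elif op == "or":
        result = a | b
    elif op == "xor":
        result = a ^ b
    elif op == "nand":
        result = ~(a & b) & MASK32
    elif op == "nor":
        result = ~(a | b) & MASK32
    elif op == "xnor":
        result = ~(a ^ b) & MASK32
    elif op == "not":
        result = ~a & MASK32
    else:
        raise ValueError(f"unsupported op: {op}")
    return result, result == 0, overflow
```

The zero flag is what a compare-by-subtraction would test. The overflow flag is what a trapping add would use to decide whether to take an exception.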
is that there are a large number of flip flops, so the global stall signal takes a long time to propagate. Since the machine generally has to stall in the same cycle that it identifies the condition requiring the stall, the stall signal becomes a speed-limiting critical path.

Branch Prediction: In parallel with fetching each instruction, guess if the instruction is a branch or jump, and if so, guess the target. On the cycle after a branch or jump, fetch the instruction at the guessed target. When the guess is wrong, flush the incorrectly fetched target.

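The text does not say how the guess is made. One common approach, assumed here purely for illustration, is a small branch target buffer indexed by the fetch address. It remembers the target of taken branches and jumps, and otherwise guesses PC + 4. The class name, table size, and direct-mapped indexing are hypothetical choices.

```python
from typing import Dict, Tuple


class BranchTargetBuffer:
    """Toy direct-mapped branch target buffer keyed by fetch address."""

    def __init__(self, entries: int = 16) -> None:
        self.entries = entries
        self.table: Dict[int, Tuple[int, int]] = {}  # index -> (tag pc, target)

    def _index(self, pc: int) -> int:
        return (pc >> 2) % self.entries  # instructions are 4-byte aligned

    def predict(self, pc: int) -> int:
        """Guess the next fetch address while `pc` is being fetched."""
        entry = self.table.get(self._index(pc))
        if entry is not None and entry[0] == pc:
            return entry[1]   # guessed to be a taken branch or jump
        return pc + 4         # guessed not to be a branch

    def update(self, pc: int, taken: bool, target: int) -> None:
        """Train once the branch resolves; a wrong guess means a flush."""
        idx = self._index(pc)
        if taken:
            self.table[idx] = (pc, target)
        elif idx in self.table and self.table[idx][0] == pc:
            del self.table[idx]  # only evict this branch's own entry
```

The full PC is stored as a tag, so two addresses that share an index cannot borrow each other's target. A real design would typically keep only part of the address to save storage.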
by the compiler expanded the program binaries enough that the instruction cache hit rate was reduced. The stall hardware, although expensive, was put back into later designs to improve instruction cache hit rate, at which point the acronym no longer made sense.

execute simple instructions with just the latency of the ALU, the multiplexer, and a flip-flop. Without the multiplexers, the latency of writing and then reading the register file would have to be included in the latency of these instructions.

it is normally written back. The solution to this problem is a pair of bypass multiplexers. These multiplexers sit at the end of the decode stage, and their flopped outputs are the inputs to the ALU. Each multiplexer selects between:

The branch resolution recurrence goes through quite a bit of circuitry: the instruction cache read, register file read, branch condition compute (which involves a 32-bit compare on the MIPS CPUs), and the next instruction address

Register-Register Operation (Single-cycle latency): Add, subtract, compare, and logical operations. During the execute stage, the two arguments were fed to a simple ALU, which generated the result by the end of the execute stage.

since it floats in the pipeline, like an air bubble in a water pipe, occupying resources but not producing useful results. The hardware to detect a data hazard and stall the pipeline until the hazard is cleared is called a pipeline interlock.

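A sketch of the check such an interlock performs in the decode stage follows. It assumes bypassing is present, so only a load immediately followed by an instruction that reads the loaded register needs a stall. A load's data arrives too late to be bypassed into the very next instruction's execute stage. The `Instr` record, the `schedule` helper, and the hard-wired-zero register 0 are assumptions for illustration.

```python
from typing import List, NamedTuple, Optional, Tuple


class Instr(NamedTuple):
    name: str
    dest: Optional[int]      # register written, None if none
    srcs: Tuple[int, ...]    # registers read
    is_load: bool = False


def must_stall(in_decode: Instr, in_execute: Optional[Instr]) -> bool:
    """True if the instruction in decode reads the result of a load in execute."""
    if in_execute is None or not in_execute.is_load or in_execute.dest in (None, 0):
        return False
    return in_execute.dest in in_decode.srcs


def schedule(program: List[Instr]) -> List[str]:
    """Issue order with a 'bubble' (NOP) inserted wherever the interlock stalls."""
    issued: List[str] = []
    prev: Optional[Instr] = None
    for ins in program:
        if must_stall(ins, prev):
            issued.append("bubble")  # fetch/decode hold for one cycle
        issued.append(ins.name)
        prev = ins
    return issued
```

With `ld = Instr("ld", 10, (1,), True)` followed by `Instr("and", 11, (10, 3))`, `schedule` returns `["ld", "bubble", "and"]`. An ALU producer in place of the load issues back to back, because its result can be bypassed.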
however, write their results to the Store Data Queue in the access stage. If the store instruction takes an exception, the Store Data Queue entry is invalidated so that it is not written to the cache data SRAM later.

Compilers typically have some difficulty finding logically independent instructions to place after the branch (the instruction after the branch is called the delay slot), so they must insert NOPs into the delay slots.

The stalled stages are prevented from flopping their inputs and so stay in the same state for a cycle. The execute, access, and write-back stages downstream see an extra no-operation instruction (NOP) inserted between the two dependent instructions.

Memory Reference (Two-cycle latency): All loads from memory. During the execute stage, the ALU added the two arguments (a register and a constant offset) to produce a virtual address by the end of the cycle.

arithmetic, 3000000000+3000000000=1705032704 (6000000000 mod 2^32). This may not seem terribly useful. The largest benefit of wrapping arithmetic is that every operation has a well defined result.

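The arithmetic in this example can be checked directly. Python integers are unbounded, so the 32-bit wrap has to be applied explicitly, here with a modulo by 2**32. That is equivalent to masking with 0xFFFFFFFF for non-negative sums. The helper name is hypothetical.

```python
def wrap_add_u32(a: int, b: int) -> int:
    """Unsigned 32-bit wrapping add: the bits above bit 31 are chopped off."""
    return (a + b) % 2**32


if __name__ == "__main__":
    exact = 3_000_000_000 + 3_000_000_000
    print(exact, wrap_add_u32(3_000_000_000, 3_000_000_000))  # 6000000000 1705032704
```

The wrapped value is 6000000000 - 4294967296 = 1705032704, matching the figure in the text.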
In the history of computer hardware, some early reduced instruction set computer central processing units (RISC CPUs) used a very similar architectural solution, now called a classic RISC pipeline. Those CPUs were: …, and later the notional CPU invented for education.

Each of these classic scalar RISC designs fetches and tries to execute one instruction per cycle. The main common concept of each design is a five-stage execution instruction pipeline. During operation, each pipeline stage works on one instruction at a time. Each of these stages consists of a set of flip-flops to hold state, and combinational logic that operates on the outputs of those flip-flops.

The classic five-stage RISC pipeline

Instruction fetch

The instructions reside in memory that takes one cycle to read. This memory can be dedicated SRAM or an instruction cache. The term "latency" is often used in computer science and means the time from when an operation starts until it completes. Thus, instruction fetch has a latency of one clock cycle (if using single-cycle SRAM or if the instruction was in the cache), and during the Instruction Fetch stage, a 32-bit instruction is fetched from the instruction memory.

Some modern machines use more complicated algorithms, such as branch prediction and branch target prediction, to guess the next instruction address.

Instruction decode

Another thing that separates the first RISC machines from earlier CISC machines is that RISC has no microcode.

In the MIPS design, the register file had 32 entries.

When the issue logic stalls the Instruction Fetch and Decode stages, their input flip-flops do not accept new bits, so no new calculations take place in those stages during that cycle.

When a branch is taken, or the instruction is a jump, the PC in the first stage is assigned the branch target, rather than the incremented PC that has been computed. Some architectures made use of the arithmetic logic unit (ALU) in the Execute stage, at the cost of slightly decreased instruction throughput.

Execute

The Execute stage is where the actual computation occurs. Typically this stage consists of an ALU and a bit shifter. It may also include a multiple-cycle multiplier and divider. The bit shifter is responsible for shifts and rotations.

Instructions on these simple RISC machines can be divided into three latency classes according to the type of the operation: register-register operations (single-cycle latency), memory references (two-cycle latency), and multi-cycle instructions (many-cycle latency).

Multi-cycle Instructions (Many-cycle latency): Integer multiply and divide and all floating-point operations. Multicycle instructions wrote their results to a separate set of registers.

Memory access

If data memory needs to be accessed, it is done in this stage. Because one-cycle and two-cycle instructions write their results in the same pipeline stage, the single register file write port is always available.

For direct mapped and virtually tagged data caching, the simplest by far of the numerous data cache organizations, two SRAMs are used, one storing data and the other storing tags.

Hazards

Hennessy and Patterson coined the term hazard for situations where instructions in a pipeline would produce wrong answers.

Structural hazards

Structural hazards occur when two instructions might attempt to use the same resources at the same time. If branch instructions used the ALU to compute their target address in the decode stage, an ALU instruction followed by a branch would have both instructions attempting to use the ALU simultaneously. It is simple to resolve this conflict by designing a specialized branch target adder into the decode stage.

Data hazards

Data hazards occur when an instruction, scheduled blindly, would attempt to use data before the data is available in the register file.

In the classic RISC pipeline, data hazards are avoided in one of two ways:

Solution A. Bypassing

Bypassing is also known as operand forwarding.

Suppose the CPU is executing a piece of code in which the first instruction writes r3 - r4 to r10 and the second instruction writes r10 & r3 to r11. The instruction fetch and decode stages send the second instruction one cycle after the first, and the two flow down the pipeline one stage apart.

Without hazard consideration, the data hazard progresses as follows. In cycle 3, the first instruction calculates the new value for r10. In the same cycle, the second instruction is decoded, and the value of r10 is fetched from the register file. However, the first instruction has not yet written its result to r10. Write-back of this normally occurs in cycle 5 (green box). Therefore, the value read from the register file and passed to the ALU (in the Execute stage of the second instruction, red box) is incorrect.

Instead, we must pass the data that was computed by the first instruction back to the Execute stage (i.e. to the red circle in the diagram) of the second instruction. Each bypass multiplexer in front of the ALU selects between:

1. A register file read port (i.e. the output of the decode stage, as in the naive pipeline).
2. The current register pipeline of the ALU (to bypass by one stage).
3. The current register pipeline of the access stage (which is either a loaded value or a forwarded ALU result; this provides bypassing of two stages). Note that this requires the data to be passed backwards in time by one cycle. If this occurs, a bubble must be inserted to stall the dependent operation until the data is ready.

Note that the data can only be passed forward in time: the data cannot be bypassed back to an earlier stage if it has not been processed yet. In the case above, the data is passed forward (by the time the second instruction is ready for the register in the ALU, the first instruction has already computed it).

Solution B. Pipeline interlock

However, consider a load instruction whose result is used by the very next instruction. The data read from the load's address is not present in the data cache until after the Memory Access stage of the load. By this time, the dependent instruction is already through the ALU. To resolve this would require the data from memory to be passed backwards in time to the input to the ALU. This is not possible. The solution is to delay the dependent instruction by one cycle. The data hazard is detected in the decode stage, and the fetch and decode stages are stalled. The inserted NOP is termed a pipeline bubble, and the hardware that detects the hazard and stalls the pipeline is called a pipeline interlock.

(Figure: Bypassing backwards in time.)

(Figure: Problem resolved using a bubble.)

A pipeline interlock does not have to be used with any data forwarding, however. Both of the examples above can be solved by stalling the first stage by three cycles until write-back is achieved and the data in the register file is correct, so that the dependent instruction's Decode stage fetches the correct register value. This causes quite a performance hit, as the processor spends a lot of time processing nothing, but clock speeds can be increased as there is less forwarding logic to wait for.

This data hazard can be detected quite easily when the program's machine code is written by the compiler.

Control hazards

Control hazards are caused by conditional and unconditional branching. The classic RISC pipeline resolves branches in the decode stage, which means the branch resolution recurrence is two cycles long. There are three implications, including:

1. Because branch and jump targets are calculated in parallel to the register read, RISC ISAs typically do not have instructions that branch to a register+offset address. Jump to register is supported.
2. On any taken branch, the instruction immediately after the branch is always fetched from the instruction cache. If this instruction is ignored, there is a one-cycle-per-taken-branch IPC penalty, which is adequately large.

There are four schemes to solve this performance problem with branches: Predict Not Taken, Branch Likely, Branch Delay Slot, and Branch Prediction. With Predict Not Taken, each taken branch costs one cycle's opportunity to finish an instruction. Because branches are more often taken than not, Branch Likely branches have a smaller IPC penalty than Predict Not Taken branches.

Delayed branches were controversial, first, because their semantics are complicated. A delayed branch specifies that the jump to a new location happens after the next instruction. That next instruction is the one unavoidably loaded by the instruction cache after the branch.

Delayed branches have been criticized as a poor short-term choice in ISA design. Superscalar processors, which fetch multiple instructions per cycle and must have some form of branch prediction, do not benefit from delayed branches, and at least one ISA left out delayed branches because it was intended for superscalar processors. In addition, when a delay slot instruction takes an exception, the exception has two addresses, the exception address and the restart address, and generating and distinguishing between the two correctly in all cases has been a source of bugs for later designs.

Exceptions

Suppose a 32-bit RISC processes an ADD instruction that adds two large numbers, and the result does not fit in 32 bits. A programmer, especially one using a language that supports large integers, may not want wrapping arithmetic in that case.

The most common kind of software-visible exception on one of the classic RISC machines is a …

When an exception is taken, the results of the invalidated instructions are discarded at the end of the pipe. The program counter is set to the address of a special exception handler, and special registers are written with the exception location and cause.

To take precise exceptions, the CPU must commit changes to the software-visible state in program order.

Cache miss handling

The problem of filling the cache with the required data (and potentially writing back to memory the evicted cache line) is not specific to the pipeline organization, and is not discussed here.

To expedite data cache miss handling, the instruction can be restarted so that its access cycle happens one cycle after the data cache is filled.

998:

997:

996:

991:

986:

981:

976:

971:

961:

956:

950:

948:

942:

941:

934:

933:

926:

919:

911:

904:

903:

898:978-0123838728

897:

881:

878:

877:

862:

846:

845:

843:

840:

839:

838:

831:

828:

814:

811:

774:large integers

762:

759:

758:

757:

753:

743:

728:

727:

721:

715:

711:

703:

702:

695:

692:

680:

677:

643:

642:

635:

627:

626:

621:

598:instructions.

536:

530:

527:

500:

499:

480:

473:

415:naive pipeline

357:

344:

341:

331:

328:

319:

316:

305:

302:

296:

293:

273:

270:

269:

268:

264:floating-point

257:

253:

234:

231:

198:

195:

159:

156:

149:machine (IF =

138:

135:

79:

78:

33:

31:

24:

15:

9:

6:

4:

3:

2:

2877:

2866:

2863:

2861:

2858:

2857:

2855:

2840:

2837:

2835:

2832:

2830:

2827:

2825:

2822:

2820:

2817:

2815:

2812:

2810:

2807:

2805:

2802:

2800:

2797:

2796:

2794:

2790:

2783:

2780:

2778:

2775:

2773:

2770:

2768:

2765:

2763:

2760:

2758:

2755:

2753:

2750:

2749:

2747:

2745:

2739:

2729:

2726:

2724:

2721:

2719:

2716:

2714:

2711:

2709:

2706:

2702:

2699:

2697:

2694:

2692:

2689:

2688:

2687:

2684:

2683:

2681:

2679:

2675:

2669:

2666:

2662:

2659:

2657:

2654:

2653:

2652:

2649:

2645:

2642:

2641:

2640:

2637:

2635:

2632:

2630:

2629:Demultiplexer

2627:

2625:

2622:

2621:

2619:

2617:

2613:

2607:

2604:

2602:

2599:

2596:

2594:

2591:

2589:

2586:

2584:

2581:

2579:

2576:

2575:

2573:

2571:

2567:

2561:

2558:

2556:

2553:

2551:

2550:Memory buffer

2548:

2546:

2545:Register file

2543:

2541:

2538:

2536:

2533:

2531:

2528:

2527:

2525:

2523:

2519:

2511:

2508:

2506:

2503:

2502:

2501:

2498:

2496:

2493:

2491:

2488:

2486:

2485:Combinational

2483:

2482:

2480:

2478:

2474:

2468:

2465:

2461:

2458:

2457:

2455:

2452:

2450:

2447:

2445:

2442:

2437:

2434:

2432:

2429:

2428:

2426:

2423:

2420:

2417:

2414:

2411:

2408:

2405:

2404:

2402:

2400:

2394:

2388:

2385:

2383:

2380:

2378:

2375:

2373:

2370:

2366:

2363:

2361:

2358:

2356:

2353:

2351:

2348:

2346:

2343:

2341:

2338:

2337:

2336:

2333:

2331:

2328:

2327:

2325:

2321:

2315:

2312:

2310:

2307:

2305:

2302:

2300:

2297:

2296:

2294:

2290:

2282:

2279:

2278:

2277:

2274:

2272:

2269:

2267:

2264:

2262:

2259:

2257:

2254:

2252:

2249:

2247:

2244:

2242:

2239:

2237:

2234:

2232:

2229:

2227:

2224:

2222:

2219:

2217:

2214:

2212:

2209:

2207:

2204:

2203:

2201:

2199:

2195:

2185:

2182:

2180:

2177:

2175:

2172:

2169:

2166:

2163:

2160:

2157:

2154:

2151:

2148:

2146:

2143:

2140:

2137:

2135:

2132:

2130:

2127:

2126:

2124:

2122:

2116:

2109:

2106:

2104:

2101:

2098:

2095:

2092:

2089:

2088:

2086:

2080:

2074:

2071:

2069:

2066:

2064:

2061:

2059:

2056:

2054:

2051:

2049:

2046:

2044:

2041:

2040:

2038:

2034:

2027:

2024:

2021:

2018:

2015:

2012:

2010:

2007:

2005:

2002:

2000:

1997:

1995:

1992:

1990:

1987:

1985:

1982:

1980:

1977:

1975:

1972:

1970:

1967:

1965:

1962:

1958:

1955:

1954:

1952:

1949:

1946:

1943:

1942:

1940:

1938:

1934:

1928:

1925:

1923:

1920:

1917:

1914:

1911:

1908:

1905:

1902:

1899:

1896:

1893:

1890:

1885:

1882:

1881:

1879:

1876:

1874:

1871:

1870:

1868:

1866:

1860:

1848:

1845:

1844:

1843:

1840:

1838:

1835:

1831:

1828:

1826:

1823:

1821:

1818:

1816:

1813:

1812:

1811:

1808:

1806:

1803:

1802:

1800:

1798:

1794:

1788:

1785:

1783:

1780:

1778:

1775:

1771:

1768:

1766:

1763:

1762:

1761:

1758:

1756:

1753:

1752:

1750:

1748:

1744:

1738:

1735:

1733:

1730:

1726:

1723:

1722:

1721:

1718:

1714:

1711:

1709:

1706:

1705:

1704:

1701:

1697:

1694:

1692:

1689:

1688:

1687:

1684:

1682:

1679:

1675:

1672:

1670:

1667:

1666:

1665:

1662:

1661:

1659:

1655:

1652:

1650:

1646:

1636:

1633:

1631:

1628:

1627:

1625:

1623:

1619:

1613:

1610:

1608:

1605:

1601:

1598:

1596:

1593:

1592:

1591:

1588:

1586:

1585:Scoreboarding

1583:

1582:

1580:

1578:

1574:

1568:

1567:False sharing

1565:

1563:

1560:

1558:

1555:

1553:

1550:

1549:

1547:

1545:

1541:

1535:

1532:

1530:

1527:

1525:

1522:

1521:

1519:

1517:

1513:

1510:

1508:

1504:

1494:

1491:

1489:

1486:

1484:

1481:

1478:

1474:

1471:

1469:

1466:

1464:

1461:

1459:

1456:

1455:

1453:

1451:

1448:

1446:

1443:

1441:

1438:

1436:

1433:

1431:

1428:

1426:

1423:

1421:

1418:

1416:

1413:

1411:

1408:

1406:

1403:

1401:

1398:

1396:

1393:

1389:

1386:

1384:

1381:

1379:

1376:

1375:

1373:

1371:

1368:

1366:

1363:

1361:

1360:Stanford MIPS

1358:

1356:

1353:

1351:

1348:

1346:

1343:

1341:

1338:

1336:

1333:

1332:

1330:

1324:

1316:

1313:

1312:

1311:

1308:

1306:

1303:

1301:

1298:

1296:

1293:

1291:

1288:

1286:

1283:

1281:

1278:

1274:

1271:

1270:

1269:

1266:

1262:

1259:

1258:

1257:

1254:

1252:

1249:

1247:

1244:

1242:

1239:

1237:

1234:

1233:

1231:

1227:

1224:

1222:

1221:architectures

1216:

1210:

1207:

1205:

1202:

1200:

1197:

1195:

1192:

1190:

1189:Heterogeneous

1187:

1183:

1180:

1178:

1175:

1174:

1173:

1170:

1168:

1165:

1161:

1158:

1156:

1153:

1151:

1148:

1146:

1143:

1142:

1141:

1140:Memory access

1138:

1136:

1133:

1131:

1128:

1126:

1123:

1121:

1118:

1114:

1111:

1110:

1109:

1106:

1104:

1101:

1099:

1096:

1095:

1093:

1091:

1087:

1079:

1076:

1074:

1073:Random-access

1071:

1069:

1066:

1064:

1061:

1060:

1059:

1056:

1054:

1053:Stack machine

1051:

1049:

1046:

1042:

1039:

1037:

1034:

1032:

1029:

1027:

1024:

1022:

1019:

1017:

1014:

1012:

1009:

1007:

1004:

1003:

1002:

999:

995:

992:

990:

987:

985:

982:

980:

977:

975:

972:

970:

969:with datapath

967:

966:

965:

962:

960:

957:

955:

952:

951:

949:

947:

943:

939:

932:

927:

925:

920:

918:

913:

912:

909:

900:

894:

890:

889:

883:

882:

873:

866:

858:

851:

847:

837:

834:

833:

827:

823:

819:

810:

807:

802:

798:

794:

792:

791:

785:

783:

779:

775:

770:

766:

754:

751:

747:

744:

740:

739:

738:

735:

733:

725:

722:

719:

716:

712:

708:

707:

706:

700:

696:

693:

689:

688:

687:

685:

676:

673:

672:Stanford MIPS

668:

640:

636:

633:

629:

628:

625:

622:

620:

617:

616:

613:

611:

606:

605:

599:

589:

534:

523:

519:

509:

504:

493:

489:

481:

474:

467:

466:

465:

462:

449:

418:

416:

408:

404:

355:

352:

350:

340:

337:

336:

327:

324:

315:

313:

312:

301:

292:

290:

286:

281:

277:

272:Memory access

265:

261:

258:

254:

250:

249:

248:

245:

242:

238:

230:

226:

224:

218:

214:

212:

211:register file

206:

204:

194:

192:

188:

184:

180:

175:

173:

169:

165:

152:

148:

143:

134:

132:

128:

124:

120:

115:

113:

109:

105:

101:

97:

93:

90:

87:, some early

86:

75:

72:

64:

61:December 2012

54:

50:

44:

43:

37:

32:

23:

22:

19:

2839:Chip carrier

2777:Clock gating

2696:Mixed-signal

2593:Write buffer

2570:Control unit

2382:Clock signal

2121:accelerators

2103:Cypress PSoC

1760:Simultaneous

1577:Out-of-order

1533:

1209:Neuromorphic

1090:Architecture

1048:Belt machine

1041:Zeno machine

974:Hierarchical

887:

865:

850:

824:

820:

816:

805:

803:

799:

795:

789:

786:

771:

767:

764:

736:

731:

729:

704:

691:multiplexer.

683:

682:

669:

659:followed by

651:followed by

646:

623:

618:

609:

602:

600:

587:

569:

532:

507:

505:

501:

487:

460:

450:

419:

414:

412:

402:

353:

346:

338:

334:

333:

330:Data hazards

322:

321:

310:

307:

298:

282:

278:

275:

246:

243:

239:

236:

227:

219:

215:

207:

200:

182:

176:

161:

116:

95:

82:

67:

58:

39:

18:

2624:Multiplexer

2588:Data buffer

2299:Single-core

2271:bit slicing

2129:Coprocessor

1984:Coprocessor

1865:performance

1787:Cooperative

1777:Speculative

1737:Distributed

1696:Superscalar

1681:Instruction

1649:Parallelism

1622:Speculative

1454:System/3x0

1326:Instruction

1103:Von Neumann

1016:Post–Turing

746:Superscalar

168:clock cycle

106:, Motorola

53:introducing

2854:Categories

2744:management

2639:Multiplier

2500:Logic gate

2490:Sequential

2397:Functional

2377:Clock rate

2350:Data cache

2323:Components

2304:Multi-core

2292:Core count

1782:Preemptive

1686:Pipelining

1669:Bit-serial

1612:Wide-issue

1557:Structural

1479:Tilera ISA

1445:MicroBlaze

1415:ETRAX CRIS

1310:Comparison

1155:Load–store

1135:Endianness

842:References

801:executed.

761:Exceptions

459:operation

127:flip-flops

36:references

2678:Circuitry

2598:Microcode

2522:Registers

2365:coherence

2340:CPU cache

2198:Word size

1863:Processor

1507:Execution

1410:DEC Alpha

1388:Power ISA

1204:Cognitive

1011:Universal

488:backwards

295:Writeback

203:microcode

2616:Datapath

2309:Manycore

2281:variable

2119:Hardware

1755:Temporal

1435:OpenRISC

1130:Cellular

1120:Dataflow

1113:modified

830:See also

790:TLB miss

2792:Related

2723:Quantum

2713:Digital

2708:Boolean

2606:Counter

2505:Quantum

2266:512-bit

2261:256-bit

2256:128-bit

2099:(MPSoC)

2084:on chip

2082:Systems

1900:(FLOPS)

1713:Process

1562:Control

1544:Hazards

1430:Itanium

1425:Unicore

1383:PowerPC

1108:Harvard

1068:Pointer

1063:Counter

1021:Quantum

588:stalled

508:forward

304:Hazards

233:Execute

83:In the

49:improve

2728:Switch

2718:Analog

2456:(IMC)

2427:(MMU)

2276:others

2251:64-bit

2246:48-bit

2241:32-bit

2236:24-bit

2231:16-bit

2226:15-bit

2221:12-bit

2058:Mobile

1974:Stream

1969:Barrel

1964:Vector

1953:(GPU)

1912:(SUPS)

1880:(IPC)

1732:Memory

1725:Vector

1708:Thread

1691:Scalar

1493:Others

1440:RISC-V

1405:SuperH

1374:Power

1370:MIPS-X

1345:PDP-11

1194:Fabric

946:Models

895:

806:commit

782:Scheme

776:(e.g.

742:slots.

604:bubble

492:bubble

484:purple

461:before

311:hazard

287:, two

256:cycle.

252:stage.

38:, but

2784:(PPW)

2742:Power

2634:Adder

2510:Array

2477:Logic

2438:(TLB)

2421:(FPU)

2415:(AGU)

2409:(ALU)

2399:units

2335:Cache

2216:8-bit

2211:4-bit

2206:1-bit

2170:(TPU)

2164:(DSP)

2158:(PPU)

2152:(VPU)

2141:(GPU)

2110:(NoC)

2093:(SoC)

2028:(PoP)

2022:(SiP)

2016:(MCM)

1957:GPGPU

1947:(CPU)

1937:Types

1918:(PPW)

1906:(TPS)

1894:(IPS)

1886:(CPI)

1657:Level

1468:S/390

1463:S/370

1458:S/360

1400:SPARC

1378:POWER

1261:TRIPS

1229:Types

750:Alpha

732:after

562:->

544:->

479:arrow

472:arrow

413:In a

392:->

371:->

289:SRAMs

164:Cache

108:88000

104:SPARC

2762:ACPI

2495:Glue

2387:FIFO

2330:Core

2068:ASIP

2009:CPLD

2004:FPOA

1999:FPGA

1994:ASIC

1847:SPMD

1842:MIMD

1837:MISD

1830:SWAR

1810:SIMD

1805:SISD

1720:Data

1703:Task

1674:Word

1420:M32R

1365:MIPS

1328:sets

1295:ZISC

1290:NISC

1285:OISC

1280:MISC

1273:EPIC

1268:VLIW

1256:EDGE

1246:RISC

1241:CISC

1150:HUMA

1145:NUMA

893:ISBN

778:Lisp

594:and

477:blue

189:and

177:The

147:RISC

100:MIPS

2757:APM

2752:PMU

2644:CPU

2601:ROM

2372:Bus

1989:PAL

1664:Bit

1450:LMC

1355:ARM

1350:x86

1340:VAX

780:or

699:IPC

665:AND

661:AND

653:AND

649:SUB

596:AND

584:AND

580:AND

572:adr

565:r11

553:r10

550:AND

547:r10

541:adr

516:SUB

512:AND

496:AND

470:red

457:AND

453:SUB

446:AND

442:r10

438:SUB

434:r10

430:AND

426:r10

422:SUB

395:r11

383:r10

380:AND

374:r10

359:SUB

112:DLX

2856::

2691:3D

793:.

657:LD

612:.

592:LD

576:LD

559:r3

538:LD

389:r3

368:r4

362:r3

351:.

102:,

930:e

923:t

916:v

901:.

556:,

386:,

365:,

74:)

68:(

63:)

59:(

45:.

Text is available under the Creative Commons Attribution-ShareAlike License. Additional terms may apply.