5774:{\displaystyle {\begin{bmatrix}\gamma _{1}\\\gamma _{2}\\\gamma _{3}\\\vdots \\\gamma _{p}\\\end{bmatrix}}={\begin{bmatrix}\gamma _{0}&\gamma _{-1}&\gamma _{-2}&\cdots \\\gamma _{1}&\gamma _{0}&\gamma _{-1}&\cdots \\\gamma _{2}&\gamma _{1}&\gamma _{0}&\cdots \\\vdots &\vdots &\vdots &\ddots \\\gamma _{p-1}&\gamma _{p-2}&\gamma _{p-3}&\cdots \\\end{bmatrix}}{\begin{bmatrix}\varphi _{1}\\\varphi _{2}\\\varphi _{3}\\\vdots \\\varphi _{p}\\\end{bmatrix}}}

5110:. There is a direct correspondence between these parameters and the covariance function of the process, and this correspondence can be inverted to determine the parameters from the autocorrelation function (which is itself obtained from the covariances). This is done using the Yule–Walker equations.
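As a rough illustration of inverting this correspondence, the sketch below estimates AR coefficients from sample autocovariances by solving the Yule–Walker system with NumPy. The function name `yule_walker`, the biased autocovariance estimator, and the simulated AR(2) series are assumptions made for this example and do not come from the text above.

```python
import numpy as np

def yule_walker(x, p):
    """Estimate AR(p) coefficients and noise variance from a 1-D series."""
    x = np.asarray(x, dtype=float)
    x = x - x.mean()
    n = len(x)
    # Biased sample autocovariances gamma_0 .. gamma_p
    gamma = np.array([x[: n - k] @ x[k:] / n for k in range(p + 1)])
    # Symmetric Toeplitz matrix with entries gamma_{|i-j|}
    R = np.array([[gamma[abs(i - j)] for j in range(p)] for i in range(p)])
    phi = np.linalg.solve(R, gamma[1:])
    # The m = 0 equation gives the noise variance
    sigma2 = gamma[0] - phi @ gamma[1:]
    return phi, sigma2

# Hypothetical test series: AR(2) with coefficients 0.9 and -0.8, unit noise variance
rng = np.random.default_rng(0)
true_phi = np.array([0.9, -0.8])
n = 20000
eps = rng.standard_normal(n)
x = np.zeros(n)
for t in range(2, n):
    x[t] = true_phi[0] * x[t - 1] + true_phi[1] * x[t - 2] + eps[t]

phi_hat, sigma2_hat = yule_walker(x, 2)
print(phi_hat, sigma2_hat)
```

With a long series the estimates should land close to the coefficients used in the simulation; shorter series give noisier results.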

9050:

one period prior to the one now being forecast is not known, so its expected value—the predicted value arising from the previous forecasting step—is used instead. Then for future periods the same procedure is used, each time using one more forecast value on the right side of the predictive equation

8511:

The process is non-stationary when the poles are on or outside the unit circle, or equivalently when the characteristic roots are on or inside the unit circle. The process is stable when the poles are strictly within the unit circle (roots strictly outside the unit circle), or equivalently when the

6763:

Estimation of autocovariances or autocorrelations. Here each of these terms is estimated separately, using conventional estimates. There are different ways of doing this and the choice between these affects the properties of the estimation scheme. For example, negative estimates of the variance can

6932:

values in the series; in the second, the likelihood function considered is that corresponding to the unconditional joint distribution of all the values in the observed series. Substantial differences in the results of these approaches can occur if the observed series is short, or if the process is

6792:

Formulation as an extended form of ordinary least squares prediction problem. Here two sets of prediction equations are combined into a single estimation scheme and a single set of normal equations. One set is the set of forward-prediction equations and the other is a corresponding set of backward

6915:

future values of the same series. This way of estimating the AR parameters is due to John Parker Burg, and is called the Burg method: Burg and later authors called these particular estimates "maximum entropy estimates", but the reasoning behind this applies to the use of any set of estimated AR

There are four sources of uncertainty regarding predictions obtained in this manner: (1) uncertainty as to whether the autoregressive model is the correct model; (2) uncertainty about the accuracy of the forecasted values that are used as lagged values in the right side of the autoregressive

125:(ARIMA) models of time series, which have a more complicated stochastic structure; it is also a special case of the vector autoregressive model (VAR), which consists of a system of more than one interlocking stochastic difference equation in more than one evolving random variable.

6928:. Two distinct variants of maximum likelihood are available: in one (broadly equivalent to the forward prediction least squares scheme) the likelihood function considered is that corresponding to the conditional distribution of later values in the series given the initial

7945:

104:

is a representation of a type of random process; as such, it can be used to describe certain time-varying processes in nature, economics, behavior, etc. The autoregressive model specifies that the output variable depends linearly on its own previous values and on a

parameters. Compared to the estimation scheme using only the forward prediction equations, different estimates of the autocovariances are produced, and the estimates have different stability properties. Burg estimates are particularly associated with

3101:{\displaystyle \Phi (\omega )={\frac {1}{\sqrt {2\pi }}}\,\sum _{n=-\infty }^{\infty }B_{n}e^{-i\omega n}={\frac {1}{\sqrt {2\pi }}}\,\left({\frac {\sigma _{\varepsilon }^{2}}{1+\varphi ^{2}-2\varphi \cos(\omega )}}\right).}

for this problem can be seen to correspond to an approximation of the matrix form of the Yule–Walker equations in which each appearance of an autocovariance of the same lag is replaced by a slightly different

1709:

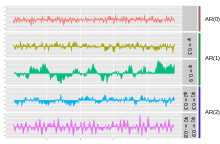

The simplest AR process is AR(0), which has no dependence between the terms. Only the error/innovation/noise term contributes to the output of the process, so in the figure, AR(0) corresponds to white noise.
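A minimal simulation sketch may make this concrete. The helper `simulate_ar`, its burn-in length, and the Gaussian noise are assumptions for illustration only; an empty coefficient list stands for AR(0), in which case the output is just the noise sequence.

```python
import numpy as np

def simulate_ar(phi, n, sigma=1.0, burn_in=500, seed=0):
    """Simulate n values of an AR(p) process with coefficients phi."""
    rng = np.random.default_rng(seed)
    phi = np.asarray(phi, dtype=float)
    p = len(phi)
    eps = rng.normal(0.0, sigma, size=n + burn_in)
    x = np.zeros(n + burn_in)
    for t in range(n + burn_in):
        # Weighted sum of the available previous values
        for i in range(min(p, t)):
            x[t] += phi[i] * x[t - 1 - i]
        # Plus the innovation (noise) term
        x[t] += eps[t]
    # Drop the burn-in so the start-up transient is not returned
    return x[burn_in:]

white = simulate_ar([], 5000)          # AR(0): white noise
smooth = simulate_ar([0.9], 50000)     # AR(1) with parameter 0.9
wavy = simulate_ar([0.9, -0.8], 5000)  # AR(2) with parameters 0.9 and -0.8
```

Plotting `white`, `smooth`, and `wavy` side by side is one way to see how the lagged terms add structure on top of the noise.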

1493:

7487:

becomes nearer 1, there is stronger power at low frequencies, i.e. larger time lags. The process then acts as a low-pass filter: applied to full-spectrum light, it would pass only the red light and filter out everything else.

to equal its expected value, and the expected value of the unobserved error term is zero). The output of the autoregressive equation is the forecast for the first unobserved period. Next, use

The partial autocorrelation of an AR(p) process equals zero at lags larger than p, so the appropriate maximum lag p is the one after which the partial autocorrelations are all zero.
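The cutoff can be checked numerically. In the sketch below, the partial autocorrelation at lag k is taken as the last coefficient of an AR(k) Yule–Walker fit, which is one standard way to compute it; the helper names and the simulated AR(2) series are assumptions for this example.

```python
import numpy as np

def sample_acf(x, max_lag):
    """Sample autocorrelations rho_0 .. rho_max_lag (biased estimator)."""
    x = np.asarray(x, dtype=float)
    x = x - x.mean()
    n = len(x)
    gamma = np.array([x[: n - k] @ x[k:] / n for k in range(max_lag + 1)])
    return gamma / gamma[0]

def pacf_from_acf(rho):
    """Partial autocorrelation at lag k = last coefficient of the AR(k)
    Yule-Walker solution built from rho_0 .. rho_k."""
    max_lag = len(rho) - 1
    pacf = np.ones(max_lag + 1)
    for k in range(1, max_lag + 1):
        R = np.array([[rho[abs(i - j)] for j in range(k)] for i in range(k)])
        pacf[k] = np.linalg.solve(R, rho[1 : k + 1])[-1]
    return pacf

# Hypothetical AR(2) series with coefficients 0.3 and 0.3
rng = np.random.default_rng(1)
n = 20000
eps = rng.standard_normal(n)
x = np.zeros(n)
for t in range(2, n):
    x[t] = 0.3 * x[t - 1] + 0.3 * x[t - 2] + eps[t]

pacf = pacf_from_acf(sample_acf(x, 6))
print(np.round(pacf, 3))
```

For this series the values at lags 1 and 2 should be clearly non-zero, while those at lags 3 and above should be close to zero up to sampling noise.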

4223:

1888:

is negative, then the process favors changes in sign between terms of the process. The output oscillates. This can be likened to edge detection or detection of change in direction.

approaches 1, the output gets a larger contribution from the previous term relative to the noise. This results in a "smoothing" or integration of the output, similar to a

term (an imperfectly predictable term); thus the model is in the form of a stochastic difference equation (or recurrence relation) which should not be confused with a

4456:

4197:. It is therefore sometimes useful to understand the properties of the AR(1) model cast in an equivalent form. In this form, the AR(1) model, with process parameter

3438:{\displaystyle \Phi (\omega )={\frac {1}{\sqrt {2\pi }}}\,{\frac {\sigma _{\varepsilon }^{2}}{1-\varphi ^{2}}}\,{\frac {\gamma }{\pi (\gamma ^{2}+\omega ^{2})}}}

depends on time lag t, so that the variance of the series diverges to infinity as t goes to infinity, and is therefore not weak sense stationary.) Assuming

8764:{\displaystyle S(f)={\frac {\sigma _{Z}^{2}}{1+\varphi _{1}^{2}+\varphi _{2}^{2}-2\varphi _{1}(1-\varphi _{2})\cos(2\pi f)-2\varphi _{2}\cos(4\pi f)}}}

7412:{\displaystyle S(f)={\frac {\sigma _{Z}^{2}}{|1-\varphi _{1}e^{-2\pi if}|^{2}}}={\frac {\sigma _{Z}^{2}}{1+\varphi _{1}^{2}-2\varphi _{1}\cos 2\pi f}}}

In an AR process, a one-time shock affects values of the evolving variable infinitely far into the future. For example, consider the AR(1) model

3782:

1705:

AR(0); AR(1) with AR parameter 0.3; AR(1) with AR parameter 0.9; AR(2) with AR parameters 0.3 and 0.3; and AR(2) with AR parameters 0.9 and −0.8

period for which data is not yet available; again the autoregressive equation is used to make the forecast, with one difference: the value of

9451:(Edited by D. G. Childers), NATO Advanced Study Institute of Signal Processing with emphasis on Underwater Acoustics. IEEE Press, New York.

7632:

9462:

9063:

equation; (3) uncertainty about the true values of the autoregressive coefficients; and (4) uncertainty about the value of the error term

2856:{\displaystyle B_{n}=\operatorname {E} (X_{t+n}X_{t})-\mu ^{2}={\frac {\sigma _{\varepsilon }^{2}}{1-\varphi ^{2}}}\,\,\varphi ^{|n|}.}

2534:{\displaystyle {\textrm {var}}(X_{t})=\operatorname {E} (X_{t}^{2})-\mu ^{2}={\frac {\sigma _{\varepsilon }^{2}}{1-\varphi ^{2}}},}

6759:) model, by replacing the theoretical covariances with estimated values. Some of these variants can be described as follows:

1253:(where the constant term has been suppressed by assuming that the variable has been measured as deviations from its mean) as

9413:

128:

Contrary to the moving-average (MA) model, the autoregressive model is not always stationary as it may contain a unit root.
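One way to test a given coefficient set is to compute the roots of the polynomial 1 − φ_1 z − … − φ_p z^p and check that they all lie outside the unit circle, which is the weak-sense stationarity condition for an AR(p) model. The function name `is_stationary` is an assumption for this sketch.

```python
import numpy as np

def is_stationary(phi):
    """True if all roots of 1 - phi_1 z - ... - phi_p z^p have modulus > 1."""
    phi = np.asarray(phi, dtype=float)
    if phi.size == 0:
        return True  # AR(0) is white noise
    # np.roots expects coefficients from the highest power down to the constant
    coeffs = np.concatenate((-phi[::-1], [1.0]))
    roots = np.roots(coeffs)
    return bool(np.all(np.abs(roots) > 1.0))

print(is_stationary([0.5]))        # AR(1) with |phi_1| < 1
print(is_stationary([1.1]))        # AR(1) with |phi_1| > 1
print(is_stationary([0.9, -0.8]))  # AR(2) inside the stationarity triangle
print(is_stationary([0.6, 0.5]))   # AR(2) with phi_1 + phi_2 > 1
```

A unit-root process such as φ_1 = 1 sits exactly on the boundary, where a floating-point comparison is fragile, so in practice such cases are better handled with a tolerance or a dedicated unit-root test.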

{\displaystyle z_{1},z_{2}={\frac {1}{2}}\left(\varphi _{1}\pm {\sqrt {\varphi _{1}^{2}+4\varphi _{2}}}\right)}

6966:

8981:

have been estimated, the autoregression can be used to forecast an arbitrary number of periods into the future. First use

7550:

6736:{\displaystyle \rho _{2}=\gamma _{2}/\gamma _{0}={\frac {\varphi _{1}^{2}-\varphi _{2}^{2}+\varphi _{2}}{1-\varphi _{2}}}}

are called autoregressive, but they are not a classical autoregressive model in this sense because they are not linear.

{\displaystyle X_{t+n}=\theta ^{n}X_{t}+(1-\theta ^{n})\mu +\sum _{i=1}^{n}\left(\theta ^{n-i}\epsilon _{t+i}\right)}

8869:. Since the AR model is a special case of the vector autoregressive model, the computation of the impulse response in

9298:"On a Method of Investigating Periodicities in Disturbed Series, with Special Reference to Wolfer's Sunspot Numbers"

1194:

is affected by shocks occurring infinitely far into the past. This can also be seen by rewriting the autoregression

2331:{\displaystyle \operatorname {E} (X_{t})=\varphi \operatorname {E} (X_{t-1})+\operatorname {E} (\varepsilon _{t}),}

6092:

of the autocorrelation function. The full autocorrelation function can then be derived by recursively calculating

1834:

are positive, the output will resemble a low pass filter, with the high frequency part of the noise decreased. If

8240:{\displaystyle f^{*}={\frac {1}{2\pi }}\cos ^{-1}\left({\frac {\varphi _{1}}{2{\sqrt {-\varphi _{2}}}}}\right),}

problem in which an ordinary least squares prediction problem is constructed, basing prediction of values of

6755:

The above equations (the Yule–Walker equations) provide several routes to estimating the parameters of an AR(

5236:{\displaystyle \gamma _{m}=\sum _{k=1}^{p}\varphi _{k}\gamma _{m-k}+\sigma _{\varepsilon }^{2}\delta _{m,0},}

4200:

11817:

11697:

8004:

are the reciprocals of the characteristic roots, as well as the eigenvalues of the temporal update matrix:

5297:

2547:

1674:

are the coefficients in the autoregression. The formula is valid only if all the roots have multiplicity 1.

9173:. Gwilym M. Jenkins, Gregory C. Reinsel (3rd ed.). Englewood Cliffs, N.J.: Prentice Hall. p. 54.

to refer to the first period for which data is not yet available; substitute the known preceding values

increases because of the use of an increasing number of estimated values for the right-side variables.

8861:

of a system is the change in an evolving variable in response to a change in the value of a shock term

2697:{\displaystyle {\textrm {var}}(X_{t})=\varphi ^{2}{\textrm {var}}(X_{t-1})+\sigma _{\varepsilon }^{2},}

9005:

8339:

The terms involving square roots are all real in the case of complex poles since they exist only when

8077:

AR(2) processes can be split into three groups depending on the characteristics of their roots/poles:

4930:{\displaystyle \operatorname {Var} (X_{t+n}|X_{t})=\sigma ^{2}{\frac {1-\theta ^{2n}}{1-\theta ^{2}}}}
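The conditional moments of the mean-form AR(1) model can be cross-checked by iterating the one-step recursion n times and comparing against the closed forms μ(1 − θ^n) + X_t θ^n and σ²(1 − θ^{2n})/(1 − θ²). The function name and the numerical values below are assumptions chosen only for illustration.

```python
def ar1_conditional_moments(x_t, theta, mu, sigma2, n):
    """Iterate X_{t+1} = theta*X_t + (1-theta)*mu + eps_{t+1} for n steps,
    tracking the conditional mean and variance given X_t = x_t."""
    mean, var = float(x_t), 0.0
    for _ in range(n):
        mean = theta * mean + (1.0 - theta) * mu
        var = theta**2 * var + sigma2
    return mean, var

# Hypothetical values
x_t, theta, mu, sigma2, n = 3.0, 0.8, 1.0, 0.5, 10
mean_iter, var_iter = ar1_conditional_moments(x_t, theta, mu, sigma2, n)

# Closed-form expressions for comparison
mean_closed = mu * (1.0 - theta**n) + x_t * theta**n
var_closed = sigma2 * (1.0 - theta ** (2 * n)) / (1.0 - theta**2)
print(mean_iter, mean_closed)
print(var_iter, var_closed)
```

As n grows, the mean relaxes toward μ and the variance toward σ²/(1 − θ²), provided |θ| < 1.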

9509:"Autoregressive spectral estimation by application of the Burg algorithm to irregularly sampled data"

9147:

: the Bayesian statistics and probabilistic programming framework supports autoregressive models with

3451:

2141:

2052:

532:

2913:

of the autocovariance function. In discrete terms this will be the discrete-time

Fourier transform:

supports parameter inference and model selection for the AR-1 process with time-varying parameters.

3138:, which is manifested as the cosine term in the denominator. If we assume that the sampling time (

3301:{\displaystyle B(t)\approx {\frac {\sigma _{\varepsilon }^{2}}{1-\varphi ^{2}}}\,\,\varphi ^{|t|}}

For an AR(2) process, the previous two terms and the noise term contribute to the output. If both

7156:{\displaystyle S(f)={\frac {\sigma _{Z}^{2}}{|1-\sum _{k=1}^{p}\varphi _{k}e^{-i2\pi fk}|^{2}}}.}
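The general expression can be evaluated directly. The sketch below, with the assumed helper name `ar_psd`, computes the denominator |1 − Σ φ_k e^{−i2πfk}|² on a grid of frequencies and compares the result with the closed-form AR(1) and AR(2) expressions; the coefficient values are illustrative.

```python
import numpy as np

def ar_psd(phi, f, sigma2=1.0):
    """Power spectral density of an AR(p) process at frequencies f
    (cycles per sample), using S(f) = sigma2 / |1 - sum_k phi_k e^{-i 2 pi f k}|^2."""
    phi = np.asarray(phi, dtype=float)
    f = np.atleast_1d(np.asarray(f, dtype=float))
    k = np.arange(1, len(phi) + 1)
    denom = 1.0 - np.exp(-2j * np.pi * np.outer(f, k)) @ phi
    return sigma2 / np.abs(denom) ** 2

f = np.linspace(0.0, 0.5, 6)
p1, p2 = 0.9, -0.8

s_ar0 = ar_psd([], f)        # white noise: flat spectrum
s_ar1 = ar_psd([p1], f)
s_ar2 = ar_psd([p1, p2], f)

# Closed forms for comparison
s_ar1_closed = 1.0 / (1 + p1**2 - 2 * p1 * np.cos(2 * np.pi * f))
s_ar2_closed = 1.0 / (
    1 + p1**2 + p2**2
    - 2 * p1 * (1 - p2) * np.cos(2 * np.pi * f)
    - 2 * p2 * np.cos(4 * np.pi * f)
)
```

Agreement between the two evaluations is a quick sanity check when deriving real-valued forms for higher orders.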

5266:

1685:

Each real root contributes a component to the autocorrelation function that decays exponentially.

529:

9656:

9091:

for the period being predicted. Each of the last three can be quantified and combined to give a

4373:

3141:

2088:

1688:

Similarly, each pair of complex conjugate roots contributes an exponentially damped oscillation.
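Both behaviours can be seen by computing the autocorrelation function of an AR(2) process recursively, starting from ρ_0 = 1 and ρ_1 = φ_1/(1 − φ_2) and then applying ρ_k = φ_1 ρ_{k−1} + φ_2 ρ_{k−2}. The function name `ar2_acf` and the two parameter sets are assumptions for this sketch.

```python
import numpy as np

def ar2_acf(phi1, phi2, max_lag):
    """Theoretical autocorrelations rho_0 .. rho_max_lag of a stationary AR(2)."""
    rho = np.empty(max_lag + 1)
    rho[0] = 1.0
    if max_lag >= 1:
        rho[1] = phi1 / (1.0 - phi2)
    for k in range(2, max_lag + 1):
        rho[k] = phi1 * rho[k - 1] + phi2 * rho[k - 2]
    return rho

# phi1^2 + 4*phi2 > 0: real roots, no oscillation at a pole-pair frequency
rho_real = ar2_acf(0.3, 0.3, 60)
# phi1^2 + 4*phi2 < 0: complex-conjugate roots, damped oscillation
rho_complex = ar2_acf(0.9, -0.8, 60)
print(np.round(rho_real[:6], 4))
print(np.round(rho_complex[:6], 4))
```

In the second case the sequence changes sign repeatedly while its envelope shrinks, which is the damped oscillation described above.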

. This similarly acts as a high-pass filter: everything except blue light is filtered out.

6784:

previous values of the same series. This can be thought of as a forward-prediction scheme. The

5945:{\displaystyle \gamma _{0}=\sum _{k=1}^{p}\varphi _{k}\gamma _{-k}+\sigma _{\varepsilon }^{2},}

4961:

61:

4008:

1756:

1736:

1716:

3735:{\displaystyle X_{t}=\varphi ^{N}X_{t-N}+\sum _{k=0}^{N-1}\varphi ^{k}\varepsilon _{t-k}.}

1733:, only the previous term in the process and the noise term contribute to the output. If

467:

9805:

9524:

's Econometrics Toolbox and System Identification Toolbox include autoregressive models

6606:{\displaystyle \rho _{1}=\gamma _{1}/\gamma _{0}={\frac {\varphi _{1}}{1-\varphi _{2}}}}

2707:

and then by noticing that the quantity above is a stable fixed point of this relation.

2085:

since it is obtained as the output of a stable filter whose input is white noise. (If

1615:

1175:

360:

9351:

Theodoridis, Sergios (2015-04-10). "Chapter 1. Probability and

Stochastic Processes".

9274:"Understanding Autoregressive Model for Time Series as a Deterministic Dynamic System"

8135:, the process has a pair of complex-conjugate poles, creating a mid-frequency peak at:

2866:

It can be seen that the autocovariance function decays with a decay time (also called

1328:

on the right side is carried out, the polynomial in the backshift operator applied to

8067:{\displaystyle {\begin{bmatrix}\varphi _{1}&\varphi _{2}\\1&0\end{bmatrix}}}
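As a quick numerical check, the eigenvalues of this matrix can be compared with the solutions of z² − φ_1 z − φ_2 = 0, which is the pole equation 1 − φ_1 z^{−1} − φ_2 z^{−2} = 0 multiplied through by z². The coefficient values below are assumptions chosen so that the poles are complex.

```python
import numpy as np

phi1, phi2 = 0.9, -0.8  # illustrative values with phi1**2 + 4*phi2 < 0

# Temporal update (companion) matrix of the AR(2) process
A = np.array([[phi1, phi2],
              [1.0, 0.0]])
poles = np.linalg.eigvals(A)

# Each eigenvalue should satisfy z^2 - phi1*z - phi2 = 0
residual = poles**2 - phi1 * poles - phi2

# For complex poles both moduli should equal sqrt(-phi2)
moduli = np.abs(poles)
print(poles, moduli)
```

Moduli below 1 indicate poles inside the unit circle, which corresponds to a stable process.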

8250:

with bandwidth about the peak inversely proportional to the moduli of the poles:

1774:

11807:

11039:

9627:

6891:{\displaystyle X_{t}=\sum _{i=1}^{p}\varphi _{i}X_{t+i}+\varepsilon _{t}^{*}\,.}

Burg, John Parker (1968); "A new analysis technique for time series data", in

6793:

prediction equations, relating to the backward representation of the AR model:

by the very definition of weak sense stationarity. If the mean is denoted by

9532:

9223:

9188:

6393:{\displaystyle \gamma _{1}=\varphi _{1}\gamma _{0}+\varphi _{2}\gamma _{-1}}

5065:{\displaystyle X_{t}=\sum _{i=1}^{p}\varphi _{i}X_{t-i}+\varepsilon _{t}.\,}

{\displaystyle \operatorname {E} (X_{t+n}|X_{t})=\mu \left[1-\theta ^{n}\right]+X_{t}\theta ^{n}}

1185:

values infinitely far into the future from when they occur, any given value

456:{\displaystyle X_{t}=\sum _{i=1}^{p}\varphi _{i}B^{i}X_{t}+\varepsilon _{t}}

Proceedings of the 37th

Meeting of the Society of Exploration Geophysicists

8971:{\displaystyle X_{t}=\sum _{i=1}^{p}\varphi _{i}X_{t-i}+\varepsilon _{t}\,}

7541:

7536:

The behavior of an AR(2) process is determined entirely by the roots of its

6467:{\displaystyle \gamma _{2}=\varphi _{1}\gamma _{1}+\varphi _{2}\gamma _{0}}

9828:

3852:{\displaystyle X_{t}=\sum _{k=0}^{\infty }\varphi ^{k}\varepsilon _{t-k}.}

it acts as a high-pass filter on the white noise with a spectral peak at

7623:

671:

356:

268:{\displaystyle X_{t}=\sum _{i=1}^{p}\varphi _{i}X_{t-i}+\varepsilon _{t}}

8412:

it acts as a low-pass filter on the white noise with a spectral peak at

4326:{\displaystyle X_{t+1}=X_{t}+(1-\theta )(\mu -X_{t})+\varepsilon _{t+1}}

4118:

growth or decay). In this case, the solution can be found analytically:

12012:

11552:

11496:

11380:

11333:

Generalized autoregressive conditional heteroskedasticity (GARCH) model

5368:, the set of equations can be solved by representing the equations for

4544:{\displaystyle X_{t+1}=\theta X_{t}+(1-\theta )\mu +\varepsilon _{t+1}}

663:{\displaystyle \Phi (z):=\textstyle 1-\sum _{i=1}^{p}\varphi _{i}z^{i}}

106:

9461:

Brockwell, Peter J.; Dahlhaus, Rainer; Trindade, A. Alexandre (2005).

9099:-step-ahead predictions; the confidence interval will become wider as

6169:{\displaystyle \rho (\tau )=\sum _{k=1}^{p}\varphi _{k}\rho (k-\tau )}

1355:

has an infinite order—that is, an infinite number of lagged values of

9741:"statsmodels.tsa.ar_model.AutoReg — statsmodels 0.12.2 documentation"

9132:

7726:{\displaystyle H_{z}=(1-\varphi _{1}z^{-1}-\varphi _{2}z^{-2})^{-1}.}

7457:

5119:

1753:

is close to 0, then the process still looks like white noise, but as

5361:

Because the last part of an individual equation is non-zero only if

10490:

10322:

2409:

528:

An autoregressive model can thus be viewed as the output of an all-

1488:{\displaystyle \rho (\tau )=\sum _{k=1}^{p}a_{k}y_{k}^{-|\tau |},}

9463:"Modified Burg Algorithms for Multivariate Subset Autoregression"

6941:

4188:

589:) model to be weak-sense stationary, the roots of the polynomial

538:

Some parameter constraints are necessary for the model to remain

9250:

Time series analysis and its applications : with R examples

9686:"The Time Series Analysis (TSA) toolbox for Octave and Matlab®"

4193:

The AR(1) model is the discrete-time analogue of the continuous

1701:

9493:

Burg, John Parker (1967) "Maximum

Entropy Spectral Analysis",

9002:

into the autoregressive equation while setting the error term

6305:{\displaystyle \rho _{1}=\gamma _{1}/\gamma _{0}=\varphi _{1}}

4960:

There are many ways to estimate the coefficients, such as the

4438:

is a white-noise process with zero mean and constant variance

3111:

This expression is periodic due to the discrete nature of the

1986:

is a white noise process with zero mean and constant variance

1314:{\displaystyle X_{t}={\frac {1}{\phi (B)}}\varepsilon _{t}\,.}

466:

so that, moving the summation term to the left side and using

right-side values are predicted values from preceding steps.
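The iterated forecasting procedure can be sketched as follows: each step sets the error term to zero, applies the autoregressive equation to the most recent p values, and appends the result so that later steps reuse earlier forecasts. The function name `forecast_ar` and the sample inputs are assumptions, and the coefficients are taken as already estimated.

```python
import numpy as np

def forecast_ar(history, phi, steps):
    """Iterated multi-step forecast for an AR(p) model without a constant term.
    history must contain at least p observed values, oldest first."""
    phi = np.asarray(phi, dtype=float)
    p = len(phi)
    values = [float(v) for v in history][-p:] if p > 0 else []
    forecasts = []
    for _ in range(steps):
        # Most recent value first, to line up with phi_1, phi_2, ...
        lags = values[::-1][:p]
        nxt = float(np.dot(phi, lags))  # error term replaced by its mean, zero
        forecasts.append(nxt)
        values.append(nxt)              # later steps reuse this forecast
    return np.array(forecasts)

f1 = forecast_ar([2.0], [0.5], 3)             # AR(1)
f2 = forecast_ar([1.0, 2.0], [0.5, 0.25], 2)  # AR(2)
print(f1, f2)
```

This sketch returns point forecasts only; it does not quantify any of the sources of forecast uncertainty.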

8829:

7456:

there is a single spectral peak at f=0, often referred to as

6948:

117:, it is a special case and key component of the more general

10419:

9507:

Bos, Robert; De Waele, Stijn; Broersen, Piet M. T. (2002).

1600:{\displaystyle \phi (B)=1-\sum _{k=1}^{p}\varphi _{k}B^{k}}

11313:

Autoregressive conditional heteroskedasticity (ARCH) model

5321:

is the standard deviation of the input noise process, and

814:{\displaystyle X_{t}=\varphi _{1}X_{t-1}+\varepsilon _{t}}

9460:

9353:

Machine

Learning: A Bayesian and Optimization Perspective

8774:

7810:{\displaystyle 1-\varphi _{1}z^{-1}-\varphi _{2}z^{-2}=0}

10841:

Independent and identically distributed random variables

9208:. David S. Stoffer. New York: Springer. pp. 90–91.

8580:

The full PSD function can be expressed in real form as:

8570:{\displaystyle -1\leq \varphi _{2}\leq 1-|\varphi _{1}|}

8329:{\displaystyle |z_{1}|=|z_{2}|={\sqrt {-\varphi _{2}}}.}

3482:

is the angular frequency associated with the decay time

1952:{\displaystyle X_{t}=\varphi X_{t-1}+\varepsilon _{t}\,}

8822:

contains several estimation functions for uni-variate,

1647:

is the function defining the autoregression, and where

92:

In statistics, econometrics, and signal processing, an

11318:

Autoregressive integrated moving average (ARIMA) model

8019:

7736:

It follows that the poles are values of z satisfying:

7018:{\displaystyle \mathrm {Var} (Z_{t})=\sigma _{Z}^{2}}

then the effect diminishes toward zero in the limit.
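The persistence of a shock can be traced numerically by feeding a single unit shock into the recursion and setting every later noise term to zero. The helper name `impulse_response` is an assumption for this sketch.

```python
import numpy as np

def impulse_response(phi, horizon):
    """Effect of a unit shock at time 0 on the values at times 0 .. horizon-1."""
    phi = np.asarray(phi, dtype=float)
    p = len(phi)
    h = np.zeros(horizon)
    h[0] = 1.0  # the shock itself
    for n in range(1, horizon):
        # Same recursion as the AR equation, with all later shocks set to zero
        for i in range(min(p, n)):
            h[n] += phi[i] * h[n - 1 - i]
    return h

h_ar1 = impulse_response([0.5], 4)
h_slow = impulse_response([0.9], 51)
print(h_ar1)
```

For an AR(1) process the response at lag n is the coefficient raised to the power n, so it never reaches exactly zero but shrinks geometrically when the coefficient has absolute value below 1.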

7608:{\displaystyle 1-\varphi _{1}B-\varphi _{2}B^{2}=0,}

9836:

Econometrics lecture (topic: Autoregressive models)

9506:

9414:"The Yule Walker Equations for the AR Coefficients"

9170:

Time series analysis : forecasting and control

8802:

function to fit various models including AR models.

8128:{\displaystyle \varphi _{1}^{2}+4\varphi _{2}<0}

6322:The Yule–Walker equations for an AR(2) process are

6235:{\displaystyle \gamma _{1}=\varphi _{1}\gamma _{0}}

4955:

1147:. Continuing this process shows that the effect of

3916:kernel plus the constant mean. If the white noise

3620:in the defining equation. Continuing this process

3612:

3580:{\displaystyle \varphi X_{t-2}+\varepsilon _{t-1}}

542:. For example, processes in the AR(1) model with

9815:Time Series and System Analysis with Applications

747:

317:{\displaystyle \varphi _{1},\ldots ,\varphi _{p}}

12058:

11200:Stochastic chains with memory of variable length

9378:

7523:there is a minimum at f=0, often referred to as

6924:Other possible approaches to estimation include

6059:. The AR parameters are determined by the first

5843:{\displaystyle \{\varphi _{m};m=1,2,\dots ,p\}.}

4005:will be approximately normally distributed when

3974:is also a Gaussian process. In other cases, the

3187:), then we can use a continuum approximation to

1243:{\displaystyle \phi (B)X_{t}=\varepsilon _{t}\,}

1140:{\displaystyle \varphi _{1}^{2}\varepsilon _{1}}

9796:Percival, Donald B.; Walden, Andrew T. (1993).

9579:astsa: Applied Statistical Time Series Analysis

9310:Philosophical Transactions of the Royal Society

6011:{\displaystyle \{\varphi _{m};m=1,2,\dots ,p\}}

9795:

9657:"Autoregressive Model - MATLAB & Simulink"

9247:

6750:

6055:An alternative formulation is in terms of the

4189:Explicit mean/difference form of AR(1) process

585:are not stationary. More generally, for an AR(

10789:

9865:

9575:

9379:Von Storch, Hans; Zwiers, Francis W. (2001).

1681:) process is a sum of decaying exponentials.

359:. This can be equivalently written using the

9879:

9813:Pandit, Sudhakar M.; Wu, Shien-Ming (1983).

9576:Stoffer, David; Poison, Nicky (2023-01-09),

9287:, June 2017, number 15, June 2017, pages 7-9

1017:{\displaystyle \varphi _{1}\varepsilon _{1}}

9798:Spectral Analysis for Physical Applications

9350:

9325:"On Periodicity in Series of Related Terms"

9248:Shumway, Robert H.; Stoffer, David (2010).

8876:

8375:Otherwise the process has real roots, and:

6046:{\displaystyle \sigma _{\varepsilon }^{2}.}

4941:

{\displaystyle \phi (B)X_{t}=\varepsilon _{t}}

174:indicates an autoregressive model of order

11328:Autoregressive–moving-average (ARMA) model

10796:

10782:

9872:

9858:

9557:"Fit Autoregressive Models to Time Series"

8884:Once the parameters of the autoregression

5375:in matrix form, thus getting the equation

2011:{\displaystyle \sigma _{\varepsilon }^{2}}

1382:appear on the right side of the equation.

27:Representation of a type of random process

9454:

9205:Time series analysis and its applications

2206:{\displaystyle \operatorname {E} (X_{t})}

9381:Statistical analysis in climate research

9374:

9372:

6947:

6940:

6514:{\displaystyle \gamma _{-k}=\gamma _{k}}

5113:

1700:

123:autoregressive integrated moving average


9721:from the original on September 28, 2020

9355:. Academic Press, 2015. pp. 9–51.

9201:

4218:{\displaystyle \theta \in \mathbb {R} }

3167:) is much smaller than the decay time (

1174:never ends, although if the process is

14:

12059:

11634:Doob's martingale convergence theorems

8871:vector autoregression#impulse response

8775:Implementations in statistics packages

5314:{\displaystyle \sigma _{\varepsilon }}

5126:, are the following set of equations.

2564:{\displaystyle \sigma _{\varepsilon }}

1677:The autocorrelation function of an AR(

11386:Constant elasticity of variance (CEV)

11376:Chan–Karolyi–Longstaff–Sanders (CKLS)

10777:

9853:

9777:Time Series Techniques for Economists

9772:

9487:

9369:

7540:, which is expressed in terms of the

7217:{\displaystyle S(f)=\sigma _{Z}^{2}.}

5118:The Yule–Walker equations, named for

4103:{\displaystyle X_{t}=\varphi X_{t-1}}

3532:can be derived by first substituting

1713:For an AR(1) process with a positive

10710:Generative adversarial network (GAN)

9441:

7618:or equivalently by the poles of its

2369:{\displaystyle \mu =\varphi \mu +0,}

578:{\displaystyle |\varphi _{1}|\geq 1}

29:

9500:

9166:

9029:equal to zero (because we forecast

8852:

8826:and adaptive autoregressive models.

6918:maximum entropy spectral estimation

6615:Using the recursion formula yields

5290:is the autocovariance function of X

4154:{\displaystyle X_{t}=a\varphi ^{t}}

2598:. This can be shown by noting that

535:filter whose input is white noise.

24:

11873:Skorokhod's representation theorem

11654:Law of large numbers (weak/strong)

9569:

9084:{\displaystyle \varepsilon _{t}\,}

8865:periods earlier, as a function of

6977:

6974:

6971:

4711:

4629:

4054:{\displaystyle \varepsilon _{t}=0}

3889:is white noise convolved with the

3815:

3325:

3145:

2973:

2968:

2923:

2737:

2448:

2303:

2272:

2244:

2181:

2045:has been dropped.) The process is

596:

25:

12083:

11843:Martingale representation theorem

9822:

9411:

9285:Predictive Analytics and Futurism

8512:coefficients are in the triangle

8466:{\displaystyle \varphi _{1}<0}

8405:{\displaystyle \varphi _{1}>0}

8365:{\displaystyle \varphi _{2}<0}

7516:{\displaystyle \varphi _{1}<0}

7449:{\displaystyle \varphi _{1}>0}

4975:) model is given by the equation

4968:(through Yule–Walker equations).

4551:and then deriving (by induction)

4431:{\displaystyle \{\epsilon _{t}\}}

11888:Stochastic differential equation

11778:Doob's optional stopping theorem

11773:Doob–Meyer decomposition theorem

10748:

10747:

10727:

9337:Proceedings of the Royal Society

9022:{\displaystyle \varepsilon _{t}}

8848:: implementation in statsmodels.

6523:Using the first equation yields

4956:Calculation of the AR parameters

4948:Partial autocorrelation function

4363:{\displaystyle |\theta |<1\,}

3936:{\displaystyle \varepsilon _{t}}

2895:{\displaystyle \tau =1-\varphi }

2591:{\displaystyle \varepsilon _{t}}

1979:{\displaystyle \varepsilon _{t}}

1525:are the roots of the polynomial

1375:{\displaystyle \varepsilon _{t}}

1348:{\displaystyle \varepsilon _{t}}

1167:{\displaystyle \varepsilon _{1}}

899:{\displaystyle \varepsilon _{1}}

841:{\displaystyle \varepsilon _{t}}

348:{\displaystyle \varepsilon _{t}}

34:

11758:Convergence of random variables

11644:Fisher–Tippett–Gnedenko theorem

9751:from the original on 2021-02-28

9733:

9707:

9696:from the original on 2012-05-11

9678:

9667:from the original on 2022-02-16

9649:

9638:from the original on 2022-02-16

9628:"System Identification Toolbox"

9620:

9609:from the original on 2023-04-16

9591:

9550:

9539:from the original on 2023-04-16

9430:from the original on 2018-07-13

9230:from the original on 2023-04-16

6179:Examples for some low-order AR(

3475:{\displaystyle \gamma =1/\tau }

2213:is identical for all values of

2167:{\displaystyle |\varphi |<1}

2078:{\displaystyle |\varphi |<1}

1398:) process can be expressed as

11356:Binomial options pricing model

10660:Recurrent neural network (RNN)

10650:Differentiable neural computer

9800:. Cambridge University Press.

9781:. Cambridge University Press.

9405:

9383:. Cambridge University Press.

9344:

9317:

9290:

9272:Lai, Dihui; and Lu, Bingfeng;

9266:

9241:

9195:

9160:

8755:

8743:

8718:

8706:

8697:

8678:

8599:

8593:

8563:

8548:

8299:

8284:

8276:

8261:

7708:

7649:

7320:

7276:

7251:

7245:

7190:

7184:

7137:

7069:

7044:

7038:

6994:

6981:

6963:) process with noise variance

6163:

6151:

6111:

6105:

6079:

6073:

4868:

4854:

4834:

4751:

4737:

4717:

4619:

4600:

4516:

4504:

4392:

4386:

4349:

4341:

4301:

4282:

4279:

4267:

3505:An alternative expression for

3429:

3403:

3334:

3328:

3292:

3284:

3233:

3227:

3085:

3079:

2932:

2926:

2844:

2836:

2772:

2743:

2670:

2651:

2628:

2615:

2472:

2454:

2442:

2429:

2322:

2309:

2297:

2278:

2263:

2250:

2200:

2187:

2154:

2146:

2065:

2057:

1634:

1628:

1544:

1538:

1476:

1468:

1417:

1411:

1291:

1285:

1213:

1207:

1024:. Then by the AR equation for

906:. Then by the AR equation for

748:Intertemporal effect of shocks

724:

709:

605:

599:

565:

550:

489:

483:

161:

155:

13:

1:

11823:Kolmogorov continuity theorem

11659:Law of the iterated logarithm

10705:Variational autoencoder (VAE)

10665:Long short-term memory (LSTM)

9932:Computational learning theory

9766:

9341:, Ser. A, Vol. 131, 518–532.

9314:, Ser. A, Vol. 226, 267–298.

6926:maximum likelihood estimation

6018:are known, can be solved for

5347:{\displaystyle \delta _{m,0}}

2571:is the standard deviation of

1896:An AR(1) process is given by:

1640:{\displaystyle \phi (\cdot )}

137:

119:autoregressive–moving-average

11828:Kolmogorov extension theorem

11507:Generalized queueing network

11015:Interacting particle systems

10685:Convolutional neural network

9829:AutoRegression Analysis (AR)

7480:{\displaystyle \varphi _{1}}

6764:be produced by some choices.

6085:{\displaystyle \rho (\tau )}

5784:which can be solved for all

5095:{\displaystyle \varphi _{i}}

3909:{\displaystyle \varphi ^{k}}

3769:{\displaystyle \varphi ^{N}}

2038:{\displaystyle \varphi _{1}}

1881:{\displaystyle \varphi _{2}}

1854:{\displaystyle \varphi _{1}}

1827:{\displaystyle \varphi _{2}}

1800:{\displaystyle \varphi _{1}}

1667:{\displaystyle \varphi _{k}}

737:{\displaystyle |z_{i}|>1}

674:, i.e., each (complex) root

7:

10960:Continuous-time random walk

10680:Multilayer perceptron (MLP)

9202:Shumway, Robert H. (2000).

9106:

6936:

6933:close to non-stationarity.

6751:Estimation of AR parameters

5850:The remaining equation for

5283:{\displaystyle \gamma _{m}}

1181:Because each shock affects

10:

12088:

11968:Extreme value theory (EVT)

11768:Doob decomposition theorem

11060:Ornstein–Uhlenbeck process

10831:Chinese restaurant process

10756:Artificial neural networks

10670:Gated recurrent unit (GRU)

9896:Differentiable programming

9773:Mills, Terence C. (1990).

9497:, Oklahoma City, Oklahoma.

9252:(3rd ed.). Springer.

9167:Box, George E. P. (1994).

9143:Ornstein–Uhlenbeck process

9118:Linear difference equation

7622:, which is defined in the

5075:It is based on parameters

4945:

4398:{\displaystyle \mu :=E(X)}

4195:Ornstein-Uhlenbeck process

3315:for the spectral density:

3160:{\displaystyle \Delta t=1}

2104:{\displaystyle \varphi =1}

2018:. (Note: The subscript on

12036:

11940:

11848:Optional stopping theorem

11745:

11707:

11649:Large deviation principle

11616:

11530:

11487:

11454:

11401:Heath–Jarrow–Morton (HJM)

11346:

11338:Moving-average (MA) model

11323:Autoregressive (AR) model

11303:

11213:

11148:Hidden Markov model (HMM)

11130:

11082:Schramm–Loewner evolution

10886:

10811:

10723:

10637:

10581:

10510:

10443:

10315:

10215:

10208:

10162:

10126:

10089:Artificial neural network

10069:

9945:

9912:Automatic differentiation

9885:

9715:"christophmark/bayesloop"

9476:: 197–213. Archived from

9148:Infinite impulse response

6902:Here predicted values of

1892:Example: An AR(1) process

1386:Characteristic polynomial

533:infinite impulse response

115:moving-average (MA) model

11763:Doléans-Dade exponential

11593:Progressively measurable

11391:Cox–Ingersoll–Ross (CIR)

9917:Neuromorphic engineering

9880:Differentiable computing

9817:. John Wiley & Sons.

9449:Modern Spectrum Analysis

9389:10.1017/CBO9780511612336

9323:Walker, Gilbert (1931)

9153:

9128:Linear predictive coding

7531:

7227:

7171:For white noise (AR(0))

7166:

6769:least squares regression

6057:autocorrelation function

5356:Kronecker delta function

4942:Choosing the maximum lag

4181:is an unknown constant (

4018:{\displaystyle \varphi }

3776:will approach zero and:

1766:{\displaystyle \varphi }

1746:{\displaystyle \varphi }

1726:{\displaystyle \varphi }

1392:autocorrelation function

11983:Mathematical statistics

11973:Large deviations theory

11803:Infinitesimal generator

11664:Maximal ergodic theorem

11583:Piecewise-deterministic

11185:Random dynamical system

11050:Markov additive process

10690:Residual neural network

10106:Artificial Intelligence

9533:10.1109/TIM.2002.808031

8880:-step-ahead forecasting

7538:characteristic equation

4451:{\displaystyle \sigma }

4405:is the model mean, and

3613:{\displaystyle X_{t-1}}

2398:{\displaystyle \mu =0.}

821:. A non-zero value for

11818:Karhunen–Loève theorem

11753:Cameron–Martin formula

11717:Burkholder–Davis–Gundy

11112:Variance gamma process

9599:"Econometrics Toolbox"

9421:stat.wharton.upenn.edu

9085:

9023:

8972:

8927:

8765:

8571:

8501:

8467:

8432:

8406:

8366:

8330:

8241:

8129:

8068:

7998:

7971:

7941:

7811:

7727:

7609:

7517:

7481:

7450:

7413:

7218:

7157:

7099:

7019:

6957:power spectral density

6952:

6945:

6911:would be based on the

6892:

6839:

6737:

6607:

6515:

6468:

6394:

6306:

6236:

6170:

6137:

6086:

6047:

6012:

5946:

5897:

5844:

5775:

5348:

5315:

5284:

5237:

5169:

5096:

5066:

5018:

4962:ordinary least squares

4931:

4813:

4695:

4545:

4452:

4432:

4399:

4364:

4327:

4219:

4175:

4155:

4104:

4055:

4019:

3999:

3968:

3937:

3910:

3883:

3853:

3819:

3770:

3749:approaching infinity,

3736:

3702:

3614:

3581:

3526:

3496:

3476:

3439:

3302:

3208:

3181:

3161:

3132:

3102:

2977:

2896:

2857:

2698:

2592:

2565:

2535:

2399:

2370:

2332:

2231:

2207:

2168:

2132:

2105:

2079:

2039:

2012:

1980:

1953:

1882:

1855:

1828:

1801:

1767:

1747:

1727:

1706:

1668:

1641:

1601:

1576:

1519:

1489:

1443:

1376:

1349:

1315:

1244:

1168:

1141:

1099:

1072:

1045:

1018:

981:

954:

927:

900:

873:

842:

815:

738:

695:

664:

638:

579:

519:

457:

409:

349:

318:

269:

225:

182:) model is defined as

168:

11948:Actuarial mathematics

11910:Uniform integrability

11905:Stratonovich integral

11833:Lévy–Prokhorov metric

11737:Marcinkiewicz–Zygmund

11624:Central limit theorem

11226:Gaussian random field

11055:McKean–Vlasov process

10975:Dyson Brownian motion

10836:Galton–Watson process

10645:Neural Turing machine

10233:Human image synthesis

9296:Yule, G. Udny (1927)

9086:

9024:

8973:

8907:

8766:

8572:

8502:

8500:{\displaystyle f=1/2}

8468:

8433:

8407:

8367:

8331:

8242:

8130:

8069:

7999:

7997:{\displaystyle z_{2}}

7972:

7970:{\displaystyle z_{1}}

7942:

7812:

7728:

7610:

7518:

7482:

7451:

7414:

7219:

7158:

7079:

7020:

6951:

6944:

6893:

6819:

6738:

6608:

6516:

6469:

6395:

6307:

6237:

6171:

6117:

6087:

6048:

6013:

5947:

5877:

5845:

5776:

5349:

5316:

5285:

5238:

5149:

5114:Yule–Walker equations

5097:

5067:

4998:

4932:

4814:

4696:

4546:

4462:By rewriting this as

4453:

4433:

4400:

4365:

4328:

4220:

4176:

4156:

4112:geometric progression

4105:

4056:

4020:

4000:

3998:{\displaystyle X_{t}}

3976:central limit theorem

3969:

3967:{\displaystyle X_{t}}

3938:

3911:

3884:

3882:{\displaystyle X_{t}}

3854:

3799:

3771:

3737:

3676:

3615:

3582:

3527:

3525:{\displaystyle X_{t}}

3497:

3495:{\displaystyle \tau }

3477:

3440:

3303:

3209:

3207:{\displaystyle B_{n}}

3182:

3180:{\displaystyle \tau }

3162:

3133:

3131:{\displaystyle X_{j}}

3103:

2954:

2897:

2858:

2699:

2593:

2566:

2536:

2400:

2371:

2333:

2232:

2208:

2169:

2133:

2131:{\displaystyle X_{t}}

2111:then the variance of

2106:

2080:

2047:weak-sense stationary

2040:

2013:

1981:

1954:

1883:

1856:

1829:

1802:

1768:

1748:

1728:

1704:

1669:

1642:

1602:

1556:

1520:

1518:{\displaystyle y_{k}}

1490:

1423:

1377:

1350:

1316:

1245:

1169:

1142:

1100:

1098:{\displaystyle X_{3}}

1073:

1071:{\displaystyle X_{2}}

1046:

1044:{\displaystyle X_{3}}

1019:

982:

980:{\displaystyle X_{2}}

955:

953:{\displaystyle X_{1}}

928:

926:{\displaystyle X_{2}}

901:

874:

872:{\displaystyle X_{1}}

843:

816:

739:

696:

694:{\displaystyle z_{i}}

670:must lie outside the

665:

618:

580:

540:weak-sense stationary

520:

458:

389:

350:

319:

270:

205:

169:

167:{\displaystyle AR(p)}

132:Large language models

111:differential equation

12023:Time series analysis

11978:Mathematical finance

11863:Reflection principle

11190:Regenerative process

10990:Fleming–Viot process

10805:Stochastic processes

10736:Computer programming

10715:Graph neural network

10290:Text-to-video models

10268:Text-to-image models

10116:Large language model

10101:Scientific computing

9907:Statistical manifold

9902:Information geometry

9717:. December 7, 2021.

9123:Predictive analytics

9113:Moving average model

9067:

9006:

8891:

8787:package includes an

8587:

8516:

8477:

8444:

8416:

8383:

8343:

8257:

8143:

8085:

8011:

7981:

7954:

7828:

7743:

7633:

7551:

7494:

7464:

7427:

7239:

7178:

7032:

6967:

6803:

6619:

6527:

6482:

6405:

6328:

6248:

6196:

6099:

6067:

6022:

5959:

5861:

5788:

5382:

5325:

5298:

5267:

5133:

5079:

4982:

4825:

4708:

4701:, one can show that

4555:

4466:

4442:

4409:

4374:

4337:

4232:

4201:

4165:

4122:

4065:

4032:

4009:

3982:

3951:

3920:

3893:

3866:

3783:

3753:

3631:

3591:

3536:

3509:

3486:

3452:

3322:

3221:

3191:

3171:

3142:

3115:

2920:

2874:

2721:

2605:

2575:

2548:

2419:

2383:

2342:

2241:

2230:{\displaystyle \mu }

2221:

2178:

2142:

2115:

2089:

2053:

2022:

1990:

1963:

1900:

1865:

1838:

1811:

1784:

1757:

1737:

1717:

1651:

1622:

1532:

1502:

1405:

1359:

1332:

1260:

1201:

1151:

1109:

1082:

1055:

1028:

991:

964:

937:

910:

883:

856:

825:

756:

744:(see pages 89, 92).

705:

678:

593:

546:

477:

373:

332:

282:

189:

146:

113:. Together with the

12018:Stochastic analysis

11858:Quadratic variation

11853:Prokhorov's theorem

11788:Feynman–Kac formula

11258:Markov random field

10906:Birth–death process

10082:In-context learning

9922:Pattern recognition

9806:1993sapa.book.....P

9745:www.statsmodels.org

9729:– via GitHub.

9525:2002ITIM...51.1289B

9093:confidence interval

8661:

8643:

8621:

8431:{\displaystyle f=0}

8102:

7913:

7374:

7352:

7273:

7210:

7066:

7014:

6883:

6698:

6680:

6039:

5938:

5213:

4648:

3372:

3255:

3046:

2807:

2690:

2507:

2471:

2007:

1481:

1326:polynomial division

1126:

468:polynomial notation

11988:Probability theory

11868:Skorokhod integral

11838:Malliavin calculus

11421:Korn-Kreer-Lenssen

11305:Time series models

11268:Pitman–Yor process

10675:Echo state network

10563:Jürgen Schmidhuber

10258:Facial recognition

10253:Speech recognition

10163:Software libraries

9562:2016-01-28 at the

9330:2011-06-07 at the

9303:2011-05-14 at the

9279:2023-03-24 at the

9138:Levinson recursion

9081:

9019:

8968:

8761:

8647:

8629:

8607:

8567:

8497:

8463:

8428:

8402:

8362:

8326:

8237:

8125:

8088:

8064:

8058:

7994:

7967:

7937:

7899:

7807:

7723:

7605:

7513:

7477:

7446:

7409:

7360:

7338:

7259:

7214:

7196:

7153:

7052:

7015:

7000:

6953:

6946:

6888:

6869:

6733:

6684:

6666:

6603:

6511:

6464:

6390:

6302:

6232:

6166:

6082:

6043:

6025:

6008:

5942:

5924:

5840:

5771:

5765:

5690:

5454:

5344:

5311:

5280:

5233:

5199:

5092:

5062:

4927:

4809:

4691:

4628:

4541:

4448:

4428:

4395:

4360:

4323:

4215:

4171:

4151:

4100:

4051:

4015:

3995:

3964:

3933:

3906:

3879:

3849:

3766:

3732:

3610:

3577:

3522:

3492:

3472:

3435:

3358:

3313:Lorentzian profile

3298:

3241:

3204:

3177:

3157:

3128:

3098:

3032:

2892:

2853:

2793:

2694:

2676:

2588:

2561:

2531:

2493:

2457:

2395:

2366:

2328:

2227:

2203:

2164:

2128:

2101:

2075:

2035:

2008:

1993:

1976:

1949:

1878:

1861:is positive while

1851:

1824:

1797:

1763:

1743:

1723:

1707:

1664:

1637:

1616:backshift operator

1597:

1515:

1485:

1454:

1372:

1345:

1311:

1240:

1164:

1137:

1112:

1095:

1068:

1041:

1014:

977:

950:

923:

896:

869:

838:

811:

734:

691:

660:

659:

575:

515:

453:

361:backshift operator

345:

328:of the model, and

314:

265:

164:

12072:Signal processing

12054:

12053:

12008:Signal processing

11727:Doob's upcrossing

11722:Doob's martingale

11686:Engelbert–Schmidt

11629:Donsker's theorem

11563:Feller-continuous

11431:Rendleman–Bartter

11221:Dirichlet process

11138:Branching process

11107:Telegraph process

11000:Geometric process

10980:Empirical process

10970:Diffusion process

10826:Branching process

10821:Bernoulli process

10771:

10770:

10533:Stephen Grossberg

10506:

10505:

9661:www.mathworks.com

9632:www.mathworks.com

9603:www.mathworks.com

9470:Statistica Sinica

9362:978-0-12-801522-3

9055:predictions, all

8759:

8321:

8228:

8225:

8172:

7930:

7877:

7620:transfer function

7407:

7331:

7148:

6767:Formulation as a

6731:

6601:

4966:method of moments

4925:

4183:initial condition

4174:{\displaystyle a}

4025:is close to one.

3433:

3391:

3353:

3352:

3274:

3089:

3023:

3022:

2951:

2950:

2911:Fourier transform

2826:

2648:

2612:

2526:

2426:

2237:, it follows from

1295:

90:

89:

82:

12079:

12028:Machine learning

11915:Usual hypotheses

11798:Girsanov theorem

11783:Dynkin's formula

11548:Continuous paths

11456:Actuarial models

11396:Garman–Kohlhagen

11366:Black–Karasinski

11361:Black–Derman–Toy

11348:Financial models

11214:Fields and other

11143:Gaussian process

11092:Sigma-martingale

10896:Additive process

10798:

10791:

10784:

10775:

10774:

10761:Machine learning

10751:

10750:

10731:

10486:Action selection

10476:Self-driving car

10283:Stable Diffusion

10248:Speech synthesis

10213:

10212:

10077:Machine learning

9953:Gradient descent

9874:

9867:

9860:

9851:

9850:

9837:

9818:

9809:

9792:

9780:

9760:

9759:

9757:

9756:

9737:

9731:

9730:

9728:

9726:

9711:

9705:

9704:

9702:

9701:

9682:

9676:

9675:

9673:

9672:

9653:

9647:

9646:

9644:

9643:

9624:

9618:

9617:

9615:

9614:

9595:

9589:

9588:

9587:

9586:

9573:

9567:

9554:

9548:

9547:

9545:

9544:

9504:

9498:

9491:

9485:

9484:

9482:

9467:

9458:

9452:

9445:

9439:

9438:

9436:

9435:

9429:

9418:

9409:

9403:

9402:

9376:

9367:

9366:

9348:

9342:

9321:

9315:

9294:

9288:

9270:

9264:

9263:

9245:

9239:

9238:

9236:

9235:

9199:

9193:

9192:

9164:

9090:

9088:

9087:

9082:

9079:

9078:

9042:to refer to the

9028:

9026:

9025:

9020:

9018:

9017:

8977:

8975:

8974:

8969:

8966:

8965:

8953:

8952:

8937:

8936:

8926:

8921:

8903:

8902:

8859:impulse response

8853:Impulse response

8770:

8768:

8767:

8762:

8760:

8758:

8736:

8735:

8696:

8695:

8677:

8676:

8660:

8655:

8642:

8637:

8620:

8615:

8606:

8576:

8574:

8573:

8568:

8566:

8561:

8560:

8551:

8537:

8536:

8506:

8504:

8503:

8498:

8493:

8472:

8470:

8469:

8464:

8456:

8455:

8437:

8435:

8434:

8429:

8411:

8409:

8408:

8403:

8395:

8394:

8371:

8369:

8368:

8363:

8355:

8354:

8335:

8333:

8332:

8327:

8322:

8320:

8319:

8307:

8302:

8297:

8296:

8287:

8279:

8274:

8273:

8264:

8246:

8244:

8243:

8238:

8233:

8229:

8227:

8226:

8224:

8223:

8211:

8205:

8204:

8195:

8186:

8185:

8173:

8171:

8160:

8155:

8154:

8134:

8132:

8131:

8126:

8118:

8117:

8101:

8096:

8073:

8071:

8070:

8065:

8063:

8062:

8043:

8042:

8031:

8030:

8003:

8001:

8000:

7995:

7993:

7992:

7976:

7974:

7973:

7968:

7966:

7965:

7946:

7944:

7943:

7938:

7936:

7932:

7931:

7929:

7928:

7912:

7907:

7898:

7893:

7892:

7878:

7876:

7875:

7874:

7858:

7853:

7852:

7840:

7839:

7816:

7814:

7813:

7808:

7800:

7799:

7787:

7786:

7774:

7773:

7761:

7760:

7732:

7730:

7729:

7724:

7719:

7718:

7706:

7705:

7693:

7692:

7680:

7679:

7667:

7666:

7645:

7644:

7614:

7612:

7611:

7606:

7595:

7594:

7585:

7584:

7569:

7568:

7522:

7520:

7519:

7514:

7506:

7505:

7486:

7484:

7483:

7478:

7476:

7475:

7455:

7453:

7452:

7447:

7439:

7438:

7418:

7416:

7415:

7410:

7408:

7406:

7390:

7389:

7373:

7368:

7351:

7346:

7337:

7332:

7330:

7329:

7328:

7323:

7317:

7316:

7295:

7294:

7279:

7272:

7267:

7258:

7223:

7221:

7220:

7215:

7209:

7204:

7162:

7160:

7159:

7154:

7149:

7147:

7146:

7145:

7140:

7134:

7133:

7109:

7108:

7098:

7093:

7072:

7065:

7060:

7051:

7024:

7022:

7021:

7016:

7013:

7008:

6993:

6992:

6980:

6897:

6895:

6894:

6889:

6882:

6877:

6865:

6864:

6849:

6848:

6838:

6833:

6815:

6814:

6786:normal equations

6742:

6740:

6739:

6734:

6732:

6730:

6729:

6728:

6712:

6711:

6710:

6697:

6692:

6679:

6674:

6664:

6659:

6658:

6649:

6644:

6643:

6631:

6630:

6612:

6610:

6609:

6604:

6602:

6600:

6599:

6598:

6582:

6581:

6572:

6567:

6566:

6557:

6552:

6551:

6539:

6538:

6520:

6518:

6517:

6512:

6510:

6509:

6497:

6496:

6473:

6471:

6470:

6465:

6463:

6462:

6453:

6452:

6440:

6439:

6430:

6429:

6417:

6416:

6399:

6397:

6396:

6391:

6389:

6388:

6376:

6375:

6363:

6362:

6353:

6352:

6340:

6339:

6311:

6309:

6308:

6303:

6301:

6300:

6288:

6287:

6278:

6273:

6272:

6260:

6259:

6241:

6239:

6238:

6233:

6231:

6230:

6221:

6220:

6208:

6207:

6175:

6173:

6172:

6167:

6147:

6146:

6136:

6131:

6091:

6089:

6088:

6083:

6052:

6050:

6049:

6044:

6038:

6033:

6017:

6015:

6014:

6009:

5974:

5973:

5951:

5949:

5948:

5943:

5937:

5932:

5920:

5919:

5907:

5906:

5896:

5891:

5873:

5872:

5849:

5847:

5846:

5841:

5803:

5802:

5780:

5778:

5777:

5772:

5770:

5769:

5762:

5761:

5741:

5740:

5727:

5726:

5713:

5712:

5695:

5694:

5682:

5681:

5664:

5663:

5646:

5645:

5599:

5598:

5587:

5586:

5575:

5574:

5556:

5555:

5541:

5540:

5529:

5528:

5510:

5509:

5495:

5494:

5480:

5479:

5459:

5458:

5451:

5450:

5430:

5429:

5416:

5415:

5402:

5401:

5374:

5367:

5353:

5351:

5350:

5345:

5343:

5342:

5320:

5318:

5317:

5312:

5310:

5309:

5289:

5287:

5286:

5281:

5279:

5278:

5263:equations. Here

5262:

5255:

5242:

5240:

5239:

5234:

5229:

5228:

5212:

5207:

5195:

5194:

5179:

5178:

5168:

5163:

5145:

5144:

5101:

5099:

5098:

5093:

5091:

5090:

5071:

5069:

5068:

5063:

5057:

5056:

5044:

5043:

5028:

5027:

5017:

5012:

4994:

4993:

4936:

4934:

4933:

4928:

4926:

4924:

4923:

4922:

4906:

4905:

4904:

4885:

4883:

4882:

4867:

4866:

4857:

4852:

4851:

4818:

4816:

4815:

4810:

4808:

4807:

4798:

4797:

4785:

4781:

4780:

4779:

4750:

4749:

4740:

4735:

4734:

4700:

4698:

4697:

4692:

4690:

4686:

4685:

4684:

4669:

4668:

4647:

4642:

4618:

4617:

4596:

4595:

4586:

4585:

4573:

4572:

4550:

4548:

4547:

4542:

4540:

4539:

4500:

4499:

4484:

4483:

4457:

4455:

4454:

4449:

4437:

4435:

4434:

4429:

4424:

4423:

4404:

4402:

4401:

4396:

4369:

4367:

4366:

4361:

4352:

4344:

4332:

4330:

4329:

4324:

4322:

4321:

4300:

4299:

4263:

4262:

4250:

4249:

4224:

4222:

4221:

4216:

4214:

4180:

4178:

4177:

4172:

4160:

4158:

4157:

4152:

4150:

4149:

4134:

4133:

4109:

4107:

4106:

4101:

4099:

4098:

4077:

4076:

4060:

4058:

4057:

4052:

4044:

4043:

4024:

4022:

4021:

4016:

4004:

4002:

4001:

3996:

3994:

3993:

3973:

3971:

3970:

3965:

3963:

3962:

3945:Gaussian process

3942:

3940:

3939:

3934:

3932:

3931:

3915:

3913:

3912:

3907:

3905:

3904:

3888:

3886:

3885:

3880:

3878:

3877:

3862:It is seen that

3858:

3856:

3855:

3850:

3845:

3844:

3829:

3828:

3818:

3813:

3795:

3794:

3775:

3773:

3772:

3767:

3765:

3764:

3741:

3739:

3738:

3733:

3728:

3727:

3712:

3711:

3701:

3690:

3672:

3671:

3656:

3655:

3643:

3642:

3619:

3617:

3616:

3611:

3609:

3608:

3586:

3584:

3583:

3578:

3576:

3575:

3557:

3556:

3531:

3529:

3528:

3523:

3521:

3520:

3501:

3499:

3498:

3493:

3481:

3479:

3478:

3473:

3468:

3444:

3442:

3441:

3436:

3434:

3432:

3428:

3427:

3415:

3414:

3395:

3392:

3390:

3389:

3388:

3371:

3366:

3357:

3354:

3345:

3341:

3307:

3305:

3304:

3299:

3297:

3296:

3295:

3287:

3275:

3273:

3272:

3271:

3254:

3249:

3240:

3213:

3211:

3210:

3205:

3203:

3202:

3186:

3184:

3183:

3178:

3166:

3164:

3163:

3158:

3137:

3135:

3134:

3129:

3127:

3126:

3107:

3105:

3104:

3099:

3094:

3090:

3088:

3063:

3062:

3045:

3040:

3031:

3024:

3015:

3011:

3006:

3005:

2987:

2986:

2976:

2971:

2952:

2943:

2939:

2909:function is the

2907:spectral density

2901:

2899:

2898:

2893:

2862:

2860:

2859:

2854:

2849:

2848:

2847:

2839:

2827:

2825:

2824:

2823:

2806:

2801:

2792:

2787:

2786:

2771:

2770:

2761:

2760:

2733:

2732:

2703:

2701:

2700:

2695:

2689:

2684:

2669:

2668:

2650:

2649:

2646:

2643:

2642:

2627:

2626:

2614:

2613:

2610:

2597:

2595:

2594:

2589:

2587:

2586:

2570:

2568:

2567:

2562:

2560:

2559:

2540:

2538:

2537:

2532:

2527:

2525:

2524:

2523:

2506:

2501:

2492:

2487:

2486:

2470:

2465:

2441:

2440:

2428:

2427:

2424:

2404:

2402:

2401:

2396:

2375:

2373:

2372:

2367:

2337:

2335:

2334:

2329:

2321:

2320:

2296:

2295:

2262:

2261:

2236:

2234:

2233:

2228:

2212:

2210:

2209:

2204:

2199:

2198:

2173:

2171:

2170:

2165:

2157:

2149:

2137:

2135:

2134:

2129:

2127:

2126:

2110:

2108:

2107:

2102:

2084:

2082:

2081:

2076:

2068:

2060:

2044:

2042:

2041:

2036:

2034:

2033:

2017:

2015:

2014:

2009:

2006:

2001:

1985:

1983:

1982:

1977:

1975:

1974:

1958:

1956:

1955:

1950:

1947:

1946:

1934:

1933:

1912:

1911:

1887:

1885:

1884:

1879:

1877:

1876:

1860:

1858:

1857:

1852:

1850:

1849:

1833:

1831:

1830:

1825:

1823:

1822:

1806:

1804:

1803:

1798:

1796:

1795:

1772:

1770:

1769:

1764:

1752:

1750:

1749:

1744:

1732:

1730:

1729:

1724:

1673:

1671:

1670:

1665:

1663:

1662:

1646:

1644:

1643: