1642:

31:

39:

78:

281:

In a directory-based system, the data being shared is placed in a common directory that maintains the coherence between caches. The directory acts as a filter through which the processor must ask permission to load an entry from the primary memory to its cache. When an entry is changed, the directory

325:

If the protocol design states that whenever any copy of the shared data is changed, all the other copies must be "updated" to reflect the change, then it is a write-update protocol. If the design states that a write to a cached copy by any processor requires other processors to discard or invalidate

235:

is available, since all transactions are a request/response seen by all processors. The drawback is that snooping isn't scalable. Every request must be broadcast to all nodes in a system, meaning that as the system gets larger, the size of the (logical or physical) bus and the bandwidth it provides

141:

In a read made by a processor P1 to location X that follows a write by another processor P2 to X, with no other writes to X made by any processor occurring between the two accesses and with the read and write being sufficiently separated, X must always return the value written by P2. This condition

94:

multiprocessor system with a separate cache memory for each processor, it is possible to have many copies of shared data: one copy in the main memory and one in the local cache of each processor that requested it. When one of the copies of data is changed, the other copies must reflect that change.

194:

memory location in a total order that respects the program order of each thread". Thus, the only difference between the cache coherent system and sequentially consistent system is in the number of address locations the definition talks about (single memory location for a cache coherent system, and

73:

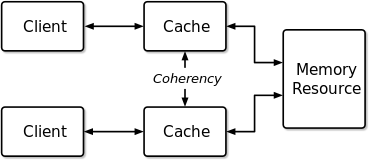

In the illustration on the right, consider both the clients have a cached copy of a particular memory block from a previous read. Suppose the client on the bottom updates/changes that memory block, the client on the top could be left with an invalid cache of memory without any notification of the

303:

Protocols can also be classified as snoopy or directory-based. Typically, early systems used directory-based protocols where a directory would keep a track of the data being shared and the sharers. In snoopy protocols, the transaction requests (to read, write, or upgrade) are sent out to all

264:

For the snooping mechanism, a snoop filter reduces the snooping traffic by maintaining a plurality of entries, each representing a cache line that may be owned by one or more nodes. When replacement of one of the entries is required, the snoop filter selects for the replacement of the entry

236:

must grow. Directories, on the other hand, tend to have longer latencies (with a 3 hop request/forward/respond) but use much less bandwidth since messages are point to point and not broadcast. For this reason, many of the larger systems (>64 processors) use this type of cache coherence.

181:

Writes to the same location must be sequenced. In other words, if location X received two different values A and B, in this order, from any two processors, the processors can never read location X as B and then read it as A. The location X must be seen with values A and B in that

142:

defines the concept of coherent view of memory. Propagating the writes to the shared memory location ensures that all the caches have a coherent view of the memory. If processor P1 reads the old value of X, even after the write by P2, we can say that the memory is incoherent.

265:

representing the cache line or lines owned by the fewest nodes, as determined from a presence vector in each of the entries. A temporal or other type of algorithm is used to refine the selection if more than one cache line is owned by the fewest nodes.

137:

In a read made by a processor P to a location X that follows a write by the same processor P to X, with no writes to X by another processor occurring between the write and the read instructions made by P, X must always return the value written by

146:

The above conditions satisfy the Write

Propagation criteria required for cache coherence. However, they are not sufficient as they do not satisfy the Transaction Serialization condition. To illustrate this better, consider the following example:

314:

When a write operation is observed to a location that a cache has a copy of, the cache controller invalidates its own copy of the snooped memory location, which forces a read from main memory of the new value on its next

177:

Therefore, in order to satisfy

Transaction Serialization, and hence achieve Cache Coherence, the following condition along with the previous two mentioned in this section must be met:

205:. Multiple copies of same data can exist in different cache simultaneously and if processors are allowed to update their own copies freely, an inconsistent view of memory can result.

133:

In a multiprocessor system, consider that more than one processor has cached a copy of the memory location X. The following conditions are necessary to achieve cache coherence:

321:

When a write operation is observed to a location that a cache has a copy of, the cache controller updates its own copy of the snooped memory location with the new data.

95:

Cache coherence is the discipline which ensures that the changes in the values of shared operands (data) are propagated throughout the system in a timely fashion.

297:

Coherence protocols apply cache coherence in multiprocessor systems. The intention is that two clients must never see different values for the same shared data.

968:

251:

First introduced in 1983, snooping is a process where the individual caches monitor address lines for accesses to memory locations that they have cached. The

825:

541:

198:

Another definition is: "a multiprocessor is cache consistent if all writes to the same memory location are performed in some sequential order".

660:

1058:

910:

74:

change. Cache coherence is intended to manage such conflicts by maintaining a coherent view of the data values in multiple caches.

130:

One type of data occurring simultaneously in different cache memory is called cache coherence, or in some systems, global memory.

1039:

711:

Formal

Analysis of the ACE Specification for Cache Coherent Systems-on-Chip. In Formal Methods for Industrial Critical Systems

300:

The protocol must implement the basic requirements for coherence. It can be tailor-made for the target system or application.

170:. P4 on the other hand may see changes made by P1 and P2 in the order in which they are made and hence return 20 on a read to

782:

718:

525:

1079:

166:

by P1 and P2. However, P3 may see the change made by P1 after seeing the change made by P2 and hence return 10 on a read to

150:

A multi-processor system consists of four processors - P1, P2, P3 and P4, all containing cached copies of a shared variable

1306:

214:

289:

systems mimic these mechanisms in an attempt to maintain consistency between blocks of memory in loosely coupled systems.

1329:

380:, which belongs to AMBA5 group of specifications defines the interfaces for the connection of fully coherent processors.

162:

in its own cached copy to 20. If we ensure only write propagation, then P3 and P4 will certainly see the changes made to

433:

1218:

833:

361:

1074:

1324:

1301:

805:

620:

903:

816:

1296:

1111:

275:

227:

17:

1403:

1317:

1266:

637:"Ravishankar, Chinya; Goodman, James (February 28, 1983). "Cache Implementation for Multiple Microprocessors""

1677:

1627:

1461:

1312:

999:

545:

105:

Changes to the data in any cache must be propagated to other copies (of that cache line) in the peer caches.

735:

357:

62:

of a common memory resource, problems may arise with incoherent data, which is particularly the case with

1682:

1672:

1646:

1592:

1052:

896:

762:

1571:

1366:

1251:

1213:

1063:

953:

850:

Steinke, Robert C.; Nutt, Gary J. (1 September 2004). "A unified theory of shared memory consistency".

91:

231:, each having their own benefits and drawbacks. Snooping based protocols tend to be faster, if enough

1587:

1566:

1511:

1398:

1388:

1361:

1223:

404:

394:

286:

1667:

1541:

1167:

1106:

1019:

657:

190:

memory model: "the cache coherent system must appear to execute all threads’ loads and stores to a

1602:

1597:

1456:

1047:

681:

558:

Steinke, Robert C.; Nutt, Gary J. (2004-09-01). "A Unified Theory of Shared Memory

Consistency".

63:

1341:

1273:

1177:

1069:

1024:

636:

187:

1433:

1393:

1346:

1336:

1131:

994:

933:

307:

Write propagation in snoopy protocols can be implemented by either of the following methods:

202:

47:

1373:

1261:

1256:

1246:

1233:

1029:

111:

Reads/Writes to a single memory location must be seen by all processors in the same order.

8:

1536:

1491:

1291:

1157:

1561:

1410:

1383:

1208:

1172:

1162:

1121:

963:

943:

938:

919:

877:

859:

593:

567:

34:

An illustration showing multiple caches of some memory, which acts as a shared resource

1607:

1283:

1241:

1136:

829:

801:

778:

714:

616:

585:

521:

493:

467:

389:

119:. However, in practice it is generally performed at the granularity of cache blocks.

55:

1617:

1416:

1351:

1198:

1014:

1009:

1004:

973:

881:

869:

766:

597:

577:

373:

232:

332:

Various models and protocols have been devised for maintaining coherence, such as

1481:

1421:

1356:

1193:

1126:

1116:

958:

948:

797:

774:

664:

365:

127:

Coherence defines the behavior of reads and writes to a single address location.

67:

42:

Incoherent caches: The caches have different values of a single address location.

1612:

1428:

1085:

978:

399:

353:

349:

345:

487:

1661:

1501:

1378:

589:

497:

471:

442:

410:

341:

337:

158:(in its cached copy) to 10 following which processor P2 changes the value of

873:

581:

1101:

333:

245:

221:

201:

Rarely, but especially in algorithms, coherence can instead refer to the

54:

is the uniformity of shared resource data that ends up stored in multiple

1622:

186:

The alternative definition of a coherent system is via the definition of

116:

304:

processors. All processors snoop the request and respond appropriately.

671:. Norwegian University of Science and Technology. Retrieved 2014-01-20.

435:

The

Architecture of the Nehalem Processor and Nehalem-EP SMP Platforms

174:. The processors P3 and P4 now have an incoherent view of the memory.

1496:

1471:

888:

59:

658:"Design of a Snoop Filter for Snoop-Based Cache Coherency Protocols"

1546:

1526:

1451:

864:

572:

377:

30:

1551:

1531:

1506:

1141:

488:

Sorin, Daniel J.; Hill, Mark D.; Wood, David Allen (2011-01-01).

369:

81:

Coherent caches: The value in all the caches' copies is the same.

329:

However, scalability is one shortcoming of broadcast protocols.

1521:

1516:

282:

either updates or invalidates the other caches with that entry.

38:

326:

their cached copies, then it is a write-invalidate protocol.

195:

all memory locations for a sequentially consistent system).

154:

whose initial value is 0. Processor P1 changes the value of

115:

Theoretically, coherence can be performed at the load/store

1556:

1486:

1476:

376:. The AMBA CHI (Coherent Hub Interface) specification from

77:

27:

Computer architecture term concerning shared resource data

1466:

1443:

219:

The two most common mechanisms of ensuring coherency are

98:

The following are the requirements for cache coherence:

441:. Texas A&M University. p. 30. Archived from

682:"Lecture 18: Snooping vs. Directory Based Coherency"

818:A Primer on Memory Consistency and Cache Coherence

515:

490:A primer on memory consistency and cache coherence

372:proposed the AMBA 4 ACE for handling coherency in

761:

610:

1659:

615:. Morgan Kaufmann Publishers. pp. 467–468.

815:Sorin, Daniel; Hill, Mark; Wood, David (2011).

611:Patterson, David A.; Hennessy, John L. (1990).

464:Fundamentals of parallel multicore architecture

518:Computer Organization and Design - 4th Edition

904:

814:

613:Computer Architecture A Quantitative Approach

431:

849:

557:

911:

897:

863:

571:

708:

76:

37:

29:

208:

14:

1660:

918:

292:

892:

791:

644:Proceedings of IEEE COMPCON: 346–350

511:

509:

507:

492:. Morgan & Claypool Publishers.

483:

481:

457:

455:

427:

425:

215:Cache coherency protocols (examples)

58:. When clients in a system maintain

24:

755:

540:Neupane, Mahesh (April 16, 2004).

269:

25:

1694:

504:

478:

452:

422:

1641:

1640:

771:Computer Organization and Design

1112:Analysis of parallel algorithms

733:

727:

702:

674:

461:

432:E. Thomadakis, Michael (2011).

276:Directory-based cache coherence

713:. Springer Berlin Heidelberg.

709:Kriouile (16 September 2013).

650:

629:

604:

551:

534:

13:

1:

1059:Simultaneous and heterogenous

416:

122:

1647:Category: Parallel computing

656:Rasmus Ulfsnes (June 2013).

7:

383:

259:make use of this mechanism.

239:

85:

10:

1699:

954:High-performance computing

273:

253:write-invalidate protocols

243:

212:

1636:

1588:Automatic parallelization

1580:

1442:

1282:

1232:

1224:Application checkpointing

1186:

1150:

1094:

1038:

987:

926:

405:Non-uniform memory access

395:Directory-based coherence

287:Distributed shared memory

108:Transaction Serialization

516:Patterson and Hennessy.

1603:Embarrassingly parallel

1598:Deterministic algorithm

874:10.1145/1017460.1017464

582:10.1145/1017460.1017464

1318:Associative processing

1274:Non-blocking algorithm

1080:Clustered multi-thread

548:(PDF) on 20 June 2010.

257:write-update protocols

188:sequential consistency

82:

43:

35:

1434:Hardware acceleration

1347:Superscalar processor

1337:Dataflow architecture

934:Distributed computing

794:The Cache Memory Book

544:(PDF). Archived from

360:, Synapse, Berkeley,

203:locality of reference

80:

48:computer architecture

41:

33:

1678:Concurrent computing

1313:Pipelined processing

1262:Explicit parallelism

1257:Implicit parallelism

1247:Dataflow programming

209:Coherence mechanisms

1537:Parallel Extensions

1342:Pipelined processor

826:Morgan and Claypool

792:Handy, Jim (1998).

293:Coherence protocols

1683:Consistency models

1673:Parallel computing

1411:Massively parallel

1389:distributed shared

1209:Cache invalidation

1173:Instruction window

964:Manycore processor

944:Massively parallel

939:Parallel computing

920:Parallel computing

852:Journal of the ACM

663:2014-02-01 at the

83:

44:

36:

1655:

1654:

1608:Parallel slowdown

1242:Stream processing

1132:Karp–Flatt metric

784:978-0-12-374493-7

720:978-3-642-41010-9

542:"Cache Coherence"

527:978-0-12-374493-7

390:Consistency model

102:Write Propagation

16:(Redirected from

1690:

1644:

1643:

1618:Software lockout

1417:Computer cluster

1352:Vector processor

1307:Array processing

1292:Flynn's taxonomy

1199:Memory coherence

974:Computer network

913:

906:

899:

890:

889:

885:

867:

846:

844:

842:

823:

811:

796:(2nd ed.).

788:

773:(4th ed.).

763:Patterson, David

750:

749:

747:

746:

731:

725:

724:

706:

700:

699:

697:

695:

686:

678:

672:

654:

648:

647:

641:

633:

627:

626:

608:

602:

601:

575:

555:

549:

538:

532:

531:

513:

502:

501:

485:

476:

475:

459:

450:

449:

447:

440:

429:

340:(aka Illinois),

311:Write-invalidate

21:

1698:

1697:

1693:

1692:

1691:

1689:

1688:

1687:

1668:Cache coherency

1658:

1657:

1656:

1651:

1632:

1576:

1482:Coarray Fortran

1438:

1422:Beowulf cluster

1278:

1228:

1219:Synchronization

1204:Cache coherence

1194:Multiprocessing

1182:

1146:

1127:Cost efficiency

1122:Gustafson's law

1090:

1034:

983:

959:Multiprocessing

949:Cloud computing

922:

917:

840:

838:

836:

821:

808:

798:Morgan Kaufmann

785:

775:Morgan Kaufmann

758:

756:Further reading

753:

744:

742:

736:"AMBA | AMBA 5"

732:

728:

721:

707:

703:

693:

691:

684:

680:

679:

675:

669:diva-portal.org

665:Wayback Machine

655:

651:

639:

635:

634:

630:

623:

609:

605:

556:

552:

539:

535:

528:

514:

505:

486:

479:

460:

453:

445:

438:

430:

423:

419:

386:

366:Dragon protocol

295:

278:

272:

270:Directory-based

248:

242:

228:directory-based

217:

211:

125:

88:

68:multiprocessing

52:cache coherence

28:

23:

22:

18:Cache coherency

15:

12:

11:

5:

1696:

1686:

1685:

1680:

1675:

1670:

1653:

1652:

1650:

1649:

1637:

1634:

1633:

1631:

1630:

1625:

1620:

1615:

1613:Race condition

1610:

1605:

1600:

1595:

1590:

1584:

1582:

1578:

1577:

1575:

1574:

1569:

1564:

1559:

1554:

1549:

1544:

1539:

1534:

1529:

1524:

1519:

1514:

1509:

1504:

1499:

1494:

1489:

1484:

1479:

1474:

1469:

1464:

1459:

1454:

1448:

1446:

1440:

1439:

1437:

1436:

1431:

1426:

1425:

1424:

1414:

1408:

1407:

1406:

1401:

1396:

1391:

1386:

1381:

1371:

1370:

1369:

1364:

1357:Multiprocessor

1354:

1349:

1344:

1339:

1334:

1333:

1332:

1327:

1322:

1321:

1320:

1315:

1310:

1299:

1288:

1286:

1280:

1279:

1277:

1276:

1271:

1270:

1269:

1264:

1259:

1249:

1244:

1238:

1236:

1230:

1229:

1227:

1226:

1221:

1216:

1211:

1206:

1201:

1196:

1190:

1188:

1184:

1183:

1181:

1180:

1175:

1170:

1165:

1160:

1154:

1152:

1148:

1147:

1145:

1144:

1139:

1134:

1129:

1124:

1119:

1114:

1109:

1104:

1098:

1096:

1092:

1091:

1089:

1088:

1086:Hardware scout

1083:

1077:

1072:

1067:

1061:

1056:

1050:

1044:

1042:

1040:Multithreading

1036:

1035:

1033:

1032:

1027:

1022:

1017:

1012:

1007:

1002:

997:

991:

989:

985:

984:

982:

981:

979:Systolic array

976:

971:

966:

961:

956:

951:

946:

941:

936:

930:

928:

924:

923:

916:

915:

908:

901:

893:

887:

886:

858:(5): 800–849.

847:

835:978-1608455645

834:

812:

806:

789:

783:

767:Hennessy, John

757:

754:

752:

751:

726:

719:

701:

673:

649:

628:

621:

603:

566:(5): 800–849.

550:

533:

526:

503:

477:

462:Yan, Solihin.

451:

448:on 2014-08-11.

420:

418:

415:

414:

413:

408:

402:

400:Memory barrier

397:

392:

385:

382:

323:

322:

319:

316:

312:

294:

291:

284:

283:

274:Main article:

271:

268:

267:

266:

261:

260:

244:Main article:

241:

238:

213:Main article:

210:

207:

184:

183:

144:

143:

139:

124:

121:

113:

112:

109:

106:

103:

87:

84:

26:

9:

6:

4:

3:

2:

1695:

1684:

1681:

1679:

1676:

1674:

1671:

1669:

1666:

1665:

1663:

1648:

1639:

1638:

1635:

1629:

1626:

1624:

1621:

1619:

1616:

1614:

1611:

1609:

1606:

1604:

1601:

1599:

1596:

1594:

1591:

1589:

1586:

1585:

1583:

1579:

1573:

1570:

1568:

1565:

1563:

1560:

1558:

1555:

1553:

1550:

1548:

1545:

1543:

1540:

1538:

1535:

1533:

1530:

1528:

1525:

1523:

1520:

1518:

1515:

1513:

1510:

1508:

1505:

1503:

1502:Global Arrays

1500:

1498:

1495:

1493:

1490:

1488:

1485:

1483:

1480:

1478:

1475:

1473:

1470:

1468:

1465:

1463:

1460:

1458:

1455:

1453:

1450:

1449:

1447:

1445:

1441:

1435:

1432:

1430:

1429:Grid computer

1427:

1423:

1420:

1419:

1418:

1415:

1412:

1409:

1405:

1402:

1400:

1397:

1395:

1392:

1390:

1387:

1385:

1382:

1380:

1377:

1376:

1375:

1372:

1368:

1365:

1363:

1360:

1359:

1358:

1355:

1353:

1350:

1348:

1345:

1343:

1340:

1338:

1335:

1331:

1328:

1326:

1323:

1319:

1316:

1314:

1311:

1308:

1305:

1304:

1303:

1300:

1298:

1295:

1294:

1293:

1290:

1289:

1287:

1285:

1281:

1275:

1272:

1268:

1265:

1263:

1260:

1258:

1255:

1254:

1253:

1250:

1248:

1245:

1243:

1240:

1239:

1237:

1235:

1231:

1225:

1222:

1220:

1217:

1215:

1212:

1210:

1207:

1205:

1202:

1200:

1197:

1195:

1192:

1191:

1189:

1185:

1179:

1176:

1174:

1171:

1169:

1166:

1164:

1161:

1159:

1156:

1155:

1153:

1149:

1143:

1140:

1138:

1135:

1133:

1130:

1128:

1125:

1123:

1120:

1118:

1115:

1113:

1110:

1108:

1105:

1103:

1100:

1099:

1097:

1093:

1087:

1084:

1081:

1078:

1076:

1073:

1071:

1068:

1065:

1062:

1060:

1057:

1054:

1051:

1049:

1046:

1045:

1043:

1041:

1037:

1031:

1028:

1026:

1023:

1021:

1018:

1016:

1013:

1011:

1008:

1006:

1003:

1001:

998:

996:

993:

992:

990:

986:

980:

977:

975:

972:

970:

967:

965:

962:

960:

957:

955:

952:

950:

947:

945:

942:

940:

937:

935:

932:

931:

929:

925:

921:

914:

909:

907:

902:

900:

895:

894:

891:

883:

879:

875:

871:

866:

861:

857:

853:

848:

837:

831:

827:

820:

819:

813:

809:

807:9780123229809

803:

799:

795:

790:

786:

780:

776:

772:

768:

764:

760:

759:

741:

740:Arm Developer

737:

730:

722:

716:

712:

705:

690:

683:

677:

670:

666:

662:

659:

653:

645:

638:

632:

624:

622:1-55860-069-8

618:

614:

607:

599:

595:

591:

587:

583:

579:

574:

569:

565:

561:

554:

547:

543:

537:

529:

523:

519:

512:

510:

508:

499:

495:

491:

484:

482:

473:

469:

465:

458:

456:

444:

437:

436:

428:

426:

421:

412:

411:False sharing

409:

406:

403:

401:

398:

396:

393:

391:

388:

387:

381:

379:

375:

371:

367:

363:

359:

355:

351:

347:

343:

339:

335:

330:

327:

320:

317:

313:

310:

309:

308:

305:

301:

298:

290:

288:

280:

279:

277:

263:

262:

258:

254:

250:

249:

247:

237:

234:

230:

229:

224:

223:

216:

206:

204:

199:

196:

193:

189:

180:

179:

178:

175:

173:

169:

165:

161:

157:

153:

148:

140:

136:

135:

134:

131:

128:

120:

118:

110:

107:

104:

101:

100:

99:

96:

93:

92:shared memory

79:

75:

71:

69:

65:

61:

57:

53:

49:

40:

32:

19:

1203:

1187:Coordination

1117:Amdahl's law

1053:Simultaneous

855:

851:

839:. Retrieved

817:

793:

770:

743:. Retrieved

739:

729:

710:

704:

692:. Retrieved

689:Berkeley.edu

688:

676:

668:

652:

643:

631:

612:

606:

563:

559:

553:

546:the original

536:

517:

489:

463:

443:the original

434:

331:

328:

324:

318:Write-update

306:

302:

299:

296:

285:

256:

252:

246:Bus snooping

226:

220:

218:

200:

197:

191:

185:

176:

171:

167:

163:

159:

155:

151:

149:

145:

132:

129:

126:

114:

97:

89:

72:

56:local caches

51:

45:

1623:Scalability

1384:distributed

1267:Concurrency

1234:Programming

1075:Cooperative

1064:Speculative

1000:Instruction

368:. In 2011,

117:granularity

1662:Categories

1628:Starvation

1367:asymmetric

1102:PRAM model

1070:Preemptive

865:cs/0208027

841:20 October

745:2021-04-27

734:Ltd, Arm.

573:cs/0208027

417:References

358:write-once

123:Definition

1362:symmetric

1107:PEM model

590:0004-5411

498:726930429

472:884540034

233:bandwidth

1593:Deadlock

1581:Problems

1547:pthreads

1527:OpenHMPP

1452:Ateji PX

1413:computer

1284:Hardware

1151:Elements

1137:Slowdown

1048:Temporal

1030:Pipeline

769:(2009).

661:Archived

384:See also

240:Snooping

222:snooping

86:Overview

70:system.

1552:RaftLib

1532:OpenACC

1507:GPUOpen

1497:C++ AMP

1472:Charm++

1214:Barrier

1158:Process

1142:Speedup

927:General

882:3206071

667:(PDF).

598:3206071

378:ARM Ltd

370:ARM Ltd

362:Firefly

315:access.

1645:

1522:OpenCL

1517:OpenMP

1462:Chapel

1379:shared

1374:Memory

1309:(SIMT)

1252:Models

1163:Thread

1095:Theory

1066:(SpMT)

1020:Memory

1005:Thread

988:Levels

880:

832:

804:

781:

717:

694:14 May

619:

596:

588:

560:J. ACM

524:

496:

470:

407:(NUMA)

192:single

182:order.

60:caches

1492:Dryad

1457:Boost

1178:Array

1168:Fiber

1082:(CMT)

1055:(SMT)

969:GPGPU

878:S2CID

860:arXiv

822:(PDF)

685:(PDF)

640:(PDF)

594:S2CID

568:arXiv

446:(PDF)

439:(PDF)

354:MESIF

350:MERSI

346:MOESI

90:In a

66:in a

1557:ROCm

1487:CUDA

1477:Cilk

1444:APIs

1404:COMA

1399:NUMA

1330:MIMD

1325:MISD

1302:SIMD

1297:SISD

1025:Loop

1015:Data

1010:Task

843:2017

830:ISBN

802:ISBN

779:ISBN

715:ISBN

696:2023

617:ISBN

586:ISSN

522:ISBN

494:OCLC

468:OCLC

374:SoCs

364:and

342:MOSI

338:MESI

255:and

225:and

64:CPUs

1572:ZPL

1567:TBB

1562:UPC

1542:PVM

1512:MPI

1467:HPX

1394:UMA

995:Bit

870:doi

578:doi

334:MSI

46:In

1664::

876:.

868:.

856:51

854:.

828:.

824:.

800:.

777:.

765:;

738:.

687:.

642:.

592:.

584:.

576:.

564:51

562:.

520:.

506:^

480:^

466:.

454:^

424:^

356:,

352:,

348:,

344:,

336:,

138:P.

50:,

912:e

905:t

898:v

884:.

872::

862::

845:.

810:.

787:.

748:.

723:.

698:.

646:.

625:.

600:.

580::

570::

530:.

500:.

474:.

172:S

168:S

164:S

160:S

156:S

152:S
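The four-processor example in the Definition section can be replayed in a few lines of Python. This is a minimal sketch under one assumption: each processor's view of the shared variable S is modelled only as the order in which it observes the writes to S. The `Write` class and the helper functions are hypothetical names chosen for illustration, not part of any real protocol. The sketch shows that write propagation can hold while transaction serialization fails.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Write:
    writer: str  # processor that issued the write
    value: int   # value written to the shared variable S


def final_value(observed, initial=0):
    """Value a processor reads after applying writes in the order it saw them."""
    value = initial
    for write in observed:
        value = write.value
    return value


def has_write_propagation(all_writes, observations):
    """Write propagation: every processor eventually observes every write."""
    return all(set(seq) == set(all_writes) for seq in observations.values())


def has_transaction_serialization(observations):
    """Transaction serialization: all processors observe the same write order."""
    return len({tuple(seq) for seq in observations.values()}) <= 1


# The example: P1 writes 10 to S, then P2 writes 20 to S.
w1 = Write("P1", 10)
w2 = Write("P2", 20)

# P3 sees P2's write before P1's; P4 sees them in issue order.
observations = {"P3": [w2, w1], "P4": [w1, w2]}

print(has_write_propagation([w1, w2], observations))            # True
print(has_transaction_serialization(observations))               # False
print({p: final_value(seq) for p, seq in observations.items()})  # {'P3': 10, 'P4': 20}
```

Every write reaches both P3 and P4, yet P3 ends up reading 10 while P4 reads 20. This is why the third condition (writes to the same location must be sequenced) is needed in addition to the first two.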

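The write-invalidate and write-update methods described under Coherence protocols can be contrasted with a toy snooping bus. This is a simplified sketch resting on three assumptions:

* a single shared bus broadcasts every write to all attached caches;
* writes go through to main memory;
* caches are unbounded dictionaries keyed by address.

`SnoopingBus`, `Cache` and `run` are hypothetical names. The scenario in `run` mirrors the two-client illustration at the top of the article.

```python
class SnoopingBus:
    """Toy shared bus: every write is broadcast to all attached caches."""

    def __init__(self, policy):
        if policy not in ("invalidate", "update"):
            raise ValueError("policy must be 'invalidate' or 'update'")
        self.policy = policy
        self.memory = {}   # main memory: address -> value
        self.caches = []

    def attach(self, cache):
        self.caches.append(cache)

    def broadcast_write(self, source, addr, value):
        # Write-through to main memory (a simplifying assumption of this sketch).
        self.memory[addr] = value
        # Every other cache snoops the write.
        for cache in self.caches:
            if cache is not source:
                cache.snoop_write(addr, value, self.policy)


class Cache:
    def __init__(self, bus):
        self.lines = {}    # address -> cached value
        self.misses = 0
        self.bus = bus
        bus.attach(self)

    def read(self, addr):
        if addr not in self.lines:
            # Miss: fetch the current value from main memory.
            self.misses += 1
            self.lines[addr] = self.bus.memory.get(addr, 0)
        return self.lines[addr]

    def write(self, addr, value):
        self.lines[addr] = value
        self.bus.broadcast_write(self, addr, value)

    def snoop_write(self, addr, value, policy):
        if addr not in self.lines:
            return                     # no copy held, nothing to do
        if policy == "invalidate":
            del self.lines[addr]       # next read misses and refetches
        else:
            self.lines[addr] = value   # update the copy in place


def run(policy):
    """Both caches read the same block, then the bottom one writes it."""
    bus = SnoopingBus(policy)
    top, bottom = Cache(bus), Cache(bus)
    top.read(0x40)
    bottom.read(0x40)
    bottom.write(0x40, 7)
    return top.read(0x40), top.misses


print(run("invalidate"))  # (7, 2)
print(run("update"))      # (7, 1)
```

Under both policies the top cache ends up reading the new value 7. The difference is how it gets there:

* Under write-invalidate, the top cache takes a second miss and refetches the block from memory.
* Under write-update, the broadcast carries the new data, so the next read hits.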

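The Snooping and Directory-based sections describe two related ideas:

* a structure that records which nodes hold each line, so invalidations can go point-to-point;
* a replacement rule that evicts the entry owned by the fewest nodes, with a temporal tie-break.

A minimal sketch combining both, under these assumptions:

* the presence vector is an integer bitmask;
* writes use write-invalidate;
* the "temporal" algorithm is least-recently-used, tracked by a logical clock.

`Directory`, `DirEntry` and their methods are hypothetical names. The sketch omits the back-invalidation a real design would have to send to the holders of an evicted entry.

```python
from dataclasses import dataclass


@dataclass
class DirEntry:
    presence: int = 0   # bit i set => node i holds a copy of the line
    last_used: int = 0  # logical timestamp of the most recent access


class Directory:
    def __init__(self, num_nodes, capacity):
        self.num_nodes = num_nodes
        self.capacity = capacity
        self.entries = {}  # line address -> DirEntry
        self.clock = 0     # logical clock for the temporal tie-break

    def _touch(self, addr):
        self.clock += 1
        if addr not in self.entries:
            if len(self.entries) >= self.capacity:
                self.evict()  # back-invalidation of holders omitted here
            self.entries[addr] = DirEntry()
        entry = self.entries[addr]
        entry.last_used = self.clock
        return entry

    def read(self, node, addr):
        """Grant `node` a shared copy and record it in the presence vector."""
        entry = self._touch(addr)
        entry.presence |= 1 << node

    def write(self, node, addr):
        """Return the nodes that must receive point-to-point invalidations."""
        entry = self._touch(addr)
        others = [n for n in range(self.num_nodes)
                  if (entry.presence >> n) & 1 and n != node]
        entry.presence = 1 << node  # the writer becomes the sole holder
        return others

    def choose_victim(self):
        """Fewest owners first; ties broken by least recent use."""
        return min(self.entries,
                   key=lambda a: (bin(self.entries[a].presence).count("1"),
                                  self.entries[a].last_used))

    def evict(self):
        victim = self.choose_victim()
        del self.entries[victim]
        return victim


d = Directory(num_nodes=4, capacity=2)
d.read(0, 0x100)
d.read(1, 0x100)
d.read(2, 0x200)
print(d.write(3, 0x100))       # [0, 1]: only the recorded sharers are invalidated
print(hex(d.choose_victim()))  # 0x200: both lines now have one owner; 0x200 is older
```

The write sends invalidations to nodes 0 and 1 only, rather than broadcasting to every node. This is the bandwidth advantage the Coherence mechanisms section attributes to directories. Counting set bits in the presence vector gives the owner count used by the replacement rule.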