2837:(HDMR) (the term is due to H. Rabitz) is essentially an emulator approach, which involves decomposing the function output into a linear combination of input terms and interactions of increasing dimensionality. The HDMR approach exploits the fact that the model can usually be well-approximated by neglecting higher-order interactions (second or third-order and above). The terms in the truncated series can then each be approximated by e.g. polynomials or splines (REFS) and the response expressed as the sum of the main effects and interactions up to the truncation order. From this perspective, HDMRs can be seen as emulators which neglect high-order interactions; the advantage is that they are able to emulate models with higher dimensionality than full-order emulators.

1020:. This appears to be a logical approach, as any change observed in the output will unambiguously be due to the single variable changed. Furthermore, by changing one variable at a time, one can keep all other variables fixed to their central or baseline values. This increases the comparability of the results (all 'effects' are computed with reference to the same central point in space) and minimizes the chances of computer program crashes, which are more likely when several input factors are changed simultaneously. OAT is frequently preferred by modelers for practical reasons. In case of model failure under OAT analysis, the modeler immediately knows which input factor is responsible for the failure.
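The OAT procedure described here can be sketched in a few lines. This is a minimal illustration rather than a reference implementation: `oat_effects` and `toy_model` are hypothetical names, and the toy model with its interaction term `x[0] * x[1]` is an assumption chosen only to show what OAT around a baseline does and does not capture.

```python
from typing import Callable, Dict, List


def oat_effects(model: Callable[[List[float]], float],
                baseline: List[float],
                deltas: List[float]) -> Dict[int, float]:
    """Return the change in output when each input is perturbed alone."""
    y0 = model(baseline)
    effects = {}
    for i, delta in enumerate(deltas):
        x = list(baseline)   # all other inputs stay at their baseline values
        x[i] += delta        # move only input i
        effects[i] = model(x) - y0
    return effects


def toy_model(x: List[float]) -> float:
    # Hypothetical model with an interaction between the two inputs.
    return 2.0 * x[0] + x[1] + x[0] * x[1]


effects = oat_effects(toy_model, baseline=[0.0, 0.0], deltas=[1.0, 1.0])
# Change in output when both inputs are moved together, for comparison.
joint = toy_model([1.0, 1.0]) - toy_model([0.0, 0.0])
```

In this toy case the two OAT effects sum to 3, while moving both inputs together changes the output by 4. The difference is the interaction term, which vanishes along each axis through this baseline and is therefore invisible to OAT.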

2880:

motivations of its author may become a matter of great importance, and a pure sensitivity analysis – with its emphasis on parametric uncertainty – may be seen as insufficient. The emphasis on the framing may derive inter alia from the relevance of the policy study to different constituencies that are characterized by different norms and values, and hence by a different story about 'what the problem is' and, foremost, about 'who is telling the story'. Most often the framing includes more or less implicit assumptions, which can range from political (e.g. which group needs to be protected) all the way to technical (e.g. which variable can be treated as a constant).

803:

2884:

quantitative information with the generation of 'Pedigrees' of numbers. Sensitivity auditing has been especially designed for an adversarial context, where not only the nature of the evidence, but also the degree of certainty and uncertainty associated with the evidence, will be the subject of partisan interests. Sensitivity auditing is recommended in the European Commission guidelines for impact assessment, as well as in the report Science Advice for Policy by European Academies.

194:

2916:" I have proposed a form of organized sensitivity analysis that I call 'global sensitivity analysis' in which a neighborhood of alternative assumptions is selected and the corresponding interval of inferences is identified. Conclusions are judged to be sturdy only if the neighborhood of assumptions is wide enough to be credible and the corresponding interval of inferences is narrow enough to be useful."

2865:. In a design of experiments, one studies the effect of some process or intervention (the 'treatment') on some objects (the 'experimental units'). In sensitivity analysis one looks at the effect of varying the inputs of a mathematical model on the output of the model itself. In both disciplines one strives to obtain information from the system with a minimum of physical or numerical experiments.

2444:

thus overcomes the scale issue of traditional sensitivity analysis methods. More importantly, VARS is able to provide relatively stable and statistically robust estimates of parameter sensitivity with much lower computational cost than other strategies (about two orders of magnitude more efficient). Notably, it has been shown that there is a theoretical link between the VARS framework and the

33:

1075:. With 5 inputs, the explored space already drops to less than 1% of the total parameter space. And even this is an overestimate, since the off-axis volume is not actually being sampled at all. Compare this to random sampling of the space, where the convex hull approaches the entire volume as more points are added. While the sparsity of OAT is theoretically not a concern for

2520:, that approximates the input/output behavior of the model itself. In other words, it is the concept of "modeling a model" (hence the name "metamodel"). The idea is that, although computer models may be a very complex series of equations that can take a long time to solve, they can always be regarded as a function of their inputs

2747:, although random designs can also be used, at the loss of some efficiency. The selection of the metamodel type and the training are intrinsically linked since the training method will be dependent on the class of metamodel. Some types of metamodels that have been used successfully for sensitivity analysis include:

582:-function) multiple times. Depending on the complexity of the model there are many challenges that may be encountered during model evaluation. Therefore, the choice of method of sensitivity analysis is typically dictated by a number of problem constraints, settings or challenges. Some of the most common are:

2921:

Note Leamer's emphasis is on the need for 'credibility' in the selection of assumptions. The easiest way to invalidate a model is to demonstrate that it is fragile with respect to the uncertainty in the assumptions or to show that its assumptions have not been taken 'wide enough'. The same concept is

1237:

function. Similar to OAT, local methods do not attempt to fully explore the input space, since they examine small perturbations, typically one variable at a time. It is possible to select similar samples from derivative-based sensitivity through neural networks and perform uncertainty quantification.

1236:

indicates that the derivative is taken at some fixed point in the space of the input (hence the 'local' in the name of the class). Adjoint modelling and automated differentiation are methods that allow all partial derivatives to be computed at a cost of at most 4-6 times that of evaluating the original

793:

The various types of "core methods" (discussed below) are distinguished by the various sensitivity measures which are calculated. These categories can overlap to some extent. Alternative ways of obtaining these measures, under the constraints of the problem, can be given. In addition, an engineering view of

2943:

Different statistical tests and measures are applied to the problem and different factor rankings are obtained. The test should instead be tailored to the purpose of the analysis, e.g. one uses Monte Carlo filtering if one is interested in which factors are most responsible for generating high/low

2883:

In order to take these concerns into due consideration the instruments of SA have been extended to provide an assessment of the entire knowledge and model generating process. This approach has been called 'sensitivity auditing'. It takes inspiration from NUSAP, a method used to qualify the worth of

2443:

for a given perturbation scale can be considered as a comprehensive illustration of sensitivity information, through linking variogram analysis to both direction and perturbation scale concepts. As a result, the VARS framework accounts for the fact that sensitivity is a scale-dependent concept, and

715:

Sometimes it is not possible to evaluate the code at all desired points, either because the code is confidential or because the experiment is not reproducible. The code output is only available for a given set of points, and it can be difficult to perform a sensitivity analysis on a limited set of

197:

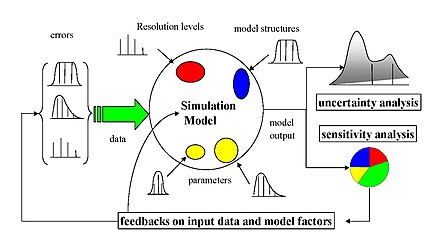

Figure 1. Schematic representation of uncertainty analysis and sensitivity analysis. In mathematical modeling, uncertainty arises from a variety of sources - errors in input data, parameter estimation and approximation procedure, underlying hypothesis, choice of model, alternative model structures

2879:

It may happen that a sensitivity analysis of a model-based study is meant to underpin an inference, and to certify its robustness, in a context where the inference feeds into a policy or decision-making process. In these cases the framing of the analysis itself, its institutional context, and the

1260:

as direct measures of sensitivity. The regression is required to be linear with respect to the data (i.e. a hyperplane, hence with no quadratic terms, etc., as regressors) because otherwise it is difficult to interpret the standardised coefficients. This method is therefore most suitable when the

1031:

The proportion of input space which remains unexplored with an OAT approach grows superexponentially with the number of inputs. For example, a 3-variable parameter space which is explored one-at-a-time is equivalent to taking points along the x, y, and z axes of a cube centered at the origin. The

706:

A code is said to be stochastic when, for several evaluations of the code with the same inputs, different outputs are obtained (as opposed to a deterministic code when, for several evaluations of the code with the same inputs, the same output is always obtained). In this case, it is necessary to

2646:

to within an acceptable margin of error. Then, sensitivity measures can be calculated from the metamodel (either with Monte Carlo or analytically), which will have a negligible additional computational cost. Importantly, the number of model runs required to fit the metamodel can be orders of

561:

Taking into account uncertainty arising from different sources, whether in the context of uncertainty analysis or sensitivity analysis (for calculating sensitivity indices), requires multiple samples of the uncertain parameters and, consequently, running the model (evaluating the

132:, errors in input data, parameter estimation and approximation procedure, absence of information and poor or partial understanding of the driving forces and mechanisms, choice of underlying hypothesis of model, and so on. This uncertainty limits our confidence in the

756:

There are a large number of approaches to performing a sensitivity analysis, many of which have been developed to address one or more of the constraints discussed above. They are also distinguished by the type of sensitivity measure, be it based on (for example)

699:

is a vector or function. When outputs are correlated, it does not preclude the possibility of performing different sensitivity analyses for each output of interest. However, for models in which the outputs are correlated, the sensitivity measures can be hard to

95:

or system (numerical or otherwise) can be divided and allocated to different sources of uncertainty in its inputs. This involves estimating sensitivity indices that quantify the influence of an input or group of inputs on the output. A related practice is

1240:

One advantage of the local methods is that it is possible to make a matrix to represent all the sensitivities in a system, thus providing an overview that cannot be achieved with global methods if there is a large number of input and output variables.
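A sensitivity matrix of this kind can be illustrated with a small sketch. The code below approximates the partial derivatives with central finite differences, which is an assumption made here for brevity (adjoint or automatic differentiation would normally be cheaper for large models). The names `sensitivity_matrix` and `toy_model` are hypothetical.

```python
from typing import Callable, List


def sensitivity_matrix(model: Callable[[List[float]], List[float]],
                       x0: List[float],
                       h: float = 1e-6) -> List[List[float]]:
    """Rows are outputs, columns are inputs; entry [j][i] approximates dY_j/dX_i at x0."""
    n_out = len(model(x0))
    jac = [[0.0] * len(x0) for _ in range(n_out)]
    for i in range(len(x0)):
        x_plus, x_minus = list(x0), list(x0)
        x_plus[i] += h
        x_minus[i] -= h
        y_plus, y_minus = model(x_plus), model(x_minus)
        for j in range(n_out):
            # Central difference around the fixed point x0.
            jac[j][i] = (y_plus[j] - y_minus[j]) / (2.0 * h)
    return jac


def toy_model(x: List[float]) -> List[float]:
    # Hypothetical two-output model used only for illustration.
    return [x[0] ** 2 + x[1], 3.0 * x[0] * x[1]]


J = sensitivity_matrix(toy_model, [1.0, 2.0])
```

Each row of `J` summarises how one output responds to every input at the chosen point, so the whole matrix gives the overview mentioned above, valid only in the neighbourhood of `x0`.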

1287:, and decompose the output variance into parts attributable to input variables and combinations of variables. The sensitivity of the output to an input variable is therefore measured by the amount of variance in the output caused by that input.
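As a rough illustration of this variance decomposition, the sketch below estimates first-order indices by Monte Carlo using a pick-freeze style estimator. It assumes independent inputs uniformly distributed on (0, 1). The function names, the sample size and the linear toy model are all assumptions made for the example, not part of any particular library.

```python
import random
from typing import Callable, List


def first_order_indices(model: Callable[[List[float]], float],
                        n_inputs: int,
                        n_samples: int = 50000,
                        seed: int = 0) -> List[float]:
    """Estimate first-order variance-based indices for independent U(0, 1) inputs."""
    rng = random.Random(seed)
    A = [[rng.random() for _ in range(n_inputs)] for _ in range(n_samples)]
    B = [[rng.random() for _ in range(n_inputs)] for _ in range(n_samples)]
    y_a = [model(x) for x in A]
    y_b = [model(x) for x in B]

    pooled = y_a + y_b
    mean = sum(pooled) / len(pooled)
    variance = sum((y - mean) ** 2 for y in pooled) / (len(pooled) - 1)

    indices = []
    for i in range(n_inputs):
        # Hybrid sample: every column from A except column i, which comes from B.
        y_ab = [model(a[:i] + [b[i]] + a[i + 1:]) for a, b in zip(A, B)]
        # Centring y_b reduces estimator noise without changing its expectation.
        partial = sum((yb - mean) * (yab - ya)
                      for yb, yab, ya in zip(y_b, y_ab, y_a)) / n_samples
        indices.append(partial / variance)
    return indices


def toy_model(x: List[float]) -> float:
    # Hypothetical additive model: no interactions between inputs.
    return x[0] + 2.0 * x[1]


S = first_order_indices(toy_model, n_inputs=2)
```

For this additive toy model the analytical first-order indices are 0.2 and 0.8 (the input variances are 1/12 and 4/12 of a total of 5/12), so the estimates should land close to those values and sum to roughly one.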

1895:

Variance-based methods allow full exploration of the input space, accounting for interactions, and nonlinear responses. For these reasons they are widely used when it is feasible to calculate them. Typically this calculation involves the use of

2965:

The importance of understanding and managing uncertainty in model results has inspired many scientists from different research centers all over the world to take a close interest in this subject. National and international agencies involved in

2479:

and represent the average marginal contribution of a given factor across all possible combinations of factors. These values are related to Sobol' indices, as their value falls between the first-order Sobol' effect and the total-order effect.

120:(for example in biology, climate change, economics, renewable energy, agronomy...) can be highly complex, and as a result, the relationships between its inputs and outputs may be poorly understood. In such cases, the model can be viewed as a

1023:

Despite its simplicity, however, this approach does not fully explore the input space, since it does not take into account the simultaneous variation of input variables. This means that the OAT approach cannot detect the presence of

143:

In models involving many input variables, sensitivity analysis is an essential ingredient of model building and quality assurance and can be useful to determine the impact of an uncertain variable for a range of purposes, including:

2466:

to represent a multivariate function (the model) in the frequency domain, using a single frequency variable. Therefore, the integrals required to calculate sensitivity indices become univariate, resulting in computational savings.

1227:

1093:

Named after the statistician Max D. Morris, this method is suitable for screening systems with many parameters. It is also known as the method of elementary effects because it combines repeated steps along the various parametric axes.
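The idea of elementary effects can be sketched as follows. This is a simplified variant, assumed here for brevity: each random base point is perturbed in every input separately, rather than following trajectories through a grid as in the original sampling plan. The names `elementary_effects` and `toy_model`, the step `delta` and the number of base points are illustrative assumptions.

```python
import random
import statistics
from typing import Callable, List, Tuple


def elementary_effects(model: Callable[[List[float]], float],
                       n_inputs: int,
                       n_base: int = 50,
                       delta: float = 0.1,
                       seed: int = 0) -> Tuple[List[float], List[float]]:
    """Return (mu_star, sigma): mean absolute value and spread of elementary effects."""
    rng = random.Random(seed)
    effects = [[] for _ in range(n_inputs)]
    for _ in range(n_base):
        # Base point chosen so that x[i] + delta stays inside the unit cube.
        x = [rng.uniform(0.0, 1.0 - delta) for _ in range(n_inputs)]
        y0 = model(x)
        for i in range(n_inputs):
            x_step = list(x)
            x_step[i] += delta          # one step along axis i
            effects[i].append((model(x_step) - y0) / delta)
    mu_star = [statistics.fmean([abs(e) for e in col]) for col in effects]
    sigma = [statistics.stdev(col) for col in effects]
    return mu_star, sigma


def toy_model(x: List[float]) -> float:
    # Hypothetical model: x[0] is linear, x[1] and x[2] interact, x[3] is inert.
    return 5.0 * x[0] + x[1] * x[2]


mu_star, sigma = elementary_effects(toy_model, n_inputs=4)
```

A large `mu_star` flags an influential input, while a large `sigma` suggests that its effect depends on where in the input space the step is taken, as happens for the interacting inputs here. The inert input has both statistics equal to zero and could be screened out.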

2845:

Sensitivity analysis via Monte Carlo filtering is also a sampling-based approach, whose objective is to identify regions in the space of the input factors corresponding to particular values (e.g., high or low) of the output.
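A minimal sketch of Monte Carlo filtering is given below. It assumes uniform inputs on (0, 1), splits the sample according to whether the output exceeds a threshold, and compares the two groups input by input with a two-sample Kolmogorov-Smirnov distance. The function names, the threshold and the toy model are assumptions made for illustration.

```python
import random
from typing import Callable, List


def ks_distance(a: List[float], b: List[float]) -> float:
    """Two-sample Kolmogorov-Smirnov statistic: largest gap between empirical CDFs."""
    a, b = sorted(a), sorted(b)
    i = j = 0
    d = 0.0
    while i < len(a) and j < len(b):
        v = min(a[i], b[j])
        while i < len(a) and a[i] <= v:
            i += 1
        while j < len(b) and b[j] <= v:
            j += 1
        d = max(d, abs(i / len(a) - j / len(b)))
    return d


def monte_carlo_filter(model: Callable[[List[float]], float],
                       n_inputs: int,
                       threshold: float,
                       n_samples: int = 5000,
                       seed: int = 0) -> List[float]:
    """Return, per input, the KS distance between the 'high' and 'low' output groups."""
    rng = random.Random(seed)
    high, low = [], []
    for _ in range(n_samples):
        x = [rng.random() for _ in range(n_inputs)]
        (high if model(x) > threshold else low).append(x)
    return [ks_distance([x[i] for x in high], [x[i] for x in low])
            for i in range(n_inputs)]


def toy_model(x: List[float]) -> float:
    # Hypothetical model in which only the first input matters.
    return x[0] ** 2


distances = monte_carlo_filter(toy_model, n_inputs=2, threshold=0.25)
```

An input whose distribution differs strongly between the two groups (a distance near one) is driving the output into the region of interest, while a distance near zero indicates that the input plays little role in the split.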

2438:

Basically, the higher the variability the more heterogeneous is the response surface along a particular direction/parameter, at a specific perturbation scale. Accordingly, in the VARS framework, the values of directional

2500:). Generally, these methods focus on efficiently (by creating a metamodel of the costly function to be evaluated and/or by “wisely” sampling the factor space) calculating variance-based measures of sensitivity.

5013:

Van der Sluijs, JP; Craye, M; Funtowicz, S; Kloprogge, P; Ravetz, J; Risbey, J (2005). "Combining quantitative and qualitative measures of uncertainty in model based environmental assessment: the NUSAP system".

4012:

Kabir HD, Khosravi A, Nahavandi D, Nahavandi S. Uncertainty Quantification Neural Network from Similarity and Sensitivity. In 2020 International Joint Conference on Neural Networks (IJCNN) 2020 Jul 19 (pp. 1-8).

4855:

Li, G.; Hu, J.; Wang, S.-W.; Georgopoulos, P.; Schoendorf, J.; Rabitz, H. (2006). "Random Sampling-High Dimensional Model Representation (RS-HDMR) and orthogonality of its different order component functions".

1863:

932:

using scatter plots, and observe the behavior of these pairs. The diagrams give an initial idea of the correlation and which input has an impact on the output. Figure 2 shows an example where two inputs,

1739:

596:

Time-consuming models are very often encountered when complex models are involved. A single run of the model takes a significant amount of time (minutes, hours or longer). The use of a statistical model (

4535:

Storlie, C.B.; Swiler, L.P.; Helton, J.C.; Sallaberry, C.J. (2009). "Implementation and evaluation of nonparametric regression procedures for sensitivity analysis of computationally demanding models".

3662:

2956:

This is when one performs sensitivity analysis on one sub-model at a time. This approach is non-conservative, as it might overlook interactions among factors in different sub-models (Type II error).

2488:

The principle is to project the function of interest onto a basis of orthogonal polynomials. The Sobol indices are then expressed analytically in terms of the coefficients of this decomposition.
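The step from expansion coefficients to indices can be illustrated with a short sketch. It assumes the basis polynomials are orthonormal with respect to the input distribution, so that the output variance is the sum of the squared coefficients of all non-constant terms. The function name and the coefficient values are hypothetical.

```python
from typing import Dict, List, Tuple


def sobol_from_pce(coeffs: Dict[Tuple[int, ...], float]) -> Tuple[List[float], List[float]]:
    """Compute first-order and total indices from orthonormal expansion coefficients.

    Each key is a multi-index giving the polynomial degree in every input;
    the all-zero multi-index is the constant (mean) term.
    """
    n_inputs = len(next(iter(coeffs)))
    variance = sum(c * c for alpha, c in coeffs.items() if any(alpha))
    first, total = [], []
    for i in range(n_inputs):
        # First order: terms that involve input i and no other input.
        only_i = sum(c * c for alpha, c in coeffs.items()
                     if alpha[i] > 0 and sum(1 for k in alpha if k > 0) == 1)
        # Total: every term in which input i appears at all.
        any_i = sum(c * c for alpha, c in coeffs.items() if alpha[i] > 0)
        first.append(only_i / variance)
        total.append(any_i / variance)
    return first, total


# Hypothetical coefficients for a two-input expansion.
coeffs = {(0, 0): 1.0, (1, 0): 2.0, (0, 1): 1.0, (1, 1): 1.0, (2, 0): 1.0}
first, total = sobol_from_pce(coeffs)
```

No further model runs or sampling are needed at this stage: once the coefficients are available, the indices follow from sums of squares, which is the main appeal of the approach.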

1469:

2433:

773:

Quantify the uncertainty in each input (e.g. ranges, probability distributions). Note that this can be difficult and many methods exist to elicit uncertainty distributions from subjective data.

158:

Uncertainty reduction, through the identification of model inputs that cause significant uncertainty in the output and should therefore be the focus of attention in order to increase robustness.

3017:

790:

In some cases this procedure will be repeated, for example in high-dimensional problems where the user has to screen out unimportant variables before performing a full sensitivity analysis.

2496:

A number of methods have been developed to overcome some of the constraints discussed above, which would otherwise make the estimation of sensitivity measures infeasible (most often due to

2937:, although even then it may be hard to build distributions with great confidence. The subjectivity of the probability distributions or ranges will strongly affect the sensitivity analysis.

2296:(CDFs) to characterize the maximum distance between the unconditional output distribution and conditional output distribution (obtained by varying all input parameters and by setting the

2727:"Training" the metamodel using the sample data from the model – this generally involves adjusting the metamodel parameters until the metamodel mimics the true model as well as possible.

5112:

2644:

2809:, in conjunction with canonical models such as noisy models. Noisy models exploit information on the conditional independence between variables to significantly reduce dimensionality.

2332:

One of the major shortcomings of the previous sensitivity analysis methods is that none of them considers the spatially ordered structure of the response surface/output of the model

4408:

Haghnegahdar, Amin; Razavi, Saman (September 2017). "Insights into sensitivity analysis of Earth and environmental systems models: On the impact of parameter perturbation scale".

444:

328:

748:

To address the various constraints and challenges, a number of methods for sensitivity analysis have been proposed in the literature, which we will examine in the next section.

2371:

and covariograms, variogram analysis of response surfaces (VARS) addresses this weakness through recognizing a spatially continuous correlation structure to the values of

1529:

2289:-importance measure, the new correlation coefficient of Chatterjee, the Wasserstein correlation of Wiesel and the kernel-based sensitivity measures of Barr and Rabitz.

1001:

One of the simplest and most common approaches is that of changing one-factor-at-a-time (OAT), to see what effect this produces on the output. OAT customarily involves

1900:

methods, but since this can involve many thousands of model runs, other methods (such as metamodels) can be used to reduce computational expense when necessary.

3125:

Saltelli, A.; Ratto, M.; Andreas, T.; Campolongo, F.; Gariboni, J.; Gatelli, D.; Saisana, M.; Tarantola, S. (2008). "Global sensitivity analysis: the primer".

198:

and so on. They propagate through the model and have an impact on the output. The uncertainty on the output is described via uncertainty analysis (represented

136:

of the model's response or output. Further, models may have to cope with the natural intrinsic variability of the system (aleatory), such as the occurrence of

635:, which grows exponentially in size with the number of inputs. Therefore, screening methods can be useful for dimension reduction. Another way to tackle the

2266:, the moment-independent global sensitivity measure satisfies zero-independence. This is a relevant statistical property also known as Renyi's postulate D.

1159:

3012:

1908:

Moment-independent methods extend variance-based techniques by considering the probability density or cumulative distribution function of the model output

4055:

Borgonovo, E., Tarantola, S., Plischke, E., Morris, M. D. (2014). "Transformations and invariance in the sensitivity analysis of computer experiments".

4677:"Categorical Inputs, Sensitivity Analysis, Optimization and Importance Tempering with tgp Version 2, an R Package for Treed Gaussian Process Models"

4444:

3007:

651:

between model inputs, but sometimes inputs can be strongly correlated. Correlations between inputs must then be taken into account in the analysis.

167:

Enhancing communication from modelers to decision makers (e.g. by making recommendations more credible, understandable, compelling or persuasive).

5123:

Science Advice for Policy by European Academies, Making sense of science for policy under conditions of complexity and uncertainty, Berlin, 2019.

1644:

184:

To identify important connections between observations, model inputs, and predictions or forecasts, leading to the development of better models.

1294:: they represent the proportion of variance explained by an input or group of inputs. This expression essentially measures the contribution of

164:

Model simplification – fixing model inputs that have no effect on the output, or identifying and removing redundant parts of the model structure.

776:

Identify the model output to be analysed (the target of interest should ideally have a direct relation to the problem tackled by the model).

3158:

Saltelli, A.; Tarantola, S.; Campolongo, F.; Ratto, M. (2004). "Sensitivity analysis in practice: a guide to assessing scientific models".

2858:

in the conclusions of the study, sensitivity analysis tries to identify what source of uncertainty weighs more on the study's conclusions.

1388:

170:

Finding regions in the space of input factors for which the model output is either maximum or minimum or meets some optimum criterion (see

2316:-th input, consequentially). The difference between the unconditional and conditional output distribution is usually calculated using the

810:(vertical axis) is a function of four factors. The points in the four scatterplots are always the same though sorted differently, i.e. by

4812:

Cardenas, IC (2019). "On the use of Bayesian networks as a meta-modeling approach to analyse uncertainties in slope stability analysis".

3564:

2950:

This may be acceptable for the quality assurance of sub-models but should be avoided when presenting the results of the overall analysis.

5235:

1744:

4562:

Wang, Shangying; Fan, Kai; Luo, Nan; Cao, Yangxiaolu; Wu, Feilun; Zhang, Carolyn; Heller, Katherine A.; You, Lingchong (2019-09-25).

3753:

Bailis, R.; Ezzati, M.; Kammen, D. (2005). "Mortality and Greenhouse Gas Impacts of Biomass and Petroleum Energy Futures in Africa".

2983:

5204:

2292:

Another measure for global sensitivity analysis, in the category of moment-independent approaches, is the PAWN index. It relies on

1040:

which has a volume only 1/6th of the total parameter space. More generally, the convex hull of the axes of a hyperrectangle forms a

2899:

In uncertainty and sensitivity analysis there is a crucial trade-off between how scrupulous an analyst is in exploring the input

2009:, can be defined through an equation similar to variance-based indices replacing the conditional expectation with a distance, as

3235:

Bahremand, A.; De Smedt, F. (2008). "Distributed Hydrological Modeling and Sensitivity Analysis in Torysa Watershed, Slovakia".

124:, i.e. the output is an "opaque" function of its inputs. Quite often, some or all of the model inputs are subject to sources of

3806:

Murphy, J.; et al. (2004). "Quantification of modelling uncertainties in a large ensemble of climate change simulations".

4777:

Ratto, M.; Pagano, A. (2010). "Using recursive algorithms for the efficient identification of smoothing spline ANOVA models".

5075:

Lo Piano, S; Robinson, M (2019). "Nutrition and public health economic evaluations under the lenses of post normal science".

2320:(KS). The PAWN index for a given input parameter is then obtained by calculating the summary statistics over all KS values.
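The procedure outlined for the PAWN index can be sketched as below. Several choices are assumptions made for this example and are not taken from the text: inputs are uniform on (0, 1), the conditioning values are drawn at random, the sample sizes are small, and the median is used as the summary statistic over the KS values. The names `pawn_index`, `ks_distance` and `toy_model` are hypothetical.

```python
import random
import statistics
from typing import Callable, List


def ks_distance(a: List[float], b: List[float]) -> float:
    """Largest gap between the empirical CDFs of two samples."""
    a, b = sorted(a), sorted(b)
    i = j = 0
    d = 0.0
    while i < len(a) and j < len(b):
        v = min(a[i], b[j])
        while i < len(a) and a[i] <= v:
            i += 1
        while j < len(b) and b[j] <= v:
            j += 1
        d = max(d, abs(i / len(a) - j / len(b)))
    return d


def pawn_index(model: Callable[[List[float]], float],
               n_inputs: int,
               i: int,
               n_cond: int = 10,
               n_samples: int = 1000,
               seed: int = 0) -> float:
    """Median KS distance between unconditional and conditional output samples."""
    rng = random.Random(seed)
    # Unconditional output sample: all inputs vary.
    y_uncond = [model([rng.random() for _ in range(n_inputs)])
                for _ in range(n_samples)]
    ks_values = []
    for _ in range(n_cond):
        fixed = rng.random()            # conditioning value for input i
        y_cond = []
        for _ in range(n_samples):
            x = [rng.random() for _ in range(n_inputs)]
            x[i] = fixed                # all inputs vary except input i
            y_cond.append(model(x))
        ks_values.append(ks_distance(y_uncond, y_cond))
    return statistics.median(ks_values)


def toy_model(x: List[float]) -> float:
    # Hypothetical model in which only the first input matters.
    return x[0] ** 2


pawn_0 = pawn_index(toy_model, n_inputs=2, i=0)
pawn_1 = pawn_index(toy_model, n_inputs=2, i=1)
```

Fixing an influential input changes the output distribution markedly, which gives a large index. Fixing an inert input leaves the distribution essentially unchanged, so the index stays near the sampling noise level.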

885:

The first intuitive approach (especially useful in less complex cases) is to analyze the relationship between each input

758:

666:

553:(by calculating the corresponding sensitivity indices). Figure 1 provides a schematic representation of this statement.

2555:. By running the model at a number of points in the input space, it may be possible to fit a much simpler metamodel

2293:

648:

68:

1279:

Variance-based methods are a class of probabilistic approaches which quantify the input and output uncertainties as

589:

Sensitivity analysis is almost always performed by running the model a (possibly large) number of times, i.e. a

5374:

2596:

794:

the methods that takes into account the four important sensitivity analysis parameters has also been proposed.

2647:

magnitude less than the number of runs required to directly estimate the sensitivity measures from the model.

5359:

4630:

Oakley, J.; O'Hagan, A. (2004). "Probabilistic sensitivity analysis of complex models: a Bayesian approach".

3903:

2822:

631:

The model has a large number of uncertain inputs. Sensitivity analysis is essentially the exploration of the

358:

3375:"Quasi-Monte Carlo technique in global sensitivity analysis of wind resource assessment with a study on UAE"

707:

separate the variability of the output due to the variability of the inputs from that due to stochasticity.

3329:

Effective Groundwater Model Calibration, with Analysis of Data, Sensitivities, Predictions, and Uncertainty

1262:

499:

206:

showing the proportion that each source of uncertainty contributes to the total uncertainty of the output).

199:

4564:"Massive computational acceleration by using neural networks to emulate mechanism-based biological models"

4279:"A simple and efficient method for global sensitivity analysis based on cumulative distribution functions"

1641:

and its interactions with any of the other input variables. The total effect index is given as follows:

3417:"Generalized Hoeffding-Sobol decomposition for dependent variables - application to sensitivity analysis"

3075:

2317:

1008:

returning the variable to its nominal value, then repeating for each of the other inputs in the same way.

996:

398:

269:

2854:

Sensitivity analysis is closely related to uncertainty analysis; while the latter studies the overall

1265:

is large. The advantages of regression analysis are that it is simple and has a low computational cost.

161:

Searching for errors in the model (by encountering unexpected relationships between inputs and outputs).

3090:

2782:, where a succession of simple regressions are used to weight data points to sequentially reduce error.

125:

105:

101:

4985:

Hornberger, G.; Spear, R. (1981). "An approach to the preliminary analysis of environmental systems".

4361:"A new framework for comprehensive, robust, and efficient global sensitivity analysis: 2. Application"

2098:

4500:

4250:

Barr, J., Rabitz, H. (31 March 2022). "A Generalized Kernel Method for Global Sensitivity Analysis".

3559:

3040:

2970:

studies have included sections devoted to sensitivity analysis in their guidelines. Examples are the

2732:

2721:

Sampling (running) the model at a number of points in its input space. This requires a sample design.

766:

155:

Increased understanding of the relationships between input and output variables in a system or model.

43:

202:

on the output) and their relative importance is quantified via sensitivity analysis (represented by

181:

For calibration of models with a large number of parameters, by focusing on the sensitive parameters.

5164:

5135:

3523:

3206:"Sensitivity Analysis of Normative Economic Models: Theoretical Framework and Practical Strategies"

5261:

Pianosi, F.; Beven, K.; Freer, J.; Hall, J.W.; Rougier, J.; Stephenson, D.B.; Wagener, T. (2016).

4644:

1503:

4676:

4312:"A new framework for comprehensive, robust, and efficient global sensitivity analysis: 1. Theory"

5003:

Box GEP, Hunter WG, Hunter, J. Stuart. Statistics for experimenters. New York: Wiley & Sons

3932:

Morris, M. D. (1991). "Factorial Sampling Plans for Preliminary Computational Experiments".

2523:

2335:

5274:

5263:"Sensitivity analysis of environmental models: A systematic review with practical workflow"

3278:

Hill, M.; Kavetski, D.; Clark, M.; Ye, M.; Arabi, M.; Lu, D.; Foglia, L.; Mehl, S. (2015).

3244:

3070:

2874:

2861:

The problem setting in sensitivity analysis also has strong similarities with the field of

2516:

approaches that involve building a relatively simple mathematical function, known as an

5255:

International Series in Management Science and Operations Research, Springer New York.

2998:

The following pages discuss sensitivity analyses in relation to specific applications:

4205:

Wiesel, J. C. W. (November 2022). "Measuring association with Wasserstein distances".

3560:"An efficient methodology for modeling complex computer codes with Gaussian processes"

2821:. In all cases, it is useful to check the accuracy of the emulator, for example using

1222:$\left|\frac{\partial Y}{\partial X_i}\right|_{\mathbf{x}^0}$,

2269:

The class of moment-independent sensitivity measures includes indicators such as the

4750:

Sudret, B. (2008). "Global sensitivity analysis using polynomial chaos expansions".

3792:

3177:

Der Kiureghian, A.; Ditlevsen, O. (2009). "Aleatory or epistemic? Does it matter?".

1261:

model response is in fact linear; linearity can be confirmed, for instance, if the

1005:

moving one input variable, keeping others at their baseline (nominal) values, then,

3698:"Photosynthetic Control of Atmospheric Carbonyl Sulfide During the Growing Season"

3467:

3416:

786:

Using the resulting model outputs, calculate the sensitivity measures of interest.

724:) from the available data (that we use for training) to approximate the code (the

506:,...), sensitivity analysis aims to measure and quantify the impact of each input

5327:. Mathematics in Science and Engineering, 177. Academic Press, Inc., Orlando, FL.

4955:

3602:

3519:"Global sensitivity analysis of stochastic computer models with joint metamodels"

3080:

1280:

1041:

149:

4899:

Li, G. (2002). "Practical approaches to construct RS-HDMR component functions".

4814:

Georisk: Assessment and Management of Risk for Engineered Systems and Geohazards

4736:

3999:

Evaluating Derivatives, Principles and Techniques of Algorithmic Differentiation

3899:"Threshold for the volume spanned by random points with independent coordinates"

2900:

2508:

Metamodels (also known as emulators, surrogate models or response surfaces) are

5332:

Ecosystem Modeling in Theory and Practice: An Introduction with Case Histories.

4763:

4587:

4548:

4516:

4213:(4). Bernoulli Society for Mathematical Statistics and Probability: 2816–2832.

4138:

3697:

3587:

3359:

2773:

2736:

2463:

495:

4790:

3917:

3898:

3536:

3256:

1012:

Sensitivity may then be measured by monitoring changes in the output, e.g. by

838:

in turn. Note that the abscissa is different for each plot: (−5, +5) for

5353:

4595:

4394:

4345:

4236:

4191:

4146:

4111:

4076:

4036:

Sobol', I (1993). "Sensitivity analysis for non-linear mathematical models".

4024:

Sobol', I (1990). "Sensitivity estimates for nonlinear mathematical models".

3544:

3503:

3452:

2924:

uncertainties in inputs must be suppressed lest outputs become indeterminate.

2769:

2740:

2509:

2476:

1088:

4057:

Journal of the Royal Statistical Society. Series B (Statistical Methodology)

3776:

3723:

3672:

3646:

3629:

2758:), where any combination of output points is assumed to be distributed as a

5320:

5053:

4963:

4885:

4613:

3837:

3784:

3731:

3344:"Survey of sampling based methods for uncertainty and sensitivity analysis"

3313:

3060:

1076:

171:

5307:

Useless Arithmetic. Why Environmental Scientists Can't Predict the Future.

4696:

3134:

4385:

4360:

4336:

4311:

3984:

Sensitivity and Uncertainty Analysis: Applications to Large-Scale Systems

3660:

2931:

Not enough information to build probability distributions for the inputs:

2855:

1033:

108:; ideally, uncertainty and sensitivity analysis should be run in tandem.

88:

3829:

3342:

Helton, J. C.; Johnson, J. D.; Salaberry, C. J.; Storlie, C. B. (2006).

769:. In general, however, most procedures adhere to the following outline:

5297:

5177:

5148:

5044:

4263:

4228:

4103:

4068:

3953:

3883:

3296:

3279:

2817:

problem, which can be difficult if the response of the model is highly

1037:

676:

491:

137:

4920:

4877:

4485:

3495:

3444:

3393:

3305:

5012:

4160:

Chatterjee, S. (2 October 2021). "A New Coefficient of Correlation".

3085:

3035:

2904:

2818:

2440:

2368:

632:

239:

203:

193:

121:

5133:

Leamer, Edward E. (1983). "Let's Take the Con Out of Econometrics".

5113:

European Commission. 2021. “Better Regulation Toolbox.” November 25.

3945:

3875:

2688:(metamodel) that is a sufficiently close approximation to the model

81:

Study of uncertainty in the output of a mathematical model or system

5253:

Sensitivity Analysis: An Introduction for the Management Scientist.

4712:

4219:

4174:

4125:

Borgonovo, E. (June 2007). "A new uncertainty importance measure".

2762:. Recently, "treed" Gaussian processes have been used to deal with

1341:(averaged over variations in other variables), and is known as the

152:

of the results of a model or system in the presence of uncertainty.

4472:

Owen, A. B. (1 January 2014). "Sobol' Indices and Shapley Value".

3578:

3486:

3468:"Sensitivity analysis for multidimensional and functional outputs"

3435:

661:, can inaccurately measure sensitivity when the model response is

605:

2755:

2367:

in the parameter space. By utilizing the concepts of directional

2323:

3280:"Practical use of computationally frugal model analysis methods"

3157:

2993:

2828:

2492:

Complementary research approaches for time-consuming simulations

1858:{\displaystyle X_{\sim i}=(X_{1},...,X_{i-1},X_{i+1},...,X_{p})}

1028:

between input variables and is unsuitable for nonlinear models.

4713:"Bayesian sensitivity analysis of bifurcating nonlinear models"

4501:"Global sensitivity analysis using polynomial chaos expansions"

3982:

Cacuci, Dan G.; Ionescu-Bujor, Mihaela; Navon, Michael (2005).

1531:

denote the variance and expected value operators respectively.

806:

Figure 2. Sampling-based sensitivity analysis by scatterplots.

5345:

Web site with material from SAMO conference series (1995-2025)

4480:(1). Society for Industrial and Applied Mathematics: 245–251.

3628:

Sacks, J.; Welch, W. J.; Mitchell, T. J.; Wynn, H. P. (1989).

3510:

3124:

5162:

Leamer, Edward E. (1985). "Sensitivity Analyses Would Help".

4534:

3459:

3341:

2922:

expressed by Jerome R. Ravetz, for whom bad modeling is when

1252:, in the context of sensitivity analysis, involves fitting a

783:, dictated by the method of choice and the input uncertainty.

657:

Some sensitivity analysis approaches, such as those based on

5334:

John Wiley & Sons, New York, NY. ISBN 978-0-471-34165-9.

4258:(1). Society for Industrial and Applied Mathematics: 27–54.

3862:(1999). "One-Factor-at-a-Time Versus Designed Experiments".

2907:

may be. The point is well illustrated by the econometrician

2724:

Selecting a type of emulator (mathematical function) to use.

2451:

4090:

Rényi, A. (1 September 1959). "On measures of dependence".

3557:

1734:{\displaystyle S_{i}^{T}=1-{\frac {V(\mathbb {E} [Y\mid X_{\sim i}])}{V(Y)}}}
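As a hedged illustration of the total effect index, one minus the share of output variance explained by all inputs other than X_i, the sketch below uses a Jansen-type Monte Carlo estimator with two independent sample matrices. The function name `total_effect_indices`, the assumption of independent uniform inputs on [0, 1], the sample size and the toy model Y = X1·X2 are all choices made for this example rather than anything prescribed by the source.

```python
import random


def total_effect_indices(f, p, n=20000, seed=0):
    """Jansen-type estimate of the total effect index for each of p inputs.

    Assumes independent inputs, each uniform on [0, 1].
    """
    rng = random.Random(seed)
    A = [[rng.random() for _ in range(p)] for _ in range(n)]
    B = [[rng.random() for _ in range(p)] for _ in range(n)]
    yA = [f(row) for row in A]
    yB = [f(row) for row in B]

    # Output variance V(Y), estimated from the pooled base samples.
    pooled = yA + yB
    mean = sum(pooled) / len(pooled)
    var = sum((y - mean) ** 2 for y in pooled) / (len(pooled) - 1)

    indices = []
    for i in range(p):
        acc = 0.0
        for j in range(n):
            ab = list(A[j])
            ab[i] = B[j][i]          # resample only input i
            acc += (yA[j] - f(ab)) ** 2
        # E[(f(A) - f(AB_i))^2] / 2 estimates E[V(Y | X_~i)]
        indices.append(acc / (2.0 * n) / var)
    return indices


# Toy model with an interaction term (illustrative only).
st = total_effect_indices(lambda x: x[0] * x[1], 2)
```

For this toy model the analytic total effect index of each input is 4/7, larger than the first-order index of 3/7, because the interaction between the two inputs is counted in both total indices.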

210:

The object of study for sensitivity analysis is a function

3558:

Marrel, A.; Iooss, B.; Van Dorpe, F.; Volkova, E. (2008).

3516:

2892:

Some common difficulties in sensitivity analysis include:

1588:

has with other variables. A further measure, known as the

556:

188:

3517:

Marrel, A.; Iooss, B.; Da Veiga, S.; Ribatet, M. (2012).

2650:

Clearly, the crux of a metamodel approach is to find an

679:, sensitivity analysis extends to cases where the output

593:-based approach. This can be a significant problem when:

4934:

Rabitz, H (1989). "System analysis at molecular scale".

3981:

3661:

Da Veiga, S., Gamboa, F., Iooss, B., Prieur, C. (2021).

1561:

does not measure the uncertainty caused by interactions

4447:. In Petropoulos, George; Srivastava, Prashant (eds.).

4445:"Challenges and Future Outlook of Sensitivity Analysis"

3465:

3176:

2462:

The Fourier amplitude sensitivity test (FAST) uses the

639:

is to use sampling based on low discrepancy sequences.

628:-function is one way of reducing the computation costs.

4276:

3627:

3604:

Uncertain Judgements: Eliciting Experts' Probabilities

3466:

Gamboa, F.; Janon, A.; Klein, T.; Lagnoux, A. (2014).

533:

or a group of inputs on the variability of the output

5260:

3896:

3414:

3320:

2694:

2656:

2599:

2561:

2526:

2397:

2377:

2338:

2302:

2275:

2236:

2213:

2167:

2140:

2101:

2015:

1988:

1961:

1938:

1914:

1871:

1747:

1647:

1620:

1600:

1567:

1540:

1506:

1477:

1464:{\displaystyle S_{i}={\frac {V(\mathbb {E} [Y\mid X_{i}])}{V(Y)}}}
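The first-order index, the variance of the conditional expectation of Y given X_i divided by the variance of Y, can be estimated by sampling. The following is a minimal sketch under stated assumptions: inputs are independent and uniform on [0, 1], the estimator is the pick-and-freeze form described by Saltelli and co-authors with the output centred to reduce noise, and the function name `first_order_sobol` and the linear toy model are hypothetical.

```python
import random


def first_order_sobol(f, p, n=20000, seed=0):
    """Pick-and-freeze estimate of the first-order index for each of p inputs.

    Assumes independent inputs, each uniform on [0, 1].
    """
    rng = random.Random(seed)
    A = [[rng.random() for _ in range(p)] for _ in range(n)]
    B = [[rng.random() for _ in range(p)] for _ in range(n)]
    yA = [f(row) for row in A]
    yB = [f(row) for row in B]

    # Output mean and variance from the pooled base samples.
    pooled = yA + yB
    mean = sum(pooled) / len(pooled)
    var = sum((y - mean) ** 2 for y in pooled) / (len(pooled) - 1)

    indices = []
    for i in range(p):
        acc = 0.0
        for j in range(n):
            ab = list(A[j])
            ab[i] = B[j][i]          # row of A with input i taken from B
            # f(B) and f(AB_i) share only X_i, so this product averages
            # to V(E[Y | X_i]); centring yB reduces estimator variance.
            acc += (yB[j] - mean) * (f(ab) - yA[j])
        indices.append(acc / n / var)
    return indices


# Toy additive model (illustrative only): Y = X1 + 2*X2
s = first_order_sobol(lambda x: x[0] + 2.0 * x[1], 2)
```

For this additive toy model the analytic values are 0.2 and 0.8, and they sum to one because there are no interactions. The cost is n·(p + 2) model runs, which is why emulators are often used when the model is expensive.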

1391:

1362:

1327:

1300:

1162:

1132:

1112:

1050:

966:

939:

918:

891:

730:

685:

614:

568:

539:

512:

476:

452:

401:

361:

339:

272:

248:

216:

4854:

4038:

Mathematical Modeling & Computational Experiment

2428:{\displaystyle {\frac {\partial Y}{\partial x_{i}}}}

5312:Santner, T. J.; Williams, B. J.; Notz, W.I. (2003)

4449:

Sensitivity Analysis in Earth Observation Modelling

3277:

4710:

4407:

4249:

3752:

2933:Probability distributions can be constructed from

2709:

2680:

2638:

2585:

2547:

2427:

2383:

2359:

2308:

2281:

2254:

2219:

2195:

2153:

2122:

2087:

2001:

1974:

1944:

1920:

1884:

1857:

1733:

1633:

1606:

1580:

1553:

1523:

1492:

1463:

1375:

1333:

1313:

1221:

1145:

1118:

1102:Local derivative-based methods involve taking the

1067:

979:

952:

924:

904:

736:

691:

620:

574:

545:

525:

482:

458:

438:

385:

345:

322:

254:

222:

5380:Mathematical and quantitative methods (economics)

5325:Design sensitivity analysis of structural systems

4629:

3234:

1097:

5351:

5074:

4359:Razavi, Saman; Gupta, Hoshin V. (January 2016).

4310:Razavi, Saman; Gupta, Hoshin V. (January 2016).

4204:

4092:Acta Mathematica Academiae Scientiarum Hungarica

647:Most common sensitivity analysis methods assume

470:aims to describe the distribution of the output

4984:

4162:Journal of the American Statistical Association

3372:

1955:The moment-independent sensitivity measures of

1290:This amount is quantified and calculated using

751:

4474:SIAM/ASA Journal on Uncertainty Quantification

4252:SIAM/ASA Journal on Uncertainty Quantification

4159:

3551:

3415:Chastaing, G.; Gamboa, F.; Prieur, C. (2012).

2960:

1865:denotes the set of all input variables except

1534:Importantly, the first-order sensitivity index of

4561:

4124:

4118:

4048:

3931:

3630:"Design and Analysis of Computer Experiments"

3271:

2994:Specific applications of sensitivity analysis

2829:High-dimensional model representations (HDMR)

1903:

4674:

4451:(1st ed.). Elsevier. pp. 397–415.

3969:Sensitivity and Uncertainty Analysis: Theory

3565:Computational Statistics & Data Analysis

3326:

2887:

1928:. Thus, they do not refer to any particular

1692:

1425:

5314:Design and Analysis of Computer Experiments

5305:Pilkey, O. H. and L. Pilkey-Jarvis (2007),

5108:

5106:

4776:

4752:Reliability Engineering & System Safety

4711:Becker, W.; Worden, K.; Rowson, J. (2013).

4537:Reliability Engineering & System Safety

4471:

4442:

4358:

4309:

4127:Reliability Engineering & System Safety

3600:

3382:Journal of Renewable and Sustainable Energy

779:Run the model a number of times using some

665:with respect to its inputs. In such cases,

4492:

4153:

4035:

4023:

3118:

2731:Sampling the model can often be done with

5296:

5286:

5068:

5043:

4997:

4695:

4643:

4603:

4530:

4528:

4526:

4384:

4335:

4294:

4218:

4173:

4089:

3916:

3664:Basics and Trends in Sensitivity Analysis

3645:

3577:

3485:

3434:

3348:Reliability Engineering and System Safety

3295:

2984:Intergovernmental Panel on Climate Change

2639:{\displaystyle {\hat {f}}(X)\approx f(X)}

2452:Fourier amplitude sensitivity test (FAST)

2324:Variogram analysis of response surfaces (

1682:

1508:

1415:

1268:

716:data. We then build a statistical model (


5194:, New Internationalist Publications Ltd.

5103:

4811:

4717:Mechanical Systems and Signal Processing

4168:(536). Taylor & Francis: 2009–2022.

4005:

3996:

3695:

3373:Tsvetkova, O.; Ouarda, T.B.M.J. (2019).

3228:

2840:

987:are highly correlated with the output.

801:

192:

4283:Environmental Modelling & Software

3858:

3203:

3197:

2868:

1383:, the Sobol index is defined as follows:

1321:alone to the uncertainty (variance) in

557:Challenges, settings and related issues

189:Mathematical formulation and vocabulary

14:

5352:

5330:Hall, C. A. S. and Day, J. W. (1977).

5267:Environmental Modelling & Software


5161:

5132:

4933:

4749:

4625:

4623:

4523:

4498:

4410:Environmental Modelling & Software

4198:

3897:Gatzouras, D; Giannopoulos, A (2009).

3805:

3408:

2948:Too many model outputs are considered:

1244:

990:

5006:

4779:AStA Advances in Statistical Analysis

4675:Gramacy, R. B.; Taddy, M. A. (2010).

4083:

3151:

3051:Fourier amplitude sensitivity testing

2835:high-dimensional model representation

2800:high-dimensional model representation

2776:are trained, and the result averaged.

2717:. This requires the following steps,

2458:Fourier amplitude sensitivity testing

5309:New York: Columbia University Press.

4270:

4243:

3940:(2). Taylor & Francis: 161–174.

2813:The use of an emulator introduces a

2798:, normally used in conjunction with

2792:to approximate the response surface.

2483:

1258:standardized regression coefficients

1079:, true linearity is rare in nature.

395:The variability in input parameters

26:

4987:Journal of Environmental Management

4620:

4505:Bayesian Networks in Dependability

3986:. Vol. II. Chapman & Hall.

3096:Variance-based sensitivity analysis

2849:

1275:Variance-based sensitivity analysis

1203:

439:{\displaystyle X_{i},i=1,\ldots ,p}

323:{\displaystyle X=(X_{1},...,X_{p})}

24:

5245:

4898:

3971:. Vol. I. Chapman & Hall.

3966:

3696:Campbell, J.; et al. (2008).

3222:10.1111/j.1574-0862.1997.tb00449.x

2988:US Environmental Protection Agency

2760:multivariate Gaussian distribution

2470:

2409:

2401:

2391:, and hence also to the values of

1179:

1171:

797:

25:

5391:

5338:

5319:Haug, Edward J.; Choi, Kyung K.;

4277:Pianosi, F., Wagener, T. (2015).

3601:O'Hagan, A.; et al. (2006).

3046:Experimental uncertainty analysis

2448:and derivative-based approaches.

2294:Cumulative Distribution Functions

873:is most important in influencing

5036:10.1111/j.1539-6924.2005.00604.x

4654:10.1111/j.1467-9868.2004.05304.x

3473:Electronic Journal of Statistics

3422:Electronic Journal of Statistics

2941:Unclear purpose of the analysis:

2123:{\displaystyle d(\cdot ,\cdot )}

1256:to the model response and using

1126:with respect to an input factor

1036:bounding all these points is an

31:

5197:

5184:

5155:

5126:

5117:

4978:

4927:

4892:

4858:Journal of Physical Chemistry A

4848:

4805:

4770:

4743:

4704:

4684:Journal of Statistical Software

4668:

4555:

4465:

4436:

4401:

4352:

4303:

4017:

3990:

3975:

3960:

3925:

3890:

3852:

3799:

3746:

3689:

3654:

3621:

3594:

3327:Hill, M.; Tiedeman, C. (2007).

2980:Office of Management and Budget

2802:(HDMR) truncations (see below).

1044:which has a volume fraction of

717:

673:Multiple or functional outputs:

597:

100:, which has a greater focus on

3366:

3335:

3191:10.1016/j.strusafe.2008.06.020

3170:

3018:Multi-criteria decision making

2704:

2698:

2675:

2669:

2663:

2633:

2627:

2618:

2612:

2606:

2580:

2574:

2568:

2542:

2536:

2354:

2348:

2243:

2240:

2177:

2134:between probability measures,

2117:

2105:

2082:

2079:

2063:

2038:

2032:

1852:

1764:

1725:

1719:

1711:

1708:

1686:

1678:

1594:, gives the total variance in

1518:

1512:

1487:

1481:

1455:

1449:

1441:

1438:

1419:

1411:

1098:Derivative-based local methods

877:as it imparts more 'shape' on

377:

371:

317:

279:


13:

1:

5288:10.1016/j.envsoft.2016.02.008

5089:10.1016/j.futures.2019.06.008

4901:Journal of Physical Chemistry

4834:10.1080/17499518.2018.1498524

4430:10.1016/j.envsoft.2017.03.031

4296:10.1016/j.envsoft.2015.01.004

4184:10.1080/01621459.2020.1758115

4026:Matematicheskoe Modelirovanie

3904:Israel Journal of Mathematics

3112:

2974:(see e.g. the guidelines for

2772:, in which a large number of

2681:{\displaystyle {\hat {f}}(X)}

2586:{\displaystyle {\hat {f}}(X)}

2503:

1344:first-order sensitivity index

446:have an impact on the output

111:

5192:No-Nonsense Guide to Science

4956:10.1126/science.246.4927.221

4443:Gupta, H; Razavi, S (2016).

2766:and discontinuous responses.

1524:{\displaystyle \mathbb {E} }

1263:coefficient of determination

752:Sensitivity analysis methods

633:multidimensional input space

7:

5370:Business intelligence terms

4737:10.1016/j.ymssp.2012.05.010

4034:; translated in English in

3076:Probability bounds analysis

3028:

2961:SA in international context

2903:and how wide the resulting

2897:Assumptions vs. inferences:

2786:Polynomial chaos expansions

2196:{\displaystyle P_{Y|X_{i}}}

997:One-factor-at-a-time method

10:

5396:

4764:10.1016/j.ress.2007.04.002

4588:10.1038/s41467-019-12342-y

4549:10.1016/j.ress.2009.05.007

4517:10.1016/j.ress.2007.04.002

4139:10.1016/J.RESS.2006.04.015

3588:10.1016/j.csda.2008.03.026

3360:10.1016/j.ress.2005.11.017

3237:Water Resources Management

3091:Uncertainty quantification

2872:

2455:

2088:{\displaystyle \xi _{i}=\mathbb {E} [d(P_{Y},P_{Y|X_{i}})]}

1904:Moment-independent methods

1272:

1086:

994:

353:, presented as follows:

106:propagation of uncertainty

102:uncertainty quantification

4791:10.1007/s10182-010-0148-8

3918:10.1007/s11856-009-0007-z

3537:10.1007/s11222-011-9274-8

3257:10.1007/s11269-007-9168-x

3041:Elementary effects method

2888:Pitfalls and difficulties

2733:low-discrepancy sequences

2255:{\displaystyle d(\cdot ,\cdot )\geq 0}

1493:{\displaystyle V(\cdot )}

1285:probability distributions

1082:

675:Generally introduced for

5165:American Economic Review

5136:American Economic Review

4365:Water Resources Research

4316:Water Resources Research

4133:(6). Elsevier: 771–784.

3524:Statistics and Computing

3331:. John Wiley & Sons.

3056:Info-gap decision theory

2990:'s modeling guidelines.

2745:Latin hypercube sampling

2475:Shapley effects rely on

2002:{\displaystyle \xi _{i}}

1283:, represented via their

87:is the study of how the


3777:10.1126/science.1106881

3724:10.1126/science.1164015

3673:10.1137/1.9781611976694

3204:Pannell, D. J. (1997).

2739:– due to mathematician

2318:Kolmogorov–Smirnov test

2282:{\displaystyle \delta }

2205:conditional probability

759:variance decompositions

667:variance-based measures

637:curse of dimensionality

386:{\displaystyle Y=f(X).}

5251:Borgonovo, E. (2017).

3210:Agricultural Economics

3127:John Wiley \& Sons

3003:Environmental sciences

2954:Piecewise sensitivity:

2918:

2790:orthogonal polynomials

2711:

2682:

2640:

2587:

2549:

2548:{\displaystyle Y=f(X)}

2429:

2385:

2361:

2360:{\displaystyle Y=f(X)}

2310:

2283:

2256:

2221:

2197:

2155:

2124:

2089:

2003:

1976:

1946:

1922:

1886:

1859:

1735:

1635:

1608:

1582:

1555:

1525:

1494:

1465:

1377:

1335:

1315:

1269:Variance-based methods

1223:

1147:

1120:

1069:

981:

954:

926:

906:

882:

852:, (−10, +10) for

738:

693:

622:

587:Computational expense:

576:

547:

527:

484:

460:

440:

387:

347:

324:

256:

224:

207:

5375:Mathematical modeling

4697:10.18637/jss.v033.i06

4568:Nature Communications

3997:Griewank, A. (2000).

3864:American Statistician

3647:10.1214/ss/1177012413

3607:. Chichester: Wiley.

3135:10.1002/9780470725184

3066:Perturbation analysis

2944:values of the output.

2914:

2863:design of experiments

2841:Monte Carlo filtering

2712:

2683:

2641:

2588:

2550:

2498:computational expense

2430:

2386:

2362:

2311:

2284:

2257:

2222:

2203:are the marginal and

2198:

2156:

2154:{\displaystyle P_{Y}}

2125:

2090:

2004:

1977:

1975:{\displaystyle X_{i}}

1947:

1923:

1887:

1885:{\displaystyle X_{i}}

1860:

1736:

1636:

1634:{\displaystyle X_{i}}

1609:

1583:

1581:{\displaystyle X_{i}}

1556:

1554:{\displaystyle X_{i}}

1526:

1495:

1466:

1378:

1376:{\displaystyle X_{i}}

1336:

1316:

1314:{\displaystyle X_{i}}

1224:

1148:

1146:{\displaystyle X_{i}}

1121:

1070:

982:

980:{\displaystyle Z_{4}}

955:

953:{\displaystyle Z_{3}}

927:

907:

905:{\displaystyle Z_{i}}

805:

781:design of experiments

739:

713:Data-driven approach:

694:

669:are more appropriate.

623:

577:

548:

528:

526:{\displaystyle X_{i}}

485:

461:

441:

388:

348:

325:

257:

225:

196:

176:Monte Carlo filtering

130:errors of measurement

5360:Sensitivity analysis

5190:Ravetz, J.R., 2007,

4386:10.1002/2015WR017559

4337:10.1002/2015WR017558

3354:(10–11): 1175–1209.

3160:Wiley Online Library

3071:Probabilistic design

2875:Sensitivity auditing

2869:Sensitivity auditing

2710:{\displaystyle f(X)}

2692:

2654:

2597:

2559:

2524:

2395:

2375:

2336:

2300:

2273:

2234:

2211:

2165:

2138:

2132:statistical distance

2099:

2013:

1986:

1959:

1936:

1912:

1869:

1745:

1645:

1618:

1598:

1565:

1538:

1504:

1475:

1389:

1360:

1325:

1298:

1232:where the subscript

1160:

1130:

1110:

1068:{\displaystyle 1/n!}

1048:

964:

937:

916:

889:

845:, (−8, +8) for

728:

683:

612:

566:

537:

510:

474:

468:uncertainty analysis

450:

399:

359:

337:

270:

246:

214:

98:uncertainty analysis

85:Sensitivity analysis

5279:2016EnvMS..79..214P

5028:2005RiskA..25..481V

4948:1989Sci...246..221R

4913:2002JPCA..106.8721L

4870:2006JPCA..110.2474L

4826:2019GAMRE..13...53C

4729:2013MSSP...34...57B

4580:2019NatCo..10.4354W

4499:Sudret, B. (2008).

4422:2017EnvMS..95..115H

4377:2016WRR....52..440R

4328:2016WRR....52..423R

3830:10.1038/nature02771

3822:2004Natur.430..768M

3769:2005Sci...308...98B

3716:2008Sci...322.1085C

3710:(5904): 1085–1088.

3634:Statistical Science

3249:2008WatRM..22..393B

3101:Multiverse analysis

2978:), the White House

2972:European Commission

1952:, whence the name.

1662:

1250:Regression analysis

1245:Regression analysis

1014:partial derivatives

991:One-at-a-time (OAT)

763:partial derivatives

677:single-output codes

608:to approximate the

91:in the output of a


5316:; Springer-Verlag.

4632:J. R. Stat. Soc. B

4289:. Elsevier: 1–11.

4264:10.1137/20M1354829

4229:10.3150/21-BEJ1438

4104:10.1007/BF02024507

4069:10.1111/rssb.12052

3297:10.1111/gwat.12330

2935:expert elicitation

2752:Gaussian processes

2707:

2678:

2636:

2583:

2545:

2425:

2381:

2357:

2306:

2279:

2252:

2217:

2193:

2151:

2120:

2085:

1999:

1982:, here denoted by

1972:

1942:

1918:

1882:

1855:

1731:

1648:

1631:

1604:

1591:total effect index

1578:

1551:

1521:

1490:

1461:

1373:

1331:

1311:

1219:

1143:

1116:

1104:partial derivative

1065:

977:

950:

922:

902:

883:

767:elementary effects

734:

689:

645:Correlated inputs:

618:

572:

543:

523:

480:

456:

436:

383:

343:

320:

252:

232:mathematical model

220:

208:

118:mathematical model

93:mathematical model

4942:(4927): 221–226.

4921:10.1021/jp014567t

4907:(37): 8721–8733.

4878:10.1021/jp054148m

4543:(11): 1735–1763.

4486:10.1137/130936233

3860:Czitrom, Veronica

3816:(7001): 768–772.

3682:978-1-61197-668-7

3572:(10): 4731–4744.

3496:10.1214/14-EJS895

3445:10.1214/12-EJS749

3394:10.1063/1.5120035

3179:Structural Safety

3144:978-0-470-05997-5

3106:Feature selection

3023:Model calibration

2976:impact assessment

2968:impact assessment

2807:Bayesian networks

2796:Smoothing splines

2780:Gradient boosting

2666:

2609:

2571:

2484:Chaos polynomials

2423:

2384:{\displaystyle Y}

2309:{\displaystyle i}

2220:{\displaystyle Y}

1945:{\displaystyle Y}

1921:{\displaystyle Y}

1729:

1607:{\displaystyle Y}

1459:

1350:main effect index

1334:{\displaystyle Y}

1254:linear regression

1205:

1193:

1119:{\displaystyle Y}

1018:linear regression

925:{\displaystyle Y}

737:{\displaystyle f}

722:data-driven model

692:{\displaystyle Y}

659:linear regression

621:{\displaystyle f}

602:data-driven model

575:{\displaystyle f}

546:{\displaystyle Y}

483:{\displaystyle Y}

459:{\displaystyle Y}

346:{\displaystyle Y}

255:{\displaystyle p}

223:{\displaystyle f}

79:

78:

71:


5387:

5302:

5300:

5290:

5240:

5239:

5233:

5225:

5223:

5222:

5216:

5210:. Archived from

5209:

5201:

5195:

5188:

5182:

5181:

5159:

5153:

5152:

5130:

5124:

5121:

5115:

5110:

5101:

5100:

5072:

5066:

5065:

5047:

5010:

5004:

5001:

4995:

4994:

4982:

4976:

4975:

4931:

4925:

4924:

4896:

4890:

4889:

4864:(7): 2474–2485.

4852:

4846:

4845:

4809:

4803:

4802:

4774:

4768:

4767:

4747:

4741:

4740:

4708:

4702:

4701:

4699:

4681:

4672:

4666:

4665:

4647:

4627:

4618:

4617:

4607:

4559:

4553:

4552:

4532:

4521:

4520:

4496:

4490:

4489:

4469:

4463:

4462:

4440:

4434:

4433:

4405:

4399:

4398:

4388:

4356:

4350:

4349:

4339:

4307:

4301:

4300:

4298:

4274:

4268:

4267:

4247:

4241:

4240:

4222:

4202:

4196:

4195:

4177:

4157:

4151:

4150:

4122:

4116:

4115:

4087:

4081:

4080:

4063:(5): 925–947.

4052:

4046:

4045:

4033:

4021:

4015:

4009:

4003:

4002:

3994:

3988:

3987:

3979:

3973:

3972:

3964:

3958:

3957:

3929:

3923:

3922:

3920:

3894:

3888:

3887:

3856:

3850:

3849:

3803:

3797:

3796:

3763:(5718): 98–103.

3750:

3744:

3743:

3693:

3687:

3686:

3658:

3652:

3651:

3649:

3625:

3619:

3618:

3598:

3592:

3591:

3581:

3555:

3549:

3548:

3514:

3508:

3507:

3489:

3463:

3457:

3456:

3438:

3412:

3406:

3405:

3379:

3370:

3364:

3363:

3339:

3333:

3332:

3324:

3318:

3317:

3299:

3275:

3269:

3268:

3232:

3226:

3225:

3201:

3195:

3194:

3174:

3168:

3167:

3155:

3149:

3148:

3122:

2909:Edward E. Leamer

2850:Related concepts

2823:cross-validation

2815:machine learning

2716:

2714:

2713:

2708:

2687:

2685:

2684:

2679:

2668:

2667:

2659:

2645:

2643:

2642:

2637:

2611:

2610:

2602:

2592:

2590:

2589:

2584:

2573:

2572:

2564:

2554:

2552:

2551:

2546:

2514:machine learning

2434:

2432:

2431:

2426:

2424:

2422:

2421:

2420:

2407:

2399:

2390:

2388:

2387:

2382:

2366:

2364:

2363:

2358:

2315:

2313:

2312:

2307:

2288:

2286:

2285:

2280:

2261:

2259:

2258:

2253:

2226:

2224:

2223:

2218:

2202:

2200:

2199:

2194:

2192:

2191:

2190:

2189:

2180:

2160:

2158:

2157:

2152:

2150:

2149:

2129:

2127:

2126:

2121:

2094:

2092:

2091:

2086:

2078:

2077:

2076:

2075:

2066:

2050:

2049:

2025:

2024:

2008:

2006:

2005:

2000:

1998:

1997:

1981:

1979:

1978:

1973:

1971:

1970:

1951:

1949:

1948:

1943:

1927:

1925:

1924:

1919:

1891:

1889:

1888:

1883:

1881:

1880:

1864:

1862:

1861:

1856:

1851:

1850:

1826:

1825:

1807:

1806:

1776:

1775:

1760:

1759:

1740:

1738:

1737:

1732:

1730:

1728:

1714:

1707:

1706:

1685:

1673:

1661:

1656:

1640:

1638:

1637:

1632:

1630:

1629:

1613:

1611:

1610:

1605:

1587:

1585:

1584:

1579:

1577:

1576:

1560:

1558:

1557:

1552:

1550:

1549:

1530:

1528:

1527:

1522:

1511:

1499:

1497:

1496:

1491:

1470:

1468:

1467:

1462:

1460:

1458:

1444:

1437:

1436:

1418:

1406:

1401:

1400:

1382:

1380:

1379:

1374:

1372:

1371:

1340:

1338:

1337:

1332:

1320:

1318:

1317:

1312:

1310:

1309:

1281:random variables

1228:

1226:

1225:

1220:

1215:

1214:

1213:

1212:

1207:

1206:

1198:

1194:

1192:

1191:

1190:

1177:

1169:

1152:

1150:

1149:

1144:

1142:

1141:

1125:

1123:

1122:

1117:

1074:

1072:

1071:

1066:

1058:

986:

984:

983:

978:

976:

975:

959:

957:

956:

951:

949:

948:

931:

929:

928:

923:

911:

909:

908:

903:

901:

900:

743:

741:

740:

735:

704:Stochastic code:

698:

696:

695:

690:

627:

625:

624:

619:

581:

579:

578:

573:

552:

550:

549:

544:

532:

530:

529:

524:

522:

521:

489:

487:

486:

481:

465:

463:

462:

457:

445:

443:

442:

437:

411:

410:

392:

390:

389:

384:

352:

350:

349:

344:

329:

327:

326:

321:

316:

315:

291:

290:

261:

259:

258:

253:

238:"), viewed as a

236:programming code

229:

227:

226:

221:

74:

67:

63:

60:

54:

35:

34:

27:

21:


5395:

5394:

5390:

5389:

5388:

5386:

5385:

5384:

5350:

5349:

5341:

5248:

5246:Further reading

5243:

5227:

5226:

5220:

5218:

5214:

5207:

5205:"Archived copy"

5203:

5202:

5198:

5189:

5185:

5160:

5156:

5131:

5127:

5122:

5118:

5111:

5104:

5073:

5069:

5011:

5007:

5002:

4998:

4983:

4979:

4932:

4928:

4897:

4893:

4853:

4849:

4810:

4806:

4775:

4771:

4748:

4744:

4709:

4705:

4679:

4673:

4669:

4628:

4621:

4560:

4556:

4533:

4524:

4497:

4493:

4470:

4466:

4459:

4441:

4437:

4406:

4402:

4357:

4353:

4308:

4304:

4275:

4271:

4248:

4244:

4203:

4199:

4158:

4154:

4123:

4119:

4088:

4084:

4053:

4049:

4022:

4018:

4010:

4006:

3995:

3991:

3980:

3976:

3967:Cacuci, Dan G.

3965:

3961:

3946:10.2307/1269043

3930:

3926:

3895:

3891:

3876:10.2307/2685731

3857:

3853:

3804:

3800:

3751:

3747:

3694:

3690:

3683:

3659:

3655:

3626:

3622:

3615:

3599:

3595:

3556:

3552:

3515:

3511:

3464:

3460:

3413:

3409:

3377:

3371:

3367:

3340:

3336:

3325:

3321:

3276:

3272:

3233:

3229:

3202:

3198:

3175:

3171:

3156:

3152:

3145:

3123:

3119:

3115:

3110:

3081:Robustification

3031:

2996:

2963:

2890:

2877:

2871:

2852:

2843:

2831:

2764:heteroscedastic

2754:(also known as

2693:

2690:

2689:

2658:

2657:

2655:

2652:

2651:

2601:

2600:

2598:

2595:

2594:

2563:

2562:

2560:

2557:

2556:

2525:

2522:

2521:

2506:

2494:

2486:

2473:

2471:Shapley effects

2460:

2454:

2416:

2412:

2408:

2400:

2398:

2396:

2393:

2392:

2376:

2373:

2372:

2337:

2334:

2333:

2330:

2301:

2298:

2297:

2274:

2271:

2270:

2235:

2232:

2231:

2212:

2209:

2208:

2185:

2181:

2176:

2172:

2168:

2166:

2163:

2162:

2145:

2141:

2139:

2136:

2135:

2100:

2097:

2096:

2071:

2067:

2062:

2058:

2054:

2045:

2041:

2020:

2016:

2014:

2011:

2010:

1993:

1989:

1987:

1984:

1983:

1966:

1962:

1960:

1957:

1956:

1937:

1934:

1933:

1913:

1910:

1909:

1906:

1876:

1872:

1870:

1867:

1866:

1846:

1842:

1815:

1811:

1796:

1792:

1771:

1767:

1752:

1748:

1746:

1743:

1742:

1715:

1699:

1695:

1681:

1674:

1672:

1657:

1652:

1646:

1643:

1642:

1625:

1621:

1619:

1616:

1615:

1599:

1596:

1595:

1572:

1568:

1566:

1563:

1562:

1545:

1541:

1539:

1536:

1535:

1507:

1505:

1502:

1501:

1476:

1473:

1472:

1445:

1432:

1428:

1414:

1407:

1405:

1396:

1392:

1390:

1387:

1386:

1367:

1363:

1361:

1358:

1357:

1326:

1323:

1322:

1305:

1301:

1299:

1296:

1295:

1277:

1271:

1247:

1208:

1202:

1201:

1200:

1199:

1186:

1182:

1178:

1170:

1168:

1164:

1163:

1161:

1158:

1157:

1137:

1133:

1131:

1128:

1127:

1111:

1108:

1107:

1106:of the output

1100:

1091:

1085:

1054:

1049:

1046:

1045:

1042:hyperoctahedron

999:

993:

971:

967:

965:

962:

961:

944:

940:

938:

935:

934:

917:

914:

913:

912:and the output

896:

892:

890:

887:

886:

872:

865:

858:

851:

844:

837:

830:

823:

816:

800:

798:Visual analysis

754:

729:

726:

725:

684:

681:

680:

613:

610:

609:

567:

564:

563:

559:

538:

535:

534:

517:

513:

511:

508:

507:

490:(providing its

475:

472:

471:

451:

448:

447:

406:

402:

400:

397:

396:

360:

357:

356:

338:

335:

334:

311:

307:

286:

282:

271:

268:

267:

247:

244:

243:

215:

212:

211:

191:

114:

82:

75:

64:

58:

55:

48:


36:

32:

23:

22:

15:

12:

11:

5:

5393:

5383:

5382:

5377:

5372:

5367:

5362:

5348:

5347:

5340:

5339:External links

5337:

5336:

5335:

5328:

5317:

5310:

5303:

5258:

5247:

5244:

5242:

5241:

5196:

5183:

5172:(3): 308–313.

5154:

5125:

5116:

5102:

5067:

5022:(2): 481–492.

5005:

4996:

4977:

4926:

4891:

4847:

4804:

4785:(4): 367–388.

4769:

4758:(7): 964–979.

4742:

4723:(1–2): 57–75.

4703:

4667:

4638:(3): 751–769.

4619:

4554:

4522:

4511:(7): 964–979.

4491:

4464:

4457:

4435:

4400:

4371:(1): 440–455.

4351:

4322:(1): 423–439.

4302:

4269:

4242:

4197:

4152:

4117:

4098:(3): 441–451.

4082:

4047:

4028:(in Russian).