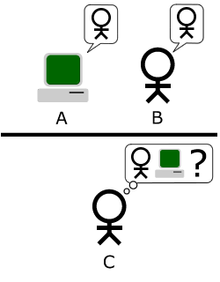

The original imitation game that Turing described is a simple party game involving three players. Player A is a man, player B is a woman, and player C (who plays the role of the interrogator) can be of either sex. In the imitation game, player C is unable to see either player A or player B (and knows them only as X and Y), and can communicate with them only through written notes or any other form that does not give away any details about their gender. By asking questions of player A and player B, player C tries to determine which of the two is the man and which is the woman. Player A's role is to trick the interrogator into making the wrong decision, while player B attempts to assist the interrogator in making the right one.

The propositions would have various kinds of status, e.g., well-established facts, conjectures, mathematically proved theorems, statements given by an authority, expressions having the logical form of proposition but not belief-value. Certain propositions may be described as "imperatives." The machine should be so constructed that as soon as an imperative is classed as "well established" the appropriate action automatically takes place". Despite this built-in logic system, the logical inference programmed in would not be formal but rather more pragmatic. In addition, the machine would build on its built-in logic system by a method of "scientific induction".

(acceptance speech for his 1948 award of the Lister Medal) states that "not until a machine can write a sonnet or compose a concerto because of thoughts and emotions felt, and not by the chance fall of symbols, could we agree that machine equals brain." Turing replies by saying that we have no way of knowing that any individual other than ourselves experiences emotions, and that therefore we should accept the test. He adds, "I do not wish to give the impression that I think there is no mystery about consciousness ... [b]ut I do not think these mysteries necessarily need to be solved before we can answer the question." (This argument, that a computer can't have

extended to a human mind and then to a machine. He concludes that such an analogy would indeed be suitable for the human mind: "There does seem to be one for the human mind. The majority of them seem to be 'subcritical,' i.e., to correspond in this analogy to piles of subcritical size. An idea presented to such a mind will on average give rise to less than one idea in reply. A smallish proportion are supercritical. An idea presented to such a mind may give rise to a whole 'theory' consisting of secondary, tertiary and more remote ideas". He finally asks if a machine could be made to be supercritical.

This argument states that any system governed by laws will be predictable and therefore not truly intelligent. Turing replies that this confuses laws of behaviour with general rules of conduct, and that on a broad enough scale (such as is evident in man) machine behaviour would become increasingly difficult to predict. He argues that, just because we can't immediately see what the laws are, it does not mean that no such laws exist. He writes "we certainly know of no circumstances under which we could say, 'we have searched enough. There are no such laws.'". (

understanding of the internal state of the machine at every moment during the computation. The machine will be seen to be doing things that we often cannot make sense of or something that we consider to be completely random. Turing mentions that this specific character bestows upon a machine a certain degree of what we consider to be intelligence, in that intelligent behaviour consists of a deviation from the complete determinism of conventional computation but only so long as the deviation does not give rise to pointless loops or random behaviour.

The problem is then broken down into two parts: the programming of a child mind and its education process. He mentions that a child mind would not be expected to turn out as desired by the experimenter (programmer) at the first attempt. A learning process that involves a method of reward and punishment must be in place that will select desirable patterns in the mind. This whole process, Turing mentions, is to a large extent similar to evolution by natural selection, where the similarities are:

such that a systematic approach would investigate several unsatisfactory solutions to a problem before finding the optimal solution, which would make the systematic process inefficient. Turing also mentions that the process of evolution takes the path of random mutations in order to find solutions that benefit an organism, but he also admits that in the case of evolution the systematic method of finding a solution would not be possible.

So the modified game becomes one that involves three participants in isolated rooms: a computer (which is being tested), a human, and a (human) judge. The human judge can converse with both the human and the computer by typing into a terminal. Both the computer and human try to convince the judge that they are the human. If the judge cannot consistently tell which is which, then the computer wins the game.
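
The structure of the modified game can be sketched in code. The following is a minimal, hypothetical Python simulation and is not taken from Turing's paper: the names `Player`, `run_round` and `judge_accuracy`, the canned replies, and the way the judge reports a guess are all assumptions made for illustration. It shows only the shape of the protocol: the judge sees two anonymous typed replies, labelled X and Y, and must say which label belongs to the human.

```python
import random
from dataclasses import dataclass
from typing import Callable, Dict

# Hypothetical types (assumptions for this sketch):
# a respondent maps a typed question to a typed reply, and a judge maps
# the two anonymous replies to the label ("X" or "Y") it thinks is the human.
Respondent = Callable[[str], str]
Judge = Callable[[Dict[str, str]], str]


@dataclass
class Player:
    name: str          # "human" or "computer"; never shown to the judge
    reply: Respondent


def run_round(human: Player, computer: Player, judge: Judge,
              question: str, rng: random.Random) -> bool:
    """Play one round; return True if the judge correctly picks the human."""
    players = [human, computer]
    rng.shuffle(players)                      # hide who is behind X and Y
    labelled = dict(zip(["X", "Y"], players))
    transcript = {label: p.reply(question) for label, p in labelled.items()}
    guess = judge(transcript)                 # judge sees text only
    return labelled[guess].name == "human"


def judge_accuracy(human: Player, computer: Player, judge: Judge,
                   question: str, rounds: int, seed: int = 0) -> float:
    """Fraction of rounds in which the judge identifies the human."""
    rng = random.Random(seed)
    wins = sum(run_round(human, computer, judge, question, rng)
               for _ in range(rounds))
    return wins / rounds
```

Under these assumptions, a computer whose replies give it away lets a judge score close to 1.0, while a computer whose replies the judge cannot tell apart from the human's pushes the judge's accuracy toward 0.5, which is the sense in which the judge "cannot consistently tell which is which".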

217:; therefore, a machine cannot think. "In attempting to construct such machines," wrote Turing, "we should not be irreverently usurping His power of creating souls, any more than we are in the procreation of children: rather we are, in either case, instruments of His will providing mansions for the souls that He creates."

Be kind, resourceful, beautiful, friendly, have initiative, have a sense of humour, tell right from wrong, make mistakes, fall in love, enjoy strawberries and cream, make someone fall in love with it, learn from experience, use words properly, be the subject of its own thought, have as much diversity

Hence, Turing states that the focus is not on "whether all digital computers would do well in the game nor whether the computers that are presently available would do well, but whether there are imaginable computers which would do well". What is more important is to consider the advancements possible

The importance of random behaviour: Though Turing cautions us against random behaviour, he mentions that including an element of randomness in a learning machine would be of value. He mentions that this could be of value where there might be multiple correct answers or ones where it might be

This allows the original question to be made even more specific. Turing now restates the original question as "Let us fix our attention on one particular digital computer C. Is it true that by modifying this computer to have an adequate storage, suitably increasing its speed of action, and providing

Turing's paper considers the question "Can machines think?" Turing says that since the words "think" and "machine" cannot be clearly defined, we should "replace the question by another, which is closely related to it and is expressed in relatively unambiguous words." To do this, he must first find a

Given this process, he asks whether it would be more appropriate to program a child's mind instead of an adult's mind and then subject the child mind to a period of education. He likens the child to a newly bought notebook and speculates that, due to its simplicity, it would be more easily programmed.

Turing concludes by speculating about a time when machines will compete with humans on numerous intellectual tasks and suggests tasks that could be used to make that start. Turing then suggests that abstract tasks such as playing chess could be a good place to start; another method he puts as

Nature of inherent complexity: The child machine could either be one that is as simple as possible, merely maintaining consistency with general principles, or the machine could be one with a complete system of logical inference programmed into it. This more complex system is explained by Turing as

Here Turing first returns to Lady Lovelace's objection that the machine can only do what we tell it to do, and he likens it to a situation where a man "injects" an idea into the machine, to which the machine responds and then falls off into quiescence. He extends this thought by an analogy to an

Turing then mentions that the task of being able to create a machine that could play the imitation game is one of programming and he postulates that by the end of the century it will indeed be technologically possible to program a machine to play the game. He then mentions that in the process of

Turing suggests that Lovelace's objection can be reduced to the assertion that computers "can never take us by surprise" and argues that, to the contrary, computers could still surprise humans, in particular where the consequences of different facts are not immediately recognizable. Turing also

of the pile were to be sufficiently large, then a neutron entering the pile would cause a disturbance that would continue to increase until the whole pile was destroyed; the pile would then be supercritical. Turing then asks whether this analogy of a supercritical pile could be

Ignorance of the experimenter: An important feature of a learning machine that Turing points out is the teacher's ignorance of the machine's internal state during the learning process. This is in contrast to a conventional discrete state machine where the objective is to have a clear

129:, are themselves controversial. Some have taken Turing's question to have been "Can a computer, communicating over a teleprinter, fool a person into believing it is human?" but it seems clear that Turing was not talking about fooling people but about generating human cognitive capacity.

fire in an all-or-nothing pulse, both the exact timing of the pulse and the probability of the pulse occurring have analog components. Turing acknowledges this, but argues that any analog system can be simulated to a reasonable degree of accuracy given enough computing power.

indistinguishably from the way a thinker acts. This question avoids the difficult philosophical problem of pre-defining the verb "to think" and focuses instead on the performance capacities that being able to think makes possible, and how a causal system can generate them.

"What will happen when a machine takes the part of A in this game?" Will the interrogator decide wrongly as often when the game is played like this as he does when the game is played between a man and a woman? These questions replace our original, 'Can machines think?'

151:, while man-made, would not provide a very interesting example. Turing suggested that we should focus on the capabilities of digital machinery—machines which manipulate the binary digits of 1 and 0, rewriting them into memory using simple rules. He gave two reasons.

simple and unambiguous idea to replace the word "think", second he must explain exactly which "machines" he is considering, and finally, armed with these tools, he formulates a new question, related to the first, that he believes he can answer in the affirmative.

Turing notes that "no support is usually offered for these statements," and that they depend on naive assumptions about how versatile machines may be in the future, or are "disguised forms of the argument from consciousness." He chooses to answer a few of them:

Having clarified the question, Turing turned to answering it: he considered the following nine common objections, which include all the major arguments against artificial intelligence raised in the years since his paper was first published.

223:: "The consequences of machines thinking would be too dreadful. Let us hope and believe that they cannot do so." This thinking is popular among intellectual people, as they believe superiority derives from higher intelligence and

, p. 948, where the authors comment: "Turing examined a wide variety of possible objections to the possibility of intelligent machines, including virtually all of those that have been raised in the half century since his paper appeared."

researchers that included Alan Turing. Turing, in particular, had been working on the notion of machine intelligence since at least 1941, and one of the earliest-known mentions of "computer intelligence" was made by him in 1947.

argues that Lady Lovelace was hampered by the context in which she wrote, and that, had she been exposed to more contemporary scientific knowledge, it would have become evident to her that the brain's storage is quite similar to that of a computer.

(though this too is controversial) and the numerous computer chess games which can outplay most amateurs. As for the second suggestion Turing makes, it has been likened by some authors to a call for finding a

would argue in 1972 that human reason and problem solving were not based on formal rules, but instead relied on instincts and awareness that would never be captured in rules. More recent AI research in

notes, the question has become "Can machines do what we (as thinking entities) can do?" In other words, Turing is no longer asking whether a machine can "think"; he is asking whether a machine can

Scientific Memoirs edited by Richard Taylor (1781–1858), Volume 3, Sketch of the Analytical Engine invented by Charles Babbage, Esq, Notes by the Translator, by Augusta Ada Lovelace. 1843

298:

227:(as machines have efficient memory capacities and processing speed, machines exceeding the learning and knowledge capabilities are highly probable). This objection is a fallacious

889:

577:

of human cognitive development. Such attempts at finding the underlying algorithms by which children learn the features of the world around them are only beginning to be made.

470:

entering the pile from outside the pile; the neutron will cause a certain disturbance which eventually dies away. Turing then builds on that analogy and mentions that, if the

435:: In 1950, extra-sensory perception was an active area of research and Turing chooses to give ESP the benefit of the doubt, arguing that conditions could be created in which

161:

had proved that a digital computer can, in theory, simulate the behaviour of any other digital machine, given enough memory and time. (This is the essential insight of the

249:

can answer. Turing suggests that humans are too often wrong themselves and pleased at the fallibility of a machine. (This argument would be made again by philosopher

1570:

479:

trying to imitate an adult human mind it becomes important to consider the processes that lead to the adult mind being in its present state; which he summarizes as:

Researchers in the United Kingdom had been exploring "machine intelligence" for up to ten years prior to the founding of the field of artificial intelligence (

57:

The "standard interpretation" of the Turing Test, in which the interrogator is tasked with trying to determine which player is a computer and which is a human

it with an appropriate programme, C can be made to play satisfactorily the part of A in the imitation game, the part of B being taken by a man?"

439:

would not affect the test. Turing admitted to "overwhelming statistical evidence" for telepathy, likely referring to early 1940s experiments by

2019:

882:"A.I. experts say the Google researcher's claim that his chatbot became 'sentient' is ridiculous—but also highlights big problems in the field"

In the final section of the paper Turing details his thoughts about the Learning Machine that could play the imitation game successfully.

2014:

"... it is best to provide the machine with the best sense organs that money can buy, and then teach it to understand and speak English."

117:

Since Turing introduced his test, it has been both highly influential and widely criticised, and has become an important concept in the

419:

attempts to find the complex rules that govern our "informal" and unconscious skills of perception, mobility and pattern matching. See

881:

795:

67:

Rather than trying to determine if a machine is thinking, Turing suggests we should ask if the machine can win a game, called the "

2024:

1986:

360:: One of the most famous objections states that computers are incapable of originality. This is largely because, according to

atomic pile of less than critical size, which is to be considered the machine, and an injected idea is to correspond to a

242:

1964:

1943:

1024:, pp. 949–950. Russell and Norvig identify Lucas and Penrose's arguments as being the same one answered by Turing.

1810:

1683:

1433:

1287:

915:

561:

that has followed reveals that the learning machine did take the abstract path suggested by Turing as in the case of

1499:

349:. He notes that, with enough storage capacity, a computer can behave in an astronomical number of different ways.

851:

821:

444:

185:

in the state of our machines today regardless of whether we have the available resource to create one or not.

177:

sufficiently powerful digital machine can. Turing writes, "all digital computers are in a sense equivalent."

147:

Turing also notes that we need to determine which "machines" we wish to consider. He points out that a human

375:

to perform. It can follow analysis; but it has no power of anticipating any analytical relations or truths.

The Turing Test Sourcebook: Philosophical and Methodological Issues in the Quest for the Thinking Computer

1829:

Saygin, Ayse Pinar; Cicekli, Ilyas; Akman, Varol (1999). "An analysis and review of the next 50 years".

1823:

program, can certainly be written. Turing asserts "a machine can undoubtedly be its own subject matter."

1447:

562:

420:

416:

1345:

Parsing the Turing Test: Philosophical and Methodological Issues in the Quest for the Thinking Computer

1862:

1646:

1839:

1516:

1398:

1605:

First, there is no reason to speculate whether or not they can exist. They already did in 1950.

Following this discussion Turing addresses certain specific aspects of the learning machine:

1981:

339:). A program which can report on its internal states and processes, in the simple sense of a

Mind over Machine: The Power of Human Intuition and Expertise in the Era of the Computer

1195:

1050:

Proceedings of the 2020 Federated Conference on Computer Science and Information Systems

"... would be such that the machine's store would be largely occupied with definitions and

930:"The Turing Test Is Not A Trick: Turing Indistinguishability Is A Scientific Criterion"

910:

Wardrip-Fruin, Noah and Nick Montfort, ed (2003). The New Media Reader. The MIT Press.

787:

737:

270:

1407:

1323:

491:

3. Other experience, not to be described as education, to which it has been subjected.

16:

1950 article by Alan Turing on artificial intelligence that introduced the Turing test

This describes the simplest version of the test. For a more detailed discussion, see

699:"The Annotation Game: On Turing (1950) on Computing, Machinery, and Intelligence"

1137:, pp. 958–960, who identify Searle's argument with the one Turing answers.

231:, confusing what should not be with what can or cannot be (Wardrip-Fruin, 56).

1184:, pp. 51–52, who identify Dreyfus' argument with the one Turing answers.

1099:

757:"Game AI Competitions: Motivation for the Imitation Game-Playing Competition"

718:"Minds, Machines, and Turing: The Indistinguishability of Indistinguishables"

471:

265:

257:

245:, to show that there are limits to what questions a computer system based on

106:

1648:

The Emperor's New Mind: Concerning Computers, Minds, and The Laws of Physics

was not published by Turing, and did not see publication until 1968 in:

53:

1782:

574:

336:

90:

1500:"First, Scale Up to the Robotic Turing Test, Then Worry About Feeling"

772:

401:

would make this argument against "the biological assumption" in 1972.)

1059:

1034:

614:(Winter 2021 ed.), Metaphysics Research Lab, Stanford University

436:

254:

205:

157:

Second, digital machinery is "universal". Turing's research into the

74:

Turing proposes a variation of this game that involves the computer:

37:, was the first to introduce his concept of what is now known as the

329:

He notes it's easy to program a machine to appear to make a mistake.

412:

340:

89:) research in 1956. It was a common topic among the members of the

467:

148:

1678:(2nd ed.), Upper Saddle River, New Jersey: Prentice Hall,

1673:

390:

1847:

1608:(1961), "Minds, Machines and Gödel", in Anderson, A.R. (ed.),

389:

research has shown that the brain is not digital. Even though

311:. These arguments all have the form "a computer will never do

929:

717:

246:

1282:. Learning, Development, and Conceptual Change. MIT Press.

214:

566:

1305:"Origins of theory of mind, cognition and communication"

1760:

Saygin, A. P. (2000). "Turing Test: 50 years later".

1426:

AI: The Tumultuous Search for Artificial Intelligence

1242:

Epstein, Robert; Roberts, Gary; Beber, Grace (2008).

367:

The Analytical Engine has no pretensions whatever to

241:: This objection uses mathematical theorems, such as

504:

Structure of the child machine = hereditary material

173:

digital machine can "act like it is thinking", then

1241:

210:: This states that thinking is a function of man's

1828:

660:of 1956 are widely considered the "birth of AI". (

333:A machine cannot be the subject of its own thought

364:, machines are incapable of independent learning.

347:A machine cannot have much diversity of behaviour

2001:

1237:

1235:

1233:

510:Natural selection = judgment of the experimenter

488:2. The education to which it has been subjected,

485:1. The initial state of the mind, say at birth,

319:of behaviour as a man, do something really new.

1494:

1465:

1268:

1173:

873:

679:

383:Argument from continuity in the nervous system

293:argument. Turing's reply is now known as the "

225:the possibility of being overtaken is a threat

1863:

1664:

1230:

1181:

1134:

1021:

997:

2040:Works originally published in Mind (journal)

1597:Gödel, Escher, Bach: an Eternal Golden Braid

701:, in Epstein, Robert; Peters, Grace (eds.),

879:

1870:

1856:

1675:Artificial Intelligence: A Modern Approach

1590:

1017:

722:Journal of Logic, Language and Information

569:and one which defeated the world champion

405:Argument from the informality of behaviour

1838:

1813:. "Lucasfilm's Habitat" pp. 663–677.

1781:

1552:

1515:

1397:

1331:

1107:

1073:

1058:

771:

188:

1302:

852:"Online Love Seerkers Warned Flirt Bots"

849:

605:

565:, a chess playing computer developed by

507:Changes of the child machine = mutations

269:: This argument, suggested by Professor

52:

1641:

1629:

1565:

1477:

1442:

1420:

1193:

1177:

1169:

1157:

1013:

965:

963:

819:

661:

612:The Stanford Encyclopedia of Philosophy

285:, would be made in 1980 by philosopher

2002:

1759:

1735:"Computing Machinery and Intelligence"

1729:

1691:

1558:Artificial Intelligence: The Very Idea

1373:

1130:

993:

981:

969:

927:

715:

696:

673:

631:

593:

2020:Philosophy of artificial intelligence

1851:

1604:

1572:The Role of Raw Power in Intelligence

1009:

766:. IEEE Publishing. pp. 155–160.

557:An examination of the development in

195:Philosophy of artificial intelligence

119:philosophy of artificial intelligence

1958:Computing Machinery and Intelligence

1074:Jefferson, Geoffrey (25 June 1949).

975:

960:

820:Withers, Steven (11 December 2007),

801:from the original on 26 January 2021

451:

423:). This rejoinder also includes the

21:Computing Machinery and Intelligence

1965:The Chemical Basis of Morphogenesis

1824:PDF with the full text of the paper

1504:Artificial Intelligence in Medicine

904:

832:from the original on 4 October 2017

680:Evans, A. D. J.; Robertson (1968),

309:Arguments from various disabilities

132:

13:

2015:History of artificial intelligence

1944:Systems of Logic Based on Ordinals

1312:Journal of Communication Disorders

1208:10.1093/oso/9780198747826.003.0042

1194:Leavitt, David (26 January 2017),

862:from the original on 24 April 2010

850:Williams, Ian (10 December 2007),

606:Oppy, Graham; Dowe, David (2021),

121:. Some of its criticisms, such as

31:. The paper, published in 1950 in

14:

2051:

1817:

892:from the original on 13 June 2022

754:

1801:and Nick Montfort, eds. (2003).

1020:, pp. 471–473, 476–477 and

48:

23:" is a seminal paper written by

1877:

1386:Robotics and Autonomous Systems

1296:

1262:

1187:

1163:

1151:

1140:

1124:

1067:

1027:

1003:

987:

921:

843:

813:

93:, an informal group of British

2025:Artificial intelligence papers

748:

709:

690:

674:"Intelligent Machinery" (1948)

667:

650:

637:

625:

599:

587:

445:Society for Psychical Research

327:Machines cannot make mistakes.

243:Gödel's incompleteness theorem

1:

1704:Behavioral and Brain Sciences

1560:, Cambridge, Mass.: MIT Press

1408:10.1016/S0921-8890(05)80025-9

1367:

1324:10.1016/S0021-9924(99)00009-X

1279:Words, thoughts, and theories

822:"Flirty Bot Passes for Human"

610:, in Zalta, Edward N. (ed.),

371:anything. It can do whatever

315:". Turing offers a selection:

221:'Heads in the Sand' Objection

2035:Cognitive science literature

1697:"Minds, Brains and Programs"

1526:10.1016/j.artmed.2008.08.008

1428:. New York, NY: BasicBooks.

1379:"Elephants Don't Play Chess"

1303:Meltzoff, Andrew N. (1999).

1076:"The Mind of Mechanical Man"

880:Jeremy Kahn (13 June 2022).

755:Swiechowski, Maciej (2020).

7:

1651:, Oxford University Press,

1202:, Oxford University Press,

1196:"Turing and the paranormal"

645:Versions of the Turing test

10:

2056:

1637:, Harvard University Press

1498:; Scherzer, Peter (2008),

1469:; Dreyfus, Stuart (1986),

1174:Dreyfus & Dreyfus 1986

455:

417:computational intelligence

299:Can a machine have a mind?

192:

159:foundations of computation

136:

60:

1974:

1928:

1885:

1716:10.1017/S0140525X00005756

1182:Russell & Norvig 2003

1135:Russell & Norvig 2003

1022:Russell & Norvig 2003

998:Russell & Norvig 2003

1805:. Cambridge: MIT Press.

1754:10.1093/mind/LIX.236.433

1248:. Springer. p. 65.

580:

432:Extra-sensory perception

167:universal Turing machine

1774:10.1023/A:1011288000451

1473:, Oxford, UK: Blackwell

1452:, New York: MIT Press,

1449:What Computers Can't Do

1092:10.1136/bmj.1.4616.1105

1080:British Medical Journal

928:Harnad, Stevan (1992),

734:10.1023/A:1008315308862

716:Harnad, Stevan (2001),

697:Harnad, Stevan (2008),

684:, University Park Press

682:Cybernetics: Key Papers

559:artificial intelligence

421:Dreyfus' critique of AI

373:we know how to order it

41:to the general public.

29:artificial intelligence

377:

321:

229:appeal to consequences

189:Nine common objections

58:

1982:Legacy of Alan Turing

1951:Intelligent Machinery

1937:On Computable Numbers

1489:, New York: MIT Press

946:10.1145/141420.141422

658:Dartmouth conferences

365:

316:

279:conscious experiences

56:

1803:The New Media Reader

163:Church–Turing thesis

143:Church–Turing thesis

1890:Turing completeness

1592:Hofstadter, Douglas

1274:Meltzoff, Andrew N.

1086:(4616): 1105–1110.

1051:1948Natur.162U.138.

1045:(4108): 138. 1948.

934:ACM SIGART Bulletin

1831:Minds and Machines

1799:Noah Wardrip-Fruin

1762:Minds and Machines

1666:Russell, Stuart J.

1611:Minds and Machines

1540:on 8 February 2012

443:, a member of the

271:Geoffrey Jefferson

59:

1997:

1996:

1658:978-0-14-014534-2

1618:on 19 August 2007

1459:978-0-06-011082-6

1255:978-1-4020-6710-5

1217:978-0-19-874782-6

783:978-83-955416-7-4

773:10.15439/2020F126

608:"The Turing Test"

452:Learning machines

297:reply". See also

169:.) Therefore, if

2047:

1915:Turing reduction

1872:

1865:

1858:

1849:

1848:

1844:

1842:

1795:

1785:

1756:

1748:(236): 433–460,

1739:

1733:(October 1950),

1726:

1701:

1688:

1661:

1638:

1626:

1625:

1623:

1614:, archived from

1600:

1587:

1586:

1584:

1575:, archived from

1561:

1548:

1547:

1545:

1536:, archived from

1519:

1490:

1474:

1462:

1439:

1417:

1416:

1414:

1401:

1383:

1361:

1360:

1358:

1356:

1351:on 15 April 2021

1350:

1344:. Archived from

1335:

1309:

1300:

1294:

1293:

1266:

1260:

1259:

1239:

1228:

1227:

1226:

1224:

1200:The Turing Guide

1191:

1185:

1167:

1161:

1155:

1149:

1144:

1138:

1128:

1122:

1121:

1111:

1071:

1065:

1064:

1062:

1060:10.1038/162138e0

458:Machine learning

303:philosophy of AI

133:Digital machines

81:

77:

27:on the topic of

1840:10.1.1.157.1592

1579:on 3 March 2016

1554:Haugeland, John

1543:

1541:

1517:10.1.1.115.4269

1483:What Computers

1479:Dreyfus, Hubert

1467:Dreyfus, Hubert

1460:

1444:Dreyfus, Hubert

1436:

1422:Crevier, Daniel

1412:

1410:

1399:10.1.1.588.7539

1035:"Announcements"

1033:

1032:

1028:

1018:Hofstadter 1979

2010:1950 documents

1910:Turing's proof

1907:

1905:Turing pattern

1902:

1900:Turing machine

1897:

1892:

1886:

1883:

1882:

1875:

1874:

1867:

1860:

1852:

1846:

1845:

1826:

1819:

1818:External links

1816:

1815:

1814:

1796:

1768:(4): 463–518.

1757:

1727:

1710:(3): 417–457,

1689:

1684:

1662:

1657:

1643:Penrose, Roger

1639:

1627:

1602:

1588:

1563:

1550:

1496:Harnad, Stevan

1492:

1475:

1463:

1458:

1440:

1434:

1418:

1375:Brooks, Rodney

1369:

1366:

1363:

1362:

1318:(4): 251–269.

1295:

1288:

1270:Gopnik, Alison

1261:

1254:

1229:

1216:

1186:

1162:

1150:

1139:

1123:

1066:

1026:

1002:

986:

974:

959:

920:

903:

872:

842:

812:

782:

747:

728:(4): 425–445,

708:

689:

687:

686:

666:

649:

636:

624:

598:

585:

584:

582:

579:

571:Garry Kasparov

551:

550:

549:

548:

541:

540:

539:

538:

531:

530:

529:

528:

514:

513:

512:

511:

508:

505:

495:

494:

493:

492:

489:

486:

453:

450:

449:

448:

428:

425:Turing's Wager

409:Hubert Dreyfus

402:

399:Hubert Dreyfus

380:

352:

351:

350:

344:

330:

306:

275:Lister Oration

264:Argument From

261:

232:

218:

190:

187:

139:Turing machine

134:

131:

69:Imitation Game

61:Main article:

50:

47:

15:

9:

6:

4:

3:

2:


Text is available under the Creative Commons Attribution-ShareAlike License. Additional terms may apply.