813:

164:

792:

The X-Robots-Tag is only effective after the page has been requested and the server responds, and the robots meta tag is only effective after the page has loaded, whereas robots.txt is effective before the page is requested. Thus if a page is excluded by a robots.txt file, any robots meta tags or

664:

The crawl-delay value is supported by some crawlers to throttle their visits to the host. Since this value is not part of the standard, its interpretation is dependent on the crawler reading it. It is used when the multiple burst of visits from bots is slowing down the host. Yandex interprets the

650:

User-agent: googlebot # all Google services

Disallow: /private/ # disallow this directory User-agent: googlebot-news # only the news service Disallow: / # disallow everything User-agent: * # any robot Disallow: /something/ # disallow this

369:

A robots.txt file on a website will function as a request that specified robots ignore specified files or directories when crawling a site. This might be, for example, out of a preference for privacy from search engine results, or the belief that the content of the selected directories might be

522:(NIST) in the United States specifically recommends against this practice: "System security should not depend on the secrecy of the implementation or its components." In the context of robots.txt files, security through obscurity is not recommended as a security technique.

514:; it cannot enforce any of what is stated in the file. Malicious web robots are unlikely to honor robots.txt; some may even use the robots.txt as a guide to find disallowed links and go straight to them. While this is sometimes claimed to be a security risk, this sort of

370:

misleading or irrelevant to the categorization of the site as a whole, or out of a desire that an application only operates on certain data. Links to pages listed in robots.txt can still appear in search results if they are linked to from a page that is crawled.

168:

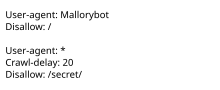

Example of a simple robots.txt file, indicating that a user-agent called "Mallorybot" is not allowed to crawl any of the website's pages, and that other user-agents cannot crawl more than one page every 20 seconds, and are not allowed to crawl the "secret"

501:

s David Pierce said this only began after "training the underlying models that made it so powerful". Also, some bots are used both for search engines and artificial intelligence, and it may be impossible to block only one of these options.

665:

value as the number of seconds to wait between subsequent visits. Bing defines crawl-delay as the size of a time window (from 1 to 30 seconds) during which BingBot will access a web site only once. Google provides an interface in its

359:). This text file contains the instructions in a specific format (see examples below). Robots that choose to follow the instructions try to fetch this file and read the instructions before fetching any other file from the

734:

and X-Robots-Tag HTTP headers. The robots meta tag cannot be used for non-HTML files such as images, text files, or PDF documents. On the other hand, the X-Robots-Tag can be added to non-HTML files by using

455:, this followed widespread use of robots.txt to remove historical sites from search engine results, and contrasted with the nonprofit's aim to archive "snapshots" of the internet as it previously existed.

801:

The Robots

Exclusion Protocol requires crawlers to parse at least 500 kibibytes (512000 bytes) of robots.txt files, which Google maintains as a 500 kibibyte file size restriction for robots.txt files.

366:

A robots.txt file contains instructions for bots indicating which web pages they can and cannot access. Robots.txt files are particularly important for web crawlers from search engines such as Google.

1804:

1346:

1435:

882:

445:

said that "unchecked, and left alone, the robots.txt file ensures no mirroring or reference for items that may have general use and meaning beyond the website's context." In 2017, the

1558:

2166:

475:'s Google-Extended. Many robots.txt files named GPTBot as the only bot explicitly disallowed on all pages. Denying access to GPTBot was common among news websites such as the

1942:

313:

to specify which bots should not access their website or which pages bots should not access. The internet was small enough in 1994 to maintain a complete list of all bots;

1132:

1064:

1590:

489:

announced it would deny access to all artificial intelligence web crawlers as "AI companies have leached value from writers in order to spam

Internet readers".

2056:

1994:

1892:

2127:

1800:

1409:

1338:

640:

It is also possible to list multiple robots with their own rules. The actual robot string is defined by the crawler. A few robot operators, such as

1431:

644:, support several user-agent strings that allow the operator to deny access to a subset of their services by using specific user-agent strings.

637:# Comments appear after the "#" symbol at the start of a line, or after a directive User-agent: * # match all bots Disallow: / # keep them out

519:

1687:

2027:

1498:

363:. If this file does not exist, web robots assume that the website owner does not wish to place any limitations on crawling the entire site.

1550:

1729:

1972:

2152:

979:

1528:

1161:

730:

In addition to root-level robots.txt files, robots exclusion directives can be applied at a more granular level through the use of

1938:

1616:

259:. Malicious bots can use the file as a directory of which pages to visit, though standards bodies discourage countering this with

534:

to the web server when fetching content. A web administrator could also configure the server to automatically return failure (or

1779:

1645:

1227:

106:

94:

1256:

1316:

302:

claims to have provoked Koster to suggest robots.txt, after he wrote a badly behaved web crawler that inadvertently caused a

1124:

1056:

413:

A robots.txt has no enforcement mechanism in law or in technical protocol, despite widespread compliance by bot operators.

1468:

110:

90:

2207:

946:

510:

Despite the use of the terms "allow" and "disallow", the protocol is purely advisory and relies on the compliance of the

102:

1027:

1005:

464:

268:

1913:

631:

User-agent: BadBot # replace 'BadBot' with the actual user-agent of the bot User-agent: Googlebot

Disallow: /private/

1723:

1580:

843:

78:

37:

2052:

1998:

876:

1884:

1096:

340:

2119:

1376:

463:

Starting in the 2020s, web operators began using robots.txt to deny access to bots collecting training data for

1829:

1401:

840:, a file to describe the process for security researchers to follow in order to report security vulnerabilities

339:

On July 1, 2019, Google announced the proposal of the Robots

Exclusion Protocol as an official standard under

67:

26:

1278:

492:

GPTBot complies with the robots.txt standard and gives advice to web operators about how to disallow it, but

1861:

127:

515:

260:

1754:

793:

X-Robots-Tag headers are effectively ignored because the robot will not see them in the first place.

1668:

855:

303:

2019:

1490:

425:

following this standard include Ask, AOL, Baidu, Bing, DuckDuckGo, Google, Yahoo!, and Yandex.

1551:"Robots.txt meant for search engines don't work well for web archives | Internet Archive Blogs"

565:

2053:"Robots meta tag and X-Robots-Tag HTTP header specifications - Webmasters — Google Developers"

377:. For websites with multiple subdomains, each subdomain must have its own robots.txt file. If

2217:

2157:

1713:

666:

74:

33:

123:

2098:

1964:

1203:

256:

351:

When a site owner wishes to give instructions to web robots they place a text file called

8:

2222:

1885:"Is This a Google Easter Egg or Proof That Skynet Is Actually Plotting World Domination?"

968:

53:

1524:

1153:

625:

User-agent: BadBot # replace 'BadBot' with the actual user-agent of the bot

Disallow: /

263:. Some archival sites ignore robots.txt. The standard was used in the 1990s to mitigate

1612:

481:

467:. In 2023, Originality.AI found that 306 of the thousand most-visited websites blocked

374:

314:

264:

1775:

1637:

2212:

1719:

1223:

318:

298:

mailing list, the main communication channel for WWW-related activities at the time.

267:

overload. In the 2020s many websites began denying bots that collect information for

21:

812:

2088:

1679:

1248:

1193:

865:

486:

446:

1308:

870:

860:

818:

731:

584:

This example tells all robots that they can visit all files because the wildcard

84:(Added a book to the "Further reading" section, and performed cleanup.)

49:(Added a book to the "Further reading" section, and performed cleanup.)

2101:

2078:

1206:

1183:

449:

announced that it would stop complying with robots.txt directives. According to

220:

1585:

1460:

451:

422:

299:

287:

969:"Maintaining Distributed Hypertext Infostructures: Welcome to MOMspider's Web"

938:

601:

The same result can be accomplished with an empty or missing robots.txt file.

2201:

1339:"Robots Exclusion Protocol: joining together to provide better documentation"

1035:

913:

310:

1683:

1001:

628:

This example tells two specific robots not to enter one specific directory:

549:

file that displays information meant for humans to read. Some sites such as

1917:

836:

434:

249:

1801:"Deny Strings for Filtering Rules : The Official Microsoft IIS Site"

1520:

918:

908:

573:

442:

245:

1581:"The Internet Archive Will Ignore Robots.txt Files to Maintain Accuracy"

740:

569:

531:

325:

98:

2093:

1995:"Yahoo! Search Blog - Webmasters can now auto-discover with Sitemaps"

1368:

1198:

1101:

1057:"How I got here in the end, part five: "things can only get better!""

1040:

736:

670:

511:

494:

393:. In addition, each protocol and port needs its own robots.txt file;

333:

2120:"How Google Interprets the robots.txt Specification | Documentation"

1825:

883:

National

Digital Information Infrastructure and Preservation Program

324:; most complied, including those operated by search engines such as

903:

898:

888:

689:

616:

This example tells all robots to stay away from one specific file:

613:

User-agent: * Disallow: /cgi-bin/ Disallow: /tmp/ Disallow: /junk/

535:

343:. A proposed standard was published in September 2022 as RFC 9309.

275:

233:

2077:

Koster, M.; Illyes, G.; Zeller, H.; Sassman, L. (September 2022).

1286:

1182:

Koster, M.; Illyes, G.; Zeller, H.; Sassman, L. (September 2022).

1638:"Robots.txt tells hackers the places you don't want them to look"

893:

849:

828:

438:

360:

241:

1851:

1856:

641:

550:

542:

472:

468:

1750:

622:

All other files in the specified directory will be processed.

610:

This example tells all robots not to enter three directories:

782:

329:

317:

overload was a primary concern. By June 1994 it had become a

291:

200:

Gary Illyes, Henner Zeller, Lizzi

Sassman (IETF contributors)

163:

2190:

2084:

1189:

1125:"Robots.txt Celebrates 20 Years Of Blocking Search Engines"

1715:

Innocent Code: A Security Wake-Up Call for Web

Programmers

1613:"Block URLs with robots.txt: Learn about robots.txt files"

56:

to this revision, which may differ significantly from the

2162:

2076:

1396:

1394:

1181:

1154:"Formalizing the Robots Exclusion Protocol Specification"

592:

directive has no value, meaning no pages are disallowed.

476:

252:

which portions of the website they are allowed to visit.

604:

This example tells all robots to stay out of a website:

538:) when it detects a connection using one of the robots.

212:

2153:"Artificial Intelligence Web Crawlers Are Running Amok"

1939:"To crawl or not to crawl, that is BingBot's question"

1391:

274:

The "robots.txt" file can be used in conjunction with

309:

The standard, initially RobotsNotWanted.txt, allowed

1667:

Scarfone, K. A.; Jansen, W.; Tracy, M. (July 2008).

1424:

976:

808:

796:

143:

58:

1965:"Change Googlebot crawl rate - Search Console Help"

1666:

471:'s GPTBot in their robots.txt file and 85 blocked

852:– Now inactive search engine for robots.txt files

746:

278:, another robot inclusion standard for websites.

2199:

1931:

1751:"List of User-Agents (Spiders, Robots, Browser)"

1711:

1572:

1455:

1453:

1331:

1271:

709:

634:Example demonstrating how comments can be used:

433:Some web archiving projects ignore robots.txt.

2047:

2045:

1676:National Institute of Standards and Technology

1361:

1241:

1122:

1028:"Important: Spiders, Robots and Web Wanderers"

832:, a standard for listing authorized ad sellers

520:National Institute of Standards and Technology

437:uses the file to discover more links, such as

1450:

676:User-agent: bingbot Allow: / Crawl-delay: 10

619:User-agent: * Disallow: /directory/file.html

560:Previously, Google had a joke file hosted at

186:1994 published, formally standardized in 2022

1301:

647:Example demonstrating multiple user-agents:

355:in the root of the web site hierarchy (e.g.

2042:

706:Sitemap: http://www.example.com/sitemap.xml

255:The standard, developed in 1994, relies on

2109:sec. 2.5: Limits.

1432:"Submitting your website to Yahoo! Search"

718:does not mention the "*" character in the

458:

162:

120:

2092:

1718:. John Wiley & Sons. pp. 91–92.

1197:

654:

1116:

966:

846:– A failed proposal to extend robots.txt

725:

659:

518:is discouraged by standards bodies. The

385:did not, the rules that would apply for

47:

1519:

1402:"Webmasters: Robots.txt Specifications"

1090:

1088:

1086:

1084:

1082:

65:

14:

2200:

1882:

1175:

1158:Official Google Webmaster Central Blog

1097:"The text file that runs the internet"

1094:

1025:

2150:

2030:from the original on November 2, 2019

1832:from the original on January 24, 2017

1776:"Access Control - Apache HTTP Server"

1578:

1379:from the original on 16 February 2017

142:For Knowledge's robots.txt file, see

66:Revision as of 06:29, 6 July 2024 by

44:

25:

1501:from the original on 10 October 2022

1259:from the original on 27 January 2013

1079:

1026:Koster, Martijn (25 February 1994).

17:

144:https://en.wikipedia.org/robots.txt

119:

88:

2144:

2070:

1669:"Guide to General Server Security"

1319:from the original on 6 August 2013

1224:"Uncrawled URLs in search results"

1095:Pierce, David (14 February 2024).

357:https://www.example.com/robots.txt

269:generative artificial intelligence

137:

2234:

2182:

1864:from the original on May 30, 2016

994:

844:Automated Content Access Protocol

797:Maximum size of a robots.txt file

568:not to kill the company founders

428:

416:

52:. The present address (URL) is a

2169:from the original on 6 July 2024

877:National Digital Library Program

811:

783:A "noindex" HTTP response header

530:Many robots also pass a special

197:Martijn Koster (original author)

2130:from the original on 2022-10-17

2112:

2059:from the original on 2013-08-08

2012:

1987:

1975:from the original on 2018-11-18

1957:

1945:from the original on 2016-02-03

1906:

1895:from the original on 2018-11-18

1883:Newman, Lily Hay (2014-07-03).

1876:

1844:

1818:

1807:from the original on 2014-01-01

1793:

1782:from the original on 2013-12-29

1768:

1757:from the original on 2014-01-07

1743:

1732:from the original on 2016-04-01

1705:

1693:from the original on 2011-10-08

1660:

1648:from the original on 2015-08-21

1630:

1619:from the original on 2015-08-14

1605:

1593:from the original on 2017-05-16

1561:from the original on 2018-12-04

1543:

1531:from the original on 2017-02-18

1513:

1483:

1471:from the original on 2013-01-25

1438:from the original on 2013-01-21

1412:from the original on 2013-01-15

1349:from the original on 2014-08-18

1230:from the original on 2014-01-06

1216:

1164:from the original on 2019-07-10

1135:from the original on 2015-09-07

1123:Barry Schwartz (30 June 2014).

1067:from the original on 2013-11-25

1008:from the original on 2014-01-12

982:from the original on 2013-09-27

949:from the original on 2017-04-03

669:for webmasters, to control the

525:

341:Internet Engineering Task Force

1525:"Robots.txt is a suicide note"

1146:

1049:

1019:

960:

931:

588:stands for all robots and the

397:does not apply to pages under

13:

1:

1579:Jones, Brad (24 April 2017).

1004:. Robotstxt.org. 1994-06-30.

925:

688:directive, allowing multiple

408:

395:http://example.com/robots.txt

373:A robots.txt file covers one

286:The standard was proposed by

134:, was based on this revision.

2151:Allyn, Bobby (5 July 2024).

1916:. 2018-01-10. Archived from

7:

2020:"Robots.txt Specifications"

1249:"About Ask.com: Webmasters"

804:

579:

505:

346:

24:of this page, as edited by

10:

2239:

2208:Search engine optimization

1491:"ArchiveBot: Bad behavior"

679:

607:User-agent: * Disallow: /

553:redirect humans.txt to an

516:security through obscurity

381:had a robots.txt file but

281:

261:security through obscurity

236:used for implementing the

141:

2080:Robots Exclusion Protocol

1712:Sverre H. Huseby (2004).

1185:Robots Exclusion Protocol

595:User-agent: * Disallow:

238:Robots Exclusion Protocol

207:

190:

182:

174:

161:

157:Robots Exclusion Protocol

156:

1226:. YouTube. Oct 5, 2009.

856:Distributed web crawling

786:

750:

716:Robot Exclusion Standard

684:Some crawlers support a

536:pass alternative content

399:http://example.com:8080/

304:denial-of-service attack

294:in February 1994 on the

244:to indicate to visiting

1803:. Iis.net. 2013-11-06.

1684:10.6028/NIST.SP.800-123

598:User-agent: * Allow: /

459:Artificial intelligence

1002:"The Web Robots Pages"

967:Fielding, Roy (1994).

788:X-Robots-Tag: noindex

673:'s subsequent visits.

655:Nonstandard extensions

2158:All Things Considered

1032:www-talk mailing list

726:Meta tags and headers

660:Crawl-delay directive

485:. In 2023, blog host

240:, a standard used by

1914:"/killer-robots.txt"

1778:. Httpd.apache.org.

1495:wiki.archiveteam.org

1045:on October 29, 2013.

747:A "noindex" meta tag

541:Some sites, such as

403:https://example.com/

306:on Koster's server.

257:voluntary compliance

1852:"Github humans.txt"

1826:"Google humans.txt"

1753:. User-agents.org.

1289:on 13 December 2012

774:"noindex"

710:Universal "*" match

389:would not apply to

290:, when working for

153:

95:← Previous revision

2107:Proposed Standard.

1969:support.google.com

1461:"Using robots.txt"

1279:"About AOL Search"

1212:Proposed Standard.

1129:Search Engine Land

873:for search engines

765:"robots"

562:/killer-robots.txt

482:The New York Times

421:Some major search

151:

45:06:29, 6 July 2024

2124:Google Developers

2024:Google Developers

1557:. 17 April 2017.

1406:Google Developers

1043:archived message)

227:

226:

178:Proposed Standard

139:Internet protocol

2230:

2194:

2193:

2191:Official website

2178:

2176:

2174:

2139:

2138:

2136:

2135:

2116:

2110:

2105:

2096:

2094:10.17487/RFC9309

2074:

2068:

2067:

2065:

2064:

2049:

2040:

2039:

2037:

2035:

2016:

2010:

2009:

2007:

2006:

1997:. Archived from

1991:

1985:

1984:

1982:

1980:

1961:

1955:

1954:

1952:

1950:

1935:

1929:

1928:

1926:

1925:

1910:

1904:

1903:

1901:

1900:

1880:

1874:

1873:

1871:

1869:

1848:

1842:

1841:

1839:

1837:

1822:

1816:

1815:

1813:

1812:

1797:

1791:

1790:

1788:

1787:

1772:

1766:

1765:

1763:

1762:

1747:

1741:

1740:

1738:

1737:

1709:

1703:

1702:

1700:

1698:

1692:

1673:

1664:

1658:

1657:

1655:

1653:

1634:

1628:

1627:

1625:

1624:

1609:

1603:

1602:

1600:

1598:

1576:

1570:

1569:

1567:

1566:

1555:blog.archive.org

1547:

1541:

1540:

1538:

1536:

1527:. Archive Team.

1517:

1511:

1510:

1508:

1506:

1497:. Archive Team.

1487:

1481:

1480:

1478:

1476:

1457:

1448:

1447:

1445:

1443:

1428:

1422:

1421:

1419:

1417:

1398:

1389:

1388:

1386:

1384:

1369:"DuckDuckGo Bot"

1365:

1359:

1358:

1356:

1354:

1335:

1329:

1328:

1326:

1324:

1305:

1299:

1298:

1296:

1294:

1285:. Archived from

1275:

1269:

1268:

1266:

1264:

1245:

1239:

1238:

1236:

1235:

1220:

1214:

1210:

1201:

1199:10.17487/RFC9309

1179:

1173:

1172:

1170:

1169:

1150:

1144:

1143:

1141:

1140:

1120:

1114:

1113:

1111:

1109:

1092:

1077:

1076:

1074:

1072:

1063:. 19 June 2006.

1053:

1047:

1046:

1044:

1034:. Archived from

1023:

1017:

1016:

1014:

1013:

998:

992:

991:

989:

987:

973:

964:

958:

957:

955:

954:

943:Greenhills.co.uk

935:

866:Internet Archive

839:

831:

821:

816:

815:

778:

775:

772:

769:

766:

763:

760:

757:

754:

732:Robots meta tags

721:

702:

695:

687:

591:

587:

563:

548:

500:

447:Internet Archive

404:

400:

396:

392:

388:

384:

380:

358:

354:

223:

217:

214:

166:

154:

150:

124:accepted version

107:Newer revision →

85:

82:

61:

59:current revision

51:

50:

46:

42:

41:

2238:

2237:

2233:

2232:

2231:

2229:

2228:

2227:

2198:

2197:

2189:

2188:

2185:

2172:

2170:

2147:

2145:Further reading

2142:

2133:

2131:

2118:

2117:

2113:

2075:

2071:

2062:

2060:

2051:

2050:

2043:

2033:

2031:

2018:

2017:

2013:

2004:

2002:

1993:

1992:

1988:

1978:

1976:

1963:

1962:

1958:

1948:

1946:

1937:

1936:

1932:

1923:

1921:

1912:

1911:

1907:

1898:

1896:

1881:

1877:

1867:

1865:

1850:

1849:

1845:

1835:

1833:

1824:

1823:

1819:

1810:

1808:

1799:

1798:

1794:

1785:

1783:

1774:

1773:

1769:

1760:

1758:

1749:

1748:

1744:

1735:

1733:

1726:

1710:

1706:

1696:

1694:

1690:

1671:

1665:

1661:

1651:

1649:

1636:

1635:

1631:

1622:

1620:

1611:

1610:

1606:

1596:

1594:

1577:

1573:

1564:

1562:

1549:

1548:

1544:

1534:

1532:

1518:

1514:

1504:

1502:

1489:

1488:

1484:

1474:

1472:

1465:Help.yandex.com

1459:

1458:

1451:

1441:

1439:

1430:

1429:

1425:

1415:

1413:

1400:

1399:

1392:

1382:

1380:

1367:

1366:

1362:

1352:

1350:

1337:

1336:

1332:

1322:

1320:

1307:

1306:

1302:

1292:

1290:

1277:

1276:

1272:

1262:

1260:

1247:

1246:

1242:

1233:

1231:

1222:

1221:

1217:

1180:

1176:

1167:

1165:

1152:

1151:

1147:

1138:

1136:

1121:

1117:

1107:

1105:

1093:

1080:

1070:

1068:

1061:Charlie's Diary

1055:

1054:

1050:

1038:

1024:

1020:

1011:

1009:

1000:

999:

995:

985:

983:

971:

965:

961:

952:

950:

937:

936:

932:

928:

923:

861:Focused crawler

835:

827:

819:Internet portal

817:

810:

807:

799:

790:

789:

785:

780:

779:

776:

773:

770:

767:

764:

761:

758:

755:

752:

749:

728:

719:

712:

707:

697:

693:

685:

682:

677:

662:

657:

652:

638:

632:

626:

620:

614:

608:

599:

596:

589:

585:

582:

561:

546:

528:

508:

498:

461:

431:

419:

411:

402:

398:

394:

390:

386:

382:

378:

356:

352:

349:

284:

219:

211:

203:

183:First published

170:

147:

140:

136:

135:

118:

117:

116:

115:

114:

99:Latest revision

87:

86:

83:

72:

70:

57:

48:

31:

29:

12:

11:

5:

2236:

2226:

2225:

2220:

2215:

2210:

2196:

2195:

2184:

2183:External links

2181:

2180:

2179:

2146:

2143:

2141:

2140:

2111:

2069:

2041:

2011:

1986:

1956:

1941:. 3 May 2012.

1930:

1905:

1889:Slate Magazine

1875:

1843:

1817:

1792:

1767:

1742:

1724:

1704:

1659:

1629:

1604:

1586:Digital Trends

1571:

1542:

1512:

1482:

1449:

1423:

1390:

1373:DuckDuckGo.com

1360:

1343:Blogs.bing.com

1330:

1300:

1283:Search.aol.com

1270:

1240:

1215:

1174:

1145:

1115:

1078:

1048:

1018:

993:

959:

929:

927:

924:

922:

921:

916:

911:

906:

901:

896:

891:

886:

880:

874:

868:

863:

858:

853:

847:

841:

833:

824:

823:

822:

806:

803:

798:

795:

787:

784:

781:

751:

748:

745:

727:

724:

711:

708:

705:

681:

678:

675:

667:search console

661:

658:

656:

653:

649:

636:

630:

624:

618:

612:

606:

597:

594:

581:

578:

566:the Terminator

527:

524:

507:

504:

460:

457:

452:Digital Trends

430:

429:Archival sites

427:

418:

417:Search engines

415:

410:

407:

348:

345:

311:web developers

300:Charles Stross

288:Martijn Koster

283:

280:

225:

224:

209:

205:

204:

202:

201:

198:

194:

192:

188:

187:

184:

180:

179:

176:

172:

171:

167:

159:

158:

138:

126:of this page,

121:

68:

54:permanent link

27:

16:

15:

9:

6:

4:

3:

2:

2235:

2224:

2221:

2219:

2216:

2214:

2211:

2209:

2206:

2205:

2203:

2192:

2187:

2186:

2168:

2164:

2160:

2159:

2154:

2149:

2148:

2129:

2125:

2121:

2115:

2108:

2103:

2100:

2095:

2090:

2086:

2082:

2081:

2073:

2058:

2054:

2048:

2046:

2029:

2025:

2021:

2015:

2001:on 2009-03-05

2000:

1996:

1990:

1974:

1970:

1966:

1960:

1944:

1940:

1934:

1920:on 2018-01-10

1919:

1915:

1909:

1894:

1890:

1886:

1879:

1863:

1859:

1858:

1853:

1847:

1831:

1827:

1821:

1806:

1802:

1796:

1781:

1777:

1771:

1756:

1752:

1746:

1731:

1727:

1725:9780470857472

1721:

1717:

1716:

1708:

1689:

1685:

1681:

1677:

1670:

1663:

1647:

1643:

1639:

1633:

1618:

1614:

1608:

1592:

1588:

1587:

1582:

1575:

1560:

1556:

1552:

1546:

1530:

1526:

1522:

1516:

1500:

1496:

1492:

1486:

1470:

1466:

1462:

1456:

1454:

1437:

1433:

1427:

1411:

1407:

1403:

1397:

1395:

1378:

1374:

1370:

1364:

1348:

1344:

1340:

1334:

1318:

1314:

1310:

1309:"Baiduspider"

1304:

1288:

1284:

1280:

1274:

1258:

1254:

1253:About.ask.com

1250:

1244:

1229:

1225:

1219:

1213:

1208:

1205:

1200:

1195:

1191:

1187:

1186:

1178:

1163:

1159:

1155:

1149:

1134:

1130:

1126:

1119:

1104:

1103:

1098:

1091:

1089:

1087:

1085:

1083:

1066:

1062:

1058:

1052:

1042:

1037:

1033:

1029:

1022:

1007:

1003:

997:

986:September 25,

981:

977:

970:

963:

948:

944:

940:

934:

930:

920:

917:

915:

914:Web archiving

912:

910:

907:

905:

902:

900:

897:

895:

892:

890:

887:

884:

881:

878:

875:

872:

871:Meta elements

869:

867:

864:

862:

859:

857:

854:

851:

848:

845:

842:

838:

834:

830:

826:

825:

820:

814:

809:

802:

794:

744:

742:

738:

733:

723:

717:

704:

701:

691:

674:

672:

668:

648:

645:

643:

635:

629:

623:

617:

611:

605:

602:

593:

577:

575:

571:

567:

558:

556:

552:

544:

539:

537:

533:

523:

521:

517:

513:

503:

497:

496:

490:

488:

484:

483:

478:

474:

470:

466:

465:generative AI

456:

454:

453:

448:

444:

441:. Co-founder

440:

436:

426:

424:

414:

406:

391:a.example.com

383:a.example.com

376:

371:

367:

364:

362:

344:

342:

337:

335:

331:

327:

323:

321:

316:

312:

307:

305:

301:

297:

293:

289:

279:

277:

272:

270:

266:

262:

258:

253:

251:

247:

243:

239:

235:

231:

222:

216:

210:

206:

199:

196:

195:

193:

189:

185:

181:

177:

173:

165:

160:

155:

149:

145:

133:

129:

125:

112:

108:

104:

100:

96:

92:

80:

76:

71:

64:

63:

60:

55:

39:

35:

30:

23:

2218:Web scraping

2171:. Retrieved

2156:

2132:. Retrieved

2123:

2114:

2106:

2079:

2072:

2061:. Retrieved

2034:February 15,

2032:. Retrieved

2023:

2014:

2003:. Retrieved

1999:the original

1989:

1977:. Retrieved

1968:

1959:

1947:. Retrieved

1933:

1922:. Retrieved

1918:the original

1908:

1897:. Retrieved

1888:

1878:

1866:. Retrieved

1855:

1846:

1834:. Retrieved

1820:

1809:. Retrieved

1795:

1784:. Retrieved

1770:

1759:. Retrieved

1745:

1734:. Retrieved

1714:

1707:

1695:. Retrieved

1675:

1662:

1650:. Retrieved

1642:The Register

1641:

1632:

1621:. Retrieved

1607:

1595:. Retrieved

1584:

1574:

1563:. Retrieved

1554:

1545:

1533:. Retrieved

1515:

1503:. Retrieved

1494:

1485:

1473:. Retrieved

1464:

1440:. Retrieved

1426:

1414:. Retrieved

1405:

1381:. Retrieved

1372:

1363:

1351:. Retrieved

1342:

1333:

1321:. Retrieved

1312:

1303:

1291:. Retrieved

1287:the original

1282:

1273:

1261:. Retrieved

1252:

1243:

1232:. Retrieved

1218:

1211:

1184:

1177:

1166:. Retrieved

1157:

1148:

1137:. Retrieved

1128:

1118:

1106:. Retrieved

1100:

1069:. Retrieved

1060:

1051:

1036:the original

1031:

1021:

1010:. Retrieved

996:

984:. Retrieved

975:

972:(PostScript)

962:

951:. Retrieved

942:

939:"Historical"

933:

837:security.txt

800:

791:

729:

715:

713:

699:

696:in the form

692:in the same

683:

663:

646:

639:

633:

627:

621:

615:

609:

603:

600:

583:

564:instructing

559:

554:

540:

529:

526:Alternatives

509:

493:

491:

480:

462:

450:

435:Archive Team

432:

420:

412:

372:

368:

365:

350:

338:

319:

308:

295:

285:

273:

254:

246:web crawlers

237:

229:

228:

148:

131:

22:old revision

19:

18:

1535:18 February

1521:Jason Scott

1475:16 February

1442:16 February

1416:16 February

1353:16 February

1323:16 February

1293:16 February

1263:16 February

919:Web crawler

909:Spider trap

722:statement.

574:Sergey Brin

443:Jason Scott

387:example.com

379:example.com

132:6 July 2024

69:DocWatson42

28:DocWatson42

20:This is an

2223:Text files

2202:Categories

2134:2022-10-17

2063:2013-08-17

2005:2009-03-23

1979:22 October

1949:9 February

1924:2018-05-25

1899:2019-10-03

1868:October 3,

1836:October 3,

1811:2013-12-29

1786:2013-12-29

1761:2013-12-29

1736:2015-08-12

1697:August 12,

1652:August 12,

1623:2015-08-10

1565:2018-12-01

1505:10 October

1234:2013-12-29

1168:2019-07-10

1139:2015-11-19

1012:2013-12-29

978:. Geneva.

953:2017-03-03

926:References

741:httpd.conf

694:robots.txt

651:directory

570:Larry Page

547:humans.txt

532:user-agent

409:Compliance

353:robots.txt

326:WebCrawler

250:web robots

248:and other

230:robots.txt

152:robots.txt

1313:Baidu.com

1102:The Verge

1041:Hypermail

737:.htaccess

720:Disallow:

698:Sitemap:

671:Googlebot

545:, host a

512:web robot

495:The Verge

334:AltaVista

213:robotstxt

2213:Websites

2167:Archived

2128:Archived

2057:Archived

2028:Archived

1973:Archived

1943:Archived

1893:Archived

1862:Archived

1830:Archived

1805:Archived

1780:Archived

1755:Archived

1730:Archived

1688:Archived

1646:Archived

1617:Archived

1591:Archived

1559:Archived

1529:Archived

1499:Archived

1469:Archived

1436:Archived

1410:Archived

1383:25 April

1377:Archived

1347:Archived

1317:Archived

1257:Archived

1228:Archived

1162:Archived

1133:Archived

1108:16 March

1071:19 April

1065:Archived

1006:Archived

980:Archived

947:Archived

904:Sitemaps

899:Perma.cc

889:nofollow

885:(NDIIPP)

805:See also

700:full-url

690:Sitemaps

590:Disallow

580:Examples

506:Security

439:sitemaps

347:Standard

322:standard

320:de facto

296:www-talk

276:sitemaps

242:websites

234:filename

221:RFC 9309

128:accepted

79:contribs

38:contribs

894:noindex

850:BotSeer

829:ads.txt

768:content

743:files.

686:Sitemap

680:Sitemap

423:engines

361:website

282:History

232:is the

208:Website

191:Authors

169:folder.

2173:6 July

1857:GitHub

1722:

879:(NDLP)

642:Google

557:page.

551:GitHub

543:Google

487:Medium

473:Google

469:OpenAI

375:origin

332:, and

315:server

265:server

175:Status

1691:(PDF)

1672:(PDF)

1597:8 May

777:/>

555:About

499:'

330:Lycos

292:Nexor

2175:2024

2102:9309

2085:IETF

2036:2020

1981:2018

1951:2016

1870:2019

1838:2019

1720:ISBN

1699:2015

1654:2015

1599:2017

1537:2017

1507:2022

1477:2013

1444:2013

1418:2013

1385:2017

1355:2013

1325:2013

1295:2013

1265:2013

1207:9309

1190:IETF

1110:2024

1073:2014

988:2013

759:name

756:meta

753:<

739:and

714:The

572:and

479:and

215:.org

111:diff

105:) |

103:diff

91:diff

75:talk

34:talk

2163:NPR

2099:RFC

2089:doi

1680:doi

1204:RFC

1194:doi

477:BBC

401:or

130:on

122:An

43:at

2204::

2165:.

2161:.

2155:.

2126:.

2122:.

2097:.

2087:.

2083:.

2055:.

2044:^

2026:.

2022:.

1971:.

1967:.

1891:.

1887:.

1860:.

1854:.

1828:.

1728:.

1686:.

1678:.

1674:.

1644:.

1640:.

1615:.

1589:.

1583:.

1553:.

1523:.

1493:.

1467:.

1463:.

1452:^

1434:.

1408:.

1404:.

1393:^

1375:.

1371:.

1345:.

1341:.

1315:.

1311:.

1281:.

1255:.

1251:.

1202:.

1192:.

1188:.

1160:.

1156:.

1131:.

1127:.

1099:.

1081:^

1059:.

1030:.

974:.

945:.

941:.

703::

576:.

405:.

336:.

328:,

271:.

218:,

97:|

93:)

77:|

36:|

2177:.

2137:.

2104:.

2091::

2066:.

2038:.

2008:.

1983:.

1953:.

1927:.

1902:.

1872:.

1840:.

1814:.

1789:.

1764:.

1739:.

1701:.

1682::

1656:.

1626:.

1601:.

1568:.

1539:.

1509:.

1479:.

1446:.

1420:.

1387:.

1357:.

1327:.

1297:.

1267:.

1237:.

1209:.

1196::

1171:.

1142:.

1112:.

1075:.

1039:(

1015:.

990:.

956:.

771:=

762:=

586:*

146:.

113:)

109:(

101:(

89:(

81:)

73:(

62:.

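A robots.txt file covers exactly one origin (scheme, host and port). A crawler that honours it therefore has to derive a separate robots.txt URL for every origin it visits. The following is a minimal Python sketch that uses only the standard library's `urllib.parse`. The helper name `robots_url` is hypothetical, and any user credentials embedded in a URL are simply dropped as a simplifying assumption.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_url(page_url: str) -> str:
    """Return the robots.txt URL whose rules govern page_url (hypothetical helper).

    Scheme, host and port are kept because each origin needs its own
    robots.txt; the path, query and fragment of the page are discarded.
    """
    parts = urlsplit(page_url)
    # netloc may look like "user:password@host:port"; keep only "host:port".
    host_and_port = parts.netloc.rpartition("@")[2]
    return urlunsplit((parts.scheme, host_and_port, "/robots.txt", "", ""))
```

With this helper, https://a.example.com/search?q=robots maps to https://a.example.com/robots.txt, and http://example.com:8080/docs/ maps to http://example.com:8080/robots.txt. Neither of these is the file at http://example.com/robots.txt, which matches the subdomain, protocol and port rules described in the Standard section.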

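Python's standard library includes a robots.txt parser, `urllib.robotparser.RobotFileParser`. Its `parse()` method accepts the lines of a file directly, so the examples in this article can be checked without any network access. The sketch below merges the "three directories" example and the Crawl-delay example into one hypothetical file; the crawler name `ExampleBot/1.0` is an assumption. Note that the module follows the simpler first-match, prefix-based rules of the original standard rather than every detail of RFC 9309; for instance, it does not expand "*" inside paths.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt combining two of the examples in this article.
ROBOTS_TXT = """\
User-agent: bingbot
Allow: /
Crawl-delay: 10

User-agent: *
Disallow: /cgi-bin/
Disallow: /tmp/
Disallow: /junk/

Sitemap: http://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
# parse() takes an iterable of lines, so nothing is fetched over the network.
parser.parse(ROBOTS_TXT.splitlines())

# bingbot has its own group: everything is allowed, with a 10-second crawl delay.
bing_tmp = parser.can_fetch("bingbot", "https://www.example.com/tmp/report.html")
bing_delay = parser.crawl_delay("bingbot")

# Any other crawler (here the hypothetical ExampleBot) falls back to the "*" group.
other_tmp = parser.can_fetch("ExampleBot/1.0", "https://www.example.com/tmp/report.html")
other_home = parser.can_fetch("ExampleBot/1.0", "https://www.example.com/index.html")
other_delay = parser.crawl_delay("ExampleBot/1.0")  # the "*" group sets no delay

# Sitemap lines are collected independently of the user-agent groups.
sitemaps = parser.site_maps()

print(bing_tmp, bing_delay, other_tmp, other_home, other_delay, sitemaps)
# prints: True 10 False True None ['http://www.example.com/sitemap.xml']
```

How a crawler acts on the Crawl-delay value (seconds between requests, as Yandex reads it, or a time window, as Bing defines it) is left to the crawler; the parser only reports the number.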

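RFC 9309 goes further than the original standard. It defines "*" (any sequence of characters) and a trailing "$" (end of path) as special characters in rule paths. It says the most specific matching rule wins, with "Allow" preferred when an allow rule and a disallow rule are equivalent. It also requires crawlers to parse at least 500 KiB of the file. The sketch below is a simplified, hypothetical evaluator written only to illustrate those points, and it is not any crawler's actual implementation. It approximates "most specific" by the length of the rule's pattern, matches user-agent tokens exactly (case-insensitively), falls back to the "*" group, and ignores nonstandard lines such as Crawl-delay and Sitemap.

```python
import re
from typing import Dict, List, Optional, Tuple

# RFC 9309 requires parsing at least 500 KiB; bytes beyond this are ignored here.
MAX_ROBOTS_BYTES = 500 * 1024  # 512,000 bytes

Rule = Tuple[bool, str]  # (is_allow, path pattern)


def parse_groups(body: bytes) -> Dict[str, List[Rule]]:
    """Map each lower-cased user-agent token to the rules of its group(s)."""
    text = body[:MAX_ROBOTS_BYTES].decode("utf-8", errors="replace")
    groups: Dict[str, List[Rule]] = {}
    agents: List[str] = []
    in_rules = False
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments
        key, sep, value = line.partition(":")
        if not sep:
            continue
        key, value = key.strip().lower(), value.strip()
        if key == "user-agent":
            if in_rules:  # a user-agent line after rules starts a new group
                agents, in_rules = [], False
            token = value.lower()
            agents.append(token)
            groups.setdefault(token, [])
        elif key in ("allow", "disallow") and agents:
            in_rules = True
            if value:  # an empty "Disallow:" disallows nothing
                for token in agents:
                    groups[token].append((key == "allow", value))
    return groups


def pattern_to_regex(pattern: str):
    """Translate '*' to '.*' and a trailing '$' to an end-of-path anchor."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    regex = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.compile(regex + (r"\Z" if anchored else ""))


def is_allowed(body: bytes, user_agent: str, path: str) -> bool:
    """Decide whether user_agent may fetch path (simplified RFC 9309 reading)."""
    groups = parse_groups(body)
    rules = groups.get(user_agent.lower(), groups.get("*"))
    if not rules:  # no matching group, or a group without any rules
        return True
    best: Optional[Tuple[int, bool]] = None
    for allow, pattern in rules:
        if pattern_to_regex(pattern).match(path):
            # Longer patterns win; on equal length, True (allow) beats False.
            candidate = (len(pattern), allow)
            if best is None or candidate > best:
                best = candidate
    return True if best is None else best[1]
```

For example, with the rules "Disallow: /private/" and "Allow: /private/public/", the longer Allow rule wins for /private/public/page while /private/secret stays disallowed. Under the multiple-user-agent example, googlebot-news is refused everything because it has its own group.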
Text is available under the Creative Commons Attribution-ShareAlike License. Additional terms may apply.