The X-Robots-Tag is only effective after the page has been requested and the server responds, and the robots meta tag is only effective after the page has loaded, whereas robots.txt is effective before the page is requested. Thus if a page is excluded by a robots.txt file, any robots meta tags or

684:

The crawl-delay value is supported by some crawlers to throttle their visits to the host. Since this value is not part of the standard, its interpretation depends on the crawler reading it. It is used when repeated bursts of visits from bots are slowing down the host. Yandex interprets the

670:

User-agent: googlebot # all Google services
Disallow: /private/ # disallow this directory

User-agent: googlebot-news # only the news service
Disallow: / # disallow everything

User-agent: * # any robot
Disallow: /something/ # disallow this

389:

A robots.txt file on a website will function as a request that specified robots ignore specified files or directories when crawling a site. This might be, for example, out of a preference for privacy from search engine results, or the belief that the content of the selected directories might be

542:(NIST) in the United States specifically recommends against this practice: "System security should not depend on the secrecy of the implementation or its components." In the context of robots.txt files, security through obscurity is not recommended as a security technique.

534:; it cannot enforce any of what is stated in the file. Malicious web robots are unlikely to honor robots.txt; some may even use the robots.txt as a guide to find disallowed links and go straight to them. While this is sometimes claimed to be a security risk, this sort of

390:

misleading or irrelevant to the categorization of the site as a whole, or out of a desire that an application only operates on certain data. Links to pages listed in robots.txt can still appear in search results if they are linked to from a page that is crawled.

188:

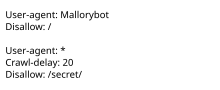

Example of a simple robots.txt file, indicating that a user-agent called "Mallorybot" is not allowed to crawl any of the website's pages, and that other user-agents cannot crawl more than one page every 20 seconds, and are not allowed to crawl the "secret"

521:

s David Pierce said this only began after "training the underlying models that made it so powerful". Also, some bots are used both for search engines and artificial intelligence, and it may be impossible to block only one of these options.

685:

value as the number of seconds to wait between subsequent visits. Bing defines crawl-delay as the size of a time window (from 1 to 30 seconds) during which BingBot will access a web site only once. Google provides an interface in its

379:). This text file contains the instructions in a specific format (see examples below). Robots that choose to follow the instructions try to fetch this file and read the instructions before fetching any other file from the

754:

and X-Robots-Tag HTTP headers. The robots meta tag cannot be used for non-HTML files such as images, text files, or PDF documents. On the other hand, the X-Robots-Tag can be added to non-HTML files by using

475:, this followed widespread use of robots.txt to remove historical sites from search engine results, and contrasted with the nonprofit's aim to archive "snapshots" of the internet as it previously existed.

821:

The Robots Exclusion Protocol requires crawlers to parse at least 500 kibibytes (512,000 bytes) of robots.txt files, which Google maintains as a 500 kibibyte file size restriction for robots.txt files.
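The size limit above can be illustrated with a small sketch. It assumes a crawler that keeps only the first 500 KiB of a fetched robots.txt body; the constant name, the function name, and the choice to cut back to the last complete line are illustrative assumptions, not something the protocol or any particular crawler prescribes.

```python
# Hypothetical helper: cap a robots.txt body at 500 KiB before parsing.
MAX_ROBOTS_BYTES = 500 * 1024  # 500 KiB = 512,000 bytes


def truncate_robots_body(body: bytes, limit: int = MAX_ROBOTS_BYTES) -> bytes:
    """Return at most `limit` bytes, cut back to the last complete line."""
    if len(body) <= limit:
        return body
    head = body[:limit]
    # Assumption: a partially read final line is dropped rather than parsed.
    last_newline = head.rfind(b"\n")
    return head[: last_newline + 1] if last_newline != -1 else head
```

Dropping the partial last line avoids interpreting a rule that was cut in half; a real crawler may handle the boundary differently.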

386:

A robots.txt file contains instructions for bots indicating which web pages they can and cannot access. Robots.txt files are particularly important for web crawlers from search engines such as Google.

said that "unchecked, and left alone, the robots.txt file ensures no mirroring or reference for items that may have general use and meaning beyond the website's context." In 2017, the

1578:

2186:

495:'s Google-Extended. Many robots.txt files named GPTBot as the only bot explicitly disallowed on all pages. Denying access to GPTBot was common among news websites such as the

1962:

333:

to specify which bots should not access their website or which pages bots should not access. The internet was small enough in 1994 to maintain a complete list of all bots;

1152:

1084:

1610:

509:

announced it would deny access to all artificial intelligence web crawlers as "AI companies have leached value from writers in order to spam Internet readers".

It is also possible to list multiple robots with their own rules. The actual robot string is defined by the crawler. A few robot operators, such as

1451:

664:, support several user-agent strings that allow the operator to deny access to a subset of their services by using specific user-agent strings.

# Comments appear after the "#" symbol at the start of a line, or after a directive
User-agent: * # match all bots
Disallow: / # keep them out

539:

1707:

2047:

1518:

383:. If this file does not exist, web robots assume that the website owner does not wish to place any limitations on crawling the entire site.

1570:

1749:

1992:

2172:

999:

1548:

1181:

750:

In addition to root-level robots.txt files, robots exclusion directives can be applied at a more granular level through the use of

1958:

1636:

279:. Malicious bots can use the file as a directory of which pages to visit, though standards bodies discourage countering this with

554:

to the web server when fetching content. A web administrator could also configure the server to automatically return failure (or

1799:

1665:

1247:

126:

114:

1276:

1336:

322:

claims to have provoked Koster to suggest robots.txt, after he wrote a badly behaved web crawler that inadvertently caused a

1144:

1076:

433:

A robots.txt has no enforcement mechanism in law or in technical protocol, despite widespread compliance by bot operators.

1488:

130:

110:

2227:

966:

530:

Despite the use of the terms "allow" and "disallow", the protocol is purely advisory and relies on the compliance of the

122:

1047:

1025:

484:

288:

1933:

651:

User-agent: BadBot # replace 'BadBot' with the actual user-agent of the bot
User-agent: Googlebot
Disallow: /private/

1743:

1600:

863:

88:

37:

2072:

2018:

896:

1904:

1116:

360:

2139:

1396:

483:

Starting in the 2020s, web operators began using robots.txt to deny access to bots collecting training data for

1849:

1421:

860:, a file to describe the process for security researchers to follow in order to report security vulnerabilities

359:

On July 1, 2019, Google announced the proposal of the Robots Exclusion Protocol as an official standard under

77:

26:

1298:

512:

GPTBot complies with the robots.txt standard and gives advice to web operators about how to disallow it, but

1881:

147:

535:

280:

1774:

813:

X-Robots-Tag headers are effectively ignored because the robot will not see them in the first place.
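The ordering described here (robots.txt is consulted before a request, while X-Robots-Tag only exists in a response) can be sketched with Python's standard `urllib.robotparser`. The function name, the `ExampleBot` user-agent, and the plain dictionary standing in for response headers are hypothetical and used only for illustration.

```python
from urllib.robotparser import RobotFileParser


def visible_x_robots_tag(robots_lines, user_agent, url, response_headers):
    """Return the X-Robots-Tag value a compliant crawler would see, if any.

    `response_headers` stands in for the headers the server would send
    if the page were actually requested.
    """
    parser = RobotFileParser()
    parser.parse(robots_lines)
    if not parser.can_fetch(user_agent, url):
        # The page is never requested, so its headers are never seen.
        return None
    return response_headers.get("X-Robots-Tag")


rules = ["User-agent: *", "Disallow: /private/"]
headers = {"X-Robots-Tag": "noindex"}
blocked = visible_x_robots_tag(
    rules, "ExampleBot", "https://www.example.com/private/report.pdf", headers
)
allowed = visible_x_robots_tag(
    rules, "ExampleBot", "https://www.example.com/public/report.pdf", headers
)
```

In this sketch `blocked` is `None` because the crawler stops at the robots.txt rule, while `allowed` carries the `noindex` value from the simulated response.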

1688:

875:

323:

2039:

1510:

445:

following this standard include Ask, AOL, Baidu, Bing, DuckDuckGo, Google, Yahoo!, and Yandex.

1571:"Robots.txt meant for search engines don't work well for web archives | Internet Archive Blogs"

585:

2073:"Robots meta tag and X-Robots-Tag HTTP header specifications - Webmasters — Google Developers"

397:. For websites with multiple subdomains, each subdomain must have its own robots.txt file. If

2237:

2177:

1733:

686:

84:

33:

143:

2118:

1984:

1223:

276:

371:

When a site owner wishes to give instructions to web robots they place a text file called

8:

2242:

1905:"Is This a Google Easter Egg or Proof That Skynet Is Actually Plotting World Domination?"

988:

63:

1544:

1173:

645:

User-agent: BadBot # replace 'BadBot' with the actual user-agent of the bot

Disallow: /

283:. Some archival sites ignore robots.txt. The standard was used in the 1990s to mitigate

1632:

501:

487:. In 2023, Originality.AI found that 306 of the thousand most-visited websites blocked

394:

334:

284:

1795:

1657:

2232:

1739:

1243:

338:

318:

mailing list, the main communication channel for WWW-related activities at the time.

287:

overload. In the 2020s many websites began denying bots that collect information for

21:

832:

2108:

1699:

1268:

1213:

885:

506:

466:

1328:

890:

880:

838:

751:

604:

This example tells all robots that they can visit all files because the wildcard

2121:

2098:

1226:

1203:

469:

announced that it would stop complying with robots.txt directives. According to

240:

1605:

1480:

471:

442:

319:

307:

989:"Maintaining Distributed Hypertext Infostructures: Welcome to MOMspider's Web"

958:

621:

The same result can be accomplished with an empty or missing robots.txt file.

2221:

1359:"Robots Exclusion Protocol: joining together to provide better documentation"

1055:

933:

330:

1703:

1021:

648:

This example tells two specific robots not to enter one specific directory:

569:

file that displays information meant for humans to read. Some sites such as

1937:

856:

454:

269:

1821:"Deny Strings for Filtering Rules : The Official Microsoft IIS Site"

1540:

938:

928:

593:

462:

265:

1601:"The Internet Archive Will Ignore Robots.txt Files to Maintain Accuracy"

760:

589:

551:

345:

118:

2113:

2015:"Yahoo! Search Blog - Webmasters can now auto-discover with Sitemaps"

1388:

1218:

1121:

1077:"How I got here in the end, part five: "things can only get better!""

1060:

756:

690:

531:

514:

413:. In addition, each protocol and port needs its own robots.txt file;

353:

2140:"How Google Interprets the robots.txt Specification | Documentation"

1845:

903:

National Digital Information Infrastructure and Preservation Program

344:; most complied, including those operated by search engines such as

923:

918:

908:

709:

636:

This example tells all robots to stay away from one specific file:

633:

User-agent: *
Disallow: /cgi-bin/
Disallow: /tmp/
Disallow: /junk/
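As a rough illustration of how a compliant crawler might evaluate rules like these, the sketch below feeds the three-directory example to Python's standard `urllib.robotparser`. The `ExampleBot` name and the example URLs are assumptions made for the demonstration.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: *
Disallow: /cgi-bin/
Disallow: /tmp/
Disallow: /junk/
"""


def build_parser(text: str) -> RobotFileParser:
    """Parse robots.txt text that has already been fetched."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)
# A URL inside a disallowed directory is refused; others are permitted.
in_tmp = parser.can_fetch("ExampleBot", "https://www.example.com/tmp/cache.html")
in_root = parser.can_fetch("ExampleBot", "https://www.example.com/index.html")
```

Here `in_tmp` is `False` and `in_root` is `True`. The check is advisory: nothing prevents a non-compliant bot from skipping it.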

555:

363:. A proposed standard was published in September 2022 as RFC 9309.

295:

253:

2097:

Koster, M.; Illyes, G.; Zeller, H.; Sassman, L. (September 2022).

1306:

1202:

Koster, M.; Illyes, G.; Zeller, H.; Sassman, L. (September 2022).

1658:"Robots.txt tells hackers the places you don't want them to look"

913:

869:

848:

458:

380:

261:

1871:

1876:

661:

570:

562:

492:

488:

1770:

642:

All other files in the specified directory will be processed.

630:

This example tells all robots not to enter three directories:

802:

349:

337:

overload was a primary concern. By June 1994 it had become a

311:

220:

Gary Illyes, Henner Zeller, Lizzi Sassman (IETF contributors)

183:

2210:

2104:

1209:

1145:"Robots.txt Celebrates 20 Years Of Blocking Search Engines"

1735:

Innocent Code: A Security Wake-Up Call for Web Programmers

1633:"Block URLs with robots.txt: Learn about robots.txt files"

66:

to this revision, which may differ significantly from the

2182:

2096:

1416:

1414:

1201:

1174:"Formalizing the Robots Exclusion Protocol Specification"

612:

directive has no value, meaning no pages are disallowed.

496:

272:

which portions of the website they are allowed to visit.

624:

This example tells all robots to stay out of a website:

558:) when it detects a connection using one of the robots.

232:

2173:"Artificial Intelligence Web Crawlers Are Running Amok"

1959:"To crawl or not to crawl, that is BingBot's question"

1411:

294:

The "robots.txt" file can be used in conjunction with

329:

The standard, initially RobotsNotWanted.txt, allowed

1687:

Scarfone, K. A.; Jansen, W.; Tracy, M. (July 2008).

1444:

996:

828:

816:

163:

68:

1985:"Change Googlebot crawl rate - Search Console Help"

1686:

491:'s GPTBot in their robots.txt file and 85 blocked

872:– Now inactive search engine for robots.txt files

766:

298:, another robot inclusion standard for websites.

2219:

1951:

1771:"List of User-Agents (Spiders, Robots, Browser)"

1731:

1592:

1475:

1473:

1351:

1291:

729:

654:Example demonstrating how comments can be used:

453:Some web archiving projects ignore robots.txt.

2067:

2065:

1696:National Institute of Standards and Technology

1381:

1261:

1142:

1048:"Important: Spiders, Robots and Web Wanderers"

852:, a standard for listing authorized ad sellers

540:National Institute of Standards and Technology

457:uses the file to discover more links, such as

1470:

User-agent: bingbot
Allow: /
Crawl-delay: 10
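A minimal sketch of reading this nonstandard value with Python's standard `urllib.robotparser`, whose `crawl_delay()` method returns the number for a matching user-agent or `None` when no value applies. How the number is then used (a pause between requests, or a time window) is up to each crawler; `OtherBot` is a hypothetical name.

```python
from urllib.robotparser import RobotFileParser

CRAWL_DELAY_RULES = """\
User-agent: bingbot
Allow: /
Crawl-delay: 10
"""

delay_parser = RobotFileParser()
delay_parser.parse(CRAWL_DELAY_RULES.splitlines())

# The group for bingbot carries a delay; an unlisted bot gets no value.
bingbot_delay = delay_parser.crawl_delay("bingbot")
other_delay = delay_parser.crawl_delay("OtherBot")
```

In this sketch `bingbot_delay` is `10` and `other_delay` is `None`, since the file has no group that applies to other bots.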

User-agent: *
Disallow: /directory/file.html

580:Previously, Google had a joke file hosted at

206:1994 published, formally standardized in 2022

1321:

667:Example demonstrating multiple user-agents:

375:in the root of the web site hierarchy (e.g.

2062:

726:Sitemap: http://www.example.com/sitemap.xml
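For illustration, Python's standard `urllib.robotparser` exposes Sitemap lines through `site_maps()` (available from Python 3.8). The surrounding `User-agent` group in this sketch is an assumption added so the sample reads as a complete file.

```python
from urllib.robotparser import RobotFileParser

SITEMAP_RULES = """\
User-agent: *
Disallow: /tmp/

Sitemap: http://www.example.com/sitemap.xml
"""

sitemap_parser = RobotFileParser()
sitemap_parser.parse(SITEMAP_RULES.splitlines())

# site_maps() returns a list of sitemap URLs, or None if none are declared.
sitemaps = sitemap_parser.site_maps()
```

Here `sitemaps` holds the single URL from the example line; the Sitemap line is independent of any user-agent group.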

275:The standard, developed in 1994, relies on

2129:sec. 2.5: Limits.

1452:"Submitting your website to Yahoo! Search"

738:does not mention the "*" character in the

478:

182:

140:

2112:

1738:. John Wiley & Sons. pp. 91–92.

1217:

674:

1136:

986:

866:– A failed proposal to extend robots.txt

745:

679:

538:is discouraged by standards bodies. The

405:did not, the rules that would apply for

47:

1539:

1422:"Webmasters: Robots.txt Specifications"

1110:

1108:

1106:

1104:

1102:

75:

14:

2220:

1902:

1195:

1178:Official Google Webmaster Central Blog

1117:"The text file that runs the internet"

1114:

1045:

2170:

2050:from the original on November 2, 2019

1852:from the original on January 24, 2017

1796:"Access Control - Apache HTTP Server"

1598:

1399:from the original on 16 February 2017

162:For Knowledge's robots.txt file, see

76:Revision as of 06:33, 6 July 2024 by

44:

25:

1521:from the original on 10 October 2022

1279:from the original on 27 January 2013

1099:

1046:Koster, Martijn (25 February 1994).

97:

52:

17:

164:https://en.wikipedia.org/robots.txt

139:

108:

2164:

2090:

1689:"Guide to General Server Security"

1339:from the original on 6 August 2013

1244:"Uncrawled URLs in search results"

1115:Pierce, David (14 February 2024).

377:https://www.example.com/robots.txt

289:generative artificial intelligence

157:

2254:

2202:

1884:from the original on May 30, 2016

1014:

864:Automated Content Access Protocol

817:Maximum size of a robots.txt file

588:not to kill the company founders

448:

436:

62:. The present address (URL) is a

2189:from the original on 6 July 2024

897:National Digital Library Program

831:

803:A "noindex" HTTP response header

550:Many robots also pass a special

217:Martijn Koster (original author)

2150:from the original on 2022-10-17

2132:

2079:from the original on 2013-08-08

2032:

2007:

1995:from the original on 2018-11-18

1977:

1965:from the original on 2016-02-03

1926:

1915:from the original on 2018-11-18

1903:Newman, Lily Hay (2014-07-03).

1896:

1864:

1838:

1827:from the original on 2014-01-01

1813:

1802:from the original on 2013-12-29

1788:

1777:from the original on 2014-01-07

1763:

1752:from the original on 2016-04-01

1725:

1713:from the original on 2011-10-08

1680:

1668:from the original on 2015-08-21

1650:

1639:from the original on 2015-08-14

1625:

1613:from the original on 2017-05-16

1581:from the original on 2018-12-04

1563:

1551:from the original on 2017-02-18

1533:

1503:

1491:from the original on 2013-01-25

1458:from the original on 2013-01-21

1432:from the original on 2013-01-15

1369:from the original on 2014-08-18

1250:from the original on 2014-01-06

1236:

1184:from the original on 2019-07-10

1155:from the original on 2015-09-07

1143:Barry Schwartz (30 June 2014).

1087:from the original on 2013-11-25

1028:from the original on 2014-01-12

1002:from the original on 2013-09-27

969:from the original on 2017-04-03

689:for webmasters, to control the

545:

361:Internet Engineering Task Force

1545:"Robots.txt is a suicide note"

1166:

1069:

1039:

980:

951:

608:stands for all robots and the

417:does not apply to pages under

13:

1:

1599:Jones, Brad (24 April 2017).

1024:. Robotstxt.org. 1994-06-30.

945:

708:directive, allowing multiple

428:

415:http://example.com/robots.txt

393:A robots.txt file covers one

306:The standard was proposed by

154:, was based on this revision.

2171:Allyn, Bobby (5 July 2024).

1936:. 2018-01-10. Archived from

7:

2040:"Robots.txt Specifications"

1269:"About Ask.com: Webmasters"

824:

599:

525:

366:

24:of this page, as edited by

10:

2259:

2228:Search engine optimization

1511:"ArchiveBot: Bad behavior"

699:

User-agent: *
Disallow: /

573:redirect humans.txt to an

536:security through obscurity

401:had a robots.txt file but

301:

281:security through obscurity

256:used for implementing the

161:

95:

50:

2100:Robots Exclusion Protocol

1732:Sverre H. Huseby (2004).

1205:Robots Exclusion Protocol

User-agent: *
Disallow:

258:Robots Exclusion Protocol

227:

210:

202:

194:

181:

177:Robots Exclusion Protocol

176:

1246:. YouTube. Oct 5, 2009.

876:Distributed web crawling

806:

770:

736:Robot Exclusion Standard

704:Some crawlers support a

556:pass alternative content

419:http://example.com:8080/

324:denial-of-service attack

314:in February 1994 on the

264:to indicate to visiting

1823:. Iis.net. 2013-11-06.

1704:10.6028/NIST.SP.800-123

User-agent: *
Allow: /

479:Artificial intelligence

1022:"The Web Robots Pages"

987:Fielding, Roy (1994).

808:X-Robots-Tag: noindex

693:'s subsequent visits.

675:Nonstandard extensions

2178:All Things Considered

1052:www-talk mailing list

746:Meta tags and headers

680:Crawl-delay directive

505:. In 2023, blog host

260:, a standard used by

1934:"/killer-robots.txt"

1798:. Httpd.apache.org.

1515:wiki.archiveteam.org

1065:on October 29, 2013.

767:A "noindex" meta tag

561:Some sites, such as

423:https://example.com/

326:on Koster's server.

277:voluntary compliance

1872:"Github humans.txt"

1846:"Google humans.txt"

1773:. User-agents.org.

1309:on 13 December 2012

794:"noindex"

730:Universal "*" match

409:would not apply to

310:, when working for

173:

115:← Previous revision

2127:Proposed Standard.

1989:support.google.com

1481:"Using robots.txt"

1299:"About AOL Search"

1232:Proposed Standard.

1149:Search Engine Land

893:for search engines

785:"robots"

582:/killer-robots.txt

502:The New York Times

441:Some major search

171:

45:06:33, 6 July 2024

2144:Google Developers

2044:Google Developers

1577:. 17 April 2017.

1426:Google Developers

1063:archived message)

247:

246:

198:Proposed Standard

159:Internet protocol

98:→Further reading

53:→Further reading

2250:

2214:

2213:

2211:Official website

2198:

2196:

2194:

2159:

2158:

2156:

2155:

2136:

2130:

2125:

2116:

2114:10.17487/RFC9309

2094:

2088:

2087:

2085:

2084:

2069:

2060:

2059:

2057:

2055:

2036:

2030:

2029:

2027:

2026:

2017:. Archived from

2011:

2005:

2004:

2002:

2000:

1981:

1975:

1974:

1972:

1970:

1955:

1949:

1948:

1946:

1945:

1930:

1924:

1923:

1921:

1920:

1900:

1894:

1893:

1891:

1889:

1868:

1862:

1861:

1859:

1857:

1842:

1836:

1835:

1833:

1832:

1817:

1811:

1810:

1808:

1807:

1792:

1786:

1785:

1783:

1782:

1767:

1761:

1760:

1758:

1757:

1729:

1723:

1722:

1720:

1718:

1712:

1693:

1684:

1678:

1677:

1675:

1673:

1654:

1648:

1647:

1645:

1644:

1629:

1623:

1622:

1620:

1618:

1596:

1590:

1589:

1587:

1586:

1575:blog.archive.org

1567:

1561:

1560:

1558:

1556:

1547:. Archive Team.

1537:

1531:

1530:

1528:

1526:

1517:. Archive Team.

1507:

1501:

1500:

1498:

1496:

1477:

1468:

1467:

1465:

1463:

1448:

1442:

1441:

1439:

1437:

1418:

1409:

1408:

1406:

1404:

1389:"DuckDuckGo Bot"

1385:

1379:

1378:

1376:

1374:

1355:

1349:

1348:

1346:

1344:

1325:

1319:

1318:

1316:

1314:

1305:. Archived from

1295:

1289:

1288:

1286:

1284:

1265:

1259:

1258:

1256:

1255:

1240:

1234:

1230:

1221:

1219:10.17487/RFC9309

1199:

1193:

1192:

1190:

1189:

1170:

1164:

1163:

1161:

1160:

1140:

1134:

1133:

1131:

1129:

1112:

1097:

1096:

1094:

1092:

1083:. 19 June 2006.

1073:

1067:

1066:

1064:

1054:. Archived from

1043:

1037:

1036:

1034:

1033:

1018:

1012:

1011:

1009:

1007:

993:

984:

978:

977:

975:

974:

963:Greenhills.co.uk

955:

886:Internet Archive

859:

851:

841:

836:

835:

798:

795:

792:

789:

786:

783:

780:

777:

774:

752:Robots meta tags

741:

722:

715:

707:

611:

607:

583:

568:

520:

467:Internet Archive

424:

420:

416:

412:

408:

404:

400:

378:

374:

243:

237:

234:

186:

174:

170:

144:accepted version

127:Newer revision →

105:

103:

101:

92:

71:

69:current revision

61:

60:

58:

56:

46:

42:

41:

2165:Further reading

1485:Help.yandex.com

1081:Charlie's Diary

881:Focused crawler

855:

847:

839:Internet portal

203:First published

190:

167:

160:

156:

155:

138:

137:

136:

135:

134:

119:Latest revision

2203:External links

2201:

2200:

2199:

2166:

2163:

2161:

2160:

2131:

2089:

2061:

2031:

2006:

1976:

1961:. 3 May 2012.

1950:

1925:

1909:Slate Magazine

1895:

1863:

1837:

1812:

1787:

1762:

1744:

1724:

1679:

1649:

1624:

1606:Digital Trends

1591:

1562:

1532:

1502:

1469:

1443:

1410:

1393:DuckDuckGo.com

1380:

1363:Blogs.bing.com

1350:

1320:

1303:Search.aol.com

687:search console

681:

678:

676:

673:

669:

656:

650:

644:

638:

632:

626:

617:

614:

601:

598:

586:the Terminator

547:

544:

527:

524:

480:

477:

472:Digital Trends

450:

449:Archival sites

447:

438:

437:Search engines

435:

430:

427:

368:

365:

331:web developers

320:Charles Stross

308:Martijn Koster

303:

300:

245:

244:

229:

225:

224:

222:

221:

218:

214:

212:

208:

207:

204:

200:

199:

196:

192:

191:

187:

179:

178:

158:

146:of this page,

141:

102:Updated a URL.

78:

64:permanent link

57:Updated a URL.

27:

16:

15:

9:

6:

4:

3:

2:

2255:

2244:

2241:

2239:

2236:

2234:

2231:

2229:

2226:

2225:

2223:

2212:

2207:

2206:

2188:

2184:

2180:

2179:

2174:

2169:

2168:

2149:

2145:

2141:

2135:

2128:

2123:

2120:

2115:

2110:

2106:

2102:

2101:

2093:

2078:

2074:

2068:

2066:

2049:

2045:

2041:

2035:

2021:on 2009-03-05

2020:

2016:

2010:

1994:

1990:

1986:

1980:

1964:

1960:

1954:

1940:on 2018-01-10

1939:

1935:

1929:

1914:

1910:

1906:

1899:

1883:

1879:

1878:

1873:

1867:

1851:

1847:

1841:

1826:

1822:

1816:

1801:

1797:

1791:

1776:

1772:

1766:

1751:

1747:

1745:9780470857472

1329:"Baiduspider"

1324:

1308:

1304:

1300:

1294:

1278:

1274:

1273:About.ask.com

1270:

1264:

1249:

1245:

1239:

1233:

1228:

1225:

1220:

1215:

1211:

1207:

1206:

1198:

1183:

1179:

1175:

1169:

1154:

1150:

1146:

1139:

1124:

1123:

1118:

1111:

1109:

1107:

1105:

1103:

1086:

1082:

1078:

1072:

1062:

1057:

1053:

1049:

1042:

1027:

1023:

1017:

1006:September 25,

1001:

997:

990:

983:

968:

964:

960:

954:

950:

940:

937:

935:

934:Web archiving

932:

930:

927:

925:

922:

920:

917:

915:

912:

910:

907:

904:

901:

898:

895:

892:

891:Meta elements

485:generative AI

476:

474:

473:

468:

464:

461:. Co-founder

460:

456:

446:

444:

434:

426:

411:a.example.com

403:a.example.com

2238:Web scraping

2191:. Retrieved

2176:

2152:. Retrieved

2143:

2134:

2126:

2099:

2092:

2081:. Retrieved

2054:February 15,

2052:. Retrieved

2043:

2034:

2023:. Retrieved

2019:the original

2009:

1997:. Retrieved

1988:

1979:

1967:. Retrieved

1953:

1942:. Retrieved

1938:the original

1928:

1917:. Retrieved

1908:

1898:

1886:. Retrieved

1875:

1866:

1854:. Retrieved

1840:

1829:. Retrieved

1815:

1804:. Retrieved

1790:

1779:. Retrieved

1765:

1754:. Retrieved

1734:

1727:

1715:. Retrieved

1695:

1682:

1670:. Retrieved

1662:The Register

1661:

1652:

1641:. Retrieved

1627:

1615:. Retrieved

1604:

1594:

1583:. Retrieved

1574:

1565:

1553:. Retrieved

1535:

1523:. Retrieved

1514:

1505:

1493:. Retrieved

1484:

1460:. Retrieved

1446:

1434:. Retrieved

1425:

1401:. Retrieved

1392:

1383:

1371:. Retrieved

1362:

1353:

1341:. Retrieved

1332:

1323:

1311:. Retrieved

1307:the original

1302:

1293:

1281:. Retrieved

1272:

1263:

1252:. Retrieved

1238:

1231:

1204:

1197:

1186:. Retrieved

1177:

1168:

1157:. Retrieved

1148:

1138:

1126:. Retrieved

1120:

1089:. Retrieved

1080:

1071:

1056:the original

1051:

1041:

1030:. Retrieved

1016:

1004:. Retrieved

995:

992:(PostScript)

982:

971:. Retrieved

962:

959:"Historical"

953:

857:security.txt

820:

811:

749:

735:

733:

719:

716:in the form

712:in the same

703:

683:

666:

659:

653:

647:

641:

635:

629:

623:

620:

603:

584:instructing

579:

574:

560:

549:

546:Alternatives

529:

513:

511:

500:

482:

470:

455:Archive Team

452:

440:

432:

392:

388:

385:

370:

358:

339:

328:

315:

305:

293:

274:

266:web crawlers

257:

249:

248:

168:

151:

22:old revision

19:

18:

1555:18 February

1541:Jason Scott

1495:16 February

1462:16 February

1436:16 February

1373:16 February

1343:16 February

1313:16 February

1283:16 February

939:Web crawler

929:Spider trap

742:statement.

594:Sergey Brin

463:Jason Scott

407:example.com

399:example.com

152:6 July 2024

79:DocWatson42

28:DocWatson42

20:This is an

2243:Text files

2222:Categories

2154:2022-10-17

2083:2013-08-17

2025:2009-03-23

1999:22 October

1969:9 February

1944:2018-05-25

1919:2019-10-03

1888:October 3,

1856:October 3,

1831:2013-12-29

1806:2013-12-29

1781:2013-12-29

1756:2015-08-12

1717:August 12,

1672:August 12,

1643:2015-08-10

1585:2018-12-01

1525:10 October

1254:2013-12-29

1188:2019-07-10

1159:2015-11-19

1032:2013-12-29

998:. Geneva.

973:2017-03-03

946:References

761:httpd.conf

714:robots.txt

671:directory

590:Larry Page

567:humans.txt

552:user-agent

429:Compliance

373:robots.txt

346:WebCrawler

270:web robots

268:and other

250:robots.txt

172:robots.txt

1333:Baidu.com

1122:The Verge

1061:Hypermail

757:.htaccess

740:Disallow:

718:Sitemap:

691:Googlebot

565:, host a

532:web robot

515:The Verge

354:AltaVista

233:robotstxt

2233:Websites

2187:Archived

2148:Archived

2077:Archived

2048:Archived

1993:Archived

1963:Archived

1913:Archived

1882:Archived

1850:Archived

1825:Archived

1800:Archived

1775:Archived

1750:Archived

1708:Archived

1666:Archived

1637:Archived

1611:Archived

1579:Archived

1549:Archived

1519:Archived

1489:Archived

1456:Archived

1430:Archived

1403:25 April

1397:Archived

1367:Archived

1337:Archived

1277:Archived

1248:Archived

1182:Archived

1153:Archived

1128:16 March

1091:19 April

1085:Archived

1026:Archived

1000:Archived

967:Archived

924:Sitemaps

919:Perma.cc

909:nofollow

905:(NDIIPP)

825:See also

720:full-url

710:Sitemaps

610:Disallow

600:Examples

526:Security

459:sitemaps

367:Standard

342:standard

340:de facto

316:www-talk

296:sitemaps

262:websites

254:filename

241:RFC 9309

148:accepted

89:contribs

38:contribs

914:noindex

870:BotSeer

849:ads.txt

788:content

763:files.

706:Sitemap

700:Sitemap

443:engines

381:website

302:History

252:is the

228:Website

211:Authors

189:folder.

2193:6 July

1877:GitHub

1742:

899:(NDLP)

662:Google

577:page.

571:GitHub

563:Google

507:Medium

493:Google

489:OpenAI

395:origin

352:, and

335:server

285:server

195:Status

1711:(PDF)

1692:(PDF)

1617:8 May

797:/>

575:About

519:'

350:Lycos

312:Nexor

2195:2024

2122:9309

2105:IETF

2056:2020

2001:2018

1971:2016

1890:2019

1858:2019

1740:ISBN

1719:2015

1674:2015

1619:2017

1557:2017

1527:2022

1497:2013

1464:2013

1438:2013

1405:2017

1375:2013

1345:2013

1315:2013

1285:2013

1227:9309

1210:IETF

1130:2024

1093:2014

1008:2013

779:name

776:meta

773:<

759:and

734:The

592:and

499:and

235:.org

131:diff

125:) |

123:diff

111:diff

85:talk

34:talk

2183:NPR

2119:RFC

2109:doi

1700:doi

1224:RFC

1214:doi

497:BBC

421:or

150:on

142:An

43:at

2224::

2185:.

2181:.

2175:.

2146:.

2142:.

2117:.

2107:.

2103:.

2075:.

2064:^

2046:.

2042:.

1991:.

1987:.

1911:.

1907:.

1880:.

1874:.

1848:.

1748:.

1706:.

1698:.

1694:.

1664:.

1660:.

1635:.

1609:.

1603:.

1573:.

1543:.

1513:.

1487:.

1483:.

1472:^

1454:.

1428:.

1424:.

1413:^

1395:.

1391:.

1365:.

1361:.

1335:.

1331:.

1301:.

1275:.

1271:.

1222:.

1212:.

1208:.

1180:.

1176:.

1151:.

1147:.

1119:.

1101:^

1079:.

1050:.

994:.

965:.

961:.

723::

596:.

425:.

356:.

348:,

291:.

238:,

117:|

113:)

100::

87:|

55::

36:|

2197:.

2157:.

2124:.

2111::

2086:.

2058:.

2028:.

2003:.

1973:.

1947:.

1922:.

1892:.

1860:.

1834:.

1809:.

1784:.

1759:.

1721:.

1702::

1676:.

1646:.

1621:.

1588:.

1559:.

1529:.

1499:.

1466:.

1440:.

1407:.

1377:.

1347:.

1317:.

1287:.

1257:.

1229:.

1216::

1191:.

1162:.

1132:.

1095:.

1059:(

1035:.

1010:.

976:.

791:=

782:=

606:*

166:.

133:)

129:(

121:(

109:(

104:)

94:(

91:)

83:(

72:.

59:)

49:(

40:)

32:(

Text is available under the Creative Commons Attribution-ShareAlike License. Additional terms may apply.