
matrix multiplication to map those −1 to 1 values to 0 to 1, which are more usual coordinates for depth map (texture map) lookup. This scaling can be done before the perspective division, and is easily folded into the previous transformation calculation by multiplying that matrix with the following:

81:

If you looked out from a source of light, all the objects you can see would appear in light. Anything behind those objects, however, would be in shadow. This is the basic principle used to create a shadow map. The light's view is rendered, storing the depth of every surface it sees (the shadow map).

179:

coordinates with axis X and Y to represent its geometric shape on screen, these vertex coordinates will match up with the corresponding edges of the shadow parts within the shadow map (depth map) itself. The second step is the depth test which compares the object z values against the z values from

525:

or shadow continuity glitches. A simple way to overcome this limitation is to increase the shadow map size, but due to memory, computational or hardware constraints, it is not always possible. Commonly used techniques for real-time shadow mapping have been developed to circumvent this limitation.

153:

In many implementations, it is practical to render only a subset of the objects in the scene to the shadow map to save some of the time it takes to redraw the map. Also, a depth offset which shifts the objects away from the light may be applied to the shadow map rendering in an attempt to resolve

437:

implementation, this test would be done at the fragment level. Also, care needs to be taken when selecting the type of texture map storage to be used by the hardware: if interpolation cannot be done, the shadow will appear to have a sharp, jagged edge (an effect that can be reduced with greater

, several solutions have been developed: performing several lookups on the shadow map, generating geometry meant to emulate the soft edge, or creating non-standard depth shadow maps. Notable examples of these are Percentage Closer Filtering, Smoothies, and Variance Shadow Maps.

364:

149:

This depth map must be updated any time there are changes to either the light or the objects in the scene, but can be reused in other situations, such as those where only the viewing camera moves. (If there are multiple lights, a separate depth map must be used for each light.)

89:, but the shadow map can be a faster alternative depending on how much fill time is required for either technique in a particular application and therefore may be more suitable to real-time applications. In addition, shadow maps do not require the use of an additional

158:

problems where the depth map value is close to the depth of a surface being drawn (i.e., the shadow-casting surface) in the next step. Alternatively, culling front faces and only rendering the back of objects to the shadow map is sometimes used for a similar result.

141:

From this rendering, the depth buffer is extracted and saved. Because only the depth information is relevant, it is common to avoid updating the color buffers and disable all lighting and texture calculations for this rendering, to save drawing time. This

101:

Rendering a shadowed scene involves two major drawing steps. The first produces the shadow map itself, and the second applies it to the scene. Depending on the implementation (and the number of lights), this may require two or more drawing passes.

468:

are available, the depth map test may be performed by a fragment shader which simply draws the object in shadow or lit depending on the result, drawing the scene in a single pass (after an initial pass to generate the shadow map).

252:

489:) this should technically be done using only the ambient component of the light, but this is usually adjusted to also include a dim diffuse light to prevent curved surfaces from appearing flat in shadow.

372:, or other graphics hardware extension, this transformation is usually applied at the vertex level, and the generated value is interpolated between other vertices and passed to the fragment level.

441:

It is possible to modify the depth map test to produce shadows with a soft edge by using a range of values (based on the proximity to the edge of the shadow) rather than simply pass or fail.
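A minimal sketch of one way to replace the pass/fail result with a range of values, in the spirit of percentage closer filtering: the binary depth comparison is repeated over a small neighbourhood of depth map texels and the results are averaged. The function name `lit_fraction`, the `radius` parameter, the `bias` value, and the list-of-rows depth map layout are assumptions made for illustration, not part of any particular API.

```python
def lit_fraction(depth_map, row, col, depth, radius=1, bias=0.005):
    """Return the fraction (0.0 to 1.0) of nearby texels that leave the point lit.

    depth_map: list of rows of depths as seen from the light (hypothetical layout).
    row, col:  texel the point projects to in the depth map.
    depth:     depth of the point as seen from the light.
    """
    rows, cols = len(depth_map), len(depth_map[0])
    lit = 0
    total = 0
    for r in range(row - radius, row + radius + 1):
        for c in range(col - radius, col + radius + 1):
            # Clamp to the map edge so border texels are reused, not skipped.
            rr = min(max(r, 0), rows - 1)
            cc = min(max(c, 0), cols - 1)
            # The point is lit with respect to this texel if nothing closer
            # to the light was recorded there (bias avoids self-shadowing).
            if depth - bias <= depth_map[rr][cc]:
                lit += 1
            total += 1
    return lit / total
```

A result of 1.0 means fully lit, 0.0 fully shadowed, and intermediate values occur only near shadow edges, which is what softens them.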

196:

To test a point against the depth map, its position in the scene coordinates must be transformed into the equivalent position as seen by the light. This is accomplished by a

54:

in 1978, in a paper entitled "Casting curved shadows on curved surfaces." Since then, it has been used both in pre-rendered and real-time scenes in many console and PC games.

521:

One of the key disadvantages of real-time shadow mapping is that the size and depth of the shadow map determine the quality of the final shadows. This is usually visible as

207:

The matrix used to transform the world coordinates into the light's viewing coordinates is the same as the one used to render the shadow map in the first step (under

1072:

171:

viewpoint, applying the shadow map. This process has three major components. The first step is to find the coordinates of the object as seen from the light, as a

82:

Next, the regular scene is rendered comparing the depth of every point drawn (as if it were being seen by the light, rather than the eye) to this depth map.
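The comparison can be sketched as follows, assuming the point has already been transformed into the light's view and mapped to texture coordinates in the 0 to 1 range. The name `in_shadow`, the nearest-texel lookup, the `bias` value, and the choice to treat points outside the map as lit are illustrative assumptions, not a definitive implementation.

```python
def in_shadow(depth_map, u, v, depth, bias=0.005):
    """Return True if a point is farther from the light than the stored depth.

    depth_map: list of rows of depths rendered from the light (hypothetical layout).
    u, v:      light-space texture coordinates, expected in [0, 1].
    depth:     depth of the point as seen from the light.
    """
    rows, cols = len(depth_map), len(depth_map[0])
    # Points that project outside the depth map are treated as lit here.
    if not (0.0 <= u <= 1.0 and 0.0 <= v <= 1.0):
        return False
    # Nearest-texel lookup; clamp so u == 1.0 or v == 1.0 stays in range.
    col = min(int(u * cols), cols - 1)
    row = min(int(v * rows), rows - 1)
    # A small bias keeps a surface from shadowing itself due to precision.
    return depth - bias > depth_map[row][col]
```

For example, with `depth_map = [[0.3, 1.0], [1.0, 1.0]]`, a point at depth 0.8 that projects onto the first texel lies behind the occluder recorded there and is reported as shadowed.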

499:

to combine their effect with the lights already drawn. (Each of these passes requires an additional previous pass to generate the associated shadow map.)

639:

93:

and can be modified to produce shadows with a soft edge. Unlike shadow volumes, however, the accuracy of a shadow map is limited by its resolution.

1156:

752:

695:

) location falls outside the depth map, the programmer must decide whether the surface should be lit or shadowed by default (usually lit).

529:

Also notable is that generated shadows, even if aliasing-free, have hard edges, which is not always desirable. To emulate real-world

931:

564:

$$\begin{bmatrix}0.5&0&0&0.5\\0&0.5&0&0.5\\0&0&0.5&0.5\\0&0&0&1\end{bmatrix}$$
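As a hedged illustration of the scale-and-bias step, the sketch below multiplies homogeneous light-space clip coordinates by the matrix with diagonal entries 0.5 and a last column of 0.5, then performs the perspective division, so each component ends up in the 0 to 1 range. The helper names `mat_vec` and `to_shadow_coords` are hypothetical and chosen only for this example.

```python
# Scale-and-bias matrix: maps each of x, y, z from [-1, 1] to [0, 1].
BIAS = [
    [0.5, 0.0, 0.0, 0.5],
    [0.0, 0.5, 0.0, 0.5],
    [0.0, 0.0, 0.5, 0.5],
    [0.0, 0.0, 0.0, 1.0],
]


def mat_vec(m, v):
    """Multiply a 4x4 matrix (list of rows) by a 4-component column vector."""
    return [sum(m[r][c] * v[c] for c in range(4)) for r in range(4)]


def to_shadow_coords(clip):
    """Apply the bias to homogeneous clip coordinates, then divide by w."""
    x, y, z, w = mat_vec(BIAS, clip)
    return (x / w, y / w, z / w)
```

Because the last column is multiplied by w, applying the matrix before the division gives the same result as computing 0.5 * (x / w) + 0.5 afterwards, which is why the bias can be folded into the light's transformation matrix.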

492:

Enable the depth map test and render the scene lit. Areas where the depth map test fails will not be overwritten and will remain shadowed.

1353:

905:

832:

1064:

526:

These include Cascaded Shadow Maps, Trapezoidal Shadow Maps, Light Space Perspective Shadow Maps, and Parallel-Split Shadow Maps.

), which usually does not allow a choice between two lighting models (lit and shadowed) and necessitates more rendering passes:

633:

1742:

1715:

915:

734:

472:

If shaders are not available, performing the depth map test must usually be implemented by some hardware extension (such as

1758:

1362:

130:

as wide as its desired angle of effect (it will be a sort of square spotlight). For directional light (e.g., that from the

51:

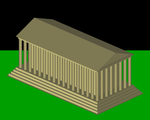

448:. The picture above captioned "visualization of the depth map projected onto the scene" is an example of such a process.

1482:

1305:

444:

The shadow mapping technique can also be modified to draw a texture onto the lit regions, simulating the effect of a

819:

1326:

640:

https://developer.nvidia.com/gpugems/gpugems3/part-ii-light-and-shadows/chapter-8-summed-area-variance-shadow-maps

1346:

1216:

1191:

126:

The first step renders the scene from the light's point of view. For a point light source, the view should be a

1605:

1406:

1296:

1262:

1730:

1720:

1610:

1429:

753:

https://web.archive.org/web/20101208212121/http://visual-computing.intel-research.net/art/publications/sdsm/

696:

https://web.archive.org/web/20101208213624/http://visual-computing.intel-research.net/art/publications/avsm/

1725:

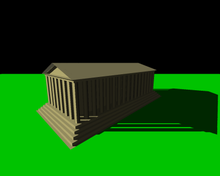

1590:

1530:

530:

658:

565:

http://developer.download.nvidia.com/SDK/10.5/opengl/src/cascaded_shadow_maps/doc/cascaded_shadow_maps.pdf

238:) falls between −1 and 1 (if it is visible from the light view). Many implementations (such as OpenGL and

1518:

1339:

180:

the depth map, and finally, once accomplished, the object must be drawn either in shadow or in light.

1316:

841:

performs the shadow test in eye-space rather than light-space to keep texture access more sequential.

201:

1149:

1049:

1007:

965:

890:

627:

558:

1093:

608:

212:

172:

135:

860:

740:

415:) location, the object is considered to be behind an occluding object and should be marked as a

1737:

1687:

1652:

1630:

1625:

808:

485:

168:

127:

758:

508:

1580:

1559:

1503:

1311:

1136:

1036:

994:

952:

877:

197:

1463:

1449:

1393:

722:

634:

http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.104.2569&rep=rep1&type=pdf

47:

735:

http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.59.3376&rep=rep1&type=pdf

716:

621:

400:

value corresponds to its associated depth, which can now be tested against the depth map.

211:

this is the product of the modelview and projection matrices). This will produce a set of

8:

1595:

1523:

1411:

746:

204:, but a second set of coordinates must be generated to locate the object in light space.

176:

1122:

764:

1657:

1645:

1492:

1487:

1439:

1268:

670:

473:

464:

Drawing the scene with shadows can be done in several different ways. If programmable

1707:

1600:

1585:

1401:

1258:

911:

710:

689:

589:

496:

664:

1779:

1692:

1682:

1564:

1552:

1250:

419:, to be drawn in shadow by the drawing process. Otherwise, it should be drawn lit.

1272:

577:

1513:

1378:

1370:

1247:

Proceedings of the 2006 symposium on Interactive 3D graphics and games - SI3D '06

1022:

445:

70:

1321:

1312:

Riemer's step-by-step tutorial implementing Shadow Mapping with HLSL and DirectX

595:

1118:

813:

90:

1773:

1667:

1541:

1471:

980:

798:

583:

218:

86:

1254:

838:

407:

value is greater than the value stored in the depth map at the appropriate (

1672:

1635:

1620:

1615:

1535:

1476:

1020:

1242:

396:

values usually correspond to a location in the depth map texture, and the

1640:

1508:

1444:

804:

62:

482:

Render the entire scene in shadow. For the most common lighting models (

659:

http://developer.download.nvidia.com/shaderlibrary/docs/shadow_PCSS.pdf

155:

676:

200:. The location of the object on the screen is determined by the usual

143:

1331:

1498:

1116:

904:

Akenine-Möller, Tomas; Haines, Eric; Hoffman, Naty (2018-08-06).

522:

239:

1547:

728:

701:

628:

https://doclib.uhasselt.be/dspace/bitstream/1942/8040/1/3227.pdf

559:

https://developer.nvidia.com/gpugems/GPUGems3/gpugems3_ch10.html

495:

An additional pass may be used for each additional light, using

1677:

1434:

1300:

1171:

1097:

938:

504:

465:

434:

369:

208:

43:

609:

https://developer.nvidia.com/gpugems/GPUGems/gpugems_ch11.html

61:

is visible from the light source, by comparing the pixel to a

1697:

1383:

903:

741:

http://www.cspaul.com/doku.php?id=publications:rosen.2012.i3d

118:

58:

1169:

816:, a much slower technique capable of very realistic lighting

645:

27:

1421:

1062:

981:"Anti-aliasing and Continuity with Trapezoidal Shadow Maps"

759:

http://image.diku.dk/projects/media/morten.mikkelsen.07.pdf

188:

380:

69:

image of the light source's view, stored in the form of a

1249:. Association for Computing Machinery. pp. 161–165.

131:

110:

76:

723:

http://www.cs.unc.edu/~zhangh/technotes/shadow/shadow.ps

717:

http://graphics.pixar.com/library/DeepShadows/paper.pdf

622:

https://discovery.ucl.ac.uk/id/eprint/10001/1/10001.pdf

516:

456:

192:

Visualization of the depth map projected onto the scene

19:

1241:

Donnelly, William; Lauritzen, Andrew (14 March 2006).

978:

747:

http://www.idav.ucdavis.edu/func/return_pdf?pub_id=919

261:

1021:

Michael Wimmer; Daniel Scherzer; Werner Purgathofer.

765:

http://graphics.stanford.edu/papers/silmap/silmap.pdf

255:

513:

to accomplish the shadow map process in two passes.

167:

The second step is to draw the scene from the usual

1327:

Shadow Mapping implementation using Java and OpenGL

671:

http://www.crcnetbase.com/doi/abs/10.1201/b10648-36

690:http://www.cs.cornell.edu/~kb/publications/ASM.pdf

590:http://www-sop.inria.fr/reves/Marc.Stamminger/psm/

358:

1322:NVIDIA Real-time Shadow Algorithms and Techniques

1240:

1065:"Parallel-Split Shadow Maps on Programmable GPUs"

711:http://sites.google.com/site/osmanbrian2/dpsm.pdf

665:https://jankautz.com/publications/VSSM_PG2010.pdf

146:is often stored as a texture in graphics memory.

16:Method to draw shadows in computer graphic images

1771:

1192:"Common Techniques to Improve Shadow Depth Maps"

822:, another very slow but very realistic technique

388:Once the light-space coordinates are found, the

578:https://www.cg.tuwien.ac.at/research/vr/lispsm/

503:The example pictures in this article used the

1347:

644:SMSR "Shadow Map Silhouette Revectorization"

596:http://bib.irb.hr/datoteka/570987.12_CSSM.pdf

1123:"Rendering Fake Soft Shadows with Smoothies"

833:Smooth Penumbra Transitions with Shadow Maps

782:Light Space Perspective Shadow Maps (LSPSMs)

536:

1306:Shadow Mapping with Today's OpenGL Hardware

861:"Casting curved shadows on curved surfaces"

1354:

1340:

1317:Improvements for Shadow Mapping using GLSL

858:

584:http://www.comp.nus.edu.sg/~tants/tsm.html

460:Final scene, rendered with ambient shadows

183:

105:

1155:CS1 maint: multiple names: authors list (

57:Shadows are created by testing whether a

455:

379:

187:

117:

109:

26:

18:

1772:

77:Principle of a shadow and a shadow map

1361:

1335:

1063:Fan Zhang; Hanqiu Sun; Oskari Nyman.

1023:"Light Space Perspective Shadow Maps"

757:SPPSM "Separating Plane Perspective"

96:

85:This technique is less accurate than

1759:List of computer graphics algorithms

1170:William Donnelly; Andrew Lauritzen.

663:VSSM "Variance Soft Shadow Mapping"

517:Shadow map real-time implementations

451:

162:

122:Scene from the light view, depth map

1420:

907:Real-Time Rendering, Fourth Edition

807:, a slower technique often used in

677:http://getlab.org/publications/FIV/

675:FIV "Fullsphere Irradiance Vector"

13:

826:

607:PCF "Percentage Closer Filtering"

215:that need a perspective division (

114:Scene rendered from the light view

50:. This concept was introduced by

14:

1791:

1290:

669:SSSS "Screen space soft shadows"

576:LiSPSM "Light Space Perspective"

375:

1282:– via ACM Digital Library.

770:

1234:

1209:

1184:

651:

1163:

1110:

1086:

1056:

1014:

979:Tobias Martin; Tiow-Seng Tan.

972:

924:

897:

852:

779:Perspective shadow maps (PSMs)

729:http://gamma.cs.unc.edu/LOGSM/

1:

1716:3D computer graphics software

845:

801:, another shadowing technique

702:http://free-zg.t-com.hr/cssm/

638:SAVSM "Summed Area Variance"

224:normalized device coordinates

1531:Hidden-surface determination

614:

601:

551:

7:

792:

788:Variance Shadow Maps (VSMs)

785:Cascaded Shadow Maps (CSMs)

751:SDSM "Sample Distribution"

694:AVSM "Adaptive Volumetric"

682:

226:, in which each component (

10:

1796:

745:RMSM "Resolution Matched"

570:

1751:

1706:

1573:

1462:

1392:

1369:

1117:Eric Chan, Fredo Durand,

1094:"Shadow Map Antialiasing"

763:SSSM "Shadow Silhouette"

657:PCSS "Percentage Closer"

646:http://bondarev.nl/?p=326

541:

537:Shadow mapping techniques

202:coordinate transformation

23:Scene with shadow mapping

438:shadow map resolution).

242:) require an additional

1743:Vector graphics editors

1738:Raster graphics editors

1297:Hardware Shadow Mapping

1255:10.1145/1111411.1111440

776:Shadow Depth Maps (SDM)

709:DPSM "Dual Paraboloid"

384:Depth map test failures

213:homogeneous coordinates

184:Light space coordinates

136:orthographic projection

106:Creating the shadow map

1626:Checkerboard rendering

1243:"Variance shadow maps"

1217:"Cascaded Shadow Maps"

1172:"Variance Shadow Maps"


932:"Cascaded shadow maps"

885:Cite journal requires

839:Forward shadow mapping

733:MDSM "Multiple Depth"

557:PSSM "Parallel Split"

486:Phong reflection model

461:

385:

360:

193:

128:perspective projection

123:

115:

42:is a process by which

32:

24:

1581:Affine transformation

1560:Surface triangulation

1504:Anisotropic filtering

510:GL_ARB_shadow_ambient

459:

383:

361:

198:matrix multiplication

191:

121:

113:

31:Scene with no shadows

30:

22:

706:DASM "Deep Adaptive"

700:CSSM "Camera Space"

594:CSSM "Camera Space"

253:

48:3D computer graphics

40:shadowing projection

1596:Collision detection

1524:Global illumination

1075:on January 17, 2010

727:LPSM "Logarithmic"

1646:Scanline rendering

1440:Parallax scrolling

1430:Isometric graphics

1221:Msdn.microsoft.com

1196:Msdn.microsoft.com

739:RTW "Rectilinear"

626:CSM "Convolution"

620:ESM "Exponential"

588:PSM "Perspective"

462:

386:

356:

350:

194:

124:

116:

97:Algorithm overview

33:

25:

1767:

1766:

1708:Graphics software

1601:Planar projection

1586:Back-face culling

1458:

1457:

1402:Alpha compositing

1363:Computer graphics

917:978-1-351-81615-1

835:Willem H. de Boer

497:additive blending

452:Drawing the scene

175:object only uses

163:Shading the scene

1787:

1693:Volume rendering

1565:Wire-frame model


1071:. Archived from


859:Lance Williams.

856:

582:TSM "Trapezoid"

365:

363:

362:

357:

355:

354:

138:should be used.


1514:Fluid animation

1454:

1416:

1388:

1379:Diffusion curve

1371:Vector graphics


827:Further reading

795:

773:

688:ASM "Adaptive"

685:

654:

632:VSM "Variance"

617:

604:

573:

563:CSM "Cascaded"

554:

544:

539:

519:

454:

378:

368:If done with a


1663:Shadow mapping


1291:External links

1289:

1286:

1285:

1263:

1233:

1208:

1183:

1162:

1146:|journal=

1119:Marco Corbetta

1109:

1085:

1055:

1046:|journal=

1013:

1004:|journal=

971:

962:|journal=

923:

916:

896:

887:|journal=

850:

849:

847:

844:

843:

842:

836:

828:

825:

824:

823:

817:

814:Photon mapping

811:

802:

794:

791:

790:

789:

786:

783:

780:

777:

772:

769:

768:

767:

761:

755:

749:

743:

737:

731:

725:

721:FSM "Forward"

719:

713:

707:

704:

698:

692:

684:

681:

680:

679:

673:

667:

661:

653:

650:

649:

648:

642:

636:

630:

624:

616:

613:

612:

611:

603:

600:

599:

598:

592:

586:

580:

572:

569:

568:

567:

561:

553:

550:

549:

548:

543:

540:

538:

535:

518:

515:

501:

500:

493:

490:

453:

450:

377:

376:Depth map test

374:

353:

347:

344:

342:

339:

337:

334:

332:

329:

328:

325:

322:

320:

317:

315:

312:

310:

307:

306:

303:

300:

298:

295:

293:

290:

288:

285:

284:

281:

278:

276:

273:

271:

268:

266:

263:

262:

260:

244:scale and bias

185:

182:

164:

161:

107:

104:

98:

95:

91:stencil buffer

87:shadow volumes

78:

75:

52:Lance Williams

36:Shadow mapping

15:

9:

6:

4:

3:

2:


1668:Shadow volume


1542:Triangle mesh

1539:

1537:

1534:

1532:

1529:

1525:

1522:

1521:

1520:

1517:

1515:

1512:

1510:

1507:

1505:

1502:

1500:

1497:

1494:

1491:

1489:

1486:

1484:

1480:

1478:

1475:

1473:

1472:3D projection

1470:

1469:

1467:

1465:

1461:

1451:

1448:

1446:

1443:

1441:

1438:

1436:

1433:

1431:

1428:

1427:

1425:

1423:

1419:

1413:

1412:Text-to-image


910:. CRC Press.

909:

908:

900:

892:

879:

862:

855:

851:

840:

837:

834:

831:

830:

821:

818:

815:

812:

810:

806:

803:

800:

799:Shadow volume

797:

796:

787:

784:

781:

778:

775:

774:

771:Miscellaneous


475:GL_ARB_shadow


219:3D projection


46:are added to

45:

41:

37:

29:

21:

1673:Shear matrix

1662:

1636:Path tracing

1621:Cone tracing

1616:Beam tracing

1536:Polygon mesh

1477:3D rendering

1276:. Retrieved

1246:

1236:

1224:. Retrieved

1220:

1211:

1199:. Retrieved

1195:

1186:

1175:. Retrieved

1165:

1137:cite journal

1126:. Retrieved

1112:

1101:. Retrieved

1088:

1077:. Retrieved

1073:the original

1068:

1058:

1037:cite journal

1026:. Retrieved

1016:

995:cite journal

984:. Retrieved

974:

953:cite journal

942:. Retrieved

926:

906:

899:

878:cite journal

867:. Retrieved

854:

652:Soft Shadows

547:SSM "Simple"

531:soft shadows

528:

520:

509:

502:

483:

474:

471:

463:

443:

440:

432:

427:

423:

421:

416:

412:

408:

404:

402:

397:

393:

389:

387:

367:

249:

243:

235:

231:

227:

223:

222:) to become

216:

206:

195:

166:

152:

148:

140:

125:

100:

84:

80:

66:

56:

39:

35:

34:

1688:Translation

1641:Ray casting

1631:Ray tracing

1509:Cel shading

1483:Image-based

1464:3D graphics

1445:Ray casting

1394:2D graphics

1226:November 7,

1201:November 7,

809:ray tracing

805:Ray casting

715:DSM "Deep"

1752:Algorithms

1606:Reflection

1278:7 November

1264:159593295X

1177:2008-02-14

1128:2008-02-14

1103:2008-02-14

1079:2008-02-14

1069:GPU Gems 3

1028:2008-02-14

986:2008-02-14

944:2008-02-14

869:2020-12-22

846:References

507:extension

1731:rendering

1721:animation

1611:Rendering

820:Radiosity

615:Filtering

602:Smoothing

552:Splitting

446:projector

156:stitching

144:depth map

1774:Category

1726:modeling

1653:Rotation

1591:Clipping

1574:Concepts

1553:Deferred

1519:Lighting

1499:Aliasing

1493:Unbiased

1488:Spectral

1308:, nVidia

793:See also

683:Assorted

523:aliasing

422:If the (

240:Direct3D

63:z-buffer

1780:Shading

1658:Scaling

1548:Shading

571:Warping

466:shaders

417:failure

403:If the

71:texture

44:shadows

1678:Shader

1450:Skybox

1435:Mode 7

1407:Layers

1301:nVidia

1273:538139

1271:

1261:

1098:NVidia

939:NVidia

914:

542:Simple

505:OpenGL

435:shader

370:shader

209:OpenGL

169:camera

134:), an

1698:Voxel

1683:Texel

1384:Pixel

1269:S2CID

935:(PDF)

864:(PDF)

433:In a

234:, or

67:depth

59:pixel

1422:2.5D

1280:2021

1259:ISBN

1228:2021

1203:2021

1157:link

1150:help

1050:help

1008:help

966:help

912:ISBN

891:help

484:see

392:and

217:see

1251:doi


132:Sun

65:or

38:or

1776::

1299:,

1267:.

1257:.

1245:.

1219:.

1194:.


230:,

177:2D

173:3D

73:.

1544:)

1540:(

1495:)

1481:(

1355:e

1348:t

1341:v

1253::

1230:.

1205:.

1180:.

1159:)

1152:)

1148:(

1131:.

1106:.

1082:.

1052:)

1048:(

1031:.

1010:)

1006:(

989:.

968:)

964:(

947:.

920:.

893:)

889:(

872:.

428:y

426:,

424:x

413:y

411:,

409:x

405:z

398:z

394:y

390:x


236:z

232:y

228:x
