$$
\begin{aligned}
\Pr(Y_i=1\mid \mathbf{X}_i) &= \Pr\left(Y_i^{1\ast} > Y_i^{0\ast}\mid \mathbf{X}_i\right)\\
&= \Pr\left(Y_i^{1\ast} - Y_i^{0\ast} > 0\mid \mathbf{X}_i\right)\\
&= \Pr\left(\boldsymbol{\beta}_1\cdot\mathbf{X}_i + \varepsilon_1 - \left(\boldsymbol{\beta}_0\cdot\mathbf{X}_i + \varepsilon_0\right) > 0\right)\\
&= \Pr\left((\boldsymbol{\beta}_1\cdot\mathbf{X}_i - \boldsymbol{\beta}_0\cdot\mathbf{X}_i) + (\varepsilon_1 - \varepsilon_0) > 0\right)\\
&= \Pr\left((\boldsymbol{\beta}_1 - \boldsymbol{\beta}_0)\cdot\mathbf{X}_i + (\varepsilon_1 - \varepsilon_0) > 0\right)\\
&= \Pr\left((\boldsymbol{\beta}_1 - \boldsymbol{\beta}_0)\cdot\mathbf{X}_i + \varepsilon > 0\right) && \text{(substitute } \varepsilon \text{ as above)}\\
&= \Pr\left(\boldsymbol{\beta}\cdot\mathbf{X}_i + \varepsilon > 0\right) && \text{(substitute } \boldsymbol{\beta} \text{ as above)}\\
&= \Pr\left(\varepsilon > -\boldsymbol{\beta}\cdot\mathbf{X}_i\right) && \text{(now, same as above model)}\\
&= \Pr\left(\varepsilon < \boldsymbol{\beta}\cdot\mathbf{X}_i\right)\\
&= \operatorname{logit}^{-1}(\boldsymbol{\beta}\cdot\mathbf{X}_i)\\
&= p_i
\end{aligned}
$$
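
The derivation above can be checked numerically. The sketch below assumes, as in the usual statement of this two-way model, that ε0 and ε1 are independent standard type-1 extreme value (Gumbel) errors, whose difference ε = ε1 − ε0 is standard logistic. The coefficient vectors, the covariate vector and the sample size are hypothetical. It simulates both latent utilities and compares the share of draws in which choice 1 wins with the inverse logit of (β1 − β0)·X.

```python
import math
import random

def logistic(t: float) -> float:
    """Standard logistic function, i.e. the inverse logit."""
    return 1.0 / (1.0 + math.exp(-t))

def gumbel(rng: random.Random) -> float:
    """Standard type-1 extreme value (Gumbel) draw via the inverse CDF."""
    u = rng.random()
    while u == 0.0:  # avoid log(0)
        u = rng.random()
    return -math.log(-math.log(u))

def simulate_choice_prob(beta0, beta1, x, n=200_000, seed=0):
    """Fraction of draws in which the latent utility of choice 1 exceeds that of choice 0."""
    rng = random.Random(seed)
    v0 = sum(b * xi for b, xi in zip(beta0, x))
    v1 = sum(b * xi for b, xi in zip(beta1, x))
    wins = sum(1 for _ in range(n) if v1 + gumbel(rng) > v0 + gumbel(rng))
    return wins / n

# Hypothetical coefficients for the two choices; x[0] = 1 is the intercept term.
beta0 = [0.5, -0.2]
beta1 = [0.1, 0.4]
x = [1.0, 1.5]
beta = [b1 - b0 for b1, b0 in zip(beta1, beta0)]  # beta = beta1 - beta0

simulated = simulate_choice_prob(beta0, beta1, x)
exact = logistic(sum(b * xi for b, xi in zip(beta, x)))
print(f"simulated={simulated:.4f} exact={exact:.4f}")
```

With 200,000 draws the Monte Carlo error is roughly 0.001, so the two numbers should agree to about two decimal places.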

changes the utility of a given choice. A voter might expect that the right-of-center party would lower taxes, especially on rich people. This would give low-income people no benefit, i.e. no change in utility (since they usually don't pay taxes); would cause moderate benefit (i.e. somewhat more money, or moderate utility increase) for middle-income people; and would cause significant benefits for high-income people. On the other hand, the left-of-center party might be expected to raise taxes and offset it with increased welfare and other assistance for the lower and middle classes. This would cause significant positive benefit to low-income people, perhaps a weak benefit to middle-income people, and a significant loss of utility for high-income people. Finally, the secessionist party would take no direct actions on the economy, but simply secede. A low-income or middle-income voter might expect basically no clear utility gain or loss from this, but a high-income voter might expect negative utility, since they are likely to own companies, which will have a harder time doing business in such an environment and will probably lose money.

$$
\begin{aligned}
\Pr(Y_i=1) &= \frac{e^{(\boldsymbol{\beta}_1+\mathbf{C})\cdot\mathbf{X}_i}}{e^{(\boldsymbol{\beta}_0+\mathbf{C})\cdot\mathbf{X}_i}+e^{(\boldsymbol{\beta}_1+\mathbf{C})\cdot\mathbf{X}_i}}\\
&= \frac{e^{\boldsymbol{\beta}_1\cdot\mathbf{X}_i}e^{\mathbf{C}\cdot\mathbf{X}_i}}{e^{\boldsymbol{\beta}_0\cdot\mathbf{X}_i}e^{\mathbf{C}\cdot\mathbf{X}_i}+e^{\boldsymbol{\beta}_1\cdot\mathbf{X}_i}e^{\mathbf{C}\cdot\mathbf{X}_i}}\\
&= \frac{e^{\mathbf{C}\cdot\mathbf{X}_i}e^{\boldsymbol{\beta}_1\cdot\mathbf{X}_i}}{e^{\mathbf{C}\cdot\mathbf{X}_i}\left(e^{\boldsymbol{\beta}_0\cdot\mathbf{X}_i}+e^{\boldsymbol{\beta}_1\cdot\mathbf{X}_i}\right)}\\
&= \frac{e^{\boldsymbol{\beta}_1\cdot\mathbf{X}_i}}{e^{\boldsymbol{\beta}_0\cdot\mathbf{X}_i}+e^{\boldsymbol{\beta}_1\cdot\mathbf{X}_i}}.
\end{aligned}
$$
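
A small numerical sketch of this invariance, with hypothetical coefficient vectors β0, β1, an arbitrary shift vector C and one covariate vector: adding C to both coefficient sets leaves both probabilities unchanged, and Pr(Y=1) equals the logistic function of (β1 − β0)·X. The helper names `dot` and `two_way_probs` are illustrative only.

```python
import math

def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))

def two_way_probs(beta0, beta1, x):
    """Return (Pr(Y=0), Pr(Y=1)) for the two-way log-linear form."""
    s0, s1 = dot(beta0, x), dot(beta1, x)
    m = max(s0, s1)  # subtracting the max is itself a shift by a constant, so it is harmless
    e0, e1 = math.exp(s0 - m), math.exp(s1 - m)
    z = e0 + e1      # normalizer (up to the common factor e^m)
    return e0 / z, e1 / z

# Hypothetical coefficients; x[0] = 1 is the intercept term.
beta0 = [0.3, -1.0]
beta1 = [-0.7, 2.0]
C = [5.0, -3.0]      # arbitrary vector added to both coefficient sets
x = [1.0, 0.8]

p_original = two_way_probs(beta0, beta1, x)
p_shifted = two_way_probs([b + c for b, c in zip(beta0, C)],
                          [b + c for b, c in zip(beta1, C)], x)
print(p_original, p_shifted)
```

The max-subtraction trick used for numerical stability is a direct application of the same identity, with the shift chosen so that no exponent is positive.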

a single degree of freedom. If the predictor model has significantly smaller deviance (cf. chi-square using the difference in degrees of freedom of the two models), then one can conclude that there is a significant association between the "predictor" and the outcome. Although some common statistical packages (e.g. SPSS) do provide likelihood ratio test statistics, without this computationally intensive test it would be more difficult to assess the contribution of individual predictors in the multiple logistic regression case. To assess the contribution of individual predictors, one can enter the predictors hierarchically, comparing each new model with the previous one to determine the contribution of each predictor. There is some debate among statisticians about the appropriateness of so-called "stepwise" procedures. The fear is that they may not preserve nominal statistical properties and may become misleading.
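
The comparison of nested models can be sketched as follows. The helpers `chi2_sf` and `likelihood_ratio_test` are hypothetical names; `chi2_sf` uses the closed forms of the chi-square upper tail for a positive integer number of degrees of freedom, so only the standard library is needed. The two log-likelihood values are made up for illustration.

```python
import math

def chi2_sf(x: float, k: int) -> float:
    """Upper-tail probability Pr(chi^2_k > x) for a positive integer k."""
    if x <= 0:
        return 1.0
    y = x / 2.0
    if k % 2 == 0:
        # Even k: e^{-y} * sum_{j < k/2} y^j / j!
        return math.exp(-y) * sum(y ** j / math.factorial(j) for j in range(k // 2))
    # Odd k: start from the 1-df tail erfc(sqrt(y)) and add the recurrence terms.
    total = math.erfc(math.sqrt(y))
    for j in range(1, (k - 1) // 2 + 1):
        total += math.exp(-y) * y ** (j - 0.5) / math.gamma(j + 0.5)
    return total

def likelihood_ratio_test(loglik_reduced, loglik_full, df_diff):
    """Deviance difference of two nested models and its chi-square p-value."""
    stat = -2.0 * (loglik_reduced - loglik_full)  # equals D_reduced - D_full
    return stat, chi2_sf(stat, df_diff)

# Hypothetical maximized log-likelihoods; the full model adds two predictors.
stat, p = likelihood_ratio_test(loglik_reduced=-120.4, loglik_full=-114.1, df_diff=2)
print(f"G^2 = {stat:.2f}, p = {p:.4f}")
```

Entering predictors hierarchically amounts to calling `likelihood_ratio_test` once per step, with `df_diff` equal to the number of parameters added at that step.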

probability value ranging between 0 and 1. This probability indicates the likelihood that a given input corresponds to one of two predefined categories. The essential mechanism of logistic regression is grounded in the logistic function's ability to model the probability of binary outcomes accurately. With its distinctive S-shaped curve, the logistic function effectively maps any real-valued number to a value within the 0 to 1 interval. This feature renders it particularly suitable for binary classification tasks, such as sorting emails into "spam" or "not spam". By calculating the probability that the dependent variable will be categorized into a specific group, logistic regression provides a probabilistic framework that supports informed decision-making.
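
A minimal sketch of the logistic (sigmoid) function. The branch on the sign of t is one common way to avoid overflow in `math.exp` for large negative inputs. Mathematically the output is always strictly between 0 and 1, but in floating point it saturates to exactly 0.0 or 1.0 for very large |t|.

```python
import math

def sigmoid(t: float) -> float:
    """Logistic function 1 / (1 + e^{-t}), evaluated without overflow."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    # For negative t use the equivalent form e^t / (1 + e^t),
    # so exp never receives a large positive argument.
    e = math.exp(t)
    return e / (1.0 + e)

for t in (-1000.0, -2.0, 0.0, 2.0, 1000.0):
    print(t, sigmoid(t))
```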

$$
\begin{aligned}
D_{\text{null}}-D_{\text{fitted}} &= -2\left(\ln\frac{\text{likelihood of null model}}{\text{likelihood of the saturated model}}-\ln\frac{\text{likelihood of fitted model}}{\text{likelihood of the saturated model}}\right)\\
&= -2\ln\frac{\left(\dfrac{\text{likelihood of null model}}{\text{likelihood of the saturated model}}\right)}{\left(\dfrac{\text{likelihood of fitted model}}{\text{likelihood of the saturated model}}\right)}\\
&= -2\ln\frac{\text{likelihood of the null model}}{\text{likelihood of fitted model}}.
\end{aligned}
$$

$$
\begin{aligned}
\Pr(Y_i=1\mid\mathbf{X}_i) &= \Pr(Y_i^{\ast}>0\mid\mathbf{X}_i)\\
&= \Pr(\boldsymbol{\beta}\cdot\mathbf{X}_i+\varepsilon_i>0)\\
&= \Pr(\varepsilon_i>-\boldsymbol{\beta}\cdot\mathbf{X}_i)\\
&= \Pr(\varepsilon_i<\boldsymbol{\beta}\cdot\mathbf{X}_i) && \text{(because the logistic distribution is symmetric)}\\
&= \operatorname{logit}^{-1}(\boldsymbol{\beta}\cdot\mathbf{X}_i)\\
&= p_i && \text{(see above)}
\end{aligned}
$$
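
A Monte Carlo sketch of this latent-variable derivation, assuming a standard logistic error drawn by inverse-CDF sampling. The value used for β·X_i is hypothetical. The share of draws with a positive latent variable should approach the inverse logit of β·X_i, and the final line checks the symmetry step Pr(ε > −t) = Pr(ε < t) directly.

```python
import math
import random

def logistic_cdf(t: float) -> float:
    return 1.0 / (1.0 + math.exp(-t))

def sample_logistic(rng: random.Random) -> float:
    """Standard logistic draw via the inverse CDF: ln(u / (1 - u))."""
    u = rng.random()
    while u == 0.0:  # random() can return 0.0 but never 1.0
        u = rng.random()
    return math.log(u / (1.0 - u))

rng = random.Random(1)
score = -0.8  # hypothetical value of beta . X_i
n = 200_000
# Y_i = 1 exactly when the latent Y_i* = beta . X_i + eps_i is positive.
share_positive = sum(1 for _ in range(n) if score + sample_logistic(rng) > 0) / n

# Symmetry: Pr(eps > -t) = 1 - F(-t) = F(t)
print(share_positive, 1.0 - logistic_cdf(-score), logistic_cdf(score))
```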

Thus, although the observed dependent variable in binary logistic regression is a 0-or-1 variable, the logistic regression estimates the odds, as a continuous variable, that the dependent variable is a 'success'. In some applications, the odds are all that is needed. In others, a specific yes-or-no prediction is needed for whether the dependent variable is or is not a 'success'; this categorical prediction can be based on the computed odds of success, with predicted odds above some chosen cutoff value being translated into a prediction of success.
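
The cutoff rule can be sketched as follows. The fitted probabilities are hypothetical, and `predict_success` is an illustrative helper. An odds cutoff of 1 corresponds to a probability cutoff of 0.5, while a larger cutoff makes the classifier more reluctant to predict success.

```python
def odds(p: float) -> float:
    """Odds of success p / (1 - p), for 0 <= p < 1."""
    return p / (1.0 - p)

def predict_success(p: float, odds_cutoff: float = 1.0) -> bool:
    """Predict 'success' when the estimated odds exceed the chosen cutoff."""
    return odds(p) > odds_cutoff

# Hypothetical fitted probabilities for four cases.
probs = [0.10, 0.45, 0.55, 0.90]
print([round(odds(p), 3) for p in probs])
print([predict_success(p) for p in probs])
print([predict_success(p, odds_cutoff=3.0) for p in probs])  # a stricter cutoff
```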

$$
\begin{aligned}
&\lim_{N\to+\infty} N^{-1}\sum_{i=1}^{N}\log\Pr(y_i\mid x_i;\theta) = \sum_{x\in\mathcal{X}}\sum_{y\in\mathcal{Y}}\Pr(X=x,Y=y)\log\Pr(Y=y\mid X=x;\theta)\\
={}&\sum_{x\in\mathcal{X}}\sum_{y\in\mathcal{Y}}\Pr(X=x,Y=y)\left(-\log\frac{\Pr(Y=y\mid X=x)}{\Pr(Y=y\mid X=x;\theta)}+\log\Pr(Y=y\mid X=x)\right)\\
={}&-D_{\text{KL}}(Y\parallel Y_\theta)-H(Y\mid X)
\end{aligned}
$$
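
The decomposition can be verified on a small discrete example. The joint distribution Pr(X=x, Y=y) and the model Pr(Y=y | X=x; θ) below are hypothetical tables over x, y in {0, 1}. The expected log-likelihood should equal minus the (conditional) KL divergence minus the conditional entropy.

```python
import math

# Hypothetical joint distribution Pr(X=x, Y=y), keyed by (x, y).
joint = {(0, 0): 0.30, (0, 1): 0.20, (1, 0): 0.10, (1, 1): 0.40}
# Hypothetical model Pr(Y=y | X=x; theta), keyed by (x, y).
model = {(0, 0): 0.70, (0, 1): 0.30, (1, 0): 0.25, (1, 1): 0.75}

p_x = {x: sum(p for (xx, _), p in joint.items() if xx == x) for x in (0, 1)}
true_cond = {(x, y): p / p_x[x] for (x, y), p in joint.items()}  # Pr(Y=y | X=x)

# Limit of the average log-likelihood: sum_{x,y} Pr(x, y) log Pr(y | x; theta)
expected_loglik = sum(p * math.log(model[k]) for k, p in joint.items())
# D_KL(Y || Y_theta), weighted by Pr(x, y)
kl = sum(p * math.log(true_cond[k] / model[k]) for k, p in joint.items())
# Conditional entropy H(Y | X)
h_cond = -sum(p * math.log(true_cond[k]) for k, p in joint.items())

print(expected_loglik, -kl - h_cond)
```

Since H(Y | X) does not depend on θ, maximizing the expected log-likelihood is the same as minimizing the KL term.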

rather than a continuous outcome. Given this difference, the assumptions of linear regression are violated. In particular, the residuals cannot be normally distributed. In addition, linear regression may make nonsensical predictions for a binary dependent variable. What is needed is a way to convert a binary variable into a continuous one that can take on any real value (negative or positive). To do that, binomial logistic regression first calculates the

The Lagrangian is equal to the entropy plus the sum of the products of Lagrange multipliers times various constraint expressions. The general multinomial case will be considered, since the proof is not made much simpler by considering simpler cases. Equating the derivative of the Lagrangian with respect to the various probabilities to zero yields a functional form for those probabilities which corresponds to those used in logistic regression.

$$
\Pr(Y_i=y\mid\mathbf{X}_i) = p_i^{\,y}(1-p_i)^{1-y} = \left(\frac{e^{\boldsymbol{\beta}\cdot\mathbf{X}_i}}{1+e^{\boldsymbol{\beta}\cdot\mathbf{X}_i}}\right)^{y}\left(1-\frac{e^{\boldsymbol{\beta}\cdot\mathbf{X}_i}}{1+e^{\boldsymbol{\beta}\cdot\mathbf{X}_i}}\right)^{1-y} = \frac{e^{(\boldsymbol{\beta}\cdot\mathbf{X}_i)\,y}}{1+e^{\boldsymbol{\beta}\cdot\mathbf{X}_i}}
$$
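
A short check that the two forms agree for y = 0 and y = 1, writing t for β·X_i. The values of t are hypothetical and the function names are illustrative.

```python
import math

def bernoulli_prob(y: int, t: float) -> float:
    """Pr(Y=y | X) as p^y (1-p)^(1-y), with p = e^t / (1 + e^t) and t = beta . X."""
    p = math.exp(t) / (1.0 + math.exp(t))
    return p ** y * (1.0 - p) ** (1 - y)

def bernoulli_prob_compact(y: int, t: float) -> float:
    """The same probability in the closed form e^(t*y) / (1 + e^t)."""
    return math.exp(t * y) / (1.0 + math.exp(t))

for t in (-1.5, 0.0, 2.3):  # hypothetical values of beta . X_i
    for y in (0, 1):
        print(t, y, bernoulli_prob(y, t), bernoulli_prob_compact(y, t))
```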

a model with at least one predictor and the saturated model. In this respect, the null model provides a baseline upon which to compare predictor models. Given that deviance is a measure of the difference between a given model and the saturated model, smaller values indicate better fit. Thus, to assess the contribution of a predictor or set of predictors, one can subtract the model deviance from the null deviance and assess the difference on a

meaning the actual outcome is "more surprising". Since the value of the logistic function is always strictly between zero and one, the log loss is always greater than zero and less than infinity. Unlike in a linear regression, where the model can have zero loss at a point by passing through a data point (and zero loss overall if all points are on a line), in a logistic regression it is not possible to have zero loss at any points, since

$$
\begin{aligned}
\Pr(Y_i=0) &= \frac{e^{\boldsymbol{\beta}_0\cdot\mathbf{X}_i}}{e^{\boldsymbol{\beta}_0\cdot\mathbf{X}_i}+e^{\boldsymbol{\beta}_1\cdot\mathbf{X}_i}}\\
\Pr(Y_i=1) &= \frac{e^{\boldsymbol{\beta}_1\cdot\mathbf{X}_i}}{e^{\boldsymbol{\beta}_0\cdot\mathbf{X}_i}+e^{\boldsymbol{\beta}_1\cdot\mathbf{X}_i}}.
\end{aligned}
$$

subjectively) regard confidence interval coverage less than 93 percent, type I error greater than 7 percent, or relative bias greater than 15 percent as problematic, our results indicate that problems are fairly frequent with 2–4 EPV, uncommon with 5–9 EPV, and still observed with 10–16 EPV. The worst instances of each problem were not severe with 5–9 EPV and usually comparable to those with 10–16 EPV".

increases, becoming exactly chi-square distributed in the limit of an infinite number of data points. As in the case of linear regression, we may use this fact to estimate the probability that a random set of data points will give a better fit than the fit obtained by the proposed model, and so obtain an estimate of how significantly the model is improved by including the

$$
\begin{aligned}
Y_i\mid x_{1,i},\ldots,x_{m,i} &\sim \operatorname{Bernoulli}(p_i)\\
\mathbb{E}[Y_i\mid x_{1,i},\ldots,x_{m,i}] &= p_i\\
\Pr(Y_i=y\mid x_{1,i},\ldots,x_{m,i}) &= \begin{cases} p_i & \text{if } y=1\\ 1-p_i & \text{if } y=0\end{cases}\\
\Pr(Y_i=y\mid x_{1,i},\ldots,x_{m,i}) &= p_i^{\,y}(1-p_i)^{(1-y)}
\end{aligned}
$$

healthy people in order to obtain data for only a few diseased individuals. Thus, we may evaluate more diseased individuals, perhaps all of the rare outcomes. This is also called retrospective sampling or, equivalently, unbalanced data. As a rule of thumb, sampling controls at a rate of five times the number of cases will produce sufficient control data.

Smaller values indicate better fit as the fitted model deviates less from the saturated model. When assessed upon a chi-square distribution, nonsignificant chi-square values indicate very little unexplained variance and thus good model fit. Conversely, a significant chi-square value indicates that a significant amount of the variance is unexplained.

The model of logistic regression, however, is based on quite different assumptions (about the relationship between the dependent and independent variables) from those of linear regression. In particular, the key differences between these two models can be seen in the following two features of logistic regression. First, the conditional distribution

surpassed it. This relative popularity was due to the adoption of the logit outside of bioassay, rather than displacing the probit within bioassay, and its informal use in practice; the logit's popularity is credited to the logit model's computational simplicity, mathematical properties, and generality, allowing its use in varied fields.

= 0. This was convenient, but not necessary. Again, the optimum beta coefficients may be found by maximizing the log-likelihood function, generally using numerical methods. A possible method of solution is to set the derivatives of the log-likelihood with respect to each beta coefficient equal to zero and solve for the beta coefficients:

predictors. The model will not converge with zero cell counts for categorical predictors because the natural logarithm of zero is an undefined value so that the final solution to the model cannot be reached. To remedy this problem, researchers may collapse categories in a theoretically meaningful way or add a constant to all cells.

predictor. In logistic regression, however, the regression coefficients represent the change in the logit for each unit change in the predictor. Given that the logit is not intuitive, researchers are likely to focus on a predictor's effect on the exponential function of the regression coefficient – the odds ratio (see

Suppose cases are rare. Then we might wish to sample them more frequently than their prevalence in the population. For example, suppose there is a disease that affects 1 person in 10,000 and to collect our data we need to do a complete physical. It may be too expensive to do thousands of physicals of

Two measures of deviance are particularly important in logistic regression: null deviance and model deviance. The null deviance represents the difference between a model with only the intercept (which means "no predictors") and the saturated model. The model deviance represents the difference between

Others have found results that are not consistent with the above, using different criteria. A useful criterion is whether the fitted model will be expected to achieve the same predictive discrimination in a new sample as it appeared to achieve in the model development sample. For that criterion, 20

Although several statistical packages (e.g., SPSS, SAS) report the Wald statistic to assess the contribution of individual predictors, the Wald statistic has limitations. When the regression coefficient is large, the standard error of the regression coefficient also tends to be larger, increasing the

discussed above to assess model fit is also the recommended procedure to assess the contribution of individual "predictors" to a given model. In the case of a single predictor model, one simply compares the deviance of the predictor model with that of the null model on a chi-square distribution with

In some instances, the model may not reach convergence. Non-convergence of a model indicates that the coefficients are not meaningful because the iterative process was unable to find appropriate solutions. A failure to converge may occur for a number of reasons: having a large ratio of predictors to

After fitting the model, it is likely that researchers will want to examine the contribution of individual predictors. To do so, they will want to examine the regression coefficients. In linear regression, the regression coefficients represent the change in the criterion for each unit change in the

is used in lieu of sum-of-squares calculations. Deviance is analogous to the sum-of-squares calculations in linear regression and is a measure of the lack of fit to the data in a logistic regression model. When a "saturated" model is available (a model with a theoretically perfect fit), deviance

In any fitting procedure, the addition of another fitting parameter to a model (e.g. the beta parameters in a logistic regression model) will almost always improve the ability of the model to predict the measured outcomes. This will be true even if the additional term has no predictive value, since

Sparseness in the data refers to having a large proportion of empty cells (cells with zero counts). Zero cell counts are particularly problematic with categorical predictors. With continuous predictors, the model can infer values for the zero cell counts, but this is not the case with categorical

model start out either by extending the "log-linear" formulation presented here or the two-way latent variable formulation presented above, since both clearly show the way that the model could be extended to multi-way outcomes. In general, the presentation with latent variables is more common in

Separate sets of regression coefficients need to exist for each choice. When phrased in terms of utility, this can be seen very easily. Different choices have different effects on net utility; furthermore, the effects vary in complex ways that depend on the characteristics of each individual, so

It turns out that this model is equivalent to the previous model, although this seems non-obvious, since there are now two sets of regression coefficients and error variables, and the error variables have a different distribution. In fact, this model reduces directly to the previous one with the

illustrates that the probability of the dependent variable equaling a case is equal to the value of the logistic function of the linear regression expression. This is important in that it shows that the value of the linear regression expression can vary from negative to positive infinity and yet,

and following years. The logit model was initially dismissed as inferior to the probit model, but "gradually achieved an equal footing with the probit", particularly between 1960 and 1970. By 1970, the logit model achieved parity with the probit model in use in statistics journals and thereafter

Another numerical problem that may lead to a lack of convergence is complete separation, which refers to the instance in which the predictors perfectly predict the criterion – all cases are accurately classified and the likelihood maximized with infinite coefficients. In such instances, one

Multicollinearity refers to unacceptably high correlations between predictors. As multicollinearity increases, coefficients remain unbiased but standard errors increase and the likelihood of model convergence decreases. To detect multicollinearity amongst the predictors, one can conduct a linear

Logistic regression is unique in that it may be estimated on unbalanced data, rather than randomly sampled data, and still yield correct coefficient estimates of the effects of each independent variable on the outcome. That is to say, if we form a logistic model from such data, if the model is

that results from making each of the choices. We can also interpret the regression coefficients as indicating the strength that the associated factor (i.e. explanatory variable) has in contributing to the utility — or more correctly, the amount by which a unit change in an explanatory variable

The image outlines how an odds ratio can be stated in words, using a template together with the test score example in the "Example" section. In simple terms, if we hypothetically get an odds ratio of 2 to 1, we can say... "For every one-unit increase in hours

participants. However, there is considerable debate about the reliability of this rule, which is based on simulation studies and lacks a secure theoretical underpinning. According to some authors the rule is overly conservative in some circumstances, with the authors stating, "If we (somewhat

The choice of modeling the error variable specifically with a standard logistic distribution, rather than a general logistic distribution with the location and scale set to arbitrary values, seems restrictive, but in fact, it is not. It must be kept in mind that we can choose the regression

and will be maximized by equating the derivatives of the Lagrangian with respect to these probabilities to zero. An important point is that the probabilities are treated equally and the fact that they sum to 1 is part of the Lagrangian formulation, rather than being assumed from the beginning.

tasks, such as identifying whether an email is spam or not and diagnosing diseases by assessing the presence or absence of specific conditions based on patient test results. This approach utilizes the logistic (or sigmoid) function to transform a linear combination of input features into a

to the noise in the data. The question arises as to whether the improvement gained by the addition of another fitting parameter is significant enough to recommend the inclusion of the additional term, or whether the improvement is simply that which may be expected from overfitting.

$$
\Pr(Y_i=y\mid\mathbf{X}_i) = \binom{n_i}{y}p_i^{\,y}(1-p_i)^{n_i-y} = \binom{n_i}{y}\left(\frac{1}{1+e^{-\boldsymbol{\beta}\cdot\mathbf{X}_i}}\right)^{y}\left(1-\frac{1}{1+e^{-\boldsymbol{\beta}\cdot\mathbf{X}_i}}\right)^{n_i-y}.
$$
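
A sketch of this binomial form for a single covariate pattern with n_i trials. The group size and the value of β·X_i are hypothetical. The probabilities over y = 0, …, n_i sum to 1, and for n_i = 1 the expression reduces to the Bernoulli case.

```python
import math

def binomial_logistic_prob(y: int, n: int, t: float) -> float:
    """Pr(Y_i = y | X_i) for n_i = n trials, with p_i = 1 / (1 + e^{-t}) and t = beta . X_i."""
    p = 1.0 / (1.0 + math.exp(-t))
    return math.comb(n, y) * p ** y * (1.0 - p) ** (n - y)

n_trials, t = 5, 0.4  # hypothetical group size and linear predictor
probs = [binomial_logistic_prob(y, n_trials, t) for y in range(n_trials + 1)]
print(probs, sum(probs))
```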

$$
p = \frac{b^{\boldsymbol{\beta}\cdot\boldsymbol{x}}}{1+b^{\boldsymbol{\beta}\cdot\boldsymbol{x}}} = \frac{b^{\beta_0+\beta_1x_1+\beta_2x_2}}{1+b^{\beta_0+\beta_1x_1+\beta_2x_2}} = \frac{1}{1+b^{-(\beta_0+\beta_1x_1+\beta_2x_2)}}
$$
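
Because b^s = e^(s ln b), a model written in base b is the natural-base model with every coefficient multiplied by ln b. The sketch below checks this for base 10 with hypothetical coefficients β0 = −3, β1 = 1, β2 = 2 and hypothetical inputs x1 = 2, x2 = 1, where the exponent is 1 and p = 1/(1 + 10^−1) = 10/11.

```python
import math

def p_base_b(beta, x, b):
    """p = 1 / (1 + b^{-(beta . x)}), with x[0] = 1 for the intercept."""
    s = sum(bi * xi for bi, xi in zip(beta, x))
    return 1.0 / (1.0 + b ** (-s))

def p_base_e(beta, x):
    """The same model written with the natural base e."""
    s = sum(bi * xi for bi, xi in zip(beta, x))
    return 1.0 / (1.0 + math.exp(-s))

beta = [-3.0, 1.0, 2.0]  # hypothetical beta_0, beta_1, beta_2
x = [1.0, 2.0, 1.0]      # intercept term, x_1, x_2
b = 10.0
print(p_base_b(beta, x, b), p_base_e([bi * math.log(b) for bi in beta], x))
```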

serves as a link function between the probability and the linear regression expression. Given that the logit ranges between negative and positive infinity, it provides an adequate criterion upon which to conduct linear regression and the logit is easily converted back into the odds.
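
A minimal sketch of the conversions described above: the logit maps a probability in (0, 1) to the whole real line, exponentiating it gives back the odds, and the logistic function gives back the probability. The function names are illustrative.

```python
import math

def logit(p: float) -> float:
    """Log-odds ln(p / (1 - p)) for 0 < p < 1; ranges over all real numbers."""
    return math.log(p / (1.0 - p))

def odds_from_logit(z: float) -> float:
    """Exponentiating the logit recovers the odds."""
    return math.exp(z)

def prob_from_logit(z: float) -> float:
    """The inverse logit (logistic function) maps log-odds back to a probability."""
    return 1.0 / (1.0 + math.exp(-z))

for p in (0.01, 0.25, 0.5, 0.9):
    z = logit(p)
    print(p, z, odds_from_logit(z), prob_from_logit(z))
```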

response variable, output variable, or class), i.e. it can assume only the two possible values 0 (often meaning "no" or "failure") or 1 (often meaning "yes" or "success"). The goal of logistic regression is to use the dataset to create a predictive model of the outcome variable.

for details. In his earliest paper (1838), Verhulst did not specify how he fit the curves to the data. In his more detailed paper (1845), Verhulst determined the three parameters of the model by making the curve pass through three observed points, which yielded poor predictions.

This model has a separate latent variable and a separate set of regression coefficients for each possible outcome of the dependent variable. The reason for this separation is that it makes it easy to extend logistic regression to multi-outcome categorical variables, as in the

-test in linear regression, is used to assess the significance of coefficients. The Wald statistic is the ratio of the square of the regression coefficient to the square of the standard error of the coefficient and is asymptotically distributed as a chi-square distribution.
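
A minimal sketch of the Wald statistic for one coefficient, using a hypothetical estimate and standard error. For a chi-square distribution with 1 degree of freedom the upper-tail probability is erfc(√(W/2)), which is the same as the two-sided normal p-value for the ratio coefficient/SE.

```python
import math

def wald_test(coef: float, std_err: float):
    """Wald statistic (coef / SE)^2 and its p-value from a chi-square with 1 df."""
    w = (coef / std_err) ** 2
    # Pr(chi^2_1 > w) = erfc(sqrt(w / 2))
    return w, math.erfc(math.sqrt(w / 2.0))

w, p = wald_test(coef=0.85, std_err=0.34)  # hypothetical estimate and standard error
print(f"W = {w:.3f}, p = {p:.4f}")
```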

to assess whether or not the observed event rates match expected event rates in subgroups of the model population. This test is considered to be obsolete by some statisticians because of its dependence on arbitrary binning of predicted probabilities and relatively low power.

$$
\begin{aligned}
D_{\text{null}} &= -2\ln\frac{\text{likelihood of null model}}{\text{likelihood of the saturated model}}\\
D_{\text{fitted}} &= -2\ln\frac{\text{likelihood of fitted model}}{\text{likelihood of the saturated model}}.
\end{aligned}
$$

Disaster planners and engineers rely on these models to predict decisions taken by householders or building occupants in small-scale and large-scale evacuations, such as building fires, wildfires and hurricanes, among others. These models help in the development of reliable

is used to assess goodness of fit as it represents the proportion of variance in the criterion that is explained by the predictors. In logistic regression analysis, there is no agreed upon analogous measure, but there are several competing measures each with limitations.

One can also take semi-parametric or non-parametric approaches, e.g., via local-likelihood or nonparametric quasi-likelihood methods, which avoid assumptions of a parametric form for the index function and are robust to the choice of the link function (e.g., probit or

If the assumptions of linear discriminant analysis hold, the conditioning can be reversed to produce logistic regression. The converse is not true, however, because logistic regression does not require the multivariate normal assumption of discriminant analysis.

Another example might be to predict whether a Nepalese voter will vote Nepali Congress or Communist Party of Nepal or Any Other Party, based on age, income, sex, race, state of residence, votes in previous elections, etc. The technique can also be used in

$$
\mathrm{OR} = \frac{\operatorname{odds}(x+1)}{\operatorname{odds}(x)} = \frac{\left(\frac{p(x+1)}{1-p(x+1)}\right)}{\left(\frac{p(x)}{1-p(x)}\right)} = \frac{e^{\beta_0+\beta_1(x+1)}}{e^{\beta_0+\beta_1x}} = e^{\beta_1}
$$
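
A numerical check of the identity, with hypothetical coefficients β0 = −4 and β1 = 1.5: the ratio of the odds at x + 1 to the odds at x is the same for every x and equals e^β1.

```python
import math

def p_of_x(x, beta0, beta1):
    return 1.0 / (1.0 + math.exp(-(beta0 + beta1 * x)))

def odds(p):
    return p / (1.0 - p)

beta0, beta1 = -4.0, 1.5  # hypothetical coefficients
for x in (0.0, 2.0, 5.0):
    ratio = odds(p_of_x(x + 1, beta0, beta1)) / odds(p_of_x(x, beta0, beta1))
    print(x, ratio, math.exp(beta1))  # the ratio does not depend on x
```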

This leads to the intuition that by maximizing the log-likelihood of a model, you are minimizing the KL divergence of your model from the maximal entropy distribution. Intuitively, this means searching for the model that makes the fewest assumptions in its parameters.

allows these posteriors to be computed using simulation, so lack of conjugacy is not a concern. However, when the sample size or the number of parameters is large, full Bayesian simulation can be slow, and people often use approximate methods such as

which will always be positive or zero. The reason for this choice is that not only is the deviance a good measure of the goodness of fit, it is also approximately chi-squared distributed, with the approximation improving as the number of data points

(it is not a classifier), though it can be used to make a classifier, for instance by choosing a cutoff value and classifying inputs with probability greater than the cutoff as one class, below the cutoff as the other; this is a common way to make a

events per candidate variable may be required. Also, one can argue that 96 observations are needed only to estimate the model's intercept precisely enough that the margin of error in predicted probabilities is ±0.1 with a 0.95 confidence level.
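
The figure of 96 appears to follow from the usual normal-approximation sample size for estimating a single proportion, n = z²·p(1 − p)/E², evaluated at the worst case p = 0.5 with z ≈ 1.96 (95% confidence) and margin E = 0.1. This reading of the argument is an assumption; the sketch only reproduces the arithmetic.

```python
def n_for_proportion(margin: float, z: float = 1.959964, p: float = 0.5) -> float:
    """Observations needed for a normal-approximation confidence interval of a
    proportion to have half-width `margin`; p = 0.5 maximizes p(1 - p)."""
    return z ** 2 * p * (1.0 - p) / margin ** 2

n = n_for_proportion(margin=0.1)
print(n, round(n))
```

The result is about 96.04, which rounds to the 96 observations quoted above.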

in 1925 and has been followed since. Pearl and Reed first applied the model to the population of the United States, and also initially fitted the curve by making it pass through three points; as with Verhulst, this again yielded poor results.

The intuition for transforming using the logit function (the natural log of the odds) was explained above. It also has the practical effect of converting the probability (which is bounded to be between 0 and 1) to a variable that ranges over

When the saturated model is not available (a common case), deviance is calculated simply as −2·(log likelihood of the fitted model), and the reference to the saturated model's log likelihood can be removed from all that follows without harm.
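
A sketch of this calculation on hypothetical 0/1 outcomes and hypothetical fitted probabilities. The null (intercept-only) model predicts the sample proportion of ones for every case, which is its maximum-likelihood fit. For ungrouped 0/1 data, the saturated model reproduces every observation exactly, so its log-likelihood is 0 and −2·log L coincides with the deviance.

```python
import math

def log_likelihood(y, p):
    """Bernoulli log-likelihood: sum_i [y_i ln p_i + (1 - y_i) ln(1 - p_i)]."""
    return sum(yi * math.log(pi) + (1 - yi) * math.log(1.0 - pi) for yi, pi in zip(y, p))

def deviance(y, p):
    """Deviance without a saturated model: -2 * log-likelihood."""
    return -2.0 * log_likelihood(y, p)

y = [0, 0, 1, 0, 1, 1, 1, 0]                          # hypothetical outcomes
p_fitted = [0.2, 0.3, 0.7, 0.4, 0.8, 0.6, 0.9, 0.1]   # hypothetical fitted probabilities
p_null = [sum(y) / len(y)] * len(y)                   # intercept-only model: sample mean

d_null, d_fitted = deviance(y, p_null), deviance(y, p_fitted)
print(d_null, d_fitted, d_null - d_fitted)
```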

Unlike linear regression with normally distributed residuals, it is not possible to find a closed-form expression for the coefficient values that maximize the likelihood function, so an iterative process must be used instead; for example

that is, separate explanatory variables taking the value 0 or 1 are created for each possible value of the discrete variable, with a 1 meaning "variable does have the given value" and a 0 meaning "variable does not have that value".)

Even though income is a continuous variable, its effect on utility is too complex for it to be treated as a single variable. Either it needs to be directly split up into ranges, or higher powers of income need to be added so that

logistic regression makes use of one or more predictor variables that may be either continuous or categorical. Unlike ordinary linear regression, however, logistic regression is used for predicting dependent variables that take

and in this sense is the "simplest" way to convert a real number to a probability. In particular, it maximizes entropy (minimizes added information), and in this sense makes the fewest assumptions of the data being modeled; see

(any real value). The corresponding probability of the value labeled "1" can vary between 0 (certainly the value "0") and 1 (certainly the value "1"), hence the labeling; the function that converts log-odds to probability is the

and the data. Rather than being specific to the assumed multinomial logistic case, it is taken to be a general statement of the condition at which the log-likelihood is maximized and makes no reference to the functional form of

-th measurement. Once the beta coefficients have been estimated from the data, we will be able to estimate the probability that any subsequent set of explanatory variables will result in any of the possible outcome categories.

using logistic regression. Many other medical scales used to assess severity of a patient have been developed using logistic regression. Logistic regression may be used to predict the risk of developing a given disease (e.g.

on the coefficients, but other regularizers are also possible.) Whether or not regularization is used, it is usually not possible to find a closed-form solution; instead, an iterative numerical method must be used, such as

$$
p(\boldsymbol{x}) = \frac{b^{\boldsymbol{\beta}\cdot\boldsymbol{x}}}{1+b^{\boldsymbol{\beta}\cdot\boldsymbol{x}}} = \frac{1}{1+b^{-\boldsymbol{\beta}\cdot\boldsymbol{x}}} = S_b(t)
$$

Yet another formulation combines the two-way latent variable formulation above with the original formulation higher up without latent variables, and in the process provides a link to one of the standard formulations of the

$$
\Pr(Y_i=1) = \frac{e^{\boldsymbol{\beta}_1\cdot\mathbf{X}_i}}{1+e^{\boldsymbol{\beta}_1\cdot\mathbf{X}_i}} = \frac{1}{1+e^{-\boldsymbol{\beta}_1\cdot\mathbf{X}_i}} = p_i
$$

$$
\begin{aligned}
Y_i^{0\ast} &= \boldsymbol{\beta}_0\cdot\mathbf{X}_i+\varepsilon_0\\
Y_i^{1\ast} &= \boldsymbol{\beta}_1\cdot\mathbf{X}_i+\varepsilon_1
\end{aligned}
$$

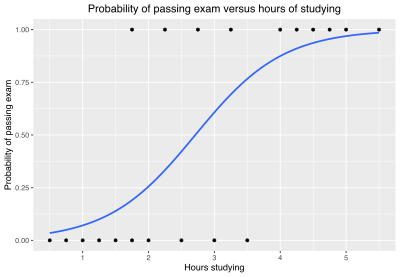

Remark: This model is actually an oversimplification, since it assumes everybody will pass if they learn long enough (limit = 1). The limit value should be a variable parameter too, if you want to make it more realistic.


represents the deviance and ln represents the natural logarithm. The log of this likelihood ratio (the likelihood of the fitted model divided by that of the saturated model) is never positive, because the fitted model cannot fit the data better than the saturated model; hence the need for the negative sign.


for the same reaction, while the supply of one of the reactants is fixed. This naturally gives rise to the logistic equation for the same reason as population growth: the reaction is self-reinforcing but constrained.

{\displaystyle \mathbf {w} _{k+1}=\left(\mathbf {X} ^{T}\mathbf {S} _{k}\mathbf {X} \right)^{-1}\mathbf {X} ^{T}\left(\mathbf {S} _{k}\mathbf {X} \mathbf {w} _{k}+\mathbf {y} -\mathbf {\boldsymbol {\mu }} _{k}\right)}
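A minimal NumPy sketch of this update, assuming the usual definitions that are not restated here: μ_k is the vector of fitted probabilities at iteration k, and S_k is the diagonal matrix with entries μ_k(1 − μ_k). The function name `irls` and the toy data are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def irls(X, y, n_iter=25, tol=1e-10):
    """Fit logistic-regression weights w by iteratively reweighted least squares.

    X: (K, M+1) design matrix whose first column is all ones.
    y: (K,) array of 0/1 outcomes.
    """
    w = np.zeros(X.shape[1])
    for _ in range(n_iter):
        mu = sigmoid(X @ w)                     # fitted probabilities mu_k
        s = mu * (1.0 - mu)                     # diagonal entries of S_k
        XtS = X.T * s                           # X^T S_k without building diag(s)
        rhs = XtS @ (X @ w) + X.T @ (y - mu)    # X^T (S_k X w_k + y - mu_k)
        w_new = np.linalg.solve(XtS @ X, rhs)   # (X^T S_k X)^{-1} rhs
        if np.max(np.abs(w_new - w)) < tol:
            return w_new
        w = w_new
    return w

# Hypothetical toy data (not linearly separable, so the maximum exists).
x = np.array([0.5, 1.0, 1.5, 2.0, 2.5, 3.0, 3.5, 4.0])
y = np.array([0, 0, 1, 0, 1, 0, 1, 1])
X = np.column_stack([np.ones_like(x), x])
w_hat = irls(X, y)
```

Each iteration is a Newton step on the log-likelihood; solving the linear system is generally preferred to forming the inverse explicitly.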


{\displaystyle {\begin{aligned}\Pr(Y_{i}=0)&={\frac {1}{Z}}e^{{\boldsymbol {\beta }}_{0}\cdot \mathbf {X} _{i}}\\\Pr(Y_{i}=1)&={\frac {1}{Z}}e^{{\boldsymbol {\beta }}_{1}\cdot \mathbf {X} _{i}}\end{aligned}}}


{\displaystyle {\begin{aligned}L(\theta \mid y;x)&=\Pr(Y\mid X;\theta )\\&=\prod _{i}\Pr(y_{i}\mid x_{i};\theta )\\&=\prod _{i}h_{\theta }(x_{i})^{y_{i}}(1-h_{\theta }(x_{i}))^{(1-y_{i})}\end{aligned}}}


This process begins with a tentative solution, revises it slightly to see if it can be improved, and repeats this revision until no more improvement is made, at which point the process is said to have converged.


instead of a standard logistic distribution. Both the logistic and normal distributions are symmetric with a basic unimodal, "bell curve" shape. The only difference is that the logistic distribution has somewhat


coefficients ourselves, and very often can use them to offset changes in the parameters of the error variable's distribution. For example, a logistic error-variable distribution with a non-zero location parameter


model. In such a model, it is natural to model each possible outcome using a different set of regression coefficients. It is also possible to motivate each of the separate latent variables as the theoretical


In the above cases of two categories (binomial logistic regression), the categories were indexed by "0" and "1", and we had two probabilities: The probability that the outcome was in category 1 was given by


Example graph of a logistic regression curve fitted to data. The curve shows the estimated probability of passing an exam (binary dependent variable) versus hours studying (scalar independent variable). See


{\displaystyle \operatorname {logit} \left(\operatorname {\mathbb {E} } \left[Y_{i}\mid \mathbf {X} _{i}\right]\right)=\operatorname {logit} (p_{i})=\ln \left({\frac {p_{i}}{1-p_{i}}}\right)={\boldsymbol {\beta }}\cdot \mathbf {X} _{i}\,,}


models, because it both provides a theoretically strong foundation and facilitates intuitions about the model, which in turn makes it easy to consider various sorts of extensions. (See the example below.)


The fourth line is another way of writing the probability mass function, which avoids having to write separate cases and is more convenient for certain types of calculations. This relies on the fact that


This is an example of an SPSS output for a logistic regression model using three explanatory variables (coffee use per week, energy drink use per week, and soda use per week) and two categories (male and


{\displaystyle {\begin{aligned}\ln \Pr(Y_{i}=0)&={\boldsymbol {\beta }}_{0}\cdot \mathbf {X} _{i}-\ln Z\\\ln \Pr(Y_{i}=1)&={\boldsymbol {\beta }}_{1}\cdot \mathbf {X} _{i}-\ln Z\end{aligned}}}


outcomes. It can be shown that the optimized error of any of these fits will never be less than the optimum error of the null model, and that the difference between these minimum errors will follow a


can take only the value 0 or 1. In each case, one of the exponents will be 1, "choosing" the value under it, while the other is 0, "canceling out" the value under it. Hence, the outcome is either

", and the logistic function is the canonical link function), while other sigmoid functions are non-canonical link functions; this underlies its mathematical elegance and ease of optimization. See


If the model deviance is significantly smaller than the null deviance then one can conclude that the predictor or set of predictors significantly improve the model's fit. This is analogous to the


{\displaystyle Y_{i}={\begin{cases}1&{\text{if }}Y_{i}^{\ast }>0\ {\text{ i.e. }}{-\varepsilon _{i}}<{\boldsymbol {\beta }}\cdot \mathbf {X} _{i},\\0&{\text{otherwise.}}\end{cases}}}
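The latent-variable formulation can be checked by simulation. If the errors are drawn from the standard logistic distribution, then the share of draws with a positive latent variable should reproduce the logistic function of the linear predictor t = β · X_i. This is a minimal NumPy sketch; the seed, the sample size, and the values of t are arbitrary choices. Because the standard logistic distribution is symmetric, the condition −ε < t used above is equivalent to t + ε > 0.

```python
import numpy as np

def share_of_ones(t, n=200_000, seed=0):
    """Simulate Y = 1 if t + eps > 0 with eps ~ standard logistic; return the share of ones."""
    rng = np.random.default_rng(seed)
    eps = rng.logistic(loc=0.0, scale=1.0, size=n)   # standard logistic errors
    return float(np.mean(t + eps > 0))                 # latent Y* = t + eps, observed Y = [Y* > 0]

def sigmoid(t):
    return 1.0 / (1.0 + np.exp(-t))

for t in (-1.2, 0.0, 0.8):
    print(t, share_of_ones(t), sigmoid(t))
```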

{\displaystyle \operatorname {logit} (\operatorname {\mathbb {E} } [Y_{i}\mid x_{1,i},\ldots ,x_{m,i}])=\operatorname {logit} (p_{i})=\ln \left({\frac {p_{i}}{1-p_{i}}}\right)=\beta _{0}+\beta _{1}x_{1,i}+\cdots +\beta _{m}x_{m,i}}


Binary variables are widely used in statistics to model the probability of a certain class or event taking place, such as the probability of a team winning, of a patient being healthy, etc. (see


Biondo, S.; Ramos, E.; Deiros, M.; Ragué, J. M.; De Oca, J.; Moreno, P.; Farran, L.; Jaurrieta, E. (2000). "Prognostic factors for mortality in left colonic peritonitis: A new scoring system".


test. In logistic regression, there are several different tests designed to assess the significance of an individual predictor, most notably the likelihood ratio test and the Wald statistic.


and the regression coefficients are unobserved, and the means of determining them is not part of the model itself. They are typically determined by some sort of optimization procedure, e.g.


Marshall, J. C.; Cook, D. J.; Christou, N. V.; Bernard, G. R.; Sprung, C. L.; Sibbald, W. J. (1995). "Multiple organ dysfunction score: A reliable descriptor of a complex clinical outcome".

{\displaystyle \operatorname {\mathbb {E} } [Y_{i}\mid \mathbf {X} _{i}]=p_{i}=\operatorname {logit} ^{-1}({\boldsymbol {\beta }}\cdot \mathbf {X} _{i})={\frac {1}{1+e^{-{\boldsymbol {\beta }}\cdot \mathbf {X} _{i}}}}}


An intuition for this comes from the fact that, since we choose based on the maximum of two values, only their difference matters, not the exact values — and this effectively removes one


will produce the same probabilities for all possible explanatory variables. In fact, it can be seen that adding any constant vector to both of them will produce the same probabilities:


{\displaystyle \operatorname {logit} (\operatorname {\mathbb {E} } [Y_{i}\mid \mathbf {X} _{i}])=\operatorname {logit} (p_{i})=\ln \left({\frac {p_{i}}{1-p_{i}}}\right)={\boldsymbol {\beta }}\cdot \mathbf {X} _{i}}


, p. 8, "As far as I can see the introduction of the logistics as an alternative to the normal probability function is the work of a single person, Joseph Berkson (1899–1982), ..."


There are various equivalent specifications and interpretations of logistic regression, which fit into different types of more general models, and allow different generalizations.


conditions that seek to exclude unlikely values, e.g. extremely large values for any of the regression coefficients. The use of a regularization condition is equivalent to doing


This simple model is an example of binary logistic regression, and has one explanatory variable and a binary categorical variable which can assume one of two categorical values.

, it can be used to predict the likelihood of a person ending up in the labor force, and a business application would be to predict the likelihood of a homeowner defaulting on a


{\displaystyle p_{n}({\boldsymbol {x}})={\frac {e^{{\boldsymbol {\beta }}_{n}\cdot {\boldsymbol {x}}}}{1+\sum _{u=1}^{N}e^{{\boldsymbol {\beta }}_{u}\cdot {\boldsymbol {x}}}}}}


regression analysis with the predictors of interest for the sole purpose of examining the tolerance statistic used to assess whether multicollinearity is unacceptably high.


A group of 20 students spends between 0 and 6 hours studying for an exam. How does the number of hours spent studying affect the probability of the student passing the exam?


{\displaystyle \mathbf {X} ={\begin{bmatrix}1&x_{1}(1)&x_{2}(1)&\ldots \\1&x_{1}(2)&x_{2}(2)&\ldots \\\vdots &\vdots &\vdots \end{bmatrix}}}


With this choice, the single-layer neural network is identical to the logistic regression model. This function has a continuous derivative, which allows it to be used in


models and makes it easier to extend to certain more complicated models with multiple, correlated choices, as well as to compare logistic regression to the closely related


(In terms of utility theory, a rational actor always picks the option with the greatest associated utility.) This is the approach taken by economists when formulating


", states that logistic regression models give stable values for the explanatory variables if based on a minimum of about 10 events per explanatory variable (EPV); where


Of all the functional forms used for estimating the probabilities of a particular categorical outcome which optimize the fit by maximizing the likelihood function (e.g.


{\displaystyle p_{nk}={\frac {e^{{\boldsymbol {\lambda }}_{n}\cdot {\boldsymbol {x}}_{k}}}{\sum _{u=0}^{N}e^{{\boldsymbol {\lambda }}_{u}\cdot {\boldsymbol {x}}_{k}}}}}


Logistic regression is used in various fields, including machine learning, most medical fields, and social sciences. For example, the Trauma and Injury Severity Score (


calculations – variance in the criterion is essentially divided into variance accounted for by the predictors and residual variance. In logistic regression analysis,

{\displaystyle p_{0}({\boldsymbol {x}})=1-\sum _{n=1}^{N}p_{n}({\boldsymbol {x}})={\frac {1}{1+\sum _{u=1}^{N}e^{{\boldsymbol {\beta }}_{u}\cdot {\boldsymbol {x}}}}}}
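A small NumPy sketch of the pivot formulation, with category 0 as the pivot; the function name and the coefficient values are hypothetical. It also checks the log-odds identity ln(p_n/p_0) = β_n · x. A production version would need to guard against overflow in `np.exp` for large scores.

```python
import numpy as np

def pivot_probs(betas, x):
    """Probabilities [p_0, p_1, ..., p_N] with category 0 as the pivot.

    betas: (N, M+1) array, one coefficient row for each category 1..N.
    x:     (M+1,) explanatory vector (x[0] = 1 for the intercept).
    """
    expo = np.exp(np.asarray(betas) @ np.asarray(x))   # e^{beta_n . x}, n = 1..N
    denom = 1.0 + expo.sum()                            # 1 + sum_u e^{beta_u . x}
    return np.concatenate(([1.0 / denom], expo / denom))

betas = np.array([[0.2, -1.0],     # hypothetical beta_1
                  [-0.5, 0.7]])    # hypothetical beta_2
x = np.array([1.0, 2.0])
p = pivot_probs(betas, x)
log_odds_vs_pivot = np.log(p[1:] / p[0])   # should equal betas @ x
```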


Two separate sets of regression coefficients have been introduced, just as in the two-way latent variable model, and the two equations appear in a form that writes the


The above example of binary logistic regression on one explanatory variable can be generalized to binary logistic regression on any number of explanatory variables

This is because doing an average this way simply computes the proportion of successes seen, which we expect to converge to the underlying probability of success.


The defining characteristic of the logistic model is that increasing one of the independent variables multiplicatively scales the odds of the given outcome at a


A very important point here is that this expression is (remarkably) not an explicit function of the beta coefficients. It is only a function of the probabilities


{\displaystyle \Pr(Y_{i}=c)=\operatorname {softmax} (c,{\boldsymbol {\beta }}_{0}\cdot \mathbf {X} _{i},{\boldsymbol {\beta }}_{1}\cdot \mathbf {X} _{i},\dots ).}
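A minimal NumPy sketch of the softmax form; the names and numbers are hypothetical. It checks two properties discussed in this section. First, fixing β_0 = 0 in the two-class case recovers the logistic function of β_1 · X. Second, adding the same constant vector to every coefficient vector leaves the probabilities unchanged.

```python
import numpy as np

def softmax(scores):
    z = np.asarray(scores, dtype=float)
    z = z - z.max()            # shift for numerical stability; the result is unchanged
    e = np.exp(z)
    return e / e.sum()

def class_probs(betas, x):
    """Pr(Y = c) for each class c, with one coefficient vector per class (rows of betas)."""
    return softmax(np.asarray(betas, dtype=float) @ np.asarray(x, dtype=float))

x = np.array([1.0, 2.5])
beta_1 = np.array([-1.0, 0.6])                  # hypothetical coefficients
two_class = np.vstack([np.zeros(2), beta_1])    # beta_0 fixed at the zero vector
p = class_probs(two_class, x)

# Adding the same vector C to every beta_c leaves the probabilities unchanged.
C = np.array([3.0, -2.0])
p_shifted = class_probs(two_class + C, x)
```

The same invariance is what makes the model overspecified, and why one coefficient vector can be fixed at zero.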


As an example, consider a province-level election where the choice is between a right-of-center party, a left-of-center party, and a secessionist party (e.g. the


, which is a general property of the Bernoulli distribution. In other words, if we run a large number of Bernoulli trials using the same probability of success


The reason for using logistic regression for this problem is that the values of the dependent variable, pass and fail, while represented by "1" and "0", are not


is defined which is a measure of the error between the logistic model fit and the outcome data. In the limit of a large number of data points, the deviance is


dependent variable (with unordered values, also called "classification"). The general case of having dependent variables with more than two values is termed


Le Gall, J. R.; Lemeshow, S.; Saulnier, F. (1993). "A new Simplified Acute Physiology Score (SAPS II) based on a European/North American multicenter study".


{\displaystyle {\begin{aligned}\varepsilon _{0}&\sim \operatorname {EV} _{1}(0,1)\\\varepsilon _{1}&\sim \operatorname {EV} _{1}(0,1)\end{aligned}}}
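The difference of two independent standard type-1 extreme-value (Gumbel) variables follows the standard logistic distribution. This can be checked by simulation. The minimal NumPy sketch below compares the empirical CDF of ε_1 − ε_0 with the logistic CDF at a few points; the seed, sample size, and evaluation grid are arbitrary choices.

```python
import numpy as np

rng = np.random.default_rng(42)
n = 200_000
eps0 = rng.gumbel(loc=0.0, scale=1.0, size=n)   # EV_1(0, 1): standard Gumbel (maximum) variables
eps1 = rng.gumbel(loc=0.0, scale=1.0, size=n)
diff = eps1 - eps0                             # should follow the standard logistic distribution

def logistic_cdf(v):
    return 1.0 / (1.0 + np.exp(-v))

grid = np.array([-3.0, -1.0, 0.0, 1.0, 3.0])
empirical_cdf = np.array([np.mean(diff <= g) for g in grid])
max_gap = float(np.max(np.abs(empirical_cdf - logistic_cdf(grid))))
```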

As noted above, each separate trial has its own probability of success, just as each trial has its own explanatory variables. The probability of success


in the linear regression case, except that the likelihood is maximized rather than minimized. Denote the maximized log-likelihood of the proposed model by


to be defined in terms of the other probabilities is artificial. Any of the probabilities could have been selected to be so defined. This special value of

{\displaystyle \Pr(Y_{i}=c)={\frac {e^{{\boldsymbol {\beta }}_{c}\cdot \mathbf {X} _{i}}}{\sum _{h}e^{{\boldsymbol {\beta }}_{h}\cdot \mathbf {X} _{i}}}}}


than in the former case, for all sets of explanatory variables — but critically, it will always remain on the same side of 0, and hence lead to the same


{\displaystyle {\frac {\partial \ell }{\partial \beta _{nm}}}=0=\sum _{k=1}^{K}\Delta (n,y_{k})x_{mk}-\sum _{k=1}^{K}p_{n}({\boldsymbol {x}}_{k})x_{mk}}


Palei, S. K.; Das, S. K. (2009). "Logistic regression model for prediction of roof fall risks in bord and pillar workings in coal mines: An approach".


which shows that this formulation is indeed equivalent to the previous formulation. (As in the two-way latent variable formulation, any settings where


, that finds values that best fit the observed data (i.e. that give the most accurate predictions for the data already observed), usually subject to

{\displaystyle t_{n}=\ln \left({\frac {p_{n}({\boldsymbol {x}})}{p_{0}({\boldsymbol {x}})}}\right)={\boldsymbol {\beta }}_{n}\cdot {\boldsymbol {x}}}


when there are more than two possible values (e.g. whether an image is of a cat, dog, lion, etc.), and the binary logistic regression generalized to


Kologlu, M.; Elker, D.; Altun, H.; Sayek, I. (2001). "Validation of MPI and PIA II in two different groups of patients with secondary peritonitis".


that is specific to the outcome at hand, but related to the explanatory variables. This can be expressed in any of the following equivalent forms:


(This predicts that the irrelevance of the scale parameter may not carry over into more complex models where more than two choices are available.)

{\displaystyle \ell =\sum _{k:y_{k}=1}\ln(p_{k})+\sum _{k:y_{k}=0}\ln(1-p_{k})=\sum _{k=1}^{K}\left(\,y_{k}\ln(p_{k})+(1-y_{k})\ln(1-p_{k})\right)}
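A sketch of this log-likelihood in NumPy. It uses `np.logaddexp` to evaluate ln p_k and ln(1 − p_k) without overflow for large |β · x_k|. The data and coefficients are hypothetical. Dividing −ℓ by K gives the average log loss.

```python
import numpy as np

def log_likelihood(beta, X, y):
    """ell = sum_k [ y_k ln p_k + (1 - y_k) ln(1 - p_k) ], with p_k = 1/(1 + e^{-beta . x_k})."""
    t = X @ beta
    log_p = -np.logaddexp(0.0, -t)      # ln p_k = -ln(1 + e^{-t_k})
    log_1mp = -np.logaddexp(0.0, t)     # ln(1 - p_k) = -ln(1 + e^{t_k})
    return float(np.sum(y * log_p + (1 - y) * log_1mp))

X = np.array([[1.0, 0.5], [1.0, 1.5], [1.0, 3.0]])   # hypothetical data, first column = 1
y = np.array([0, 1, 1])
beta = np.array([-1.0, 0.8])
ell = log_likelihood(beta, X, y)
mean_log_loss = -ell / len(y)   # average log loss = negative mean log-likelihood
```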


{\displaystyle {\widehat {\beta }}_{0}^{*}={\widehat {\beta }}_{0}+\log {\frac {\pi }{1-\pi }}-\log {{\tilde {\pi }} \over {1-{\tilde {\pi }}}}}
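The correction is simple arithmetic on the log-odds scale. This sketch assumes the usual case-control reading of the symbols: π is the prevalence of the outcome in the population and π̃ is its prevalence in the sample. The function names and example values are hypothetical, and the logarithm is taken to be natural.

```python
import math

def logit(q: float) -> float:
    return math.log(q / (1.0 - q))

def corrected_intercept(beta0_hat: float, pi: float, pi_tilde: float) -> float:
    """beta0* = beta0_hat + logit(pi) - logit(pi_tilde); the other coefficients are left as fitted."""
    return beta0_hat + logit(pi) - logit(pi_tilde)

# Hypothetical case-control sample: half the sample are cases, population prevalence is 1%.
beta0_star = corrected_intercept(0.3, pi=0.01, pi_tilde=0.5)   # 0.3 + ln(1/99), about -4.295
```

When the sample prevalence already matches the population prevalence, the correction is zero.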


the parameters of a logistic model (the coefficients in the linear or nonlinear combinations). In binary logistic regression there is a single


Having a large ratio of variables to cases results in an overly conservative Wald statistic (discussed below) and can lead to non-convergence.


As a result, we can simplify matters, and restore identifiability, by picking an arbitrary value for one of the two vectors. We choose to set


parameters will require numerical methods. One useful technique is to equate the derivatives of the log likelihood with respect to each of the


, but they gave him little credit and did not adopt his terminology. Verhulst's priority was acknowledged and the term "logistic" revived by

, because the dependent variable is binary. Second, the predicted values are probabilities and are therefore restricted to (0,1) through the

Then when this is used in the equation relating the log odds of a success to the values of the predictors, the linear regression will be a


which give the "best fit" to the data. In the case of linear regression, the sum of the squared deviations of the fit from the data points (

{\displaystyle {\frac {\partial {\mathcal {L}}}{\partial p_{n'k'}}}=0=-\ln(p_{n'k'})-1+\sum _{m=0}^{M}(\lambda _{n'm}x_{mk'})-\alpha _{k'}}


{\displaystyle \ell =\sum _{k=1}^{K}y_{k}\log _{b}(p({\boldsymbol {x_{k}}}))+\sum _{k=1}^{K}(1-y_{k})\log _{b}(1-p({\boldsymbol {x_{k}}}))}


by fitting a linear predictor function of the above form to some sort of arbitrary transformation of the expected value of the variable.


As a simple example, we can use a logistic regression with one explanatory variable and two categories to answer the following question:


{\displaystyle {\frac {\partial \ell }{\partial \beta _{m}}}=0=\sum _{k=1}^{K}y_{k}x_{mk}-\sum _{k=1}^{K}p({\boldsymbol {x}}_{k})x_{mk}}
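These score equations generally have no closed-form solution. As one illustration (not necessarily the method any particular package uses), the sketch below runs plain gradient ascent on the average log-likelihood until the left-hand sides are numerically zero. In vector form, the left-hand side is X^T(y − p). The step size, iteration count, and toy data are hypothetical choices.

```python
import numpy as np

def score(beta, X, y):
    """Vector of d ell / d beta_m = sum_k y_k x_mk - sum_k p(x_k) x_mk, i.e. X^T (y - p)."""
    p = 1.0 / (1.0 + np.exp(-(X @ beta)))
    return X.T @ (y - p)

def fit_by_gradient_ascent(X, y, lr=0.1, n_iter=20_000):
    """Plain gradient ascent on the average log-likelihood (hypothetical step size and iteration count)."""
    beta = np.zeros(X.shape[1])
    for _ in range(n_iter):
        beta += lr * score(beta, X, y) / len(y)
    return beta

x = np.array([0.5, 1.0, 1.5, 2.0, 2.5, 3.0, 3.5, 4.0])
y = np.array([0, 0, 1, 0, 1, 0, 1, 1])
X = np.column_stack([np.ones_like(x), x])
beta_hat = fit_by_gradient_ascent(X, y)
```

Gradient ascent is simple but slow. Newton-type methods, such as iteratively reweighted least squares, typically reach the same solution in a handful of iterations.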


for further extensions. The logistic regression model itself simply models probability of output in terms of input and does not perform


Gourieroux, Christian; Monfort, Alain (1981). "Asymptotic Properties of the Maximum Likelihood Estimator in Dichotomous Logit Models".


Although the dependent variable in logistic regression is Bernoulli, the logit is on an unrestricted scale. The logit function is the


Assuming the multinomial logistic function, the derivative of the log-likelihood with respect to the beta coefficients was found to be:

, and then fitted using the proposed model. Specifically, we can consider the fits of the proposed model to every permutation of the

{\displaystyle g(p(x))=\sigma ^{-1}(p(x))=\operatorname {logit} p(x)=\ln \left({\frac {p(x)}{1-p(x)}}\right)=\beta _{0}+\beta _{1}x,}

{\displaystyle {\mathcal {L}}_{fit}=\sum _{n=0}^{N}\sum _{m=0}^{M}\lambda _{nm}\sum _{k=1}^{K}(p_{nk}x_{mk}-\Delta (n,y_{k})x_{mk})}


Alternatively, when assessing the contribution of individual predictors in a given model, one may examine the significance of the

{\displaystyle D=\ln \left({\frac {{\hat {L}}^{2}}{{\hat {L}}_{\varphi }^{2}}}\right)=2({\hat {\ell }}-{\hat {\ell }}_{\varphi })}


Estimated strength of regression coefficient for different outcomes (party choices) and different values of explanatory variables

Another critical fact is that the difference of two type-1 extreme-value-distributed variables is a logistic distribution, i.e.


However, the development of the logistic model as a general alternative to the probit model was principally due to the work of

, which led to its use in modern statistics. They were initially unaware of Verhulst's work and presumably learned about it from


and it can be shown that the maximum log-likelihood of these permutation fits will never be smaller than that of the null model:


The sum of these probabilities equals 1, which must be true, since "0" and "1" are the only possible categories in this setup.


is the generalization of binary logistic regression to include any number of explanatory variables and any number of categories.

{\displaystyle \ell _{k}={\begin{cases}-\ln p_{k}&{\text{ if }}y_{k}=1,\\-\ln(1-p_{k})&{\text{ if }}y_{k}=0.\end{cases}}}


) are expressed in terms of the pivot probability and are again expressed as a linear combination of the explanatory variables:


and interpreting odds of alternatives as relative preferences; this gave a theoretical foundation for the logistic regression.


is the probability that the dependent variable equals a case, given some linear combination of the predictors. The formula for


{\displaystyle Y_{i}={\begin{cases}1&{\text{if }}Y_{i}^{1\ast }>Y_{i}^{0\ast },\\0&{\text{otherwise.}}\end{cases}}}


of distributions maximizes entropy, given an expected value. In the case of the logistic model, the logistic function is the


variable and data in the proposed model is a very significant improvement over the null model. In other words, we reject the


measurements or data points will be generated by the above probabilities can now be calculated. Indexing each measurement by


for discussion. The logistic regression as a general statistical model was originally developed and popularized primarily by


Studies in History and Philosophy of Science Part C: Studies in History and Philosophy of Biological and Biomedical Sciences

{\displaystyle {\hat {\ell }}_{\varphi }=K(\,{\overline {y}}\ln({\overline {y}})+(1-{\overline {y}})\ln(1-{\overline {y}}))}
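A sketch of the null-model log-likelihood, assuming ȳ is the observed proportion of ones and 0 < ȳ < 1. It compares the closed form with a direct sum at p_φ = ȳ, and computes the deviance D = 2(ℓ̂ − ℓ̂_φ) from a fitted model's maximized log-likelihood. The function names and data are hypothetical.

```python
import numpy as np

def null_log_likelihood(y):
    """Closed form: K * (ybar ln ybar + (1 - ybar) ln(1 - ybar)), valid for 0 < ybar < 1."""
    y = np.asarray(y, dtype=float)
    K, ybar = len(y), y.mean()
    return K * (ybar * np.log(ybar) + (1 - ybar) * np.log(1 - ybar))

def null_log_likelihood_direct(y):
    """Direct sum of y_k ln p_phi + (1 - y_k) ln(1 - p_phi) with p_phi = ybar."""
    y = np.asarray(y, dtype=float)
    p_phi = y.mean()
    return np.sum(y * np.log(p_phi) + (1 - y) * np.log(1 - p_phi))

def deviance_vs_null(ll_fit, y):
    """D = 2 (ell_hat - ell_hat_phi), given the maximized log-likelihood of the proposed model."""
    return 2.0 * (ll_fit - null_log_likelihood(y))

y = [0, 0, 1, 0, 1, 0, 1, 1]   # hypothetical outcomes, ybar = 0.5
```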


is the number of successes observed (the sum of the individual Bernoulli-distributed random variables), and hence follows a


there need to be separate sets of coefficients for each characteristic, not simply a single extra per-choice characteristic.

{\displaystyle \beta _{0}+\beta _{1}x_{1}+\beta _{2}x_{2}+\cdots +\beta _{m}x_{m}=\beta _{0}+\sum _{i=1}^{m}\beta _{i}x_{i}}


Walker, SH; Duncan, DB (1967). "Estimation of the probability of an event as a function of several independent variables".

{\displaystyle Z=e^{{\boldsymbol {\beta }}_{0}\cdot \mathbf {X} _{i}}+e^{{\boldsymbol {\beta }}_{1}\cdot \mathbf {X} _{i}}}


of the predictors) is equivalent to the exponential function of the linear regression expression. This illustrates how the


M. Strano; B.M. Colosimo (2006). "Logistic regression analysis for experimental determination of forming limit diagrams".


Truett, J; Cornfield, J; Kannel, W (1967). "A multivariate analysis of the risk of coronary heart disease in Framingham".


For the simple model of student test scores described above, the maximum value of the log-likelihood of the null model is


instead of the logistic function (to convert the linear combination to a probability) can also be used, most notably the


Van Smeden, M.; De Groot, J. A.; Moons, K. G.; Collins, G. S.; Altman, D. G.; Eijkemans, M. J.; Reitsma, J. B. (2016).

The probit model influenced the subsequent development of the logit model and these models competed with each other.

Since this has no direct analog in logistic regression, various methods including the following can be used instead.

, with degrees of freedom equal to those of the proposed model minus those of the null model which, in this case, will be


as the categorical outcome of that measurement, the log likelihood may be written in a form very similar to the simple


to create a continuous criterion as a transformed version of the dependent variable. The logarithm of the odds is the

Logistic regression by MLE plays a similarly basic role for binary or categorical responses as linear regression by


Linear regression and logistic regression have many similarities. For example, in simple linear regression, a set of


In machine learning applications where logistic regression is used for binary classification, the MLE minimises the


rate, with each independent variable having its own parameter; for a binary dependent variable this generalizes the


{\displaystyle {\frac {\partial \ell }{\partial \beta _{nm}}}=\sum _{k=1}^{K}(\Delta (n,y_{k})x_{mk}-p_{nk}x_{mk})}


coefficients may be entered into the logistic regression equation to estimate the probability of passing the exam.


{\displaystyle D=-2\ln {\frac {\text{likelihood of the fitted model}}{\text{likelihood of the saturated model}}}.}


denotes the cases belonging to the less frequent category in the dependent variable. Thus a study designed to use


i.e. the latent variable can be written directly in terms of the linear predictor function and an additive random


seems fairly arbitrary, but it makes the mathematics work out, and it may be possible to justify its use through


, the output indicates that hours studying is significantly associated with the probability of passing the exam (


If the problem was changed so that pass/fail was replaced with the grade 0–100 (cardinal numbers), then simple


applications such as prediction of a customer's propensity to purchase a product or halt a subscription, etc. In

, especially for predicting the probability of failure of a given process, system or product. It is also used in


{\displaystyle \ell _{\varphi }=\sum _{k=1}^{K}\left(y_{k}\ln(p_{\varphi })+(1-y_{k})\ln(1-p_{\varphi })\right)}


parameters which minimize the sum of the squares of the residuals (the squared error term) for each data point:


Wibbenmeyer, Matthew J.; Hand, Michael S.; Calkin, David E.; Venn, Tyron J.; Thompson, Matthew P. (June 2013).


Tolles, Juliana; Meurer, William J (2016). "Logistic Regression Relating Patient Characteristics to Outcomes".


" consisting of two categories: "pass" or "fail" corresponding to the categorical values 1 and 0 respectively.


Regression Modeling Strategies: With Applications to Linear Models, Logistic Regression, and Survival Analysis


is an extension of multinomial logit that allows for correlations among the choices of the dependent variable.


are parameters of the model. An additional generalization has been introduced in which the base of the model (

{\displaystyle t=\log _{b}{\frac {p}{1-p}}=\beta _{0}+\beta _{1}x_{1}+\beta _{2}x_{2}+\cdots +\beta _{M}x_{M}}


"On the Rate of Growth of the Population of the United States since 1790 and Its Mathematical Representation"


{\displaystyle e^{{\boldsymbol {\beta }}_{0}\cdot \mathbf {X} _{i}}=e^{\mathbf {0} \cdot \mathbf {X} _{i}}=1}


thereby matching the potential range of the linear prediction function on the right side of the equation.

The sum of these probabilities over all categories must equal 1. Using the mathematically convenient base


The table shows the number of hours each student spent studying, and whether they passed (1) or failed (0).


). Equivalently, in the latent variable interpretations of these two methods, the first assumes a standard


In order to prove that this is equivalent to the previous model, note that the above model is overspecified, in that


Log loss is always greater than or equal to 0, equals 0 only in case of a perfect prediction (i.e., when


{\displaystyle \sum _{m=0}^{M}\lambda _{nm}x_{mk}={\boldsymbol {\lambda }}_{n}\cdot {\boldsymbol {x}}_{k}}


{\displaystyle p={\frac {1}{1+b^{-(\beta _{0}+\beta _{1}x_{1}+\beta _{2}x_{2}+\cdots +\beta _{m}x_{m})}}}}


"Risk Preferences in Strategic Wildfire Decision Making: A Choice Experiment with U.S. Wildfire Managers"


outcomes, is the way the probability of a particular outcome is linked to the linear predictor function:


(1966). "Some procedures connected with the logistic qualitative response curve". In F. N. David (ed.).


{\displaystyle \log {\frac {p}{1-p}}=\beta _{0}+\beta _{1}x_{1}+\beta _{2}x_{2}+\cdots +\beta _{m}x_{m}}


itself, which is the probability that the given data set is produced by a particular logistic function:


It turns out that this formulation is exactly equivalent to the preceding one, phrased in terms of the

{\displaystyle \ell =\sum _{k=1}^{K}\sum _{n=0}^{N}\Delta (n,y_{k})\,\ln(p_{n}({\boldsymbol {x}}_{k}))}


"Modern modelling techniques are data hungry: a simulation study for predicting dichotomous endpoints"


values. Again, we can conceptually consider the fit of the proposed model to every permutation of the


{\displaystyle {\frac {\mathrm {d} y}{\mathrm {d} X}}=y(1-y){\frac {\mathrm {d} f}{\mathrm {d} X}}.\,}
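The identity can be checked with a central finite difference. This sketch assumes y = 1/(1 + e^{−f(X)}) with a hypothetical linear inner function f(X) = a + bX, so that df/dX = b. The constants a and b and the step h are arbitrary choices.

```python
import math

def y_of(X, a=-0.5, b=1.3):
    f = a + b * X                     # hypothetical inner function f(X) = a + b X
    return 1.0 / (1.0 + math.exp(-f))

def analytic_derivative(X, a=-0.5, b=1.3):
    y = y_of(X, a, b)
    return y * (1.0 - y) * b          # dy/dX = y (1 - y) df/dX, with df/dX = b

def numeric_derivative(X, h=1e-6, a=-0.5, b=1.3):
    return (y_of(X + h, a, b) - y_of(X - h, a, b)) / (2.0 * h)
```

Because the derivative is expressed through y itself, a backpropagation implementation can reuse the forward-pass output instead of recomputing the exponential.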


of the Bernoulli distribution, specifying the probability of seeing each of the two possible outcomes.


{\displaystyle p_{i}={\frac {1}{1+e^{-(\beta _{0}+\beta _{1}x_{1,i}+\cdots +\beta _{k}x_{k,i})}}}.\,}

and zero otherwise. In the case of two explanatory variables, this indicator function was defined as

parameters to zero, yielding a set of equations which will hold at the maximum of the log-likelihood:

from the linear regression equation (the value of the criterion when the predictor is equal to zero).

This table shows the estimated probability of passing the exam for several values of hours studied.

Similarly, for a student who studies 4 hours, the estimated probability of passing the exam is 0.87:
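
The arithmetic behind these probabilities can be reproduced directly. The sketch assumes the fitted coefficients of the exam example are approximately β0 ≈ -4.1 and β1 ≈ 1.5. These rounded values come from the fuller worked example and do not appear in this excerpt. Probabilities computed from them may therefore differ in the second decimal place from a table built on unrounded estimates.

```python
import math

# Assumed rounded coefficients from the hours-studied exam example.
BETA_0 = -4.1  # intercept
BETA_1 = 1.5   # change in log-odds per hour studied

def pass_probability(hours: float) -> float:
    """Estimated probability of passing: 1 / (1 + exp(-(beta_0 + beta_1 * hours)))."""
    log_odds = BETA_0 + BETA_1 * hours
    return 1.0 / (1.0 + math.exp(-log_odds))

for hours in (1, 2, 3, 4, 5):
    print(hours, round(pass_probability(hours), 2))
```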

28577:{\displaystyle N^{-1}\log L(\theta \mid y;x)=N^{-1}\sum _{i=1}^{N}\log \Pr(y_{i}\mid x_{i};\theta )}

27696:{\displaystyle {\boldsymbol {\beta }}_{n}={\boldsymbol {\lambda }}_{n}-{\boldsymbol {\lambda }}_{0}}
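
Subtracting the pivot outcome's coefficients leaves the predicted probabilities unchanged. This is because the softmax is invariant to adding the same constant to every score. The small check below uses hypothetical numbers standing in for λ_n·x. After pivoting, the pivot's score is 0, so p_0 = 1/(1 + Σ_n e^(β_n·x)).

```python
import math

def softmax(scores):
    """Probabilities p_n = exp(s_n) / sum_m exp(s_m)."""
    m = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical per-outcome scores lambda_n . x for outcomes 0, 1, 2.
lam_dot_x = [0.3, 1.1, -0.4]

# Pivot on outcome 0: beta_n . x = lambda_n . x - lambda_0 . x, so beta_0 . x = 0.
beta_dot_x = [s - lam_dot_x[0] for s in lam_dot_x]

p_lambda = softmax(lam_dot_x)
p_beta = softmax(beta_dot_x)
print(p_lambda, p_beta)
```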

26617:{\displaystyle {\mathcal {L}}_{norm}=\sum _{k=1}^{K}\alpha _{k}\left(1-\sum _{n=0}^{N}p_{nk}\right)}

as a linear predictor, we separate the linear predictor into two, one for each of the two outcomes:

5854:(i.e., log-odds or natural logarithm of the odds) is equivalent to the linear regression expression.

are the appropriate Lagrange multipliers. The Lagrangian is then the sum of the above three terms:

is equivalent to setting the scale parameter to 1 and then dividing all regression coefficients by

the explanatory variable. In the case of a dichotomous explanatory variable, for instance, gender

13405:(MAP) estimation, an extension of maximum likelihood. (Regularization is most commonly done using

that are specific to the model at hand but the same for all trials. The linear predictor function

1824:, is taken as a measure of the goodness of fit, and the best fit is obtained when that function is

686:), which is widely used to predict mortality in injured patients, was originally developed by Boyd

30200:"Household-Level Model for Hurricane Evacuation Destination Type Choice Using Hurricane Ivan Data"

29508:, which greatly increased the scope of application and the popularity of the logit model. In 1973

Setting the derivative of the Lagrangian with respect to one of the probabilities to zero yields:

15677:{\displaystyle \varepsilon =\varepsilon _{1}-\varepsilon _{0}\sim \operatorname {Logistic} (0,1).}
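
The distributional claim can be checked by simulation. As in the two-way latent-variable formulation, the sketch assumes ε0 and ε1 are independent draws from the standard type-1 extreme-value (Gumbel) distribution. The sample size, seed, and checkpoints are arbitrary choices.

```python
import math
import random

def standard_gumbel(rng: random.Random) -> float:
    """Draw from the standard type-1 extreme-value (Gumbel) distribution via its inverse CDF."""
    u = rng.random()
    while u == 0.0:  # random() can return 0.0, and log(0) is undefined
        u = rng.random()
    return -math.log(-math.log(u))

def logistic_cdf(t: float) -> float:
    return 1.0 / (1.0 + math.exp(-t))

rng = random.Random(0)
n = 100_000
diffs = [standard_gumbel(rng) - standard_gumbel(rng) for _ in range(n)]

# Largest gap between the empirical CDF of eps_1 - eps_0 and the Logistic(0, 1) CDF.
checkpoints = (-2.0, -0.5, 0.0, 1.0, 2.5)
max_gap = max(abs(sum(d <= t for d in diffs) / n - logistic_cdf(t)) for t in checkpoints)
print(max_gap)
```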

8781:. However, in some cases it can be easier to communicate results by working in base 2 or base 10.
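
Changing the base only rescales the coefficients. Since b^(−t) = e^(−t ln b), the coefficients for base b are the natural-log coefficients divided by ln b, and the fitted probabilities stay the same. The short Python check below uses hypothetical base-e coefficients.

```python
import math

def prob_in_base(base: float, betas, xs) -> float:
    """p = 1 / (1 + base ** -(beta_0 + beta_1 x_1 + ... + beta_m x_m))."""
    t = betas[0] + sum(b * x for b, x in zip(betas[1:], xs))
    return 1.0 / (1.0 + base ** (-t))

# Hypothetical coefficients on the natural-log (base e) scale and one predictor value.
betas_e = [-1.0, 0.8]
xs = [2.0]

# Rescale to base 2 (log-odds measured in bits) and to base 10.
betas_2 = [b / math.log(2) for b in betas_e]
betas_10 = [b / math.log(10) for b in betas_e]

p_e = prob_in_base(math.e, betas_e, xs)
p_2 = prob_in_base(2.0, betas_2, xs)
p_10 = prob_in_base(10.0, betas_10, xs)
print(p_e, p_2, p_10)
```

In base 2, a coefficient of 1 means that each unit increase in the predictor doubles the odds.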

studied, the odds of passing (group 1) or failing (group 0) are (expectedly) 2 to 1 (Denis, 2019).

Reports on Biological Standards: Methods of biological assay depending on a quantal response. III

24618:). In linear regression, the significance of a regression coefficient is assessed by computing a

Four of the most commonly used indices and one less commonly used one are examined on this page:

3569:{\displaystyle 0={\frac {\partial \ell }{\partial \beta _{1}}}=\sum _{k=1}^{K}(y_{k}-p_{k})x_{k}}
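
This partial derivative, together with the corresponding one for β0, can be checked against a numerical derivative of the log-likelihood. The minimal sketch below uses hypothetical toy data and trial coefficients. It assumes p_k = 1/(1 + e^(−(β0 + β1 x_k))).

```python
import math

def log_likelihood(b0, b1, xs, ys):
    """ell = sum_k y_k ln p_k + (1 - y_k) ln(1 - p_k), with p_k = logistic(b0 + b1 x_k)."""
    ell = 0.0
    for x, y in zip(xs, ys):
        p = 1.0 / (1.0 + math.exp(-(b0 + b1 * x)))
        ell += y * math.log(p) + (1 - y) * math.log(1 - p)
    return ell

def score(b0, b1, xs, ys):
    """d ell / d b0 = sum(y_k - p_k); d ell / d b1 = sum((y_k - p_k) x_k)."""
    g0 = g1 = 0.0
    for x, y in zip(xs, ys):
        p = 1.0 / (1.0 + math.exp(-(b0 + b1 * x)))
        g0 += y - p
        g1 += (y - p) * x
    return g0, g1

# Hypothetical toy data and trial coefficients.
xs = [0.5, 1.0, 1.5, 2.0, 2.5, 3.0]
ys = [0, 0, 1, 0, 1, 1]
b0, b1 = -1.0, 0.7

# Central finite differences of ell in each coefficient.
h = 1e-6
fd0 = (log_likelihood(b0 + h, b1, xs, ys) - log_likelihood(b0 - h, b1, xs, ys)) / (2 * h)
fd1 = (log_likelihood(b0, b1 + h, xs, ys) - log_likelihood(b0, b1 - h, xs, ys)) / (2 * h)
g0, g1 = score(b0, b1, xs, ys)
print((g0, g1), (fd0, fd1))
```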

Hosmer, D.W. (1997). "A comparison of goodness-of-fit tests for the logistic regression model".

Mesa-Arango, Rodrigo; Hasan, Samiul; Ukkusuri, Satish V.; Murray-Tuite, Pamela (February 2013).

The logistic function was independently rediscovered as a model of population growth in 1920 by

associated with making the associated choice, and thus motivate logistic regression in terms of

is calculated by comparing a given model with the saturated model. This computation gives the

22399:{\displaystyle {\hat {\varepsilon }}_{\varphi }^{2}=\sum _{k=1}^{K}({\overline {y}}-y_{k})^{2}}
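
For ungrouped 0/1 outcomes, the saturated model reproduces every observation exactly (p_k = y_k), so its log-likelihood is 0. The deviance then reduces to −2 times the model's log-likelihood. The sketch below assumes hypothetical fitted probabilities. It also computes the null deviance from an intercept-only model, whose fitted probability is the sample mean ȳ.

```python
import math

def binary_log_likelihood(ps, ys):
    """sum_k y_k ln p_k + (1 - y_k) ln(1 - p_k)."""
    return sum(y * math.log(p) + (1 - y) * math.log(1 - p) for p, y in zip(ps, ys))

ys = [0, 0, 1, 0, 1, 1, 1, 1]
# Hypothetical fitted probabilities from some model.
ps_model = [0.2, 0.3, 0.6, 0.4, 0.7, 0.8, 0.9, 0.6]

# Saturated model: p_k = y_k, so every term is ln(1) = 0.
ll_saturated = 0.0
ll_model = binary_log_likelihood(ps_model, ys)

# Null (intercept-only) model: every fitted probability equals y-bar.
y_bar = sum(ys) / len(ys)
ll_null = binary_log_likelihood([y_bar] * len(ys), ys)

deviance = -2.0 * (ll_model - ll_saturated)
null_deviance = -2.0 * (ll_null - ll_saturated)
print(deviance, null_deviance)
```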

difficult to calculate except in very low dimensions. Now, though, automatic software such as

4789:, and outputs a value between zero and one. For the logit, this is interpreted as taking input

The logistic model was likely first used as an alternative to the probit model in bioassay by

26726:{\displaystyle {\mathcal {L}}={\mathcal {L}}_{ent}+{\mathcal {L}}_{fit}+{\mathcal {L}}_{norm}}

is not as much as 10 times greater; it is only the effect on the odds that is 10 times greater.
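
A numeric illustration of the distinction, assuming a hypothetical baseline probability of 0.2. Multiplying the odds by 10 raises the probability by a factor well under 10.

```python
def odds(p: float) -> float:
    """Odds p / (1 - p) for a probability 0 <= p < 1."""
    return p / (1.0 - p)

def probability(o: float) -> float:
    """Inverse of odds(): o / (1 + o)."""
    return o / (1.0 + o)

p_before = 0.2                      # hypothetical baseline probability (odds 0.25)
odds_after = 10.0 * odds(p_before)  # an effect that multiplies the odds by 10
p_after = probability(odds_after)   # 2.5 / 3.5, roughly 0.71
print(p_after, p_after / p_before)
```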

30695:"No rationale for 1 variable per 10 events criterion for binary logistic regression analysis"

25208:{\displaystyle \operatorname {logit} \operatorname {\mathcal {E}} (Y)=\beta _{0}+\beta _{1}x}

25131:{\displaystyle \operatorname {logit} p=\ln {\frac {p}{1-p}}\quad {\text{for }}0<p<1\,.}

of the event happening for different levels of each independent variable, and then takes its

22909:{\displaystyle \ell =\sum _{k=1}^{K}\left(y_{k}\ln(p(x_{k}))+(1-y_{k})\ln(1-p(x_{k}))\right)}
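
The binary log-likelihood is the two-outcome case of the indicator (Δ) form of the log-likelihood, with p_0 = 1 − p(x_k) and p_1 = p(x_k). Here is a short check with hypothetical fitted probabilities.

```python
import math

def binary_ll(ps, ys):
    """ell = sum_k y_k ln p(x_k) + (1 - y_k) ln(1 - p(x_k))."""
    return sum(y * math.log(p) + (1 - y) * math.log(1 - p) for p, y in zip(ps, ys))

def indicator_ll(ps, ys):
    """The same quantity written with Delta(n, y_k) over outcomes n = 0, 1."""
    total = 0.0
    for p, y in zip(ps, ys):
        outcome_probs = [1 - p, p]  # p_0 = 1 - p(x_k), p_1 = p(x_k)
        for n in (0, 1):
            if n == y:  # Delta(n, y_k) = 1 only for the observed outcome
                total += math.log(outcome_probs[n])
    return total

ps = [0.1, 0.35, 0.8, 0.55]  # hypothetical fitted probabilities p(x_k)
ys = [0, 0, 1, 1]
print(binary_ll(ps, ys), indicator_ll(ps, ys))
```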

16721:). We would then use three latent variables, one for each choice. Then, in accordance with

14140:{\displaystyle Y_{i}^{\ast }={\boldsymbol {\beta }}\cdot \mathbf {X} _{i}+\varepsilon _{i}\,}
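
This simulation assumes the usual rule that the observed Y_i equals 1 exactly when the latent Y_i* is positive. It also assumes ε_i follows the standard logistic distribution. Under those assumptions, the share of simulated positives should approach the logistic function of β·X_i. The coefficients and the observation are hypothetical.

```python
import math
import random

def standard_logistic(rng: random.Random) -> float:
    """Draw from Logistic(0, 1) by inverting its CDF: ln(u / (1 - u))."""
    u = rng.random()
    while u == 0.0:  # avoid log(0)
        u = rng.random()
    return math.log(u / (1.0 - u))

rng = random.Random(1)

# Hypothetical coefficients; X_i carries a leading 1 for the intercept.
beta = [-0.5, 1.0]
X_i = [1.0, 1.2]
linear = sum(b * x for b, x in zip(beta, X_i))  # beta . X_i

n = 100_000
# Y_i = 1 when the latent Y_i* = beta . X_i + eps_i is positive.
share = sum(linear + standard_logistic(rng) > 0 for _ in range(n)) / n
predicted = 1.0 / (1.0 + math.exp(-linear))
print(share, predicted)
```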

for a given observation. The main use-case of a logistic model is to be given an observation

8993:{\displaystyle {\boldsymbol {\beta }}=\{\beta _{0},\beta _{1},\beta _{2},\dots ,\beta _{M}\}}

3284:, determining their optimum values will require numerical methods. One method of maximizing

30302:"A discrete choice model based on random utilities for exit choice in emergency evacuations"

pairs are drawn uniformly from the underlying distribution, then in the limit of large

19271:{\displaystyle {\boldsymbol {\beta }}={\boldsymbol {\beta }}_{1}-{\boldsymbol {\beta }}_{0}}

ensuring that the result is a distribution. This can be seen by exponentiating both sides:

15550:{\displaystyle {\boldsymbol {\beta }}={\boldsymbol {\beta }}_{1}-{\boldsymbol {\beta }}_{0}}

models—makes clear the relationship between logistic regression (the "logit model") and the

14378:(which sets the mean) is equivalent to a distribution with a zero location parameter, where

3453:{\displaystyle 0={\frac {\partial \ell }{\partial \beta _{0}}}=\sum _{k=1}^{K}(y_{k}-p_{k})}
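
The score equations for β0 and β1 are nonlinear in the coefficients, so they are usually solved iteratively. The sketch below uses Newton-Raphson for a single predictor. That is one common choice; the excerpt does not specify which method is used. The toy data are hypothetical and not perfectly separable, so a finite maximum exists.

```python
import math

def fit_logistic_newton(xs, ys, iterations=25):
    """Maximize ell(beta_0, beta_1) by Newton-Raphson for one predictor.

    Gradient: g0 = sum(y - p), g1 = sum((y - p) x).
    Negated Hessian: sum w [[1, x], [x, x^2]] with w = p (1 - p).
    """
    b0 = b1 = 0.0
    for _ in range(iterations):
        g0 = g1 = h00 = h01 = h11 = 0.0
        for x, y in zip(xs, ys):
            p = 1.0 / (1.0 + math.exp(-(b0 + b1 * x)))
            w = p * (1.0 - p)
            g0 += y - p
            g1 += (y - p) * x
            h00 += w
            h01 += w * x
            h11 += w * x * x
        # Solve [[h00, h01], [h01, h11]] @ step = [g0, g1] with the 2x2 inverse.
        det = h00 * h11 - h01 * h01
        b0 += (h11 * g0 - h01 * g1) / det
        b1 += (h00 * g1 - h01 * g0) / det
    return b0, b1

xs = [0.5, 1.0, 1.5, 2.0, 2.5, 3.0]
ys = [0, 0, 1, 0, 1, 1]
b0, b1 = fit_logistic_newton(xs, ys)
print(b0, b1)
```

At the returned coefficients, both score equations should hold to within rounding error.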
