20:

2274:

1178:

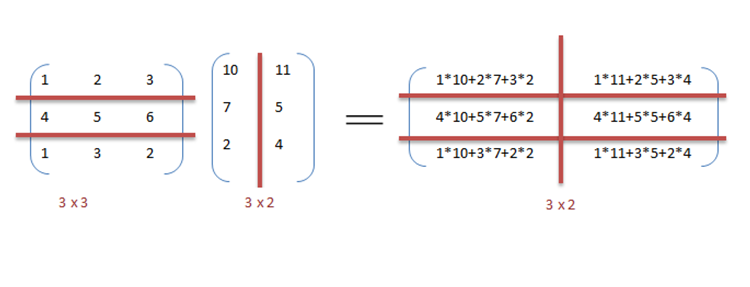

484:. An OpenMP directive, "omp parallel for" instructs the compiler to execute the code in the for loop in parallel. For multiplication, we can divide matrix A and B into blocks along rows and columns respectively. This allows us to calculate every element in matrix C individually thereby making the task parallel. For example:

759:(CPU) A and B, CPU A could add all elements from the top half of the arrays, while CPU B could add all elements from the bottom half of the arrays. Since the two processors work in parallel, the job of performing array addition would take one half the time of performing the same operation in serial using one CPU alone.

1044:

Data parallelism finds its applications in a variety of fields ranging from physics, chemistry, biology, material sciences to signal processing. Sciences imply data parallelism for simulating models like molecular dynamics, sequence analysis of genome data and other physical phenomenon. Driving

91:

Most data parallel hardware supports only a fixed number of parallel levels, often only one. This means that within a parallel operation it is not possible to launch more parallel operations recursively, and means that programmers cannot make use of nested hardware parallelism. The programming

751:

It can be observed from the example that a lot of processors will be required as the matrix sizes keep on increasing. Keeping the execution time low is the priority but as the matrix size increases, we are faced with other constraints like complexity of such a system and its associated costs.

975:

Data and task parallelism, can be simultaneously implemented by combining them together for the same application. This is called Mixed data and task parallelism. Mixed parallelism requires sophisticated scheduling algorithms and software support. It is the best kind of parallelism when

983:

Mixed data and task parallelism finds applications in the global climate modeling. Large data parallel computations are performed by creating grids of data representing Earth's atmosphere and oceans and task parallelism is employed for simulating the function and model of the physical

1084:. Computing applications that devote most of their execution time to computational requirements are deemed compute-intensive, whereas applications are deemed data-intensive require large volumes of data and devote most of their processing time to I/O and manipulation of data.

120:), data parallelism is achieved when each processor performs the same task on different distributed data. In some situations, a single execution thread controls operations on all the data. In others, different threads control the operation, but they execute the same code.

75:

of data operations was also exploited by operating on multiple data at the same time using a single instruction. These processors were called 'array processors'. In the 1980s, the term was introduced to describe this programming style, which was widely used to program

46:

elements can be divided equally among all the processors. Let us assume we want to sum all the elements of the given array and the time for a single addition operation is Ta time units. In the case of sequential execution, the time taken by the process will be

34:

environments. It focuses on distributing the data across different nodes, which operate on the data in parallel. It can be applied on regular data structures like arrays and matrices by working on each element in parallel. It contrasts to

1014:

programming models on multiple platforms of multiprocessor systems. Since version 4.5, OpenMP is also able to target devices other than typical CPUs. It can program FPGAs, DSPs, GPUs and more. It is not confined to GPUs like

810:

Data parallelism emphasizes the distributed (parallel) nature of the data, as opposed to the processing (task parallelism). Most real programs fall somewhere on a continuum between task parallelism and data parallelism.

1007:: It is a cross-platform message passing programming interface for parallel computers. It defines the semantics of library functions to allow users to write portable message passing programs in C, C++ and Fortran.

566:

59:

plays an important part in evaluating the performance of a data parallel programming model. Locality of data depends on the memory accesses performed by the program as well as the size of the cache.

480:

We can exploit data parallelism in the preceding code to execute it faster as the arithmetic is loop independent. Parallelization of the matrix multiplication code is achieved by using

752:

Therefore, constraining the number of processors in the system, we can still apply the same principle and divide the data into bigger chunks to calculate the product of two matrices.

1025:: CUDA and OpenACC (respectively) are parallel computing API platforms designed to allow a software engineer to utilize GPU's computational units for general purpose processing.

51:×Ta time units as it sums up all the elements of an array. On the other hand, if we execute this job as a data parallel job on 4 processors the time taken would reduce to (

556:

192:

515:

221:

101:

71:, was developed to expedite the performance of mathematical operations by working on a large data array (operating on multiple data in consecutive time steps).

1600:

1366:

Boyer, L. L; Pawley, G. S (1988-10-01). "Molecular dynamics of clusters of particles interacting with pairwise forces using a massively parallel computer".

55:/4)×Ta + merging overhead time units. Parallel execution results in a speedup of 4 over sequential execution. One important thing to note is that the

951:

It is fast for small networks but very slow for large networks since large amounts of data needs to be transferred between processors all at once.

67:

Exploitation of the concept of data parallelism started in 1960s with the development of the

Solomon machine. The Solomon machine, also called a

1192:

1690:

1045:

forces in signal processing for data parallelism are video encoding, image and graphics processing, wireless communications to name a few.

1542:

1035:: Both open source programming environments that enable mixed data/task parallelism in C/C++ environments across heterogeneous resources.

96:

was an early effort at implementing a nested data-parallel programming model on flat parallel machines, and in particular introduced the

1241:

1671:

1279:

135:

Below is the sequential pseudo-code for multiplication and addition of two matrices where the result is stored in the matrix

1711:

1938:

1961:

921:

Load balancing depends on the availability of the hardware and scheduling algorithms like static and dynamic scheduling.

1850:

1706:

100:

that transforms nested data parallelism to flat data parallelism. This work was continued by other languages such as

1956:

1933:

1515:

108:, although arbitrary nested data parallelism is not widely available in current data-parallel programming languages.

1479:, "Data-Intensive Technologies for Cloud Computing," by A.M. Middleton. Handbook of Cloud Computing. Springer, 2010.

1535:

901:

Speedup is less as each processor will execute a different thread or process on the same or different set of data.

88:(GPUs), which use both the techniques of operating on multiple data in space and time using a single instruction.

1928:

1743:

991:. The data is divided among different sub-circuits and parallelism is achieved with orchestration from the tasks.

2035:

1949:

1898:

105:

72:

979:

Mixed data and task parallelism has many applications. It is particularly used in the following applications:

2259:

2093:

1944:

1631:

1099:

158:

If the following programs were executed sequentially, the time taken to calculate the result would be of the

1438:

Singh, H.; Lee, Ming-Hau; Lu, Guangming; Kurdahi, F.J.; Bagherzadeh, N.; Filho, E.M. Chaves (2000-06-01).

2299:

2278:

2224:

1684:

1528:

1440:"MorphoSys: an integrated reconfigurable system for data-parallel and computation-intensive applications"

1000:

A variety of data parallel programming environments are available today, most widely used of which are:

2203:

1998:

1883:

1845:

1695:

1585:

1158:, it's assumed that the loop will exit immediately (i.e. zero iterations will occur) when this happens.

1104:

915:

1401:

Yap, T.K.; Frieder, O.; Martino, R.L. (1998). "Parallel computation in biological sequence analysis".

2219:

2198:

2143:

2030:

2020:

1993:

1855:

1500:

1295:

1227:

1028:

1004:

755:

For addition of arrays in a data parallel implementation, let's assume a more modest system with two

97:

959:

Data parallelism is ideally used in array and matrix computations and convolutional neural networks

2173:

1799:

1738:

1651:

1415:

1337:

1124:

1061:

1055:

85:

2234:

2229:

2088:

1679:

1010:

Open Multi

Processing (Open MP): It's an Application Programming Interface (API) which supports

756:

1973:

1905:

1809:

1701:

1656:

1410:

819:

The process of parallelizing a sequential program can be broken down into four discrete steps.

1476:

943:

Same model is used for every thread but the data given to each of them is divided and shared.

909:

Amount of parallelization is proportional to the number of independent tasks to be performed.

520:

161:

2065:

2025:

1978:

1968:

1763:

1626:

1565:

124:

56:

19:

565:

2005:

1893:

1888:

1878:

1865:

1661:

1375:

1114:

491:

197:

8:

2168:

2123:

1923:

1789:

1379:

2193:

2042:

2015:

1840:

1804:

1794:

1753:

1595:

1575:

1570:

1551:

1065:

988:

77:

31:

1249:

2239:

1915:

1873:

1768:

1511:

1459:

1387:

1275:

898:

Speedup is more as there is only one execution thread operating on all sets of data.

836:

The program is broken down into tasks, the smallest exploitable unit of concurrence.

2249:

2048:

1983:

1830:

1641:

1636:

1605:

1451:

1439:

1420:

1383:

1320:

68:

36:

2113:

2053:

1988:

1835:

1825:

1758:

1748:

1590:

1580:

2244:

2060:

1717:

1610:

1119:

1094:

2293:

2133:

2010:

1487:

1463:

1214:

1069:

1011:

792:

lower_limit := round(d.length / 2) + 1 upper_limit := d.length

16:

Parallelization across multiple processors in parallel computing environments

1733:

2254:

140:

807:

system executed on 2 processor system, both CPUs will execute the code.

1495:

1491:

1222:

1218:

1109:

763:

1455:

1424:

1296:"How to Parallelize Deep Learning on GPUs Part 2/2: Model Parallelism"

946:

Same data is used for every thread, and model is split among threads.

2128:

2103:

1520:

1077:

1073:

194:(assuming row lengths and column lengths of both matrices are n) and

2178:

2158:

2083:

1081:

116:

In a multiprocessor system executing a single set of instructions (

906:

Amount of parallelization is proportional to the input data size.

885:

Different operations are performed on the same or different data.

784:

lower_limit := 1 upper_limit := round(d.length / 2)

2183:

2163:

2138:

1773:

1032:

1022:

882:

Same operations are performed on different subsets of same data.

127:

and addition in a sequential manner as discussed in the example.

2153:

2148:

481:

852:

Data access, communication, and synchronization of processes.

2188:

2118:

2108:

1018:

954:

It is slow for small networks and fast for large networks.

804:

117:

93:

962:

Model parallelism finds its applications in deep learning

2098:

2075:

995:

976:

communication is slow and number of processors is large.

927:

1345:

866:

578:#pragma omp parallel for schedule(dynamic,1) collapse(2)

81:

1403:

IEEE Transactions on

Parallel and Distributed Systems

523:

494:

200:

164:

1072:

approach to process large volumes of data typically

139:. The pseudo-code for multiplication calculates the

970:

550:

509:

215:

186:

1437:

1400:

84:. Today, data parallelism is best exemplified in

30:is parallelization across multiple processors in

2291:

766:below—which applies some arbitrary operation,

223:for multiplication and addition respectively.

1536:

151:and stores the result into the output matrix

1365:

1048:

814:

1543:

1529:

23:Sequential vs. data-parallel job execution

1508:Vector Models for Data-Parallel Computing

1414:

569:Data parallelism in matrix multiplication

564:

18:

1269:

796:i from lower_limit to upper_limit by 1

2292:

1550:

1263:

996:Data parallel programming environments

928:Data parallelism vs. model parallelism

1524:

1272:Fundamentals of Parallel Architecture

1080:in size and typically referred to as

867:Data parallelism vs. task parallelism

1242:"Introduction to Parallel Computing"

575:// Matrix multiplication in parallel

1150:rounds towards zero ) will lead to

860:Processes are bound to processors.

42:A data parallel job on an array of

13:

1239:

14:

2311:

844:Tasks are assigned to processes.

2273:

2272:

1368:Journal of Computational Physics

1054:This section is an excerpt from

770:, on every element in the array

558:when executed in parallel using

80:in data parallel languages like

39:as another form of parallelism.

1744:Analysis of parallel algorithms

1470:

1431:

1394:

1359:

1039:

971:Mixed data and task parallelism

774:—illustrates data parallelism:

1444:IEEE Transactions on Computers

1330:

1313:

1288:

1233:

1208:

1185:

1171:

1136:

545:

527:

504:

498:

210:

204:

181:

168:

111:

1:

1691:Simultaneous and heterogenous

1274:. Boca Raton, FL: CRC Press.

1164:

1100:Instruction level parallelism

2279:Category: Parallel computing

1388:10.1016/0021-9991(88)90057-5

7:

1477:Handbook of Cloud Computing

1142:Some input data (e.g. when

1088:

918:on multi processor system.

57:locality of data references

10:

2316:

1586:High-performance computing

1105:Parallel programming model

1053:

130:

62:

2268:

2220:Automatic parallelization

2212:

2074:

1914:

1864:

1856:Application checkpointing

1818:

1782:

1726:

1670:

1619:

1558:

1501:Communications of the ACM

1228:Communications of the ACM

1068:applications which use a

1029:Threading Building Blocks

1005:Message Passing Interface

893:Asynchronous computation

762:The program expressed in

98:flattening transformation

86:graphics processing units

1497:Data Parallel Algorithms

1224:Data Parallel Algorithms

1130:

1125:Thread level parallelism

1062:Data-intensive computing

1056:Data-intensive computing

1049:Data-intensive computing

890:Synchronous computation

815:Steps to parallelization

757:central processing units

572:

551:{\displaystyle O(m*n*k)}

409:

228:// Matrix multiplication

225:

187:{\displaystyle O(n^{3})}

2235:Embarrassingly parallel

2230:Deterministic algorithm

123:For instance, consider

1950:Associative processing

1906:Non-blocking algorithm

1712:Clustered multi-thread

1179:"The Solomon Computer"

570:

552:

511:

217:

188:

24:

2066:Hardware acceleration

1979:Superscalar processor

1969:Dataflow architecture

1566:Distributed computing

1270:Solihin, Yan (2016).

914:Designed for optimum

568:

553:

512:

218:

189:

125:matrix multiplication

102:Data Parallel Haskell

22:

1945:Pipelined processing

1894:Explicit parallelism

1889:Implicit parallelism

1879:Dataflow programming

1115:Scalable parallelism

521:

510:{\displaystyle O(n)}

492:

216:{\displaystyle O(n)}

198:

162:

2169:Parallel Extensions

1974:Pipelined processor

1380:1988JCoPh..78..405B

1154:being greater than

1146:evaluates to 1 and

488:can be finished in

78:Connection Machines

2300:Parallel computing

2043:Massively parallel

2021:distributed shared

1841:Cache invalidation

1805:Instruction window

1596:Manycore processor

1576:Massively parallel

1571:Parallel computing

1552:Parallel computing

1246:computing.llnl.gov

1066:parallel computing

989:circuit simulation

938:Model parallelism

571:

548:

507:

213:

184:

32:parallel computing

25:

2287:

2286:

2240:Parallel slowdown

1874:Stream processing

1764:Karp–Flatt metric

1506:Blelloch, Guy E,

1488:Hillis, W. Daniel

1456:10.1109/12.859540

1425:10.1109/71.674320

1281:978-1-4822-1118-4

1215:Hillis, W. Daniel

1193:"SIMD/Vector/GPU"

966:

965:

935:Data parallelism

925:

924:

877:Task parallelism

874:Data parallelism

864:

863:

412:// Array addition

2307:

2276:

2275:

2250:Software lockout

2049:Computer cluster

1984:Vector processor

1939:Array processing

1924:Flynn's taxonomy

1831:Memory coherence

1606:Computer network

1545:

1538:

1531:

1522:

1521:

1510:MIT Press 1990.

1509:

1498:

1480:

1474:

1468:

1467:

1435:

1429:

1428:

1418:

1398:

1392:

1391:

1363:

1357:

1356:

1354:

1353:

1344:. Archived from

1334:

1328:

1327:

1325:

1317:

1311:

1310:

1308:

1307:

1292:

1286:

1285:

1267:

1261:

1260:

1258:

1257:

1248:. Archived from

1240:Barney, Blaise.

1237:

1231:

1225:

1212:

1206:

1205:

1203:

1202:

1197:

1189:

1183:

1182:

1175:

1159:

1157:

1153:

1149:

1145:

1140:

987:In timing based

932:

931:

871:

870:

822:

821:

773:

769:

747:

744:

741:

738:

735:

732:

729:

726:

723:

720:

717:

714:

711:

708:

705:

702:

699:

696:

693:

690:

687:

684:

681:

678:

675:

672:

669:

666:

663:

660:

657:

654:

651:

648:

645:

642:

639:

636:

633:

630:

627:

624:

621:

618:

615:

612:

609:

606:

603:

600:

597:

594:

591:

588:

585:

582:

579:

576:

557:

555:

554:

549:

516:

514:

513:

508:

476:

473:

470:

467:

464:

461:

458:

455:

452:

449:

446:

443:

440:

437:

434:

431:

428:

425:

422:

419:

416:

413:

406:

403:

400:

397:

394:

391:

388:

385:

382:

379:

376:

373:

370:

367:

364:

361:

358:

355:

352:

349:

346:

343:

340:

337:

334:

331:

328:

325:

322:

319:

316:

313:

310:

307:

304:

301:

298:

295:

292:

289:

286:

283:

280:

277:

274:

271:

268:

265:

262:

259:

256:

253:

250:

247:

244:

241:

238:

235:

232:

229:

222:

220:

219:

214:

193:

191:

190:

185:

180:

179:

143:of two matrices

69:vector processor

37:task parallelism

28:Data parallelism

2315:

2314:

2310:

2309:

2308:

2306:

2305:

2304:

2290:

2289:

2288:

2283:

2264:

2208:

2114:Coarray Fortran

2070:

2054:Beowulf cluster

1910:

1860:

1851:Synchronization

1836:Cache coherence

1826:Multiprocessing

1814:

1778:

1759:Cost efficiency

1754:Gustafson's law

1722:

1666:

1615:

1591:Multiprocessing

1581:Cloud computing

1554:

1549:

1507:

1496:

1484:

1483:

1475:

1471:

1436:

1432:

1399:

1395:

1364:

1360:

1351:

1349:

1336:

1335:

1331:

1323:

1319:

1318:

1314:

1305:

1303:

1294:

1293:

1289:

1282:

1268:

1264:

1255:

1253:

1238:

1234:

1223:

1213:

1209:

1200:

1198:

1195:

1191:

1190:

1186:

1177:

1176:

1172:

1167:

1162:

1155:

1151:

1147:

1143:

1141:

1137:

1133:

1091:

1086:

1085:

1059:

1051:

1042:

998:

973:

930:

869:

817:

801:

771:

767:

749:

748:

745:

742:

739:

736:

733:

730:

727:

724:

721:

718:

715:

712:

709:

706:

703:

700:

697:

695:column_length_A

694:

691:

688:

685:

682:

679:

676:

673:

670:

667:

664:

661:

658:

655:

652:

649:

646:

644:column_length_B

643:

640:

637:

634:

631:

628:

625:

622:

619:

616:

613:

610:

607:

604:

601:

598:

595:

592:

589:

586:

583:

580:

577:

574:

522:

519:

518:

493:

490:

489:

478:

477:

474:

471:

468:

465:

462:

459:

456:

453:

450:

447:

444:

441:

438:

435:

432:

429:

426:

423:

420:

417:

414:

411:

408:

407:

404:

401:

398:

395:

392:

389:

386:

383:

380:

377:

374:

371:

368:

365:

362:

359:

356:

353:

351:column_length_A

350:

347:

344:

341:

338:

335:

332:

329:

326:

323:

320:

317:

314:

311:

308:

305:

302:

299:

297:column_length_B

296:

293:

290:

287:

284:

281:

278:

275:

272:

269:

266:

263:

260:

257:

254:

251:

248:

245:

242:

239:

236:

233:

230:

227:

199:

196:

195:

175:

171:

163:

160:

159:

154:

150:

146:

138:

133:

114:

65:

17:

12:

11:

5:

2313:

2303:

2302:

2285:

2284:

2282:

2281:

2269:

2266:

2265:

2263:

2262:

2257:

2252:

2247:

2245:Race condition

2242:

2237:

2232:

2227:

2222:

2216:

2214:

2210:

2209:

2207:

2206:

2201:

2196:

2191:

2186:

2181:

2176:

2171:

2166:

2161:

2156:

2151:

2146:

2141:

2136:

2131:

2126:

2121:

2116:

2111:

2106:

2101:

2096:

2091:

2086:

2080:

2078:

2072:

2071:

2069:

2068:

2063:

2058:

2057:

2056:

2046:

2040:

2039:

2038:

2033:

2028:

2023:

2018:

2013:

2003:

2002:

2001:

1996:

1989:Multiprocessor

1986:

1981:

1976:

1971:

1966:

1965:

1964:

1959:

1954:

1953:

1952:

1947:

1942:

1931:

1920:

1918:

1912:

1911:

1909:

1908:

1903:

1902:

1901:

1896:

1891:

1881:

1876:

1870:

1868:

1862:

1861:

1859:

1858:

1853:

1848:

1843:

1838:

1833:

1828:

1822:

1820:

1816:

1815:

1813:

1812:

1807:

1802:

1797:

1792:

1786:

1784:

1780:

1779:

1777:

1776:

1771:

1766:

1761:

1756:

1751:

1746:

1741:

1736:

1730:

1728:

1724:

1723:

1721:

1720:

1718:Hardware scout

1715:

1709:

1704:

1699:

1693:

1688:

1682:

1676:

1674:

1672:Multithreading

1668:

1667:

1665:

1664:

1659:

1654:

1649:

1644:

1639:

1634:

1629:

1623:

1621:

1617:

1616:

1614:

1613:

1611:Systolic array

1608:

1603:

1598:

1593:

1588:

1583:

1578:

1573:

1568:

1562:

1560:

1556:

1555:

1548:

1547:

1540:

1533:

1525:

1519:

1518:

1504:

1492:Steele, Guy L.

1482:

1481:

1469:

1450:(5): 465–481.

1430:

1416:10.1.1.30.2819

1409:(3): 283–294.

1393:

1374:(2): 405–423.

1358:

1329:

1312:

1287:

1280:

1262:

1232:

1219:Steele, Guy L.

1207:

1184:

1169:

1168:

1166:

1163:

1161:

1160:

1134:

1132:

1129:

1128:

1127:

1122:

1120:Segmented scan

1117:

1112:

1107:

1102:

1097:

1095:Active message

1090:

1087:

1064:is a class of

1060:

1052:

1050:

1047:

1041:

1038:

1037:

1036:

1026:

1016:

1008:

997:

994:

993:

992:

985:

972:

969:

964:

963:

960:

956:

955:

952:

948:

947:

944:

940:

939:

936:

929:

926:

923:

922:

919:

911:

910:

907:

903:

902:

899:

895:

894:

891:

887:

886:

883:

879:

878:

875:

868:

865:

862:

861:

858:

854:

853:

850:

849:Orchestration

846:

845:

842:

838:

837:

834:

833:Decomposition

830:

829:

826:

816:

813:

776:

573:

547:

544:

541:

538:

535:

532:

529:

526:

506:

503:

500:

497:

410:

226:

212:

209:

206:

203:

183:

178:

174:

170:

167:

152:

148:

144:

136:

132:

129:

113:

110:

64:

61:

15:

9:

6:

4:

3:

2:

2312:

2301:

2298:

2297:

2295:

2280:

2271:

2270:

2267:

2261:

2258:

2256:

2253:

2251:

2248:

2246:

2243:

2241:

2238:

2236:

2233:

2231:

2228:

2226:

2223:

2221:

2218:

2217:

2215:

2211:

2205:

2202:

2200:

2197:

2195:

2192:

2190:

2187:

2185:

2182:

2180:

2177:

2175:

2172:

2170:

2167:

2165:

2162:

2160:

2157:

2155:

2152:

2150:

2147:

2145:

2142:

2140:

2137:

2135:

2134:Global Arrays

2132:

2130:

2127:

2125:

2122:

2120:

2117:

2115:

2112:

2110:

2107:

2105:

2102:

2100:

2097:

2095:

2092:

2090:

2087:

2085:

2082:

2081:

2079:

2077:

2073:

2067:

2064:

2062:

2061:Grid computer

2059:

2055:

2052:

2051:

2050:

2047:

2044:

2041:

2037:

2034:

2032:

2029:

2027:

2024:

2022:

2019:

2017:

2014:

2012:

2009:

2008:

2007:

2004:

2000:

1997:

1995:

1992:

1991:

1990:

1987:

1985:

1982:

1980:

1977:

1975:

1972:

1970:

1967:

1963:

1960:

1958:

1955:

1951:

1948:

1946:

1943:

1940:

1937:

1936:

1935:

1932:

1930:

1927:

1926:

1925:

1922:

1921:

1919:

1917:

1913:

1907:

1904:

1900:

1897:

1895:

1892:

1890:

1887:

1886:

1885:

1882:

1880:

1877:

1875:

1872:

1871:

1869:

1867:

1863:

1857:

1854:

1852:

1849:

1847:

1844:

1842:

1839:

1837:

1834:

1832:

1829:

1827:

1824:

1823:

1821:

1817:

1811:

1808:

1806:

1803:

1801:

1798:

1796:

1793:

1791:

1788:

1787:

1785:

1781:

1775:

1772:

1770:

1767:

1765:

1762:

1760:

1757:

1755:

1752:

1750:

1747:

1745:

1742:

1740:

1737:

1735:

1732:

1731:

1729:

1725:

1719:

1716:

1713:

1710:

1708:

1705:

1703:

1700:

1697:

1694:

1692:

1689:

1686:

1683:

1681:

1678:

1677:

1675:

1673:

1669:

1663:

1660:

1658:

1655:

1653:

1650:

1648:

1645:

1643:

1640:

1638:

1635:

1633:

1630:

1628:

1625:

1624:

1622:

1618:

1612:

1609:

1607:

1604:

1602:

1599:

1597:

1594:

1592:

1589:

1587:

1584:

1582:

1579:

1577:

1574:

1572:

1569:

1567:

1564:

1563:

1561:

1557:

1553:

1546:

1541:

1539:

1534:

1532:

1527:

1526:

1523:

1517:

1516:0-262-02313-X

1513:

1505:

1503:December 1986

1502:

1499:

1493:

1489:

1486:

1485:

1478:

1473:

1465:

1461:

1457:

1453:

1449:

1445:

1441:

1434:

1426:

1422:

1417:

1412:

1408:

1404:

1397:

1389:

1385:

1381:

1377:

1373:

1369:

1362:

1348:on 2016-09-05

1347:

1343:

1339:

1333:

1322:

1316:

1301:

1297:

1291:

1283:

1277:

1273:

1266:

1252:on 2013-06-10

1251:

1247:

1243:

1236:

1230:December 1986

1229:

1226:

1220:

1216:

1211:

1194:

1188:

1180:

1174:

1170:

1139:

1135:

1126:

1123:

1121:

1118:

1116:

1113:

1111:

1108:

1106:

1103:

1101:

1098:

1096:

1093:

1092:

1083:

1079:

1075:

1071:

1070:data parallel

1067:

1063:

1057:

1046:

1034:

1030:

1027:

1024:

1020:

1017:

1013:

1012:shared memory

1009:

1006:

1003:

1002:

1001:

990:

986:

982:

981:

980:

977:

968:

961:

958:

957:

953:

950:

949:

945:

942:

941:

937:

934:

933:

920:

917:

913:

912:

908:

905:

904:

900:

897:

896:

892:

889:

888:

884:

881:

880:

876:

873:

872:

859:

856:

855:

851:

848:

847:

843:

840:

839:

835:

832:

831:

827:

824:

823:

820:

812:

808:

806:

799:

795:

791:

787:

783:

779:

775:

765:

760:

758:

753:

567:

563:

562:processors.

561:

542:

539:

536:

533:

530:

524:

501:

495:

487:

483:

224:

207:

201:

176:

172:

165:

156:

142:

128:

126:

121:

119:

109:

107:

103:

99:

95:

89:

87:

83:

79:

74:

70:

60:

58:

54:

50:

45:

40:

38:

33:

29:

21:

1819:Coordination

1749:Amdahl's law

1685:Simultaneous

1646:

1472:

1447:

1443:

1433:

1406:

1402:

1396:

1371:

1367:

1361:

1350:. Retrieved

1346:the original

1341:

1338:"OpenMP.org"

1332:

1321:"The Netlib"

1315:

1304:. Retrieved

1302:. 2014-11-09

1300:Tim Dettmers

1299:

1290:

1271:

1265:

1254:. Retrieved

1250:the original

1245:

1235:

1210:

1199:. Retrieved

1187:

1173:

1138:

1043:

1040:Applications

999:

978:

974:

967:

916:load balance

828:Description

818:

809:

802:

797:

793:

789:

785:

781:

777:

761:

754:

750:

605:row_length_A

559:

485:

479:

255:row_length_A

157:

134:

122:

115:

90:

66:

52:

48:

43:

41:

27:

26:

2255:Scalability

2016:distributed

1899:Concurrency

1866:Programming

1707:Cooperative

1696:Speculative

1632:Instruction

1156:upper_limit

1152:lower_limit

841:Assignment

517:instead of

141:dot product

112:Description

73:Concurrency

2260:Starvation

1999:asymmetric

1734:PRAM model

1702:Preemptive

1352:2016-09-07

1342:openmp.org

1306:2016-09-13

1256:2016-09-07

1201:2016-09-07

1165:References

1110:Prefix sum

984:processes.

788:CPU = "b"

780:CPU = "a"

764:pseudocode

1994:symmetric

1739:PEM model

1464:0018-9340

1411:CiteSeerX

1078:petabytes

1074:terabytes

540:∗

534:∗

92:language

2294:Category

2225:Deadlock

2213:Problems

2179:pthreads

2159:OpenHMPP

2084:Ateji PX

2045:computer

1916:Hardware

1783:Elements

1769:Slowdown

1680:Temporal

1662:Pipeline

1144:d.length

1089:See also

1082:big data

1015:OpenACC.

857:Mapping

486:A dot B

2184:RaftLib

2164:OpenACC

2139:GPUOpen

2129:C++ AMP

2104:Charm++

1846:Barrier

1790:Process

1774:Speedup

1559:General

1376:Bibcode

1033:RaftLib

1023:OpenACC

800:foo(d)

786:else if

131:Example

106:Futhark

63:History

2277:

2154:OpenCL

2149:OpenMP

2094:Chapel

2011:shared

2006:Memory

1941:(SIMT)

1884:Models

1795:Thread

1727:Theory

1698:(SpMT)

1652:Memory

1637:Thread

1620:Levels

1514:

1462:

1413:

1278:

803:In an

482:OpenMP

2124:Dryad

2089:Boost

1810:Array

1800:Fiber

1714:(CMT)

1687:(SMT)

1601:GPGPU

1324:(PDF)

1196:(PDF)

1148:round

1131:Notes

825:Type

2189:ROCm

2119:CUDA

2109:Cilk

2076:APIs

2036:COMA

2031:NUMA

1962:MIMD

1957:MISD

1934:SIMD

1929:SISD

1657:Loop

1647:Data

1642:Task

1512:ISBN

1490:and

1460:ISSN

1276:ISBN

1217:and

1031:and

1021:and

1019:CUDA

805:SPMD

790:then

782:then

692:<

641:<

602:<

436:<

348:<

294:<

252:<

118:SIMD

104:and

94:NESL

2204:ZPL

2199:TBB

2194:UPC

2174:PVM

2144:MPI

2099:HPX

2026:UMA

1627:Bit

1452:doi

1421:doi

1384:doi

1076:or

794:for

768:foo

737:sum

710:sum

671:for

659:sum

620:for

581:for

560:m*k

415:for

396:sum

369:sum

327:for

315:sum

273:for

231:for

2296::

1494:,

1458:.

1448:49

1446:.

1442:.

1419:.

1405:.

1382:.

1372:78

1370:.

1340:.

1298:.

1244:.

1221:,

798:do

778:if

713:+=

707:){

704:++

656:){

653:++

617:){

614:++

448:++

372:+=

360:++

306:++

264:++

155:.

147:,

82:C*

1544:e

1537:t

1530:v

1466:.

1454::

1427:.

1423::

1407:9

1390:.

1386::

1378::

1355:.

1326:.

1309:.

1284:.

1259:.

1204:.

1181:.

1058:.

772:d

746:}

743:}

740:;

734:=

731:C

728:}

725:;

722:B

719:*

716:A

701:j

698:;

689:j

686:;

683:0

680:=

677:j

674:(

668:;

665:0

662:=

650:k

647:;

638:k

635:;

632:0

629:=

626:k

623:(

611:i

608:;

599:i

596:;

593:0

590:=

587:i

584:(

546:)

543:k

537:n

531:m

528:(

525:O

505:)

502:n

499:(

496:O

475:}

472:;

469:b

466:+

463:a

460:=

457:c

454:{

451:)

445:i

442:;

439:n

433:i

430:;

427:0

424:=

421:i

418:(

405:}

402:}

399:;

393:=

390:C

387:}

384:;

381:B

378:*

375:A

366:{

363:)

357:j

354:;

345:j

342:;

339:0

336:=

333:j

330:(

324:;

321:0

318:=

312:{

309:)

303:k

300:;

291:k

288:;

285:0

282:=

279:k

276:(

270:{

267:)

261:i

258:;

249:i

246:;

243:0

240:=

237:i

234:(

211:)

208:n

205:(

202:O

182:)

177:3

173:n

169:(

166:O

153:C

149:B

145:A

137:C

53:n

49:n

44:n

Text is available under the Creative Commons Attribution-ShareAlike License. Additional terms may apply.