With a prefetch stride of $k = 7$, the system prefetches 7 elements ahead: in the first iteration, $i$ is 0, so the system prefetches array1[7]. With this arrangement, the first 7 accesses ($i = 0$ to $6$) will still be misses (under the simplifying assumption that each element of array1 occupies a separate cache line of its own).
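The miss count stated here can be checked with a small simulation. This is a hypothetical model, not a cache simulator. It assumes that each element occupies its own cache line, that nothing is evicted, and that a prefetch issued $k$ iterations early always arrives in time. It also uses $k = 0$ to mean "no prefetching". The name `count_demand_misses` is illustrative.

```c
#include <assert.h>
#include <stdbool.h>
#include <string.h>

#define MAX_ELEMS 1024

/* Counts demand misses for the loop
 *     for (i = 0; i < n; i++) { prefetch(array1[i + k]); array1[i] = 2 * array1[i]; }
 * Simplifying assumptions (hypothetical model): one element per cache line,
 * no evictions, prefetches issued k iterations early always arrive in time,
 * the cache starts empty, and k == 0 models a loop without prefetching. */
unsigned count_demand_misses(unsigned n, unsigned k)
{
    static bool cached[MAX_ELEMS]; /* cached[j]: line holding array1[j] is present */
    unsigned misses = 0;

    assert(n <= MAX_ELEMS);
    memset(cached, 0, sizeof cached);
    for (unsigned i = 0; i < n; i++) {
        if (k > 0 && i + k < n)
            cached[i + k] = true;  /* prefetch(array1[i + k]) */
        if (!cached[i]) {
            misses++;              /* demand miss on array1[i] */
            cached[i] = true;      /* the miss brings the line into the cache */
        }
    }
    return misses;
}
```

Under this model, `count_demand_misses(1024, 7)` returns 7 (the accesses for $i = 0$ to $6$), which matches the text. With `k = 0`, all 1024 accesses miss.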

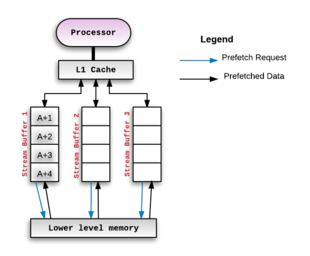

This buffer is called a stream buffer and is separate from the cache. The processor then consumes data or instructions from the stream buffer when the address of a prefetched block matches the address requested by the program executing on the processor. The accompanying figure illustrates this setup.

While it might appear that perfect accuracy implies that there are no misses, this is not the case. The prefetches themselves might cause new misses if the prefetched blocks are placed directly into the cache, for example by evicting blocks that are still needed. Although these may be a small fraction of the total number of misses observed without any prefetching, they are still a non-zero number of misses.

This class of prefetchers looks for memory access streams that repeat over time. For example, in the stream of memory accesses N, A, B, C, E, G, H, A, B, C, I, J, K, A, B, C, L, M, N, O, A, B, C, ..., the stream A, B, C repeats over time. Other design variations have tried to provide more efficient, performant implementations.
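Below is a minimal sketch of the idea. It assumes the simplest possible correlation table: for each address, remember only the address that followed it last time, and prefetch that successor when the address recurs. Published temporal prefetchers (for example, Markov-predictor designs) usually keep several candidate successors per address. The names `temporal_prefetcher`, `tp_init` and `tp_access`, and the 256-entry address space, are hypothetical.

```c
#include <assert.h>

#define TABLE_SIZE 256       /* hypothetical: addresses limited to 0..255 */
#define NO_PREDICTION (-1)

/* Last-successor correlation table (a simplification, see lead-in). */
typedef struct {
    int successor[TABLE_SIZE]; /* last observed successor, or NO_PREDICTION */
    int prev;                  /* previously accessed address, or NO_PREDICTION */
} temporal_prefetcher;

void tp_init(temporal_prefetcher *tp)
{
    for (int i = 0; i < TABLE_SIZE; i++)
        tp->successor[i] = NO_PREDICTION;
    tp->prev = NO_PREDICTION;
}

/* Records an access to addr and returns the address to prefetch next,
 * or NO_PREDICTION if addr has no recorded successor yet. */
int tp_access(temporal_prefetcher *tp, int addr)
{
    assert(addr >= 0 && addr < TABLE_SIZE);
    if (tp->prev != NO_PREDICTION)
        tp->successor[tp->prev] = addr; /* learn the pair prev -> addr */
    tp->prev = addr;
    return tp->successor[addr];
}
```

If you feed it the example stream (N, A, B, C, E, G, H, A, ...), it returns `NO_PREDICTION` for addresses seen for the first time. From the second A onward, it predicts B after A and C after B.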

Whenever the prefetch mechanism detects a miss on a memory block, say A, it allocates a stream to begin prefetching successive blocks from the missed block onward. If the stream buffer can hold 4 blocks, the processor prefetches A+1, A+2, A+3 and A+4 and holds them in the allocated stream buffer.

Hardware-based prefetching is typically accomplished by having a dedicated hardware mechanism in the processor that watches the stream of instructions or data being requested by the executing program. The mechanism recognizes the next few elements that the program might need based on this stream and prefetches them into the processor's cache.

Computer applications generate a variety of access patterns. The processor and memory subsystem architectures used to execute these applications further shape the memory access patterns they generate. Hence, the effectiveness and efficiency of prefetching schemes often depend on the application and the architectures used to execute it.

Cache prefetching is a technique used by computer processors to boost execution performance by fetching instructions or data from their original storage in slower memory to a faster local memory before they are actually needed (hence the term 'prefetch'). Most modern computer processors have fast, local cache memory in which prefetched data is held until it is required.

This mechanism can be scaled up by adding multiple such stream buffers, each of which maintains a separate prefetch stream. For each new miss, a new stream buffer is allocated, and it operates in the same way as a single stream buffer.

If the processor consumes A+1 next, that block is moved "up" from the stream buffer into the processor's cache. The first entry of the stream buffer is now A+2, and so on. This pattern of prefetching successive blocks is called sequential prefetching.
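The allocate-and-consume behavior of a stream buffer can be modeled in a few lines. This is a sketch under stated assumptions, not Jouppi's hardware:

- Addresses are block numbers.
- Only the head entry is compared, so A+1 must be consumed before A+2 reaches the head.
- The depth is 4, as in the example.
- All names are hypothetical.

```c
#include <stdbool.h>

#define DEPTH 4 /* stream buffer depth, as in the text's example */

typedef struct {
    unsigned long blocks[DEPTH]; /* circular FIFO; blocks[head] is the head entry */
    unsigned head;               /* index of the head entry */
    bool valid;                  /* false until the first allocation */
} stream_buffer;

/* On a miss to block a, (re)allocate the stream: prefetch a+1 .. a+DEPTH. */
static void sb_allocate(stream_buffer *sb, unsigned long a)
{
    for (unsigned i = 0; i < DEPTH; i++)
        sb->blocks[i] = a + 1 + i;
    sb->head = 0;
    sb->valid = true;
}

/* Called on a cache miss for block a. Returns true if the head of the
 * stream buffer supplies the block (it then moves "up" into the cache);
 * otherwise the miss goes to memory and a new stream starting after a
 * is allocated. */
bool sb_lookup(stream_buffer *sb, unsigned long a)
{
    if (sb->valid && sb->blocks[sb->head] == a) {
        /* Pop the head and prefetch the next sequential block into the
         * freed slot, which becomes the new tail. */
        unsigned long tail = sb->blocks[(sb->head + DEPTH - 1) % DEPTH];
        sb->blocks[sb->head] = tail + 1;
        sb->head = (sb->head + 1) % DEPTH;
        return true;
    }
    sb_allocate(sb, a);
    return false;
}
```

Several buffers, one per stream, would be independent instances of `stream_buffer`. Some replacement policy (for example, least recently used) would choose which buffer to reallocate on a miss that no buffer covers.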

Software prefetching works well only with loops that have regular array accesses, because the programmer has to hand-code the prefetch instructions. Hardware prefetchers, by contrast, work dynamically based on the program's behavior at runtime.

Recent research has focused on building collaborative mechanisms that use multiple prefetching schemes together for better prefetching coverage and accuracy.

At each iteration, the $i$th element of the array array1 is accessed. Therefore, the system can prefetch the elements that will be accessed in future iterations by inserting a prefetch instruction for array1[i + k] into the loop body, where $k$ is the prefetch stride.
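Below is a runnable version of this loop, assuming GCC or Clang. The generic prefetch instruction is mapped to the compiler builtin `__builtin_prefetch`, and the stride is fixed at 7 as in the worked example. `PREFETCH_STRIDE` and `double_in_place` are hypothetical names; a real stride would come from measurement.

```c
#include <stddef.h>

#define PREFETCH_STRIDE 7 /* k: hypothetical, matches the 49/7 example */

/* Doubles every element of array1 in place, prefetching k elements ahead.
 * __builtin_prefetch is a GCC/Clang extension; it is only a hint and does
 * not change the program's results. */
void double_in_place(int *array1, size_t n)
{
    for (size_t i = 0; i < n; i++) {
        /* Only form addresses inside the array: prefetching past the end is
         * pointless, and computing such a pointer is undefined behavior. */
        if (i + PREFETCH_STRIDE < n)
            __builtin_prefetch(&array1[i + PREFETCH_STRIDE],
                               1 /* prefetch for a write */,
                               3 /* high temporal locality */);
        array1[i] = 2 * array1[i];
    }
}
```

The prefetch is skipped in the last `PREFETCH_STRIDE` iterations, because those elements were already requested earlier in the loop.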

In this case, the delta between the addresses of consecutive memory accesses is variable but still follows a pattern. Some prefetcher designs exploit this property to predict and prefetch future accesses.
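Here is one simple way to exploit a repeating but variable delta pattern. It is an illustration under stated assumptions, not any specific published prefetcher. It takes the last two address deltas, searches the history for the most recent earlier occurrence of that pair, and predicts that the delta which followed it will recur. `predict_next_address` is a hypothetical name.

```c
#include <stddef.h>

/* Given the address history addrs[0..n-1], predicts the next address.
 * Delta j is addrs[j + 1] - addrs[j]. Finds the most recent earlier pair of
 * consecutive deltas equal to the last two deltas and reuses the delta that
 * followed that pair. Returns 1 and stores the prediction in *next, or
 * returns 0 if there is too little history or no match. */
int predict_next_address(const long *addrs, size_t n, long *next)
{
    if (n < 4)
        return 0; /* need at least three deltas */

    size_t nd = n - 1;                     /* number of deltas */
    long d1 = addrs[n - 2] - addrs[n - 3]; /* second-to-last delta */
    long d2 = addrs[n - 1] - addrs[n - 2]; /* last delta */

    /* Candidate pairs (delta j, delta j + 1) for j = nd - 3 down to 0, so
     * that delta j + 2 exists and can serve as the prediction. */
    for (size_t j = nd - 2; j-- > 0;) {
        long a = addrs[j + 1] - addrs[j];
        long b = addrs[j + 2] - addrs[j + 1];
        if (a == d1 && b == d2) {
            *next = addrs[n - 1] + (addrs[j + 3] - addrs[j + 2]);
            return 1;
        }
    }
    return 0;
}
```

Consider the history 0, 1, 3, 6, 7, 9, 12, whose deltas are 1, 2, 3, 1, 2, 3. The last pair (2, 3) also appears earlier, followed by a delta of 1, so the function predicts 13.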

Consider a for loop where each iteration takes 3 cycles to execute and the prefetch operation takes 12 cycles. For the prefetched data to be useful, the system must start the prefetch $12/3 = 4$ iterations prior to its usage to maintain timeliness.
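The same arithmetic generalizes to other prefetch distances. This sketch assumes a simplified model not stated in the text: the prefetch latency is fixed and every iteration takes the same number of cycles. A prefetch issued `distance` iterations ahead then has `distance * cycles_per_iter` cycles to complete, and any shortfall shows up as a stall. `stall_cycles` is a hypothetical helper.

```c
/* Cycles the loop still waits for prefetched data when the prefetch is
 * issued `distance` iterations before the data is used (simplified model:
 * fixed prefetch latency, constant cycles per iteration). */
unsigned stall_cycles(unsigned prefetch_latency, unsigned cycles_per_iter,
                      unsigned distance)
{
    unsigned head_start = distance * cycles_per_iter; /* cycles available */
    return head_start >= prefetch_latency ? 0 : prefetch_latency - head_start;
}
```

With the text's numbers (a 12-cycle prefetch and 3-cycle iterations), a distance of 4 gives no stall, while a distance of 2 still leaves 6 cycles of stall.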

Hardware prefetching also has less CPU overhead than software prefetching. However, software prefetching can mitigate certain constraints of hardware prefetching, leading to improvements in performance.

Compiler-directed prefetching is widely used within loops with a large number of iterations. In this technique, the compiler predicts future cache misses and inserts prefetch instructions based on the miss penalty and the execution time of the instructions.

Data prefetching fetches data before it is needed. Because data access patterns show less regularity than instruction patterns, accurate data prefetching is generally more challenging than instruction prefetching.

Accuracy is the fraction of total prefetches that were useful: the ratio of the number of prefetched memory addresses that were actually referenced by the program to the total number of prefetches issued.

These prefetches are non-blocking memory operations: the processor does not wait for them to complete, and they do not interfere with the program's actual memory accesses. They do not change the architectural state of the processor or cause page faults.

Prefetching data and then accessing it from caches is usually much faster than accessing it directly from main memory. Prefetching can be done with non-blocking cache control instructions.

$$\text{Prefetch accuracy} = \frac{\text{Cache misses eliminated by prefetching}}{(\text{Useless cache prefetches}) + (\text{Cache misses eliminated by prefetching})}$$

Qualitatively, timeliness is how early a block is prefetched relative to when it is actually referenced. A prefetch must be issued early enough for the block to arrive before the program needs it.

Software-based prefetching is typically accomplished by having the compiler analyze the code and insert additional prefetch instructions into the program during compilation.

$$\text{Total cache misses} = (\text{Cache misses eliminated by prefetching}) + (\text{Cache misses not eliminated by prefetching})$$

The ideal depth of the stream buffer is determined by experimentation with various benchmarks and depends on the rest of the microarchitecture involved.

Instruction prefetching fetches instructions before they need to be executed. The first mainstream microprocessors to use some form of instruction prefetch were the Intel 8086 (six bytes) and the Motorola 68000 (four bytes).

For instance, if one iteration of the loop takes 7 cycles to execute and the cache miss penalty is 49 cycles, then the prefetch stride should be $k = 49/7 = 7$.
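The stride computation can be written as a small helper. The rounding-up step is an assumption added for this sketch, since the text's 49/7 divides evenly. When the miss penalty is not a multiple of the iteration time, rounding up errs on the side of prefetching early rather than late. `prefetch_stride` is a hypothetical name.

```c
#include <assert.h>

/* Returns the prefetch stride k: how many loop iterations ahead to prefetch
 * so that one cache miss penalty is covered, rounded up (an assumption). */
unsigned prefetch_stride(unsigned miss_penalty_cycles,
                         unsigned cycles_per_iteration)
{
    assert(cycles_per_iteration > 0);
    return (miss_penalty_cycles + cycles_per_iteration - 1) / cycles_per_iteration;
}
```

`prefetch_stride(49, 7)` returns 7, as in the example, and `prefetch_stride(50, 7)` returns 8.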

This type of prefetching monitors the delta between the addresses of the memory accesses and looks for patterns within it.

Sequential prefetching is mainly used when contiguous locations are to be prefetched, for example when prefetching instructions.

Stream buffers are one of the most common hardware-based prefetching techniques in use. This technique was originally proposed by Norman Jouppi in 1990, and many variations of it have been developed since.

$$\text{Coverage} = \frac{\text{Cache misses eliminated by prefetching}}{\text{Total cache misses}}$$
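Coverage and prefetch accuracy are both ratios of event counts, so they can be computed from three counters. This sketch assumes the counters have already been collected, for example by a simulator. The struct and function names are hypothetical, and returning 0 when a denominator is zero is an arbitrary choice.

```c
/* Event counts gathered over a run (hypothetical field names). */
struct prefetch_stats {
    unsigned long misses_eliminated;     /* misses removed by useful prefetches */
    unsigned long misses_not_eliminated; /* misses that still occurred */
    unsigned long useless_prefetches;    /* prefetched blocks never referenced */
};

/* Coverage = eliminated / total misses,
 * where total misses = eliminated + not eliminated. */
double prefetch_coverage(const struct prefetch_stats *s)
{
    unsigned long total = s->misses_eliminated + s->misses_not_eliminated;
    return total == 0 ? 0.0 : (double)s->misses_eliminated / (double)total;
}

/* Accuracy = eliminated / (useless prefetches + eliminated). */
double prefetch_accuracy(const struct prefetch_stats *s)
{
    unsigned long issued = s->useless_prefetches + s->misses_eliminated;
    return issued == 0 ? 0.0 : (double)s->misses_eliminated / (double)issued;
}
```

The two metrics can diverge. A prefetcher that issues many useless prefetches can keep a high coverage while its accuracy drops.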

In software prefetching, the prefetch stride $k$ (often called the prefetch distance) depends on two factors: the cache miss penalty and the time it takes to execute a single iteration of the for loop.

The source for the prefetch operation is usually main memory.

One main advantage of software prefetching is that it reduces the number of compulsory cache misses.

Stream buffers were developed based on the concept of the "one block lookahead" (OBL) scheme proposed by Alan Jay Smith.

The basic idea is that, on a cache miss, the $k$ blocks following the missed address are fetched into a separate buffer of depth $k$.

Because of their design, cache memories are typically much faster to access than main memory.

A simple loop example shows how a prefetch instruction can be added to code to improve cache performance.

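Coverage is the fraction of total misses that are eliminated because of prefetching. Here, the total number of cache misses is the sum of the misses eliminated by prefetching and the misses not eliminated by prefetching.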

In recent years, essentially all high-performance processors have used prefetching techniques.

A typical stream buffer setup as originally proposed by Norman Jouppi in 1990

In this pattern, consecutive memory accesses are made to blocks that are $s$ addresses apart.

Cache prefetching can be accomplished either by hardware or by software.

For example, if $s$ is 4 and the current access is to address A, the address to be prefetched would be A+4.

Cache prefetching can fetch either data or instructions into the cache.

In this case, the prefetcher calculates the stride $s$ and uses it to compute the memory address for prefetching.
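A regular-stride detector can be sketched with a single table entry. This is a simplification under stated assumptions. Many hardware designs keep one such entry per load instruction, and the rule of prefetching only after the same stride is seen twice in a row is a confirmation heuristic chosen for this sketch. The names are hypothetical.

```c
#include <stdbool.h>

/* One stride-tracking entry for a single access stream (hypothetical). */
typedef struct {
    long last_addr;   /* most recent address seen */
    long stride;      /* most recent stride s */
    bool have_last;   /* last_addr is valid */
    bool have_stride; /* stride is valid */
} stride_detector;

/* Observes an access to addr. Returns true and sets *prefetch_addr to
 * addr + s when the same stride s was seen on the previous access too. */
bool sd_access(stride_detector *sd, long addr, long *prefetch_addr)
{
    bool confirmed = false;
    if (sd->have_last) {
        long s = addr - sd->last_addr;
        confirmed = sd->have_stride && s == sd->stride;
        sd->stride = s;
        sd->have_stride = true;
    }
    sd->last_addr = addr;
    sd->have_last = true;
    if (confirmed)
        *prefetch_addr = addr + sd->stride;
    return confirmed;
}
```

For the accesses 100, 104 and 108, the third access confirms $s = 4$ and requests a prefetch of 112, mirroring the A+4 example.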

Computer processing technique to boost memory performance

There are three main metrics for judging cache prefetching: coverage, accuracy and timeliness.

While software prefetching requires programmer or compiler intervention, hardware prefetching requires special hardware mechanisms.

Comparison of hardware and software prefetching

Hardware vs. software cache prefetching

Data vs. instruction cache prefetching

Consider a for loop that iterates over the 1024 elements of an array array1 and doubles each element in place (array1[i] = 2 * array1[i]).

Methods of software prefetching

Methods of hardware prefetching

Irregular temporal prefetching

Compiler-directed prefetching

Metrics of cache prefetching

Collaborative prefetching

Irregular spatial strides

Strided prefetching

Cache prefetching

Regular strides

Stream buffers

Timeliness

Accuracy

Coverage
