380:

clustering of the masses. For example, assuming that all the springs and the masses are identical in the 2-dimensional spring system pictured, one would intuitively expect that the loosest connected masses on the right-hand side of the system would move with the largest amplitude and in the opposite direction to the rest of the masses when the system is shaken — and this expectation will be confirmed by analyzing components of the eigenvectors of the graph

Laplacian corresponding to the smallest eigenvalues, i.e., the smallest

218:. There are many different ways to define a Laplacian which have different mathematical interpretations, and so the clustering will also have different interpretations. The eigenvectors that are relevant are the ones that correspond to several smallest eigenvalues of the Laplacian except for the smallest eigenvalue which will have a value of 0. For computational efficiency, these eigenvectors are often computed as the eigenvectors corresponding to the largest several eigenvalues of a function of the Laplacian.

229:

28:

20:

1845:, which estimate or sample a neighborhood of a given data point for nearest neighbors, and compute non-zero entries of the adjacency matrix by comparing only pairs of the neighbors. The number of the selected nearest neighbors thus determines the number of non-zero entries, and is often fixed so that the memory footprint of the

2546:

Ideas and network measures related to spectral clustering also play an important role in a number of applications apparently different from clustering problems. For instance, networks with stronger spectral partitions take longer to converge in opinion-updating models used in sociology and economics.

379:

The masses that are tightly connected by the springs in the mass-spring system evidently move together from the equilibrium position in low-frequency vibration modes, so that the components of the eigenvectors corresponding to the smallest eigenvalues of the graph

Laplacian can be used for meaningful

394:

The goal of normalization is making the diagonal entries of the

Laplacian matrix to be all unit, also scaling off-diagonal entries correspondingly. In a weighted graph, a vertex may have a large degree because of a small number of connected edges but with large weights just as well as due to a large

1744:

Moreover, a normalized

Laplacian has exactly the same eigenvectors as the normalized adjacency matrix, but with the order of the eigenvalues reversed. Thus, instead of computing the eigenvectors corresponding to the smallest eigenvalues of the normalized Laplacian, one can equivalently compute the

1700:

The graph

Laplacian can be and commonly is constructed from the adjacency matrix. The construction can be performed matrix-free, i.e., without explicitly forming the matrix of the graph Laplacian and no AO. It can also be performed in-place of the adjacency matrix without increasing the memory

1248:

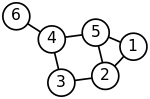

represents, one cluster data points identified with mutually strongly connected masses would move together in one direction, while in the complement cluster data points identified with remaining masses would move together in the opposite direction. The algorithm can be used for

2485:

The ideas behind spectral clustering may not be immediately obvious. It may be useful to highlight relationships with other methods. In particular, it can be described in the context of kernel clustering methods, which reveals several similarities with other approaches.

236:

Spectral clustering is well known to relate to partitioning of a mass-spring system, where each mass is associated with a data point and each spring stiffness corresponds to a weight of an edge describing a similarity of the two related data points, as in the

1225:, thus bi-partitioning the graph and labeling the data points with two labels. This sign-based approach follows the intuitive explanation of spectral clustering via the mass-spring model — in the low frequency vibration mode that the

2530:

of each cluster (in the clustering) was at least α and the weight of the inter-cluster edges was at most ε fraction of the total weight of all the edges in the graph. They also look at two approximation algorithms in the same paper.

1833:

entries of the matrix. Nystrom method can be used to approximate the similarity matrix, but the approximate matrix is not elementwise positive, i.e. cannot be interpreted as a distance-based similarity.

241:. Specifically, the classical reference explains that the eigenvalue problem describing transversal vibration modes of a mass-spring system is exactly the same as the eigenvalue problem for the graph

578:

757:

367:

2526:

Ravi Kannan, Santosh

Vempala and Adrian Vetta proposed a bicriteria measure to define the quality of a given clustering. They said that a clustering was an (α, ε)-clustering if the

1692:

The need to construct the graph

Laplacian is common for all distance- or correlation-based clustering methods. Computing the eigenvectors is specific to spectral clustering only.

677:

63:

in fewer dimensions. The similarity matrix is provided as an input and consists of a quantitative assessment of the relative similarity of each pair of points in the dataset.

1476:

has not already been explicitly constructed, the efficiency of spectral clustering may be improved if the solution to the corresponding eigenvalue problem is performed in a

460:

923:

136:

830:

2156:

2120:

2807:

Acer, Seher; Boman, Erik G.; Glusa, Christian A.; Rajamanickam, Sivasankaran (2021). "Sphynx: A parallel multi-GPU graph partitioner for distributed-memory systems".

2350:

2044:

1280:

278:

1831:

1804:

1777:

1223:

1196:

2408:

2379:

2284:

1970:

1941:

1912:

2208:

2182:

1102:

1647:

1149:

2428:

2324:

2304:

2255:

2235:

2084:

2064:

2018:

1998:

1883:

1863:

1739:

1719:

1687:

1667:

1624:

1604:

1584:

1564:

1540:

1474:

1444:

1424:

1404:

1381:

1361:

1341:

1318:

1246:

1169:

1122:

1073:

1053:

1033:

1013:

993:

973:

953:

873:

853:

783:

601:

483:

302:

216:

176:

156:

100:

2872:. Clustering Large Data Sets; Third IEEE International Conference on Data Mining (ICDM 2003) Melbourne, Florida: IEEE Computer Society. pp. 59–62.

2086:

graph

Laplacian matrix by a vector, which varies greatly whether the graph Laplacian matrix is dense or sparse. For the dense case the cost thus is

400:

3144:

Donath, William; Hoffman, Alan (1972). "Algorithms for partitioning of graphs and computer logic based on eigenvectors of connections matrices".

1566:. No matter the algorithm of the spectral clustering, the two main costly items are the construction of the graph Laplacian and determining its

2502:-means problem shares the objective function with the spectral clustering problem, which can be optimized directly by multi-level methods.

1745:

eigenvectors corresponding to the largest eigenvalues of the normalized adjacency matrix, without even talking about the

Laplacian matrix.

2438:

with distributed memory, resulting not only in high quality clusters, which spectral clustering is famous for, but also top performance.

2886:

Multiscale

Spectral Image Segmentation Multiscale preconditioning for computing eigenvalues of graph Laplacians in image segmentation

2778:

Wang, S.; Gittens, A.; Mahoney, M.W. (2019). "Scalable Kernel K-Means Clustering with Nystrom Approximation: Relative-Error Bounds".

3021:

Dhillon, Inderjit; Guan, Yuqiang; Kulis, Brian (November 2007). "Weighted Graph Cuts without Eigenvectors: A Multilevel Approach".

67:

1701:

footprint. Either way, the costs of constructing the graph Laplacian is essentially determined by the costs of constructing the

3286:

Golub, Benjamin; Jackson, Matthew O. (2012-07-26). "How Homophily Affects the Speed of Learning and Best-Response Dynamics".

2925:

3326:

1514:, and dimension reduction techniques such as locally-linear embedding can be used to reduce errors from noise or outliers.

499:

2561:

381:

2996:

2511:

2046:) matrix of selected eigenvectors of the graph Laplacian is normally proportional to the cost of multiplication of the

1511:

1496:

3207:

Daniel A. Spielman and Shang-Hua Teng (1996). "Spectral Partitioning Works: Planar graphs and finite element meshes".

691:

388:

490:

3331:

413:

2852:

313:

2687:

Arias-Castro, E.; Chen, G.; Lerman, G. (2011), "Spectral clustering based on local linear approximations.",

2954:

2591:

2466:

3192:

Guattery, Stephen; Miller, Gary L. (1995). "On the performance of spectral graph partitioning methods".

2514:— the optimal clusters with no edges cut — spectral clustering is also related to a spectral version of

617:

3094:

Kannan, Ravi; Vempala, Santosh; Vetta, Adrian (2004). "On Clusterings : Good, Bad and Spectral".

1503:

method. Spectral clustering has been successfully applied on large graphs by first identifying their

3035:

3008:

Proceedings of the tenth ACM SIGKDD international conference on Knowledge discovery and data mining

2474:

419:

56:

878:

105:

1842:

1250:

36:

3030:

2446:

Free software implementing spectral clustering is available in large open source projects like

796:

762:

and can also be used for spectral clustering. A mathematically equivalent algorithm takes the

2125:

2089:

2914:. Workshop on Algorithms for Modern Massive Datasets Stanford University and Yahoo! Research.

2571:

2329:

2023:

1259:

251:

2969:

2556:

1809:

1782:

1755:

1201:

1174:

44:

3226:

2384:

2355:

2260:

1946:

1917:

1888:

8:

2623:

2527:

2187:

2161:

1586:

eigenvectors for the spectral embedding. The last step — determining the labels from the

1504:

1477:

1081:

2973:

1629:

1131:

3111:

3056:

2834:

2816:

2787:

2760:

2714:

2696:

2664:

2638:"Data reduction for spectral clustering to analyze high throughput flow cytometry data"

2637:

2610:

2413:

2309:

2289:

2240:

2220:

2069:

2049:

2003:

1983:

1868:

1848:

1724:

1704:

1672:

1652:

1609:

1589:

1569:

1549:

1525:

1459:

1449:

1429:

1409:

1389:

1366:

1346:

1326:

1303:

1231:

1154:

1107:

1058:

1038:

1018:

998:

978:

958:

938:

858:

838:

768:

586:

468:

287:

201:

161:

141:

85:

2869:

Modern preconditioned eigensolvers for spectral image segmentation and graph bisection

1546:

and compute time, or number of arithmetic operations (AO) performed, as a function of

3303:

3268:

3115:

3048:

2909:

2884:

2867:

2838:

2752:

2669:

1481:

1480:(without explicitly manipulating or even computing the similarity matrix), as in the

79:

52:

3129:

Cheeger, Jeff (1969). "A lower bound for the smallest eigenvalue of the Laplacian".

2718:

3295:

3260:

3172:

3103:

3060:

3040:

2977:

2890:

2826:

2764:

2744:

2706:

2659:

2649:

2566:

2540:

1749:

1543:

1488:

928:

790:

612:

373:

242:

222:

195:

179:

60:

2939:

2286:

non-zero entries, the cost of the matrix-vector product and thus of computing the

2981:

2470:

2458:

1492:

305:

2894:

2830:

995:

of selected eigenvectors, mapping — called spectral embedding — of the original

2748:

2211:

1226:

1125:

409:

3264:

2184:

and is clearly misleading, since, e.g., in a hierarchical spectral clustering

3320:

3307:

3272:

3177:

3160:

2654:

1838:

238:

3044:

2732:

3052:

2756:

2673:

2626:", Neural Information Processing Systems 13 (NIPS 2000), 2001, pp. 873–879.

2447:

1499:

is a key technology accelerating the convergence, e.g., in the matrix-free

3107:

3077:

The Relationship of DBSCAN to Matrix Factorization and Spectral Clustering

3075:

3299:

1972:

non-zero entries, and the calculations can be trivially run in parallel.

1626:

matrix of eigenvectors — is typically the least expensive requiring only

1487:

For large-sized graphs, the second eigenvalue of the (normalized) graph

1128:, corresponds to the second smallest eigenvalue. Using the components of

763:

604:

463:

191:

48:

3131:

Proceedings of the Princeton Conference in Honor of Professor S. Bochner

2592:"CS267: Notes for Lecture 23, April 9, 1999, Graph Partitioning, Part 2"

228:

138:

represents a measure of the similarity between data points with indices

2543:

method was popularized by Shi & Malik and Ng, Jordan, & Weiss.

786:

608:

486:

2953:

Filippone, M.; Camastra, F.; Masulli, F.; Rovetta, S. (January 2008).

2710:

66:

In application to image segmentation, spectral clustering is known as

3206:

2455:

3240:

2821:

2792:

178:. The general approach to spectral clustering is to use a standard

2701:

2539:

Spectral clustering has a long history. Spectral clustering as a

3249:"Persuasion Bias, Social Influence, and Unidimensional Opinions"

3248:

2866:

Knyazev, Andrew V. (2003). Boley; Dhillon; Ghosh; Kogan (eds.).

2952:

2515:

2462:

2451:

2431:

1500:

1495:, leading to slow convergence of iterative eigenvalue solvers.

1283:

3023:

IEEE Transactions on Pattern Analysis and Machine Intelligence

2737:

IEEE Transactions on Pattern Analysis and Machine Intelligence

2430:

data points. Moreover, matrix-free eigenvalue solvers such as

2410:— both are the optimal low bounds of complexity of clustering

855:

of the symmetrically normalized Laplacian and the eigenvector

2911:

Multiscale Spectral Graph Partitioning and Image Segmentation

2636:

Zare, Habil; Shooshtari, P.; Gupta, A.; Brinkman, R. (2010).

2480:

1448:

Cluster the graph nodes based on these features (e.g., using

875:

of the left normalized Laplacian are related by the identity

1752:, e.g., using the RBF kernel, make it dense, thus requiring

1253:

by repeatedly partitioning the subsets in the same fashion.

3279:

2635:

2435:

1943:

sequential arithmetic operations are needed to compute the

27:

2806:

927:

398:

A popular normalized spectral clustering technique is the

19:

3074:

Schubert, Erich; Hess, Sibylle; Morik, Katharina (2018).

1055:. Now the analysis is reduced to clustering vectors with

3209:

Annual IEEE Symposium on Foundations of Computer Science

2955:"A survey of kernel and spectral methods for clustering"

2926:"Clustering - RDD-based API - Spark 3.2.0 Documentation"

1837:

Algorithms to construct the graph adjacency matrix as a

3247:

DeMarzo, P. M.; Vayanos, D.; Zwiebel, J. (2003-08-01).

3225:

Ng, Andrew Y.; Jordan, Michael I.; Weiss, Yair (2002).

2889:. Fast Manifold Learning Workshop, WM Williamburg, VA.

2686:

2613:, IEEE Transactions on PAMI, Vol. 22, No. 8, Aug 2000.

3246:

2876:

2416:

2387:

2358:

2332:

2312:

2292:

2263:

2243:

2223:

2190:

2164:

2128:

2092:

2072:

2052:

2026:

2006:

1986:

1949:

1920:

1891:

1871:

1851:

1812:

1785:

1758:

1727:

1707:

1675:

1655:

1632:

1612:

1592:

1572:

1552:

1528:

1462:

1432:

1412:

1392:

1369:

1349:

1329:

1306:

1282:, any vector clustering technique can be used, e.g.,

1262:

1234:

1204:

1177:

1157:

1134:

1110:

1084:

1061:

1041:

1021:

1001:

981:

961:

941:

881:

861:

841:

799:

771:

694:

620:

589:

573:{\displaystyle L^{\text{norm}}:=I-D^{-1/2}AD^{-1/2}.}

502:

471:

422:

316:

290:

254:

204:

164:

144:

108:

88:

2518:

clustering that finds density-connected components.

1343:

eigenvectors (the eigenvectors corresponding to the

3227:"On spectral clustering: analysis and an algorithm"

2946:

2434:can efficiently run in parallel, e.g., on multiple

3093:

2777:

2534:

2521:

2422:

2402:

2373:

2344:

2318:

2298:

2278:

2249:

2229:

2202:

2176:

2150:

2114:

2078:

2058:

2038:

2012:

1992:

1964:

1935:

1906:

1877:

1857:

1825:

1798:

1771:

1733:

1713:

1681:

1661:

1641:

1618:

1598:

1578:

1558:

1534:

1468:

1438:

1418:

1398:

1375:

1355:

1335:

1312:

1274:

1240:

1217:

1190:

1163:

1143:

1116:

1096:

1067:

1047:

1027:

1007:

987:

967:

947:

917:

867:

847:

824:

777:

751:

671:

595:

572:

477:

454:

361:

296:

272:

210:

170:

150:

130:

94:

3234:Advances in Neural Information Processing Systems

3073:

2988:

2122:. The very commonly cited in the literature cost

387:

3318:

3194:Annual ACM-SIAM Symposium on Discrete Algorithms

3020:

3001:-means: spectral clustering and normalized cuts"

2994:

1075:components, which may be done in various ways.

752:{\displaystyle L^{\text{rw}}:=D^{-1}L=I-D^{-1}A}

3294:(3). Oxford University Press (OUP): 1287–1338.

1695:

3191:

187:

3224:

3143:

395:number of connected edges with unit weights.

3285:

2940:"Kernlab: Kernel-Based Machine Learning Lab"

2733:"Spectral grouping using the Nystrom method"

2489:

2469:for pseudo-eigenvector clustering using the

1151:one can place all points whose component in

1035:-dimensional vector space using the rows of

78:Given an enumerated set of data points, the

23:An example connected graph, with 6 vertices.

2995:Dhillon, I.S.; Guan, Y.; Kulis, B. (2004).

1426:-th row defines the features of graph node

2481:Relationship with other clustering methods

1522:Denoting the number of the data points by

1510:Spectral clustering is closely related to

683:random walk (or left) normalized Laplacian

3176:

3034:

2820:

2791:

2700:

2663:

2653:

2505:

1975:

1386:Consider the matrix formed by the first

2611:"Normalized Cuts and Image Segmentation"

227:

68:segmentation-based object categorization

26:

18:

3259:(3). Oxford University Press: 909–968.

3158:

3128:

2907:

2882:

2865:

2730:

362:{\displaystyle D_{ii}=\sum _{j}A_{ij},}

3319:

2800:

2381:, with the memory footprint also only

31:Partitioning into two connected graphs

3220:

3218:

2624:Learning Segmentation by Random Walks

485:corresponding to the second-smallest

416:. It partitions points into two sets

182:method (there are many such methods,

82:may be defined as a symmetric matrix

2780:Journal of Machine Learning Research

2605:

2603:

2601:

607:corresponding to the second-largest

2562:Kernel principal component analysis

2510:In the trivial case of determining

2352:matrix of selected eigenvectors is

1507:, and then clustering communities.

221:

13:

3288:The Quarterly Journal of Economics

3253:The Quarterly Journal of Economics

3215:

3161:"Algebraic connectivity of graphs"

2589:

1542:, it is important to estimate the

1512:nonlinear dimensionality reduction

1104:, the selected single eigenvector

672:{\displaystyle D^{-1/2}AD^{-1/2}.}

14:

3343:

3165:Czechoslovak Mathematical Journal

3146:IBM Technical Disclosure Bulletin

2598:

1748:Naive constructions of the graph

611:of the symmetrically normalized

2689:Electronic Journal of Statistics

2622:Marina Meilă & Jianbo Shi, "

1689:vector of the labels in memory.

791:random walk normalized adjacency

3200:

3185:

3152:

3137:

3122:

3087:

3067:

3014:

2932:

2918:

2901:

2859:

2609:Jianbo Shi and Jitendra Malik,

2535:History and related literatures

2522:Measures to compare clusterings

1885:graph adjacency matrix is only

2845:

2771:

2724:

2680:

2629:

2616:

2583:

2397:

2391:

2368:

2362:

2273:

2267:

2145:

2132:

2109:

2096:

1959:

1953:

1930:

1924:

1901:

1895:

1015:data points is performed to a

491:symmetric normalized Laplacian

449:

423:

389:Laplacian matrix normalization

232:A 2-dimensional spring system.

73:

1:

2577:

1320:(or the normalized Laplacian)

1289:

785:corresponding to the largest

455:{\displaystyle (B_{1},B_{2})}

408:introduced by Jianbo Shi and

2982:10.1016/j.patcog.2007.05.018

2257:graph Laplacian matrix with

1806:AO to determine each of the

1696:Constructing graph Laplacian

918:{\displaystyle D^{-1/2}v=u.}

131:{\displaystyle A_{ij}\geq 0}

7:

3327:Cluster analysis algorithms

2908:Knyazev, Andrew V. (2006).

2895:10.13140/RG.2.2.35280.02565

2883:Knyazev, Andrew V. (2006).

2831:10.1016/j.parco.2021.102769

2550:

2441:

43:techniques make use of the

10:

3348:

3159:Fiedler, Miroslav (1973).

2749:10.1109/TPAMI.2004.1262185

2512:connected graph components

2217:In the sparse case of the

1980:The cost of computing the

3265:10.1162/00335530360698469

3083:. LWDA. pp. 330–334.

1841:are typically based on a

1456:If the similarity matrix

1300:Calculate the Laplacian

825:{\displaystyle P=D^{-1}A}

401:normalized cuts algorithm

3178:10.21136/CMJ.1973.101168

2655:10.1186/1471-2105-11-403

2151:{\displaystyle O(n^{3})}

2115:{\displaystyle O(n^{2})}

1741:graph adjacency matrix.

1517:

1363:smallest eigenvalues of

57:dimensionality reduction

3045:10.1109/tpami.2007.1115

1843:nearest neighbor search

1649:AO and creating just a

1251:hierarchical clustering

1171:is positive in the set

55:of the data to perform

37:multivariate statistics

3332:Algebraic graph theory

2506:Relationship to DBSCAN

2424:

2404:

2375:

2346:

2345:{\displaystyle k\ll n}

2320:

2300:

2280:

2251:

2231:

2204:

2178:

2152:

2116:

2080:

2060:

2040:

2039:{\displaystyle k\ll n}

2014:

1994:

1976:Computing eigenvectors

1966:

1937:

1908:

1879:

1859:

1827:

1800:

1773:

1735:

1715:

1683:

1663:

1643:

1620:

1600:

1580:

1560:

1536:

1470:

1440:

1420:

1400:

1377:

1357:

1337:

1314:

1276:

1275:{\displaystyle k>1}

1242:

1219:

1192:

1165:

1145:

1118:

1098:

1069:

1049:

1029:

1009:

989:

969:

949:

931:via Spectral Embedding

919:

869:

849:

826:

779:

753:

673:

597:

574:

479:

456:

363:

298:

274:

273:{\displaystyle L:=D-A}

233:

212:

172:

152:

132:

96:

32:

24:

3108:10.1145/990308.990313

2572:Spectral graph theory

2425:

2405:

2376:

2347:

2321:

2301:

2281:

2252:

2232:

2210:as determined by the

2205:

2179:

2153:

2117:

2081:

2061:

2041:

2015:

1995:

1967:

1938:

1909:

1880:

1860:

1828:

1826:{\displaystyle n^{2}}

1801:

1799:{\displaystyle n^{2}}

1774:

1772:{\displaystyle n^{2}}

1736:

1716:

1684:

1664:

1644:

1621:

1601:

1581:

1561:

1537:

1471:

1441:

1421:

1401:

1378:

1358:

1338:

1315:

1277:

1243:

1220:

1218:{\displaystyle B_{-}}

1193:

1191:{\displaystyle B_{+}}

1166:

1146:

1119:

1099:

1078:In the simplest case

1070:

1050:

1030:

1010:

990:

970:

950:

920:

870:

850:

827:

780:

754:

674:

598:

575:

480:

457:

382:vibration frequencies

364:

299:

275:

231:

213:

173:

153:

133:

97:

30:

22:

2557:Affinity propagation

2498:The weighted kernel

2414:

2403:{\displaystyle O(n)}

2385:

2374:{\displaystyle O(n)}

2356:

2330:

2310:

2290:

2279:{\displaystyle O(n)}

2261:

2241:

2221:

2188:

2162:

2158:comes from choosing

2126:

2090:

2070:

2050:

2024:

2004:

1984:

1965:{\displaystyle O(n)}

1947:

1936:{\displaystyle O(n)}

1918:

1907:{\displaystyle O(n)}

1889:

1869:

1849:

1810:

1783:

1756:

1725:

1705:

1673:

1653:

1630:

1610:

1590:

1570:

1550:

1526:

1460:

1430:

1410:

1390:

1367:

1347:

1327:

1323:Calculate the first

1304:

1260:

1256:In the general case

1232:

1202:

1175:

1155:

1132:

1108:

1082:

1059:

1039:

1019:

999:

979:

959:

939:

879:

859:

839:

797:

769:

692:

618:

587:

500:

469:

420:

412:, commonly used for

314:

288:

252:

202:

186:-means is discussed

162:

142:

106:

86:

2974:2008PatRe..41..176F

2962:Pattern Recognition

2942:. 12 November 2019.

2731:Fowlkes, C (2004).

2203:{\displaystyle k=1}

2177:{\displaystyle k=n}

1505:community structure

1478:matrix-free fashion

1406:eigenvectors; the

1097:{\displaystyle k=1}

406:Shi–Malik algorithm

41:spectral clustering

3300:10.1093/qje/qjs021

3096:Journal of the ACM

2809:Parallel Computing

2642:BMC Bioinformatics

2490:Relationship with

2420:

2400:

2371:

2342:

2316:

2296:

2276:

2247:

2227:

2200:

2174:

2148:

2112:

2076:

2056:

2036:

2010:

1990:

1962:

1933:

1904:

1875:

1855:

1823:

1796:

1769:

1731:

1711:

1679:

1659:

1642:{\displaystyle kn}

1639:

1616:

1596:

1576:

1556:

1532:

1466:

1450:k-means clustering

1436:

1416:

1396:

1373:

1353:

1333:

1310:

1272:

1238:

1215:

1188:

1161:

1144:{\displaystyle v,}

1141:

1114:

1094:

1065:

1045:

1025:

1005:

985:

965:

945:

915:

865:

845:

822:

775:

749:

669:

593:

570:

475:

452:

414:image segmentation

359:

342:

294:

270:

234:

208:

168:

148:

128:

92:

33:

25:

16:Clustering methods

3029:(11): 1944–1957.

3010:. pp. 551–6.

2853:"2.3. Clustering"

2711:10.1214/11-ejs651

2423:{\displaystyle n}

2319:{\displaystyle k}

2299:{\displaystyle n}

2250:{\displaystyle n}

2230:{\displaystyle n}

2079:{\displaystyle n}

2059:{\displaystyle n}

2013:{\displaystyle k}

1993:{\displaystyle n}

1878:{\displaystyle n}

1858:{\displaystyle n}

1734:{\displaystyle n}

1714:{\displaystyle n}

1682:{\displaystyle 1}

1662:{\displaystyle n}

1619:{\displaystyle k}

1599:{\displaystyle n}

1579:{\displaystyle k}

1559:{\displaystyle n}

1535:{\displaystyle n}

1482:Lanczos algorithm

1469:{\displaystyle A}

1439:{\displaystyle l}

1419:{\displaystyle l}

1399:{\displaystyle k}

1376:{\displaystyle L}

1356:{\displaystyle k}

1336:{\displaystyle k}

1313:{\displaystyle L}

1241:{\displaystyle v}

1164:{\displaystyle v}

1117:{\displaystyle v}

1068:{\displaystyle k}

1048:{\displaystyle V}

1028:{\displaystyle k}

1008:{\displaystyle n}

988:{\displaystyle V}

968:{\displaystyle k}

948:{\displaystyle n}

868:{\displaystyle u}

848:{\displaystyle v}

778:{\displaystyle u}

702:

596:{\displaystyle v}

510:

478:{\displaystyle v}

333:

297:{\displaystyle D}

211:{\displaystyle A}

171:{\displaystyle j}

151:{\displaystyle i}

95:{\displaystyle A}

80:similarity matrix

53:similarity matrix

3339:

3312:

3311:

3283:

3277:

3276:

3244:

3238:

3237:

3231:

3222:

3213:

3212:

3204:

3198:

3197:

3189:

3183:

3182:

3180:

3156:

3150:

3149:

3141:

3135:

3134:

3126:

3120:

3119:

3091:

3085:

3084:

3082:

3071:

3065:

3064:

3038:

3018:

3012:

3011:

3005:

2992:

2986:

2985:

2959:

2950:

2944:

2943:

2936:

2930:

2929:

2922:

2916:

2915:

2905:

2899:

2898:

2880:

2874:

2873:

2863:

2857:

2856:

2849:

2843:

2842:

2824:

2804:

2798:

2797:

2795:

2775:

2769:

2768:

2728:

2722:

2721:

2704:

2684:

2678:

2677:

2667:

2657:

2633:

2627:

2620:

2614:

2607:

2596:

2595:

2587:

2567:Cluster analysis

2541:machine learning

2429:

2427:

2426:

2421:

2409:

2407:

2406:

2401:

2380:

2378:

2377:

2372:

2351:

2349:

2348:

2343:

2325:

2323:

2322:

2317:

2305:

2303:

2302:

2297:

2285:

2283:

2282:

2277:

2256:

2254:

2253:

2248:

2236:

2234:

2233:

2228:

2209:

2207:

2206:

2201:

2183:

2181:

2180:

2175:

2157:

2155:

2154:

2149:

2144:

2143:

2121:

2119:

2118:

2113:

2108:

2107:

2085:

2083:

2082:

2077:

2065:

2063:

2062:

2057:

2045:

2043:

2042:

2037:

2019:

2017:

2016:

2011:

1999:

1997:

1996:

1991:

1971:

1969:

1968:

1963:

1942:

1940:

1939:

1934:

1913:

1911:

1910:

1905:

1884:

1882:

1881:

1876:

1864:

1862:

1861:

1856:

1832:

1830:

1829:

1824:

1822:

1821:

1805:

1803:

1802:

1797:

1795:

1794:

1778:

1776:

1775:

1770:

1768:

1767:

1750:adjacency matrix

1740:

1738:

1737:

1732:

1720:

1718:

1717:

1712:

1688:

1686:

1685:

1680:

1668:

1666:

1665:

1660:

1648:

1646:

1645:

1640:

1625:

1623:

1622:

1617:

1605:

1603:

1602:

1597:

1585:

1583:

1582:

1577:

1565:

1563:

1562:

1557:

1544:memory footprint

1541:

1539:

1538:

1533:

1489:Laplacian matrix

1475:

1473:

1472:

1467:

1445:

1443:

1442:

1437:

1425:

1423:

1422:

1417:

1405:

1403:

1402:

1397:

1382:

1380:

1379:

1374:

1362:

1360:

1359:

1354:

1342:

1340:

1339:

1334:

1319:

1317:

1316:

1311:

1281:

1279:

1278:

1273:

1247:

1245:

1244:

1239:

1224:

1222:

1221:

1216:

1214:

1213:

1198:and the rest in

1197:

1195:

1194:

1189:

1187:

1186:

1170:

1168:

1167:

1162:

1150:

1148:

1147:

1142:

1123:

1121:

1120:

1115:

1103:

1101:

1100:

1095:

1074:

1072:

1071:

1066:

1054:

1052:

1051:

1046:

1034:

1032:

1031:

1026:

1014:

1012:

1011:

1006:

994:

992:

991:

986:

974:

972:

971:

966:

954:

952:

951:

946:

929:Cluster analysis

924:

922:

921:

916:

902:

901:

897:

874:

872:

871:

866:

854:

852:

851:

846:

835:The eigenvector

831:

829:

828:

823:

818:

817:

784:

782:

781:

776:

758:

756:

755:

750:

745:

744:

720:

719:

704:

703:

700:

678:

676:

675:

670:

665:

664:

660:

641:

640:

636:

613:adjacency matrix

602:

600:

599:

594:

579:

577:

576:

571:

566:

565:

561:

542:

541:

537:

512:

511:

508:

484:

482:

481:

476:

461:

459:

458:

453:

448:

447:

435:

434:

374:adjacency matrix

368:

366:

365:

360:

355:

354:

341:

329:

328:

303:

301:

300:

295:

279:

277:

276:

271:

243:Laplacian matrix

223:Laplacian matrix

217:

215:

214:

209:

196:Laplacian matrix

177:

175:

174:

169:

157:

155:

154:

149:

137:

135:

134:

129:

121:

120:

101:

99:

98:

93:

3347:

3346:

3342:

3341:

3340:

3338:

3337:

3336:

3317:

3316:

3315:

3284:

3280:

3245:

3241:

3229:

3223:

3216:

3205:

3201:

3190:

3186:

3157:

3153:

3142:

3138:

3127:

3123:

3092:

3088:

3080:

3072:

3068:

3036:10.1.1.131.2635

3019:

3015:

3003:

2993:

2989:

2957:

2951:

2947:

2938:

2937:

2933:

2924:

2923:

2919:

2906:

2902:

2881:

2877:

2864:

2860:

2851:

2850:

2846:

2805:

2801:

2776:

2772:

2729:

2725:

2685:

2681:

2634:

2630:

2621:

2617:

2608:

2599:

2588:

2584:

2580:

2553:

2537:

2524:

2508:

2496:

2483:

2471:power iteration

2459:preconditioning

2444:

2415:

2412:

2411:

2386:

2383:

2382:

2357:

2354:

2353:

2331:

2328:

2327:

2311:

2308:

2307:

2291:

2288:

2287:

2262:

2259:

2258:

2242:

2239:

2238:

2222:

2219:

2218:

2189:

2186:

2185:

2163:

2160:

2159:

2139:

2135:

2127:

2124:

2123:

2103:

2099:

2091:

2088:

2087:

2071:

2068:

2067:

2051:

2048:

2047:

2025:

2022:

2021:

2005:

2002:

2001:

1985:

1982:

1981:

1978:

1948:

1945:

1944:

1919:

1916:

1915:

1890:

1887:

1886:

1870:

1867:

1866:

1850:

1847:

1846:

1817:

1813:

1811:

1808:

1807:

1790:

1786:

1784:

1781:

1780:

1763:

1759:

1757:

1754:

1753:

1726:

1723:

1722:

1706:

1703:

1702:

1698:

1674:

1671:

1670:

1654:

1651:

1650:

1631:

1628:

1627:

1611:

1608:

1607:

1591:

1588:

1587:

1571:

1568:

1567:

1551:

1548:

1547:

1527:

1524:

1523:

1520:

1497:Preconditioning

1493:ill-conditioned

1461:

1458:

1457:

1431:

1428:

1427:

1411:

1408:

1407:

1391:

1388:

1387:

1368:

1365:

1364:

1348:

1345:

1344:

1328:

1325:

1324:

1305:

1302:

1301:

1295:Basic Algorithm

1292:

1261:

1258:

1257:

1233:

1230:

1229:

1209:

1205:

1203:

1200:

1199:

1182:

1178:

1176:

1173:

1172:

1156:

1153:

1152:

1133:

1130:

1129:

1109:

1106:

1105:

1083:

1080:

1079:

1060:

1057:

1056:

1040:

1037:

1036:

1020:

1017:

1016:

1000:

997:

996:

980:

977:

976:

960:

957:

956:

940:

937:

936:

933:

893:

886:

882:

880:

877:

876:

860:

857:

856:

840:

837:

836:

810:

806:

798:

795:

794:

770:

767:

766:

737:

733:

712:

708:

699:

695:

693:

690:

689:

656:

649:

645:

632:

625:

621:

619:

616:

615:

588:

585:

584:

557:

550:

546:

533:

526:

522:

507:

503:

501:

498:

497:

470:

467:

466:

443:

439:

430:

426:

421:

418:

417:

392:

347:

343:

337:

321:

317:

315:

312:

311:

306:diagonal matrix

289:

286:

285:

253:

250:

249:

226:

203:

200:

199:

163:

160:

159:

143:

140:

139:

113:

109:

107:

104:

103:

87:

84:

83:

76:

17:

12:

11:

5:

3345:

3335:

3334:

3329:

3314:

3313:

3278:

3239:

3214:

3199:

3184:

3171:(2): 298–305.

3151:

3136:

3121:

3102:(3): 497–515.

3086:

3066:

3013:

2987:

2968:(1): 176–190.

2945:

2931:

2917:

2900:

2875:

2858:

2844:

2799:

2770:

2723:

2679:

2628:

2615:

2597:

2581:

2579:

2576:

2575:

2574:

2569:

2564:

2559:

2552:

2549:

2536:

2533:

2523:

2520:

2507:

2504:

2495:

2488:

2482:

2479:

2443:

2440:

2419:

2399:

2396:

2393:

2390:

2370:

2367:

2364:

2361:

2341:

2338:

2335:

2315:

2295:

2275:

2272:

2269:

2266:

2246:

2226:

2212:Fiedler vector

2199:

2196:

2193:

2173:

2170:

2167:

2147:

2142:

2138:

2134:

2131:

2111:

2106:

2102:

2098:

2095:

2075:

2055:

2035:

2032:

2029:

2009:

1989:

1977:

1974:

1961:

1958:

1955:

1952:

1932:

1929:

1926:

1923:

1903:

1900:

1897:

1894:

1874:

1854:

1820:

1816:

1793:

1789:

1766:

1762:

1730:

1710:

1697:

1694:

1678:

1658:

1638:

1635:

1615:

1595:

1575:

1555:

1531:

1519:

1516:

1465:

1454:

1453:

1446:

1435:

1415:

1395:

1384:

1372:

1352:

1332:

1321:

1309:

1297:

1296:

1291:

1288:

1271:

1268:

1265:

1237:

1227:Fiedler vector

1212:

1208:

1185:

1181:

1160:

1140:

1137:

1126:Fiedler vector

1113:

1093:

1090:

1087:

1064:

1044:

1024:

1004:

984:

964:

944:

932:

926:

914:

911:

908:

905:

900:

896:

892:

889:

885:

864:

844:

821:

816:

813:

809:

805:

802:

774:

760:

759:

748:

743:

740:

736:

732:

729:

726:

723:

718:

715:

711:

707:

698:

685:is defined as

668:

663:

659:

655:

652:

648:

644:

639:

635:

631:

628:

624:

592:

581:

580:

569:

564:

560:

556:

553:

549:

545:

540:

536:

532:

529:

525:

521:

518:

515:

506:

474:

451:

446:

442:

438:

433:

429:

425:

410:Jitendra Malik

391:

386:

370:

369:

358:

353:

350:

346:

340:

336:

332:

327:

324:

320:

293:

282:

281:

269:

266:

263:

260:

257:

225:

220:

207:

190:) on relevant

167:

147:

127:

124:

119:

116:

112:

91:

75:

72:

15:

9:

6:

4:

3:

2:

3344:

3333:

3330:

3328:

3325:

3324:

3322:

3309:

3305:

3301:

3297:

3293:

3289:

3282:

3274:

3270:

3266:

3262:

3258:

3254:

3250:

3243:

3235:

3228:

3221:

3219:

3210:

3203:

3195:

3188:

3179:

3174:

3170:

3166:

3162:

3155:

3147:

3140:

3132:

3125:

3117:

3113:

3109:

3105:

3101:

3097:

3090:

3079:

3078:

3070:

3062:

3058:

3054:

3050:

3046:

3042:

3037:

3032:

3028:

3024:

3017:

3009:

3002:

3000:

2991:

2983:

2979:

2975:

2971:

2967:

2963:

2956:

2949:

2941:

2935:

2927:

2921:

2913:

2912:

2904:

2896:

2892:

2888:

2887:

2879:

2871:

2870:

2862:

2854:

2848:

2840:

2836:

2832:

2828:

2823:

2818:

2814:

2810:

2803:

2794:

2789:

2785:

2781:

2774:

2766:

2762:

2758:

2754:

2750:

2746:

2743:(2): 214–25.

2742:

2738:

2734:

2727:

2720:

2716:

2712:

2708:

2703:

2698:

2694:

2690:

2683:

2675:

2671:

2666:

2661:

2656:

2651:

2647:

2643:

2639:

2632:

2625:

2619:

2612:

2606:

2604:

2602:

2593:

2586:

2582:

2573:

2570:

2568:

2565:

2563:

2560:

2558:

2555:

2554:

2548:

2544:

2542:

2532:

2529:

2519:

2517:

2513:

2503:

2501:

2493:

2487:

2478:

2476:

2472:

2468:

2464:

2460:

2457:

2453:

2449:

2439:

2437:

2433:

2417:

2394:

2388:

2365:

2359:

2339:

2336:

2333:

2313:

2293:

2270:

2264:

2244:

2224:

2215:

2213:

2197:

2194:

2191:

2171:

2168:

2165:

2140:

2136:

2129:

2104:

2100:

2093:

2073:

2053:

2033:

2030:

2027:

2007:

1987:

1973:

1956:

1950:

1927:

1921:

1898:

1892:

1872:

1852:

1844:

1840:

1839:sparse matrix

1835:

1818:

1814:

1791:

1787:

1764:

1760:

1751:

1746:

1742:

1728:

1708:

1693:

1690:

1676:

1656:

1636:

1633:

1613:

1593:

1573:

1553:

1545:

1529:

1515:

1513:

1508:

1506:

1502:

1498:

1494:

1490:

1485:

1483:

1479:

1463:

1451:

1447:

1433:

1413:

1393:

1385:

1370:

1350:

1330:

1322:

1307:

1299:

1298:

1294:

1293:

1287:

1285:

1269:

1266:

1263:

1254:

1252:

1235:

1228:

1210:

1206:

1183:

1179:

1158:

1138:

1135:

1127:

1124:, called the

1111:

1091:

1088:

1085:

1076:

1062:

1042:

1022:

1002:

982:

962:

942:

930:

925:

912:

909:

906:

903:

898:

894:

890:

887:

883:

862:

842:

833:

819:

814:

811:

807:

803:

800:

792:

788:

772:

765:

746:

741:

738:

734:

730:

727:

724:

721:

716:

713:

709:

705:

696:

688:

687:

686:

684:

679:

666:

661:

657:

653:

650:

646:

642:

637:

633:

629:

626:

622:

614:

610:

606:

590:

567:

562:

558:

554:

551:

547:

543:

538:

534:

530:

527:

523:

519:

516:

513:

504:

496:

495:

494:

492:

488:

472:

465:

462:based on the

444:

440:

436:

431:

427:

415:

411:

407:

403:

402:

396:

390:

385:

383:

377:

375:

372:and A is the

356:

351:

348:

344:

338:

334:

330:

325:

322:

318:

310:

309:

308:

307:

291:

267:

264:

261:

258:

255:

248:

247:

246:

244:

240:

239:spring system

230:

224:

219:

205:

197:

193:

189:

185:

181:

165:

145:

125:

122:

117:

114:

110:

89:

81:

71:

69:

64:

62:

58:

54:

50:

46:

42:

38:

29:

21:

3291:

3287:

3281:

3256:

3252:

3242:

3233:

3208:

3202:

3193:

3187:

3168:

3164:

3154:

3145:

3139:

3130:

3124:

3099:

3095:

3089:

3076:

3069:

3026:

3022:

3016:

3007:

2998:

2990:

2965:

2961:

2948:

2934:

2920:

2910:

2903:

2885:

2878:

2868:

2861:

2847:

2812:

2808:

2802:

2783:

2779:

2773:

2740:

2736:

2726:

2692:

2688:

2682:

2645:

2641:

2631:

2618:

2585:

2545:

2538:

2525:

2509:

2499:

2497:

2491:

2484:

2473:method, and

2448:scikit-learn

2445:

2216:

1979:

1836:

1747:

1743:

1699:

1691:

1521:

1509:

1486:

1455:

1255:

1077:

935:Knowing the

934:

834:

761:

682:

680:

603:is also the

582:

405:

399:

397:

393:

378:

371:

283:

245:defined as

235:

192:eigenvectors

183:

77:

65:

40:

34:

2695:: 1537–87,

2590:Demmel, J.

2528:conductance

1779:memory and

764:eigenvector

605:eigenvector

583:The vector

493:defined as

464:eigenvector

74:Definitions

49:eigenvalues

3321:Categories

2822:2105.00578

2815:: 102769.

2793:1706.02803

2578:References

1290:Algorithms

787:eigenvalue

609:eigenvalue

487:eigenvalue

180:clustering

61:clustering

3308:0033-5533

3273:0033-5533

3116:207558562

3031:CiteSeerX

2839:233481603

2702:1001.1323

2456:multigrid

2337:≪

2031:≪

1491:is often

1211:−

888:−

812:−

739:−

731:−

714:−

651:−

627:−

552:−

528:−

520:−

335:∑

265:−

123:≥

51:) of the

3053:17848776

2997:"Kernel

2786:: 1–49.

2757:15376896

2719:88518155

2674:20667133

2551:See also

2442:Software

102:, where

45:spectrum

3061:9402790

2970:Bibcode

2765:2384316

2665:2923634

2648:: 403.

1914:, only

975:matrix

793:matrix

789:of the

489:of the

304:is the

59:before

3306:

3271:

3114:

3059:

3051:

3033:

2837:

2763:

2755:

2717:

2672:

2662:

2516:DBSCAN

2494:-means

2463:ARPACK

2452:LOBPCG

2450:using

2432:LOBPCG

2020:(with

1501:LOBPCG

1284:DBSCAN

284:where

3230:(PDF)

3112:S2CID

3081:(PDF)

3057:S2CID

3004:(PDF)

2958:(PDF)

2835:S2CID

2817:arXiv

2788:arXiv

2761:S2CID

2715:S2CID

2697:arXiv

2467:MLlib

2454:with

2326:with

1518:Costs

194:of a

188:below

3304:ISSN

3269:ISSN

3049:PMID

2753:PMID

2670:PMID

2436:GPUs

2306:-by-

2237:-by-

2066:-by-

2000:-by-

1865:-by-

1721:-by-

1669:-by-

1606:-by-

1267:>

955:-by-

681:The

509:norm

158:and

3296:doi

3292:127

3261:doi

3257:118

3173:doi

3104:doi

3041:doi

2978:doi

2891:doi

2827:doi

2813:106

2745:doi

2707:doi

2660:PMC

2650:doi

2461:or

404:or

198:of

35:In

3323::

3302:.

3290:.

3267:.

3255:.

3251:.

3232:.

3217:^

3169:23

3167:.

3163:.

3110:.

3100:51

3098:.

3055:.

3047:.

3039:.

3027:29

3025:.

3006:.

2976:.

2966:41

2964:.

2960:.

2833:.

2825:.

2811:.

2784:20

2782:.

2759:.

2751:.

2741:26

2739:.

2735:.

2713:,

2705:,

2691:,

2668:.

2658:.

2646:11

2644:.

2640:.

2600:^

2477:.

2465:,

2214:.

1484:.

1286:.

832:.

706::=

701:rw

514::=

384:.

376:.

259::=

70:.

39:,

3310:.

3298::

3275:.

3263::

3236:.

3211:.

3196:.

3181:.

3175::

3148:.

3133:.

3118:.

3106::

3063:.

3043::

2999:k

2984:.

2980::

2972::

2928:.

2897:.

2893::

2855:.

2841:.

2829::

2819::

2796:.

2790::

2767:.

2747::

2709::

2699::

2693:5

2676:.

2652::

2594:.

2500:k

2492:k

2475:R

2418:n

2398:)

2395:n

2392:(

2389:O

2369:)

2366:n

2363:(

2360:O

2340:n

2334:k

2314:k

2294:n

2274:)

2271:n

2268:(

2265:O

2245:n

2225:n

2198:1

2195:=

2192:k

2172:n

2169:=

2166:k

2146:)

2141:3

2137:n

2133:(

2130:O

2110:)

2105:2

2101:n

2097:(

2094:O

2074:n

2054:n

2034:n

2028:k

2008:k

1988:n

1960:)

1957:n

1954:(

1951:O

1931:)

1928:n

1925:(

1922:O

1902:)

1899:n

1896:(

1893:O

1873:n

1853:n

1819:2

1815:n

1792:2

1788:n

1765:2

1761:n

1729:n

1709:n

1677:1

1657:n

1637:n

1634:k

1614:k

1594:n

1574:k

1554:n

1530:n

1464:A

1452:)

1434:l

1414:l

1394:k

1383:)

1371:L

1351:k

1331:k

1308:L

1270:1

1264:k

1236:v

1207:B

1184:+

1180:B

1159:v

1139:,

1136:v

1112:v

1092:1

1089:=

1086:k

1063:k

1043:V

1023:k

1003:n

983:V

963:k

943:n

913:.

910:u

907:=

904:v

899:2

895:/

891:1

884:D

863:u

843:v

820:A

815:1

808:D

804:=

801:P

773:u

747:A

742:1

735:D

728:I

725:=

722:L

717:1

710:D

697:L

667:.

662:2

658:/

654:1

647:D

643:A

638:2

634:/

630:1

623:D

591:v

568:.

563:2

559:/

555:1

548:D

544:A

539:2

535:/

531:1

524:D

517:I

505:L

473:v

450:)

445:2

441:B

437:,

432:1

428:B

424:(

357:,

352:j

349:i

345:A

339:j

331:=

326:i

323:i

319:D

292:D

280:,

268:A

262:D

256:L

206:A

184:k

166:j

146:i

126:0

118:j

115:i

111:A

90:A

47:(

Text is available under the Creative Commons Attribution-ShareAlike License. Additional terms may apply.