Time Series MARS is the term used when MARS models are applied in a time series context. Typically in this setup the predictors are the lagged time series values, resulting in autoregressive spline models. These models, and extensions that include moving average spline models, are described in "Univariate Time Series Modelling and Forecasting using TSMARS: A study of threshold time series autoregressive, seasonal and moving average models using TSMARS".

The two basis functions in the pair are identical except that a different side of a mirrored hinge function is used for each function. Each new basis function consists of a term already in the model (which could perhaps be the intercept term) multiplied by a new hinge function. A hinge function is defined by a variable and a knot, so to add a new basis function, MARS must search over all combinations of the following:


(BMARS) uses the same model form, but builds the model using a Bayesian approach. It may arrive at different optimal MARS models because the model building approach is different. The result of BMARS is typically an ensemble of posterior samples of MARS models, which allows for probabilistic prediction.

A further constraint can be placed on the forward pass by specifying a maximum allowable degree of interaction. Typically only one or two degrees of interaction are allowed, but higher degrees can be used when the data warrants it. The maximum degree of interaction in the first MARS example above is

is the number of hinge-function knots, so the formula penalizes the addition of knots. Thus the GCV formula adjusts (i.e. increases) the training RSS to penalize more complex models. We penalize flexibility because models that are too flexible will model the specific realization of noise in the data

is used when the underlying form of the function is known and regression is used only to estimate the parameters of that function. MARS, on the other hand, estimates the functions themselves, albeit with severe constraints on the nature of the functions. (These constraints are necessary because

MARS models tend to have a good bias-variance trade-off. The models are flexible enough to model non-linearity and variable interactions (thus MARS models have fairly low bias), yet the constrained form of MARS basis functions prevents too much flexibility (thus MARS models have fairly low

the model. To build a model with better generalization ability, the backward pass prunes the model, deleting the least effective term at each step until it finds the best submodel. Model subsets are compared using the generalized cross-validation (GCV) criterion described below.

$$\begin{aligned}\mathrm{ozone} =&\ 5.2\\&{}+0.93\max(0,\mathrm{temp}-58)\\&{}-0.64\max(0,\mathrm{temp}-68)\\&{}-0.046\max(0,234-\mathrm{ibt})\\&{}-0.016\max(0,\mathrm{wind}-7)\max(0,200-\mathrm{vis})\end{aligned}$$

To obtain the above expression, the MARS model building procedure automatically selects which variables to use (some variables are important, others not), the positions of the kinks in the hinge functions, and how the hinge functions are combined.

Other constraints on the forward pass are possible. For example, the user can specify that interactions are allowed only for certain input variables. Such constraints could make sense because of knowledge of the process that generated the data.

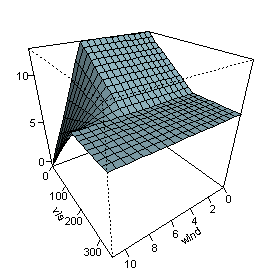

vary, with the other variables fixed at their median values. The figure shows that wind does not affect the ozone level unless visibility is low. We see that MARS can build quite flexible regression surfaces by combining hinge functions.
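The interaction between wind and visibility can be checked numerically. The sketch below evaluates only the last term of the ozone formula, −0.016 max(0, wind − 7) max(0, 200 − vis). The function name `wind_vis_term` and the sample inputs are illustrative assumptions and are not taken from any MARS package.

```python
def hinge(value: float) -> float:
    """Return the positive part of value, i.e. max(0, value)."""
    return max(0.0, value)


def wind_vis_term(wind: float, vis: float) -> float:
    """Last term of the ozone formula: an interaction of wind and visibility."""
    return -0.016 * hinge(wind - 7) * hinge(200 - vis)


# With high visibility (vis >= 200) the second hinge is zero,
# so changing wind does not change the term.
clear_day = [wind_vis_term(wind, vis=250) for wind in (5, 10, 15)]

# With low visibility the term becomes more negative as wind rises above 7.
hazy_day = [wind_vis_term(wind, vis=100) for wind in (5, 10, 15)]
```

Under these assumed inputs, every entry of `clear_day` is zero, while `hazy_day` decreases once wind exceeds the knot at 7.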

(meaning it includes important variables in the model and excludes unimportant ones). However, there can be some arbitrariness in the selection, especially when there are correlated predictors, and this can affect interpretability.

This process of adding terms continues until the change in residual error is too small to continue or until the maximum number of terms is reached. The maximum number of terms is specified by the user before model building starts.

rather than hinge functions, and they do not automatically model variable interactions. The smoother fit and lack of regression terms reduce variance when compared to MARS, but ignoring variable interactions can worsen the

A hinge function is zero for part of its range, so can be used to partition the data into disjoint regions, each of which can be treated independently. Thus for example a mirrored pair of hinge functions in the expression

No regression modeling technique is best for all situations. The guidelines below are intended to give an idea of the pros and cons of MARS, but there will be exceptions to the guidelines. It is useful to compare MARS to

This expression models air pollution (the ozone level) as a function of the temperature and a few other variables. Note that the last term in the formula (on the last line) incorporates an interaction between

The forward pass adds terms in pairs, but the backward pass typically discards one side of the pair and so terms are often not seen in pairs in the final model. A paired hinge can be seen in the equation for

fashion, but a key aspect of MARS is that because of the nature of hinge functions, the search can be done quickly using a fast least-squares update technique. Brute-force search can be sped up by using a

Building MARS models often requires little or no data preparation. The hinge functions automatically partition the input data, so the effect of outliers is contained. In this respect MARS is similar to

MARS tends to be better than recursive partitioning for numeric data because hinges are more appropriate for numeric variables than the piecewise constant segmentation used by recursive partitioning.

MARS automatically selects variables and values of those variables for knots of the hinge functions. Examples of such basis functions can be seen in the middle three lines of the ozone formula.

Unlike recursive partitioning, MARS produces a continuous fitted function, which can give a more realistic model in some situations. (However, the fitted function is not smooth: it is not differentiable at the knots.)


The backward pass has an advantage over the forward pass: at any step it can choose any term to delete, whereas the forward pass at each step can only see the next pair of terms.


(commonly called CART). MARS can be seen as a generalization of recursive partitioning that allows for continuous models, which can provide a better fit for numerical data.

3) a product of two or more hinge functions. These basis functions can model interaction between two or more variables. An example is the last line of the ozone formula.

The term "MARS" is trademarked and licensed to Salford Systems. In order to avoid trademark infringements, many open-source implementations of MARS are called "Earth".

One might assume that only piecewise linear functions can be formed from hinge functions, but hinge functions can be multiplied together to form non-linear functions.
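A small numerical check makes this concrete. The sketch below multiplies two hinge functions and evaluates the product along the diagonal x1 = x2 = t. The knot values and the helper name `product_of_hinges` are assumptions chosen for illustration.

```python
def hinge(value: float) -> float:
    """Return the positive part of value, i.e. max(0, value)."""
    return max(0.0, value)


def product_of_hinges(x1: float, x2: float, knot1: float, knot2: float) -> float:
    """Product of two hinge functions, one per variable."""
    return hinge(x1 - knot1) * hinge(x2 - knot2)


# Along the diagonal x1 = x2 = t, with both knots at 1,
# the product equals (t - 1) ** 2 for t > 1 and 0 otherwise.
values = [product_of_hinges(t, t, 1.0, 1.0) for t in (0.0, 1.0, 2.0, 3.0, 4.0)]

# A piecewise linear function sampled on an even grid has zero second
# differences away from its kinks; these stay non-zero past the knot.
second_differences = [
    values[i + 1] - 2 * values[i] + values[i - 1]
    for i in range(1, len(values) - 1)
]
```

The non-zero second differences beyond the knot show curvature, which a single hinge function cannot produce.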

MARS then repeatedly adds basis functions in pairs to the model. At each step it finds the pair of basis functions that gives the maximum reduction in sum-of-squares

(1993). "Estimating Functions of Mixed Ordinal and Categorical Variables Using Adaptive Splines". In Stephan Morgenthaler; Elvezio Ronchetti; Werner Stahel (eds.).


With MARS models, as with any non-parametric regression, parameter confidence intervals and other checks on the model cannot be calculated directly (unlike

(GLMs) can be incorporated into MARS models by applying a link function after the MARS model is built. Thus, for example, MARS models can incorporate

We thus turn to MARS to automatically build a model taking into account non-linearities. MARS software constructs a model from the given

MARS is suitable for handling large datasets, and implementations run very quickly. However, recursive partitioning can be faster than MARS.

MARS models are simple to understand and interpret. Compare the equation for ozone concentration above to, say, the innards of a trained

score in the special case where errors are Gaussian, or where the squared error loss function is used. GCV was introduced by Craven and

is a constant coefficient. For example, each line in the formula for ozone above is one basis function multiplied by its coefficient.


and these variables will be unclear and not easily visible by plotting. We can use MARS to discover that non-linear relationship.

The backward pass compares the performance of different models using Generalized Cross-Validation (GCV), a minor variant on the


MARS builds a model in two phases: the forward and the backward pass. This two-stage approach is the same as that used by

$$\begin{aligned}\widehat{y} =&\ 25\\&{}+6.1\max(0,x-13)\\&{}-3.1\max(0,13-x)\end{aligned}$$
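The simple MARS model above is a piecewise linear function with a kink at x = 13. The sketch below evaluates it directly and compares it with the two line segments obtained by expanding the hinge functions by hand. The function names are illustrative, and the segment coefficients are derived here rather than quoted from the article.

```python
def simple_mars(x: float) -> float:
    """Evaluate 25 + 6.1*max(0, x - 13) - 3.1*max(0, 13 - x)."""
    return 25 + 6.1 * max(0.0, x - 13) - 3.1 * max(0.0, 13 - x)


def piecewise_linear(x: float) -> float:
    """The same function written as two line segments meeting at x = 13."""
    if x >= 13:
        # 25 + 6.1 * (x - 13) = 6.1 * x - 54.3
        return 6.1 * x - 54.3
    # 25 - 3.1 * (13 - x) = 3.1 * x - 15.3
    return 3.1 * x - 15.3


# Largest disagreement between the two forms over a grid of inputs.
max_gap = max(abs(simple_mars(x) - piecewise_linear(x)) for x in range(0, 27))
```

Only one hinge of the mirrored pair is non-zero on either side of the knot, so the slope is 3.1 to the left of x = 13 and 6.1 to the right.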


matrix is just a single column. Given these measurements, we would like to build a model which predicts the expected

1) a constant 1. There is just one such term, the intercept. In the ozone formula above, the intercept term is 5.2.

MARS models can make predictions very quickly, as they only require evaluating a linear function of the predictors.

implementations do not allow missing values in predictors, but free implementations of regression trees (such as

One constraint has already been mentioned: the user can specify the maximum number of terms in the forward pass.


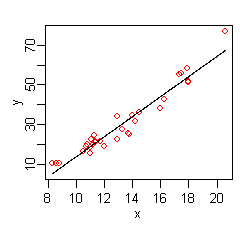

is estimated from the data. The figure on the right shows a plot of this function: a line giving the predicted

MARS starts with a model which consists of just the intercept term (which is the mean of the response values).

This section introduces MARS using a few examples. We start with a set of data: a matrix of input variables

and extended by Friedman for MARS; lower values of GCV indicate better models. The formula for the GCV is

(effective number of parameters) = (number of MARS terms) + (penalty) · ((number of MARS terms) − 1) / 2
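The GCV calculation can be sketched in a few lines. The code below assumes the usual form GCV = RSS / (N · (1 − (effective number of parameters) / N)²), where N is the number of observations, and uses a default penalty of 2. The function names and the guard against a non-positive denominator are assumptions made for this example.

```python
def effective_parameters(n_terms: int, penalty: float = 2.0) -> float:
    """(number of MARS terms) + penalty * ((number of MARS terms) - 1) / 2."""
    return n_terms + penalty * (n_terms - 1) / 2


def gcv(rss: float, n_obs: int, n_terms: int, penalty: float = 2.0) -> float:
    """Generalized cross-validation score; lower values indicate better models."""
    enp = effective_parameters(n_terms, penalty)
    if enp >= n_obs:
        # Assumed guard: the score is not meaningful once the effective
        # number of parameters reaches the number of observations.
        raise ValueError("effective number of parameters must be below n_obs")
    return rss / (n_obs * (1 - enp / n_obs) ** 2)


# Same training RSS, different model sizes: the larger model scores worse.
small_model = gcv(rss=100.0, n_obs=100, n_terms=5)
large_model = gcv(rss=100.0, n_obs=100, n_terms=9)
```

Because the denominator shrinks as terms are added, a larger model must reduce the training RSS enough to offset the penalty before it is preferred.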


may be non-linear (look at the red dots relative to the regression line at low and high values of


Earth – Multivariate adaptive regression splines in Orange (Python machine learning library)

in the first MARS example above; there are no complete pairs retained in the ozone example.

To calculate the coefficient of each term, MARS applies a linear regression over the terms.
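The coefficient step can be illustrated with ordinary least squares over a fixed set of basis functions. The sketch below solves the normal equations with a small Gaussian elimination routine in pure Python. This is a simple stand-in chosen for readability, not the fast update technique used by MARS implementations, and the data are generated from the simple example model so the recovered coefficients can be checked.

```python
def hinge(value):
    """Return the positive part of value, i.e. max(0, value)."""
    return max(0.0, value)


def solve_linear_system(matrix, rhs):
    """Solve matrix * x = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    aug = [row[:] + [rhs[i]] for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("singular system: basis functions are collinear")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for row in range(col + 1, n):
            factor = aug[row][col] / aug[col][col]
            for k in range(col, n + 1):
                aug[row][k] -= factor * aug[col][k]
    solution = [0.0] * n
    for row in range(n - 1, -1, -1):
        tail = sum(aug[row][k] * solution[k] for k in range(row + 1, n))
        solution[row] = (aug[row][n] - tail) / aug[row][row]
    return solution


def fit_coefficients(basis_functions, xs, ys):
    """Least-squares coefficients from the normal equations (B^T B) c = B^T y."""
    design = [[b(x) for b in basis_functions] for x in xs]
    k = len(basis_functions)
    gram = [[sum(r[i] * r[j] for r in design) for j in range(k)] for i in range(k)]
    moments = [sum(r[i] * y for r, y in zip(design, ys)) for i in range(k)]
    return solve_linear_system(gram, moments)


# Intercept plus a mirrored pair of hinge functions with a knot at 13.
basis = [lambda x: 1.0, lambda x: hinge(x - 13), lambda x: hinge(13 - x)]
xs = [float(v) for v in range(5, 22)]
ys = [25 + 6.1 * hinge(x - 13) - 3.1 * hinge(13 - x) for x in xs]  # noise-free
coefficients = fit_coefficients(basis, xs, ys)
```

With noise-free data the fit recovers the coefficients used to generate `ys` up to rounding error. With real data the same step returns the least-squares estimates for whichever terms are currently in the model.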


Several free and commercial software packages are available for fitting MARS-type models.

once again shown as red dots. The predicted response is now a better fit to the original

which also partitions the data into disjoint regions, although using a different method.

The figure on the right shows a mirrored pair of hinge functions with a knot at 3.1.


that reduces the number of parent terms considered at each step ("Fast MARS").


that automatically models nonlinearities and interactions between variables.

where RSS is the residual sum-of-squares measured on the training data and

Denison, D. G. T.; Holmes, C. C.; Mallick, B. K.; Smith, A. F. M. (2002).

3) all values of each variable (for the knot of the new hinge function).

and this is done below. (Recursive partitioning is also commonly called

notation used in this article, hinge functions are often represented by

Denison, D. G. T.; Mallick, B. K.; Smith, A. F. M. (1 December 1998).

linear graph shown for the simple MARS model in the previous section.

and related techniques must be used for validating the model instead.

The figure on the right shows a plot of this function: the predicted

, Stanford University Department of Statistics, Technical Report 110

Friedman, J. H. (1991). "Multivariate Adaptive Regression Splines".

) do allow missing values using a technique called surrogate splits.

Denison D.G.T., Holmes C.C., Mallick B.K., and Smith A.F.M. (2004)

(has a chapter on MARS and discusses some tweaks to the algorithm)

$$\widehat{f}(x)=\sum_{i=1}^{k}c_{i}B_{i}(x).$$
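The weighted-sum form maps naturally onto a small data structure. In the sketch below each basis function is a tuple of hinge factors: an empty tuple stands for the constant 1, a single factor is a hinge function, and several factors form a product. The class name `Hinge`, the `sign` field, and the dictionary-based input row are assumptions made for this example. The terms are those of the ozone formula.

```python
from dataclasses import dataclass
from math import prod


@dataclass(frozen=True)
class Hinge:
    variable: str
    knot: float
    sign: int  # +1 for max(0, x - knot), -1 for max(0, knot - x)

    def __call__(self, row: dict) -> float:
        return max(0.0, self.sign * (row[self.variable] - self.knot))


def basis_value(factors: tuple, row: dict) -> float:
    """Product of hinge factors; the empty product is the constant 1."""
    return prod(factor(row) for factor in factors)


def predict(terms: list, row: dict) -> float:
    """Weighted sum of basis functions: sum of c_i * B_i(x)."""
    return sum(coef * basis_value(factors, row) for coef, factors in terms)


# The ozone model as (coefficient, factors) pairs.
ozone_terms = [
    (5.2, ()),
    (0.93, (Hinge("temp", 58, +1),)),
    (-0.64, (Hinge("temp", 68, +1),)),
    (-0.046, (Hinge("ibt", 234, -1),)),
    (-0.016, (Hinge("wind", 7, +1), Hinge("vis", 200, -1))),
]

prediction = predict(ozone_terms, {"temp": 70, "ibt": 234, "wind": 10, "vis": 100})
```

Storing each term as a coefficient plus its factors means the degree of interaction of a term is just the number of factors, which makes a maximum-degree constraint easy to check.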

In this simple example, we can easily see from the plot that

Bayesian Methods for Nonlinear Classification and Regression

2) all variables (to select one for the new basis function)

to take into account non-linearity. The kink is produced by

New Directions in Statistical Data Analysis and Robustness

(and might perhaps guess that y varies with the square of

from Salford Systems. Based on Friedman's implementation.

is the number of observations (the number of rows in the

The hinge functions are the expressions starting with

MARS has automatically produced a kink in the predicted

ARESLab: Adaptive Regression Splines toolbox for Matlab

A mirrored pair of hinge functions with a knot at x=3.1

Hinge functions are described in more detail below.

instead of just the systematic structure of the data.

) but can be increased by the user if they so desire.

An example MARS expression with multiple variables is


Hastie T., Tibshirani R., and Friedman J.H. (2009)

Statistical learning from a regression perspective

MARS (like recursive partitioning) does automatic


is typically 2 (giving results equivalent to the

The effective number of parameters is defined as

Earth – Multivariate adaptive regression splines


$$6.1\max(0,x-13)-3.1\max(0,13-x)$$

The model is a weighted sum of basis functions

However, in general there will be multiple

The search at each step is usually done in a

technique and can be seen as an extension of

The figure on the right plots the predicted

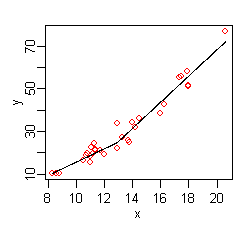

$\max(0,\text{constant}-x)$

$\max(0,x-\text{constant})$

· (1 − (effective number of parameters) /

Heping Zhang and Burton H. Singer (2010)

function. A hinge function has the form

indicates that the relationship between

, and a vector of the observed responses

discovering a model from the data is an

takes one of the following three forms:

multivariate adaptive regression splines

Multivariate adaptive regression splines

Recursive Partitioning and Applications

$\widehat{y}=-37+5.1x$

The Elements of Statistical Learning

MARS can handle both continuous and

); in the ozone example it is two.

Variable interaction in a MARS model

A simple MARS model of the same data

from the original on April 11, 2022

. New York, NY: Springer New York.

MARS models are more flexible than

has a non-linear relationship with

Non-parametric regression technique

(has an example using MARS with R)

Friedman, Jerome H. (1991-06-01).

Kuhn, Max; Johnson, Kjell (2013).

without constraints on the model.)


For example, the data could be:

, with a response for each row in

Extending the Linear Model with R

one (i.e. no interactions or an

Hinge functions are also called

$\mathrm{ozone}$

Unlike MARS, GAMs fit smooth

Extensions and related concepts

(number of MARS terms − 1) / 2

MARS builds models of the form

$\mathrm{wind}$

$\mathrm{wind}$

, and the relationship between

. Chichester, England: Wiley.

package. Not Friedman's MARS.

leave-one-out cross-validation

$\widehat{y}$

means take the positive part.


A key part of MARS models are

$\mathrm{vis}$

$\mathrm{vis}$


, with the original values of

$\widehat{y}$

, with the original values of

$\widehat{y}$

$\widehat{y}$

$\widehat{y}$

Cross-validation (statistics)

Akaike information criterion

Akaike information criterion

Akaike information criterion

Generalized cross validation

The data at the extremes of

Applied Predictive Modeling

Generalized additive models

$\max(0,c-x)$

$\max(0,x-c)$

package for Bayesian MARS.

Rational function modeling

1) existing terms (called

The model building process

functions. Instead of the

is a constant, called the

, 2nd edition. Springer,

, 2nd edition. Springer,

10.1007/978-1-4614-6849-3

to predict probabilities.

Generalized linear models

The forward pass usually

$\max(a,b)$

non-parametric regression

Nonparametric regression

Statistics and Computing

The Annals of Statistics

$B_{i}(x)$

$B_{i}(x)$

(has a section on MARS)

10.1023/A:1008824606259

Here there is only one

10.1214/aos/1176347963

Recursive partitioning

recursive partitioning

article for details).

recursive partitioning

recursive partitioning

that approximates the

recursive partitioning


$a>b$

for the above data is

STATISTICA Data Miner

Non-linear regression

Further information:

$\max$

Further information:

$c_{i}$

independent variables

$\max$

Spline interpolation

Segmented regression

$_{+}$

$_{+}$


independent variable

Commercial software

Friedman, Jerome H.

logistic regression

Stepwise regression

shown as red dots.

regression analysis

for Bayesian MARS.

for Bayesian MARS.

Faraway J. (2005)

CRAN Package earth

variable selection


Jerome H. Friedman

978-0-471-49036-4

978-0-387-77500-5

Berk R.A. (2008)

978-0-471-49036-4

978-1-4419-6823-4

978-1-58488-424-8

978-0-387-84857-0

Spline regression

Linear regression

linear regression

linear regression

The backward pass

in this context)

$c$

$b$

$a$

in 1991. It is a

Machine learning

function in the

function in the

function in the

function in the


Local regression

Cross-validation

categorical data

regression trees


greedy algorithm

The forward pass


taking the form


Further reading

"Bayesian MARS"

Friedman, J. H.

inverse problem

Model selection

error (it is a

hinge functions

Hinge functions


hinge functions

indicates that

The hat on the

) is a form of


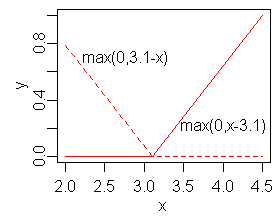

from the book

External links

(4): 337–346.

10.1.1.382.970

or polynomial

neural network

decision trees

additive model


Hinge function

basis function

The MARS model


A linear model


introduced by

from StatSoft

Matlab code:

Free software


. Birkhauser.

9781461468486


Bayesian MARS


random forest

Pros and cons

1968:GCV = RSS / (

1967:

1966:

1965:

1963:

1959:

1955:

1949:

1945:

1941:

1931:

1914:

1911:

1898:

1895:

1892:

1882:

1880:

1875:

1870:

1866:

1863:

1860:

1857:

1855:

1850:

1848:

1844:

1839:

1831:

1829:

1823:

1813:

1797:

1789:

1764:

1753:

1750:

1745:

1741:

1734:

1703:

1699:

1695:

1690:

1687:

1685:

1663:

1660:

1657:

1654:

1651:

1642:

1639:

1633:

1630:

1627:

1624:

1621:

1612:

1605:

1604:

1603:

1599:

1597:

1581:

1555:

1552:

1549:

1546:

1543:

1530:

1529:

1528:

1508:

1505:

1502:

1499:

1496:

1483:

1482:

1481:

1479:

1473:

1464:

1455:

1452:

1435:

1432:

1424:

1421:

1387:

1384:

1381:

1378:

1364:

1359:

1356:

1339:

1331:

1327:

1319:

1314:

1298:

1294:

1270:

1262:

1258:

1234:

1228:

1220:

1216:

1210:

1206:

1200:

1195:

1192:

1189:

1185:

1181:

1175:

1166:

1163:

1153:

1152:

1151:

1143:

1139:

1042:

975:

939:

936:

933:

930:

918:

915:

898:

895:

886:

883:

879:

857:

854:

851:

848:

839:

836:

832:

821:

818:

801:

798:

789:

786:

782:

771:

768:

751:

748:

739:

736:

732:

724:

716:

688:

687:

686:

683:

681:

677:

673:

669:

665:

660:

646:

626:

623:

620:

600:

577:

574:

571:

537:

533:

528:

526:

522:

518:

499:

496:

480:

455:

452:

449:

446:

443:

434:

431:

427:

416:

413:

410:

407:

404:

395:

392:

388:

380:

372:

366:

363:

349:

348:

347:

345:

341:

337:

333:

329:

325:

320:

318:

314:

295:

292:

266:

263:

237:

234:

208:

205:

202:

199:

196:

193:

187:

184:

174:

173:

172:

170:

161:

157:

155:

151:

147:

143:

133:

130:

129:

125:

122:

121:

117:

114:

113:

109:

106:

105:

101:

98:

97:

94:

91:

89:

86:

85:

82:

80:

76:

72:

62:

59:

57:

56:linear models

53:

49:

45:

41:

37:

33:

19:

2875:

2869:

2809:

2729:

2714:

2708:October 2016

2705:

2690:by removing

2677:

2647:, Springer,

2644:

2629:

2612:

2595:

2578:

2535:

2531:

2518:

2507:. Retrieved

2498:

2488:

2479:

2470:

2443:

2424:

2416:

2405:

2394:

2343:

2339:

2333:

2246:that is not

2119:

2115:

2108:

2093:

2087:October 2016

2084:

2061:

2037:

2032:

2029:

2026:

2017:

2009:

2000:

1998:

1990:

1985:

1981:

1979:

1973:

1969:

1951:

1899:

1896:

1888:

1871:

1867:

1864:

1861:

1858:

1854:parent terms

1853:

1851:

1840:

1837:

1825:

1698:hockey stick

1691:

1688:

1682:creates the

1681:

1600:

1595:

1573:

1526:

1477:

1475:

1453:

1362:

1360:

1357:

1315:

1249:

1149:

1140:

1043:

980:

684:

679:

671:

667:

663:

661:

535:

531:

529:

524:

520:

516:

485:

343:

339:

335:

331:

327:

323:

321:

316:

312:

223:

169:linear model

166:

153:

152:for a given

149:

145:

139:

92:

87:

78:

74:

70:

68:

60:

39:

35:

29:

2860:ADAPTIVEREG

2346:(1): 1–67.

2023:Constraints

2010:Note that

1874:brute-force

346:as follows

2899:Categories

2744:packages:

2509:2022-04-11

2386:0765.62064

2325:References

2248:well-posed

2175:variance).

2126:; see the

2071:improve it

1820:See also:

65:The basics

32:statistics

2862:from SAS.

2777:polspline

2692:excessive

2633:, Wiley,

2552:1573-1375

2425:Fast MARS

2348:CiteSeerX

2202:polspline

2185:models).

2075:verifying

1988:matrix).

1915:^

1879:heuristic

1790:⋅

1751:−

1735:±

1702:rectifier

1684:piecewise

1661:−

1640:−

1631:−

1553:−

1506:−

1433:−

1388:−

1186:∑

1167:^

940:−

916:−

884:−

858:−

837:−

819:−

787:−

769:−

500:^

453:−

432:−

414:−

367:^

296:^

267:^

238:^

197:−

188:^

144:, so the

2826:py-earth

2772:polymars

2560:12570055

2503:Archived

2288:See also

1891:overfits

1843:residual

1429:constant

1392:constant

527:values.

2817:Python

2768:package

2756:package

2686:Please

2678:use of

2599:, CRC,

2423:(1993)

2378:1091842

2370:2241837

2268:splines

2138:models.

2069:Please

2001:penalty

1830:trees.

1286:. Each

639:, else

558:(where

515:versus

311:versus

2882:

2831:pyBASS

2651:

2637:

2620:

2603:

2586:

2558:

2550:

2458:

2384:

2376:

2368:

2350:

2275:TSMARS

2200:, and

1999:where

1946:, and

1779:where

1574:where

722:

378:

2753:earth

2748:earth

2556:S2CID

2528:(PDF)

2366:JSTOR

2271:bias.

2264:loess

2210:party

2206:rpart

2194:earth

2145:or a

2122:, or

1962:Wahba

1700:, or

1363:hinge

1361:2) a

1316:Each

887:0.016

840:0.046

134:77.0

118:19.7

110:18.8

102:16.4

2880:ISBN

2848:MARS

2806:Code

2789:BASS

2784:bass

2760:mars

2649:ISBN

2635:ISBN

2618:ISBN

2601:ISBN

2584:ISBN

2548:ISSN

2499:DTIC

2456:ISBN

2208:and

2192:The

2124:CART

1694:ramp

1596:knot

1527:or

1408:or

1109:and

1013:and

790:0.64

740:0.93

624:>

342:and

330:and

131:20.6

126:...

115:10.8

107:10.7

99:10.5

40:MARS

2765:mda

2694:or

2540:doi

2448:doi

2382:Zbl

2358:doi

2198:mda

2073:by

1712:max

1646:max

1643:3.1

1616:max

1613:6.1

1538:max

1491:max

1416:max

1373:max

1078:as

937:200

925:max

890:max

855:234

843:max

793:max

743:max

725:5.2

613:if

593:is

566:max

546:max

438:max

435:3.1

399:max

396:6.1

206:5.1

123:...

30:In

2901::

2554:.

2546:.

2534:.

2530:.

2501:.

2497:.

2454:.

2432:^

2380:.

2374:MR

2372:.

2364:.

2356:.

2344:19

2342:.

2196:,

2118:,

1976:))

1942:,

1696:,

1658:13

1634:13

1041:.

822:68

772:58

450:13

417:13

381:25

200:37

167:A

156:.

34:,

2888:.

2742:R

2721:)

2715:(

2710:)

2706:(

2702:.

2684:.

2562:.

2542::

2536:8

2512:.

2464:.

2450::

2388:.

2360::

2149:.

2100:)

2094:(

2089:)

2085:(

2067:.

1986:x

1982:N

1974:N

1970:N

1912:y

1798:+

1794:]

1787:[

1765:+

1761:]

1757:)

1754:c

1746:i

1742:x

1738:(

1732:[

1667:)

1664:x

1655:,

1652:0

1649:(

1637:)

1628:x

1625:,

1622:0

1619:(

1582:c

1559:)

1556:x

1550:c

1547:,

1544:0

1541:(

1512:)

1509:c

1503:x

1500:,

1497:0

1494:(

1439:)

1436:x

1425:,

1422:0

1419:(

1396:)

1385:x

1382:,

1379:0

1376:(

1343:)

1340:x

1337:(

1332:i

1328:B

1299:i

1295:c

1274:)

1271:x

1268:(

1263:i

1259:B

1235:.

1232:)

1229:x

1226:(

1221:i

1217:B

1211:i

1207:c

1201:k

1196:1

1193:=

1190:i

1182:=

1179:)

1176:x

1173:(

1164:f

1124:s

1121:i

1118:v

1096:d

1093:n

1090:i

1087:w

1065:e

1062:n

1059:o

1056:z

1053:o

1028:s

1025:i

1022:v

1000:d

997:n

994:i

991:w

954:)

950:s

947:i

944:v

934:,

931:0

928:(

922:)

919:7

912:d

909:n

906:i

903:w

899:,

896:0

893:(

872:)

868:t

865:b

862:i

852:,

849:0

846:(

825:)

815:p

812:m

809:e

806:t

802:,

799:0

796:(

775:)

765:p

762:m

759:e

756:t

752:,

749:0

746:(

737:+

717:=

713:e

710:n

707:o

704:z

701:o

680:y

672:x

668:x

664:y

647:b

627:b

621:a

601:a

581:)

578:b

575:,

572:a

569:(

532:y

525:y

521:y

517:x

497:y

459:)

456:x

447:,

444:0

441:(

420:)

411:x

408:,

405:0

402:(

393:+

373:=

364:y

344:y

340:x

336:x

332:x

328:y

324:x

317:y

313:x

293:y

264:y

235:y

209:x

203:+

194:=

185:y

154:x

150:y

146:x

93:y

88:x

79:x

75:y

71:x

38:(

20:)

Text is available under the Creative Commons Attribution-ShareAlike License. Additional terms may apply.